PhD student Jason Yosinski's joint work on how deep-learning algorithms can be easily tricked has attracted more press attention, scoring writeups in Wired and MIT Technology Review:

"A technique called deep learning has enabled Google and other companies to make breakthroughs in getting computers to understand the content of photos. Now researchers at Cornell University and the University of Wyoming have shown how to make images that fool such software into seeing things that aren’t there."

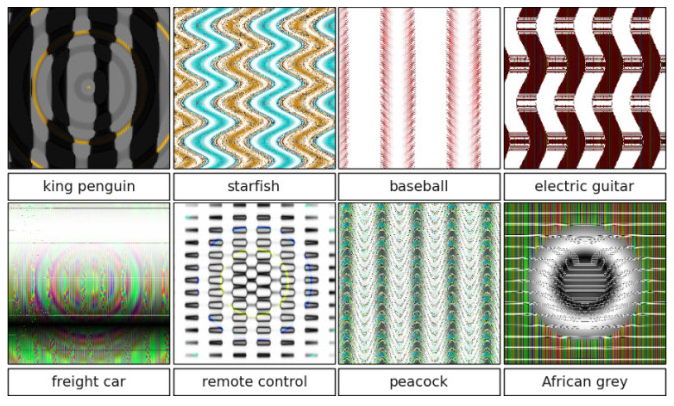

"...the group generated random imagery using evolutionary algorithms.

"Essentially, they bred highly-effective visual bait. A program would produce an image, and then mutate it slightly. Both the copy and the original were shown to an “off the shelf” neural network trained on ImageNet, a data set of 1.3 million images, which has become a go-to resource for training computer vision AI. If the copy was recognized as something — anything — in the algorithm’s repertoire with more certainty [than] the original, the researchers would keep it, and repeat the process.... Eventually, this technique produced dozens [of] images that were recognized by the neural network with over 99 percent confidence. To you, they won’t seem like much. A series of wavy blue and orange lines. A mandala of ovals. Those alternating stripes of yellow and black. But to the AI, they were obvious matches: Starfish. Remote control. School bus."
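For readers curious how the loop described above might look in code, here is a minimal sketch. It is not the researchers' code, and the published work may have used a more elaborate evolutionary setup. Several pieces are hypothetical stand-ins: `make_toy_classifier` is a fixed random linear model with a softmax in place of an ImageNet-trained network, the "image" is 64 grayscale pixels in [0, 1], and the mutation `rate` and `scale` are arbitrary choices. The core rule follows the quote: mutate a copy, and keep it only if the classifier is more confident in *some* class than it was for the original.

```python
import math
import random

NUM_CLASSES = 3   # hypothetical: stands in for ImageNet's many classes
NUM_PIXELS = 64   # hypothetical: an 8x8 grayscale "image", values in [0, 1]


def make_toy_classifier(seed=0):
    """Return a hypothetical stand-in for a trained network: a fixed random
    linear model followed by softmax. It is NOT an ImageNet-trained net."""
    rng = random.Random(seed)
    weights = [[rng.gauss(0.0, 1.0) for _ in range(NUM_PIXELS)]
               for _ in range(NUM_CLASSES)]

    def classify(image):
        logits = [sum(w * p for w, p in zip(row, image)) for row in weights]
        top = max(logits)
        exps = [math.exp(z - top) for z in logits]  # subtract max for numerical stability
        total = sum(exps)
        probs = [e / total for e in exps]
        best = max(range(NUM_CLASSES), key=probs.__getitem__)
        return best, probs[best]  # most likely class and its confidence

    return classify


def mutate(image, rng, rate=0.1, scale=0.2):
    """Copy the image and nudge a random subset of pixels, clipped to [0, 1]."""
    child = list(image)
    for i in range(len(child)):
        if rng.random() < rate:
            child[i] = min(1.0, max(0.0, child[i] + rng.gauss(0.0, scale)))
    return child


def evolve_fooling_image(classify, target=0.99, max_steps=20000, seed=1):
    """Keep a mutant only if the classifier is more confident in some class
    than it was for the parent; stop once confidence reaches `target`."""
    rng = random.Random(seed)
    image = [rng.random() for _ in range(NUM_PIXELS)]  # start from random noise
    label, conf = classify(image)
    history = [conf]  # confidence after each accepted mutation
    for _ in range(max_steps):
        if conf >= target:
            break
        child = mutate(image, rng)
        child_label, child_conf = classify(child)
        if child_conf > conf:  # more certain than the original: keep the copy
            image, label, conf = child, child_label, child_conf
            history.append(conf)
    return image, label, conf, history


if __name__ == "__main__":
    classify = make_toy_classifier()
    image, label, conf, history = evolve_fooling_image(classify)
    print(f"class {label}, confidence {conf:.4f}, "
          f"{len(history) - 1} accepted mutations")
```

Because a mutant is kept only when it raises the classifier's confidence, the recorded confidence can never go down, and nothing in the loop asks whether the image looks like anything to a human. That blind spot is the one the quoted images exploit.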