AniGraph

OpenGL (and rasterization-based graphics in general) is weird. It's a huge, complicated, messy system designed to do very specific things incredibly fast. It is not designed to make doing those things easy, which is why engines like Unity and Unreal are incredibly successful: they hide away a lot of that messiness during development. This assignment will be a chance to peek behind that curtain. AniGraph is a framework I created to facilitate that peek in the setting of a large intro course like CS4620. It will make your life easier, but not as easy as a complete game engine. You are still going to have to face plenty of messiness. The hope is that you will come out of that experience with a better understanding of how things work, which also tends to make people better users of high-level tools and engines in the end.

AniGraph is built on top of ThreeJS and WebGL. You are free to extend AniGraph by writing your own ThreeJS code if you like, but we ask that you only use standard functionality, and not copy example code you found online. Outside of this class, it is common for people to copy-paste code from ThreeJS's extensive library of examples without understanding how it works, but this can make extending functionality quite hard. Don't do this. Also, we ask you not to use ThreeJS implementations of things that we consider features (for example, ThreeJS has a standard toon shader material). To be safe, list any ThreeJS functionality you use that isn't already part of AniGraph in your writeup.

Application Structure

The main top-level entry points for writing an AniGraph app will be the scene model (subclass of ASceneModel) and scene controller (subclass of ASceneController). To facilitate this customization we've provided some base example subclasses for you to start with:

- ExampleSceneModel: This is where you will add new nodes to your scene model and update application logic involving multiple nodes.

- ExampleSceneController: The scene controller mainly handles two tasks. The first is to create new views when nodes are added to the model hierarchy. The second is to manage any interactions or communication between the scene model and the rest of your device (e.g., managing interaction modes and render targets).

You can think of the scene model as the root of our scene graph. The models and views that correspond to an individual node of our scene graph make up a "scene node". Most of the work in writing an AniGraph application involves:

- Writing custom nodes by writing descendant classes of ANodeModel and ANodeView.

- Writing custom materials by implementing vertex and fragment (pixel) shaders, and shader models.

- Writing logic in the scene model class that defines behavior involving multiple nodes (e.g., one node kicks another node in the face).

- Writing custom interaction modes, which specify how users interact with the application.

MainSceneModel

For the most part, any logic that is not specific to a particular node class will go in the MainSceneModel class, in particular in initScene() and timeUpdate(t?:number, ...args:any[]). In the starter code you will find:

- async PreloadAssets(): You can optionally specify assets like shaders or textures to preload.

- initCamera(): Set up the camera projection and initial pose.

- initViewLight(): Places a point light. In the example code this point light is added as a child of the camera, which means there will be a light source located at the camera that moves with the camera. Warning: you can turn the intensity of this light to 0 if you don't want it to light your scene, but you may not want to remove it completely, as this could complicate the binding of some shaders.

- initScene(): This function will set up a new scene. It is a required function. Check out the related helper functions in ExampleSceneModel.ts, which are used in the starter scenes:

  - initTerrain(): Initializes the terrain model.

  - initCharacters(): Initializes the player and bot models.

- getCoordinatesForCursorEvent(event: AInteractionEvent): Optionally define a mapping from event coordinates based on the scene model. You may not need this, but it is offered in case anyone needs to re-map user input from different devices or has issues on different machines that we couldn't anticipate.

- timeUpdate(t?: number, ...args:any[]): This will be your main entry point for the logic that runs each time a frame is rendered. t can be provided by the controller, or you can use the model's clock, sceneModel.clock.time. Sometimes you want separate clocks so that you can decouple the speed of your application logic from user interaction logic.
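To make the optional t argument concrete, here is a minimal sketch of the pattern described for timeUpdate: use the time passed in by the caller if there is one, and fall back to the model's own clock otherwise. ClockStandIn, SceneModelStandIn, advance, and spinAngle are hypothetical names invented for this illustration. They are not AniGraph classes or members, and the real ASceneModel does considerably more.

```typescript
// Stand-in classes for illustration only; these are NOT AniGraph's own types.
class ClockStandIn {
  time: number = 0; // seconds

  // Advance this clock by dt seconds.
  advance(dt: number): void {
    this.time += dt;
  }
}

class SceneModelStandIn {
  clock: ClockStandIn = new ClockStandIn();
  spinAngle: number = 0; // radians

  // Use the caller's time if one is provided, otherwise the model's own clock.
  timeUpdate(t?: number): void {
    const now = t ?? this.clock.time;
    this.spinAngle = now * Math.PI; // half a turn per second
  }
}

const sceneModel = new SceneModelStandIn();

// Case 1: the caller (e.g. a controller) supplies the time.
sceneModel.timeUpdate(2);
const angleFromCallerTime = sceneModel.spinAngle;

// Case 2: no time is supplied, so the model's clock drives the update.
sceneModel.clock.advance(0.5);
sceneModel.timeUpdate();
const angleFromModelClock = sceneModel.spinAngle;

console.log(angleFromCallerTime, angleFromModelClock);
```

Because the model's clock advances independently of whatever time the caller passes in, the two can run at different speeds, which is the decoupling mentioned above.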

MainSceneController

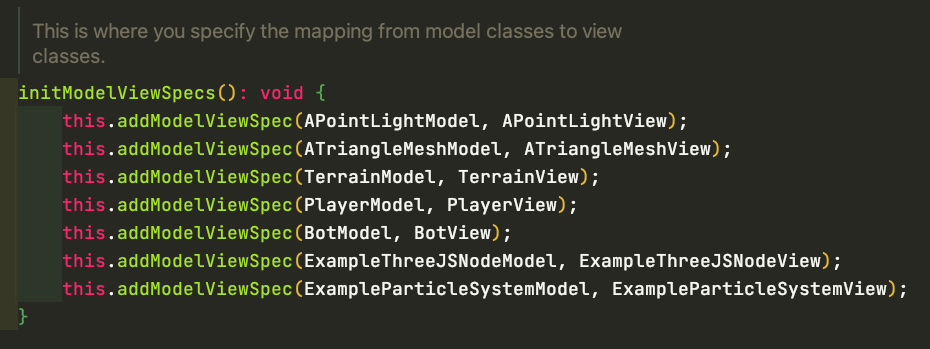

- initModelViewSpecs(): This is the function where you specify what view classes should be created for each model node class in your application.

- initScene(): You can use this to set the background appearance of your scene. The example code uses a cube map of space.

- initInteractions(): This is where you declare interaction modes, which you can switch between from the control panel or programmatically using this.setCurrentInteractionMode(...).

- onAnimationFrameCallback(context:AGLContext): This function updates the model and renders the view.

Setting up the MVC Spec

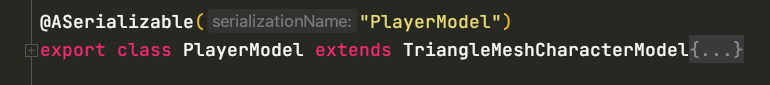

This works just like it did in C1. There are two things you need to do to create a new mapping from a descendant class of ANodeModel3D to one of ANodeView. First, when you define the custom model class, make sure to decorate it with @ASerializable. Here is an example of what that looks like from the definition of APlayerModel:

Second, you will need to add the mapping to the scene controller. This is done in the scene controller method initModelViewSpecs(), which might look like this:

From here, the associated view class should be created any time a corresponding model is added to the scene.
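As a rough mental model of what the model-to-view mapping does, the sketch below keeps a lookup table from model classes to view classes and consults it whenever a node is added. Everything here is a stand-in written for this illustration: NodeModelStandIn, NodeViewStandIn, SceneControllerStandIn, registerSpec, and onNodeAdded are hypothetical names, not AniGraph's API, and the sketch leaves out the @ASerializable decorator entirely. Check the starter code for the real calls.

```typescript
// Stand-in classes for illustration only; these are NOT AniGraph's own types.
class NodeModelStandIn {
  name: string;
  constructor(name: string) {
    this.name = name;
  }
}

class NodeViewStandIn {
  model: NodeModelStandIn;
  constructor(model: NodeModelStandIn) {
    this.model = model;
  }
}

class PlayerModelStandIn extends NodeModelStandIn {}
class PlayerViewStandIn extends NodeViewStandIn {}

type ViewClass = new (model: NodeModelStandIn) => NodeViewStandIn;

class SceneControllerStandIn {
  // Lookup table: model class -> view class to create for it.
  specs: Map<Function, ViewClass> = new Map<Function, ViewClass>();
  views: NodeViewStandIn[] = [];

  // Hypothetical registration call, made once per model/view pair.
  registerSpec(modelClass: Function, viewClass: ViewClass): void {
    this.specs.set(modelClass, viewClass);
  }

  // Called when a model joins the scene: create the view its class maps to.
  onNodeAdded(model: NodeModelStandIn): NodeViewStandIn {
    const viewClass = this.specs.get(model.constructor);
    if (viewClass === undefined) {
      throw new Error("No view class registered for " + model.constructor.name);
    }
    const view = new viewClass(model);
    this.views.push(view);
    return view;
  }
}

const controller = new SceneControllerStandIn();
controller.registerSpec(PlayerModelStandIn, PlayerViewStandIn);

const player = new PlayerModelStandIn("player");
const playerView = controller.onNodeAdded(player);
console.log(playerView instanceof PlayerViewStandIn);
```

Note that this sketch looks up the exact class of the model, so a model class with no registered view fails loudly instead of silently rendering nothing. Whether AniGraph behaves the same way is not something the sketch can tell you.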

You can look at the example node classes (each is a pair consisting of one custom ANodeModel3D subclass and one ANodeView subclass) to see how they are created and customized.

Shaders and Materials

Adding a 3rd Dimension

You used modified 2D versions of AniGraph already in assignments 0-2. For the final project, we'll be taking advantage of a lot more functionality. In previous AniGraph assignments, transformations were represented with 3x3 matrices. In 3D, that's not gonna cut it...

For the full 3D version of AniGraph, we have two ways to represent transformations. The first is using a general 4x4 matrix, represented with the class Mat4. The second option is to use a NodeTransform3D instance, which has:

- transform.position:Vec3: Represents the point that the transform maps the origin to.

- transform.rotation:Quaternion: Represents a 3D rotation.

- transform.scale:Vec3: Represents the scale factor of the matrix in x, y, and z.

The corresponding matrix for a node transform is given by M, defined as:

let P = Mat4.Translation3D(transform.position);

let R = transform.rotation.Mat4();

let S = Mat4.Scale3D(transform.scale);

let M = P.times(R).times(S);

There is additionally a transform.anchor:Vec3 property that can be used to shift the origin of the transform's input coordinate system. However, I would caution against modifying the anchor value in transformations unless you feel very confident in what you are doing, as it can complicate other common operations. The anchor property modifies M by right-multiplying it with a translation such that:

let A = Mat4.Translation3D(transform.anchor.times(-1));

M = M.times(A);
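To see what the order P, R, S, A means for actual points, here is a small self-contained sketch that builds the same product with plain arrays instead of AniGraph's Mat4. The helper functions (multiply, translation, scaling, rotationZ, transformPoint) are written just for this example and are not part of AniGraph. The rotation is restricted to the z axis to keep the code short, and matrices are assumed to act on column vectors, so the rightmost factor is applied first.

```typescript
type Vec3Tuple = [number, number, number];
type Mat4Rows = number[][]; // 4 rows of 4 numbers, row-major

// Standard 4x4 matrix product a * b.
function multiply(a: Mat4Rows, b: Mat4Rows): Mat4Rows {
  const out: Mat4Rows = [];
  for (let i = 0; i < 4; i++) {
    const row: number[] = [];
    for (let j = 0; j < 4; j++) {
      let sum = 0;
      for (let k = 0; k < 4; k++) sum += a[i][k] * b[k][j];
      row.push(sum);
    }
    out.push(row);
  }
  return out;
}

function translation(v: Vec3Tuple): Mat4Rows {
  return [[1, 0, 0, v[0]], [0, 1, 0, v[1]], [0, 0, 1, v[2]], [0, 0, 0, 1]];
}

function scaling(v: Vec3Tuple): Mat4Rows {
  return [[v[0], 0, 0, 0], [0, v[1], 0, 0], [0, 0, v[2], 0], [0, 0, 0, 1]];
}

// Rotation by theta radians about the z axis.
function rotationZ(theta: number): Mat4Rows {
  const c = Math.cos(theta);
  const s = Math.sin(theta);
  return [[c, -s, 0, 0], [s, c, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]];
}

// Apply m to the point p (homogeneous coordinate w = 1).
function transformPoint(m: Mat4Rows, p: Vec3Tuple): Vec3Tuple {
  const r = (i: number) => m[i][0] * p[0] + m[i][1] * p[1] + m[i][2] * p[2] + m[i][3];
  return [r(0), r(1), r(2)];
}

const position: Vec3Tuple = [5, 0, 0];
const anchor: Vec3Tuple = [1, 0, 0];

const P = translation(position);
const R = rotationZ(Math.PI / 2); // 90 degrees about z
const S = scaling([2, 2, 2]);
const A = translation([-anchor[0], -anchor[1], -anchor[2]]);

// M = P * R * S * A: A acts on the input point first, then S, then R, then P.
const M = multiply(multiply(multiply(P, R), S), A);

const anchorImage = transformPoint(M, anchor);    // approximately (5, 0, 0)
const originImage = transformPoint(M, [0, 0, 0]); // approximately (5, -2, 0)
const otherImage = transformPoint(M, [2, 0, 0]);  // approximately (5, 2, 0)
console.log(anchorImage, originImage, otherImage);
```

With a nonzero anchor, it is the anchor point, not the origin, that lands on transform.position. This is one example of how the anchor can complicate otherwise simple reasoning about a transform.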

You can convert between matrices and node transforms with:

let m:Mat4;

let nt:NodeTransform3D;

let nodeTransformFromMatrix = NodeTransform3D.FromMatrix(m);

let matFromNodeTransform = nt.getMat4();

Both Mat4 and NodeTransform3D implement the Transformation3DInterface interface, which can be useful if you want to type a function so that it accepts either. Just keep in mind that there are 4x4 matrices that you can't represent as NodeTransform3D objects, and there is no way to distinguish an anchor transform from a translation in a Mat4, so be aware of scenarios where information can change or be lost by converting between these representations.
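The anchor ambiguity can be checked numerically. The sketch below again uses plain arrays and helpers written only for this example (multiply, translation, uniformScaling, sameMatrix), not AniGraph's Mat4, and it uses an identity rotation to keep things short. It builds one matrix from a position plus an anchor, and another from a different position with no anchor, and finds that they are identical.

```typescript
type Mat4Rows = number[][]; // 4 rows of 4 numbers, row-major

// Standard 4x4 matrix product a * b.
function multiply(a: Mat4Rows, b: Mat4Rows): Mat4Rows {
  const out: Mat4Rows = [];
  for (let i = 0; i < 4; i++) {
    const row: number[] = [];
    for (let j = 0; j < 4; j++) {
      let sum = 0;
      for (let k = 0; k < 4; k++) sum += a[i][k] * b[k][j];
      row.push(sum);
    }
    out.push(row);
  }
  return out;
}

function translation(x: number, y: number, z: number): Mat4Rows {
  return [[1, 0, 0, x], [0, 1, 0, y], [0, 0, 1, z], [0, 0, 0, 1]];
}

function uniformScaling(s: number): Mat4Rows {
  return [[s, 0, 0, 0], [0, s, 0, 0], [0, 0, s, 0], [0, 0, 0, 1]];
}

function sameMatrix(a: Mat4Rows, b: Mat4Rows): boolean {
  return a.every((row, i) => row.every((value, j) => value === b[i][j]));
}

// Transform 1: position (5, 0, 0), scale 2, anchor (1, 0, 0).
// M = P * S * A, where A translates by the negated anchor (rotation is identity).
const withAnchor = multiply(
  multiply(translation(5, 0, 0), uniformScaling(2)),
  translation(-1, 0, 0)
);

// Transform 2: position (3, 0, 0), scale 2, no anchor.
const withoutAnchor = multiply(translation(3, 0, 0), uniformScaling(2));

// Two different node transforms, one and the same matrix.
const indistinguishable = sameMatrix(withAnchor, withoutAnchor);
console.log(indistinguishable);
```

Since both descriptions produce the same 4x4 matrix, a conversion that starts from the matrix alone has no way to know which position and anchor pair was intended.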