Modeling and Rendering Fabrics at Micron-Resolution

Abstract: Rendering fabrics using micro-appearance models (fiber-level microgeometry coupled with a fiber scattering model) can take hours per frame. We present a fast, precomputation-based algorithm for rendering both single and multiple scattering in fabrics with repeating structure illuminated by directional and spherical Gaussian lights.

Precomputed light transport (PRT) is well established but challenging to apply directly to cloth. This paper shows how to decompose the problem and pick the right approximations to achieve very high accuracy, with significant performance gains over path tracing. We treat single and multiple scattering separately and approximate local multiple scattering using precomputed transfer functions represented in spherical harmonics. We handle shadowing between fibers with precomputed per-fiber-segment visibility functions, using two different representations to separately deal with low and high frequency spherical Gaussian lights.

Our algorithm is designed for GPU performance and high visual quality. Compared to existing PRT methods, it is more accurate. In tens of seconds on a commodity GPU, it renders high-quality supersampled images that take path tracing tens of minutes on a compute cluster.
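
The abstract distinguishes low and high frequency spherical Gaussian lights. As a reminder of what that distinction means, a spherical Gaussian is commonly parameterized by an axis, a sharpness, and an amplitude. The sketch below evaluates that standard form; the function and parameter names are illustrative assumptions and are not taken from the paper.

```python
import math

def spherical_gaussian(direction, axis, sharpness, amplitude):
    """Evaluate amplitude * exp(sharpness * (dot(direction, axis) - 1)).

    Both direction and axis are assumed to be unit-length 3-vectors.
    """
    cosine = sum(d * a for d, a in zip(direction, axis))
    return amplitude * math.exp(sharpness * (cosine - 1.0))

# Hypothetical light centered on +z, queried 90 degrees away from its axis.
axis = (0.0, 0.0, 1.0)
side = (1.0, 0.0, 0.0)
broad = spherical_gaussian(side, axis, sharpness=2.0, amplitude=1.0)
narrow = spherical_gaussian(side, axis, sharpness=200.0, amplitude=1.0)
```

A small sharpness gives a broad, low-frequency lobe that still contributes far from its axis, while a large sharpness gives a narrow, high-frequency lobe that is nearly zero there.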


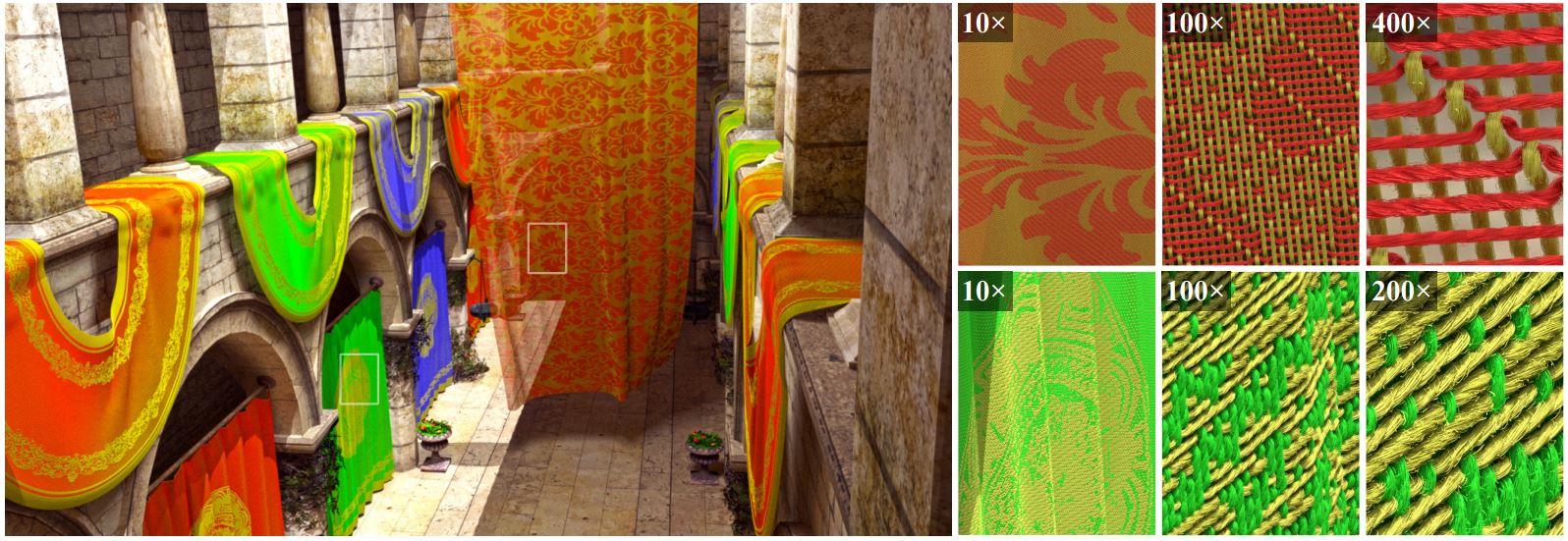

Fiber-Level On-the-Fly Procedural Textiles

Fujun Luan¹, Shuang Zhao², and Kavita Bala¹

¹Cornell University, ²UC Irvine

Computer Graphics Forum (Eurographics Symposium on Rendering), 2017

Download: [ Paper PDF (17.8 MB) ]

Abstract: Procedural textile models are compact, easy to edit, and can achieve state-of-the-art realism with fiber-level details. However, these complex models generally need to be fully instantiated (a.k.a. realized) into 3D volumes or fiber meshes and stored in memory. We introduce a novel realization-minimizing technique that enables physically based rendering of procedural textiles without the need for full model realizations. The key ingredients of our technique are new data structures and search algorithms that look up regular and flyaway fibers on the fly, efficiently and consistently. Our technique works with compact fiber-level procedural yarn models in their exact form with no approximation imposed. In practice, our method can render very large models that are practically unrenderable using existing methods, while using considerably less memory (60–200X less) and achieving good performance.
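
To illustrate only the general idea of looking fibers up on the fly instead of storing them, here is a minimal sketch under stated assumptions. It regenerates a point on a twisted fiber from a yarn seed and fiber index each time it is queried. The helix model, the function `fiber_point`, and its parameters are hypothetical; the paper's actual data structures and search algorithms are not described in the abstract and are not reproduced here.

```python
import math
import random

def fiber_point(yarn_seed, fiber_index, z, yarn_radius=1.0, twist=0.5):
    """Return the (x, y, z) position of one fiber at height z along the yarn.

    Nothing is stored: the fiber's radial offset and starting phase are
    re-derived from a deterministic per-fiber random stream on every call.
    """
    rng = random.Random(f"{yarn_seed}:{fiber_index}")
    # sqrt gives a uniform distribution over the yarn's circular cross-section.
    radius = yarn_radius * math.sqrt(rng.random())
    phase = 2.0 * math.pi * rng.random()
    angle = phase + twist * z  # the fiber rotates around the yarn axis with z
    return (radius * math.cos(angle), radius * math.sin(angle), z)

# Two independent queries for the same fiber agree without any stored geometry.
first = fiber_point(yarn_seed=7, fiber_index=3, z=0.25)
second = fiber_point(yarn_seed=7, fiber_index=3, z=0.25)
```

Because every query is a pure function of its inputs, repeated lookups are consistent, which is the property the abstract emphasizes.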


Fitting Procedural Yarn Models for Realistic Cloth Rendering

Shuang Zhao¹, Fujun Luan², and Kavita Bala²

¹UC Irvine, ²Cornell University

ACM Transactions on Graphics (SIGGRAPH 2016), 35(4), July 2016

Download: [ Paper PDF (17.0 MB) ]

Abstract: Fabrics play a significant role in many applications in design, prototyping, and entertainment. Recent fiber-based models capture the rich visual appearance of fabrics, but are too onerous to design and edit. Yarn-based procedural models are powerful and convenient, but too regular and not realistic enough in appearance. In this paper, we introduce an automatic fitting approach to create high-quality procedural yarn models of fabrics with fiber-level details. We fit CT data to procedural models to automatically recover a full range of parameters, and augment the models with a measurement-based model of flyaway fibers. We validate our fabric models against CT measurements and photographs, and demonstrate the utility of this approach for fabric modeling and editing.


Abstract: Micro-appearance models explicitly model the interaction of light with microgeometry at the fiber scale to produce realistic appearance. To effectively match them to real fabrics, we introduce a new appearance matching framework to determine their parameters. Given a micro-appearance model and photographs of the fabric under many different lighting conditions, we optimize for parameters that best match the photographs using a method based on calculating derivatives during rendering. This highly applicable framework, we believe, is a useful research tool because it simplifies development and testing of new models.

Using the framework, we systematically compare several types of micro-appearance models. We acquired computed microtomography (micro CT) scans of several fabrics, photographed the fabrics under many viewing/illumination conditions, and matched several appearance models to this data. We compare a new fiber-based light scattering model to the previously used microflake model. We also compare representing cloth microgeometry using volumes derived directly from the micro CT data to using explicit fibers reconstructed from the volumes. From our comparisons we make the following conclusions: (1) given a fiber-based scattering model, volume- and fiber-based microgeometry representations are capable of very similar quality, and (2) using a fiber-specific scattering model is crucial to good results as it achieves considerably higher accuracy than prior work.
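
The matching step described above, optimizing parameters using derivatives computed during rendering, can be illustrated with a deliberately tiny sketch. It assumes a toy "renderer" whose pixel value is albedo times light intensity and fits the albedo by plain gradient descent. The functions `render` and `fit_albedo`, the step size, and the synthetic "photographs" are all assumptions for illustration, not the framework from the paper.

```python
def render(albedo, light):
    """Toy renderer: return the pixel value and its derivative w.r.t. albedo."""
    return albedo * light, light

def fit_albedo(lights, photos, start=0.1, step=0.05, iterations=200):
    """Minimize the sum of squared differences between renders and photos."""
    albedo = start
    for _ in range(iterations):
        gradient = 0.0
        for light, photo in zip(lights, photos):
            value, d_value = render(albedo, light)
            # Chain rule: d/d_albedo of (value - photo)^2.
            gradient += 2.0 * (value - photo) * d_value
        albedo -= step * gradient
    return albedo

# Synthetic "photographs" under three lighting conditions, true albedo 0.6.
lights = [0.5, 1.0, 2.0]
photos = [0.6 * light for light in lights]
fitted = fit_albedo(lights, photos)
```

The point of the sketch is the structure: the renderer returns derivatives alongside pixel values, and the optimizer consumes them across all lighting conditions at once.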


Modular Flux Transfer: Efficient Rendering of High-Resolution Volumes with Repeated Structures

Shuang Zhao¹, Miloš Hašan², Ravi Ramamoorthi³, and Kavita Bala¹

¹Cornell University, ²Autodesk, ³University of California, Berkeley

ACM Transactions on Graphics (SIGGRAPH 2013), 32(4), July 2013

Download: [ Paper PDF (15 MB) ]

Abstract: The highest fidelity images to date of complex materials like cloth use extremely high-resolution volumetric models. However, rendering such complex volumetric media is expensive, with brute-force path tracing often the only viable solution. Fortunately, common volumetric materials (fabrics, finished wood, synthesized solid textures) are structured, with repeated patterns approximated by tiling a small number of exemplar blocks. In this paper, we introduce a precomputation-based rendering approach for such volumetric media with repeated structures based on a modular transfer formulation. We model each exemplar block as a voxel grid and precompute voxel-to-voxel, patch-to-patch, and patch-to-voxel flux transfer matrices. At render time, when blocks are tiled to produce a high-resolution volume, we accurately compute low-order scattering, with modular flux transfer used to approximate higher-order scattering. We achieve speedups of up to 12X over path tracing on extremely complex volumes, with minimal loss of quality. In addition, we demonstrate that our approach outperforms photon mapping on these materials.
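
The split between accurately computed low-order scattering and precomputed higher-order transfer can be sketched in a heavily simplified form. The example below assumes a single block with one voxel-to-voxel transfer matrix T, precomputes the sum T + T² + … up to a fixed bounce count, and applies it at render time to a low-order flux vector. This is an assumption-laden toy; it omits the patch-to-patch and patch-to-voxel matrices and the tiling of blocks described in the abstract, and all function names are hypothetical.

```python
def mat_mul(a, b):
    """Multiply two square matrices stored as lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]

def mat_vec(m, v):
    """Multiply a matrix by a vector."""
    return [sum(x * y for x, y in zip(row, v)) for row in m]

def precompute_higher_order(transfer, bounces):
    """Precompute T^1 + T^2 + ... + T^bounces for a one-bounce matrix T."""
    power = [row[:] for row in transfer]
    total = [row[:] for row in transfer]
    for _ in range(bounces - 1):
        power = mat_mul(power, transfer)
        total = [[t + p for t, p in zip(t_row, p_row)]
                 for t_row, p_row in zip(total, power)]
    return total

def render_flux(low_order_flux, higher_order):
    """Add the precomputed higher-order contribution to the low-order flux."""
    extra = mat_vec(higher_order, low_order_flux)
    return [low + high for low, high in zip(low_order_flux, extra)]

# Two voxels that each pass half of their flux to the other per bounce.
transfer = [[0.0, 0.5], [0.5, 0.0]]
higher_order = precompute_higher_order(transfer, bounces=2)
flux = render_flux([1.0, 0.0], higher_order)
```

The expensive matrix powers are computed once per exemplar block, so the render-time cost reduces to a matrix-vector product.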


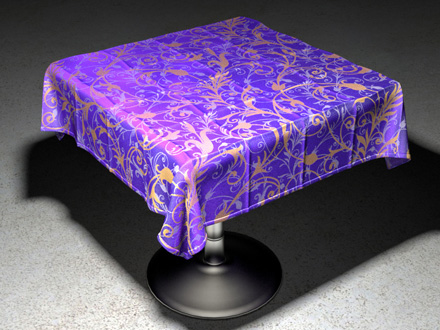

Abstract: Woven fabrics have a wide range of appearance determined by their small-scale 3D structure. Accurately modeling this structural detail can produce highly realistic renderings of fabrics and is critical for predictive rendering of fabric appearance. But building these yarn-level volumetric models is challenging. Procedural techniques are manually intensive, and fail to capture the naturally arising irregularities which contribute significantly to the overall appearance of cloth. Techniques that acquire the detailed 3D structure of real fabric samples are constrained to model only the scanned samples and cannot represent different fabric designs.

This paper presents a new approach to creating volumetric models of woven cloth, which starts with user-specified fabric designs and produces models that correctly capture the yarn-level structural details of cloth. We create a small database of volumetric exemplars by scanning fabric samples with simple weave structures. To build an output model, our method synthesizes a new volume by copying data from the exemplars at each yarn crossing to match a weave pattern that specifies the desired output structure. Our results demonstrate that our approach generalizes well to complex designs and can produce highly realistic results at both large and small scales.
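
The core idea of assembling an output volume by copying exemplar data at each yarn crossing can be sketched as follows. This minimal example assumes a 2D weave pattern whose cells name a crossing type and a dictionary mapping each type to one small, equally sized density block. The names `synthesize`, `warp_over`, and `weft_over` are hypothetical, blocks are 2D for brevity, and the sketch ignores how the actual method selects among exemplars.

```python
def synthesize(pattern, exemplars):
    """Tile exemplar blocks into one grid according to a weave pattern.

    pattern   -- list of rows, each a list of crossing-type keys
    exemplars -- dict mapping a crossing type to a block (list of rows)
    """
    block_height = len(next(iter(exemplars.values())))
    output = []
    for pattern_row in pattern:
        for y in range(block_height):
            row = []
            for crossing in pattern_row:
                row.extend(exemplars[crossing][y])  # copy one scanline of the block
            output.append(row)
    return output

# Toy 2x2 blocks standing in for scanned volumetric exemplars.
exemplars = {
    "warp_over": [[1, 1], [1, 1]],
    "weft_over": [[0, 0], [0, 0]],
}
# A plain-weave checkerboard of crossings.
pattern = [
    ["warp_over", "weft_over"],
    ["weft_over", "warp_over"],
]
volume = synthesize(pattern, exemplars)
```

Changing only `pattern` yields a different fabric design from the same small exemplar database, which mirrors the separation between design and scanned data described above.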


TOG Cover Image:

Abstract: The appearance of complex, thick materials like textiles is determined by their 3D structure, and they are incompletely described by surface reflection models alone. While volume scattering can produce highly realistic images of such materials, creating the required volume density models is difficult. Procedural approaches require significant programmer effort and intuition to design special-purpose algorithms for each material. Further, the resulting models lack the visual complexity of real materials with their naturally arising irregularities.

This paper proposes a new approach to acquiring volume models, based on density data from X-ray computed tomography (CT) scans and appearance data from photographs under uncontrolled illumination. To model a material, a CT scan is made, resulting in a scalar density volume. This 3D data is processed to extract orientation information and remove noise. The resulting density and orientation fields are used in an appearance matching procedure to define scattering properties in the volume that, when rendered, produce images with texture statistics that match the photographs. As our results show, this approach can easily produce volume appearance models with extreme detail, and at larger scales the distinctive textures and highlights of a range of very different fabrics like satin and velvet emerge automatically, all based simply on having accurate mesoscale geometry.
