Resource Efficient Machine Learning

(aka Test-time Cost Sensitive Learning / Budgeted Learning)

Imagine a doctor examining a patient. The doctor can perform several tests. Some are cheap and can be used in most cases, e.g. measuring the pulse or heart rate. Others, such as X-ray, fMRI, or CT scans, are expensive and should only be performed when necessary. If we regard examinations as features, the current leading paradigm in machine learning is analogous to a doctor who first performs every possible test on a patient and only after all of them are completed begins to infer whether there are any abnormalities.

In machine learning, the cost of feature extraction has been largely ignored. This is a fundamental shortcoming that becomes apparent when algorithms are deployed in large-scale industrial applications such as search engines or email spam filters. Here, companies must operate within fixed budgets and strictly control their resources. The accumulated cost of feature extraction dominates the run-time of the algorithm and can become the limiting factor that prevents improvements and innovation. Addressing this shortcoming will allow companies and research institutions to drastically improve their cost effectiveness and to include new features that would be prohibitively expensive under the existing machine learning regime. Because the key ingredient in many machine learning applications is often the domain expertise encoded in such features (for example in web search, email spam filtering, recommender systems, and personalized medicine), I hope that test-time budgeted learning will translate directly into a surge of improvements across many high-impact application domains.
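To make the doctor analogy concrete, here is a minimal Python sketch of prediction under a test-time budget. It is an illustration only and not the method of any publication listed below: the `Stage` class, the `predict_with_budget` function, the feature names, costs, weights, and the confidence threshold are all hypothetical. Features are evaluated cheapest first, and the expensive ones are extracted only if the running score is still uncertain and the budget allows it.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Stage:
    """One feature ("test") with a hypothetical extraction cost and weight."""
    name: str
    cost: float
    extract: Callable[[Dict[str, float]], float]
    weight: float


def predict_with_budget(
    x: Dict[str, float],
    stages: List[Stage],
    threshold: float,
    budget: float,
) -> Tuple[int, float]:
    """Evaluate stages cheapest-first; stop once confident or out of budget.

    Returns the predicted label (+1 or -1) and the total cost spent.
    """
    score, spent = 0.0, 0.0
    for stage in sorted(stages, key=lambda s: s.cost):
        if spent + stage.cost > budget:
            break  # the next test is unaffordable
        score += stage.weight * stage.extract(x)
        spent += stage.cost
        if abs(score) >= threshold:
            break  # confident enough, skip the remaining (pricier) tests
    return (1 if score > 0 else -1), spent


# Hypothetical tests: a cheap pulse measurement and an expensive CT scan.
stages = [
    Stage("ct_scan", cost=100.0, extract=lambda x: x["ct"], weight=1.0),
    Stage("pulse", cost=1.0, extract=lambda x: x["pulse"], weight=1.0),
]

clear = {"pulse": 2.0, "ct": 2.0}        # the cheap test alone is decisive
borderline = {"pulse": 0.2, "ct": -1.5}  # the cheap test is inconclusive

print(predict_with_budget(clear, stages, threshold=1.0, budget=200.0))
print(predict_with_budget(borderline, stages, threshold=1.0, budget=200.0))
print(predict_with_budget(borderline, stages, threshold=1.0, budget=50.0))
```

In this toy setting the clear case stops after the cheap test, the borderline case pays for the expensive test only when the budget permits, and with a tight budget the prediction falls back on the cheap feature alone.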

Data:

Throughout our publications, we made extensive use of the Yahoo! Learning to Rank Challenge version 2.0 data set. It contains the original Yahoo web search ranking data and annotated feature costs.

It took us some time to obtain all the feature costs and to make sure the data could be released, so if you use it in a publication, please cite BIBTEX.

Publications:

[PDF] Gao Huang, Shichen Liu, Laurens van der Maaten, Kilian Q. Weinberger

CondenseNet: An Efficient DenseNet using Learned Group Convolutions

The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018, in press…

[PDF] Gao Huang, Danlu Chen, Tianhong Li, Felix Wu, Laurens van der Maaten, Kilian Q. Weinberger

Multi-Scale Dense Networks for Resource Efficient Image Classification

International Conference on Learning Representations (ICLR), 2018, in press…

[PDF] Yan Wang, Lequn Wang, Yurong You, Xu Zou, Vincent Chen, Serena Li, Bharath Hariharan, Gao Huang, Kilian Q. Weinberger

Resource Aware Person Re-identification across Multiple Resolutions

The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018, in press…

[PDF] W. Chen, J. Wilson, S. Tyree, K. Weinberger, and Y. Chen. Compressing Convolutional Neural Networks in Frequency Domain. Proc. ACM SIGKDD Conference on Knowledge Discovery and Data Mining (KDD-16), 2016.

[PDF][Video] Wenlin Chen, James T. Wilson, Stephen Tyree, Kilian Q. Weinberger, Yixin Chen. Compressing Neural Networks with the Hashing Trick. International Conference on Machine Learning (ICML), Lille, France, pp. 2285–2294, 2015.

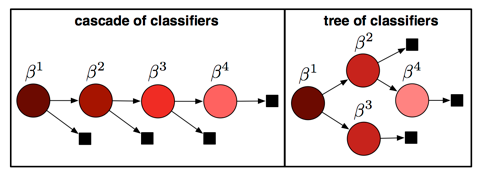

[PDF][CODE][BIBTEX] Zhixiang Xu, Matt J. Kusner, Kilian Q. Weinberger, Minmin Chen, Olivier Chapelle. Budgeted Learning with Trees and Cascades. Journal of Machine Learning Research (JMLR), 15(Jun):2113–2144, 2014.

[PDF][CODE][BIBTEX] Matt Kusner, Stephen Tyree, Kilian Q. Weinberger, Kunal Agrawal. Stochastic Neighbor Compression. International Conference on Machine Learning (ICML), Beijing, China (in press ...)

[PDF][CODE][BIBTEX] Zhixiang (Eddie) Xu, Matt J. Kusner, Gao Huang, Kilian Q. Weinberger. Anytime Representation Learning. Proceedings of the 30th International Conference on Machine Learning (ICML), Atlanta, GA, pages 1076–1084, 2013.

[PDF][BIBTEX] Zhixiang (Eddie) Xu, Matt J. Kusner, Kilian Q. Weinberger, Minmin Chen. Cost-Sensitive Tree of Classifiers. Proceedings of the 30th International Conference on Machine Learning (ICML), Atlanta, GA, pages 133–141, 2013.

[PDF][CODE][BIBTEX] Zhixiang (Eddie) Xu, Kilian Q. Weinberger, Olivier Chapelle. The Greedy Miser: Learning under Test-time Budgets. Proceedings of the 29th International Conference on Machine Learning (ICML), Edinburgh, Scotland, Omnipress, pages 1175–1182, 2012.

[PDF][CODE][BIBTEX] Minmin Chen, Zhixiang (Eddie) Xu, Kilian Q. Weinberger, Olivier Chapelle, Dor Kedem. Classifier Cascade: Tradeoff between Accuracy and Feature Evaluation Cost. Proceedings of the Fifteenth International Conference on Artificial Intelligence and Statistics (AISTATS), JMLR W&C Proceedings 22: AISTATS 2012, pages 218-226, MIT Press.