Chapter 18

Common Knowledge of Rationality

In this chapter, we turn to using our model of knowledge to reason about games.

18.1 Knowledge and Games

We must first define what it means for a knowledge structure to be “appropriate” for a game :

Definition 18.1. We say that a knowledge structure tuple is appropriate for a finite game if:

- (the players are the same)

- (the outcome in each world is an action profile in the game)

- For all , and all , , where denotes the component of . (That is, players always know their own strategy.)

In other words, each world models what action each of the players take; players have beliefs about what others are doing and believing, and we assume players always know their own action. See Figure 18.1 for a simple knowledge structure appropriate for the Prisoner’s Dilemma game.

Figure 18.1: A knowledge network for the Prisoner’s Dilemma. This graph looks similar to the one we considered for the muddy children puzzle, but notice that there each player knows the other player’s state but not their own, whereas here each player knows their own strategy but not the other’s. Also observe that, while in this network, there is exactly one state per action profile, this does not necessarily need to hold; other networks for the same game may have several nodes with the same action profile but different beliefs.

We can now define an event , which denotes the event that player is acting “rationally.” We take a conservative view and provide a minimal (and weak) definition of what it means to be rational. Basically, we define what it means to not be “crazy” or “totally irrational”: following the discussion in chapter 1, we say that player is (minimally) rational at a world if there does not exists some strategy that would give strictly better utility in every world considers possible. (Clearly, if such a strategy were to exist, then would be stupid not to use it.) More precisely, let

and let denote the event that everyone is rational:

Note that if a player is rational at some state , this effectively means that there is action that strictly dominates in every world considers possible. Based on this observation, we can get the following simple characterizations of states where holds:

Theorem 18.1. Let be a finite normal-form game, and let be an action profile. Then the following statements are equivalent:

- is not strictly dominated (i.e., survives one round of iterative strict dominance).

- There exists a knowledge structure that is appropriate for and a state such that and .

Proof. We first prove that (1) implies (2) and then that (2) implies (1).

(1) (2). Consider an action profile that survives one step of iterative strict dominance. Construct a knowledge structure where we have a state for every possible action profile such that:

- Let ;

- Let (that is, has no idea what any other player is doing, but knows their own action).

It is easily verified that in every world, players know their beliefs and their strategy; they know their strategy by definition, and the fact that they know their beliefs follows from the fact that the beliefs of a player are determined solely by their strategy. We can now claim that is the world we are looking for. By our definition of , . Furthermore, since is not strictly dominated, we have by the definition of that .

(2) (1). Assume there exists some knowledge structure appropriate for and some state such that and . Assume for contradiction that there exists some player and some strategy that strictly dominates . Since , there must exist some state such that:

But, because players “know their own strategy” (since is appropriate for ), we have that . It follows that

which contradicts the fact that strictly dominates .

■

18.2 An Epistemic Characterization of ISD

In this section, we instead show how to get an “epistemic” (i.e., knowledge-based) characterization of ISD and PNE. As we saw in chapter 16, it is often natural to not only assume that everyone is rational, but also that it is commonly known that everyone is rational (i.e., everyone is rational, everyone knows that everyone is rational, everyone knows that everyone knows that everyone is rational, and so on)—this is referred to as common knowledge of rationality (CKR). The following theorem shows that CKR characterizes exactly the set of strategies surviving ISD.

Theorem 18.2. Let be a finite normal-form game, and let be an action profile. Then the following statements are equivalent:

- survives iterative strict dominance.

- There exists a knowledge structure that is appropriate for and a state such that and ω ∈ C RAT (i.e., common knowledge of rationality holds at ).

Proof. [Advanced] Again, we separately show each direction.

(1) (2). Let be the set of strategies for player surviving ISD, and let be the set of strategy profiles surviving ISD. We construct a knowledge structure appropriate for where we have a state for every possible action profile such that:

- ;

- (that is, knows their own action, but otherwise considers all strategy profiles for that survive ISD possible).

Just as in the proof of Theorem 18.1, we can easily verify that in every world, players know their strategy and beliefs. We additionally have the following claim:

Claim 18.1. Consider the knowledge structure defined above. For every , we have that ω ∈ RAT.

Proof. Assume for contradiction that there exists some state and some player such that . That is, there exists some that strictly dominates with respect to for all . By our construction of (specifically, ), thus strictly dominates with respect to . Thus, intuitively, should have been deleted by ISD (since dominates it with respect to the remaining set of strategies), which would be a contradiction. In fact, the only reason why may not be removed in the ISD process at this stage is if were not inside , since ISD only considers strict dominance by strategies still surviving. But in that case, was deleted due to being dominated by some strategy ; in turn, this strategy is either in or was previously removed due to being dominated by some strategy . Since the strategy space is finite, we must eventually will reach some , which strictly dominates itself with respect to by transitivity of strict dominance (and the fact that the strategy space shrinks, preventing a strategy strictly dominated earlier in the process from not being strictly dominated later). This, then, contradicts the assumption that .

■

Given this claim, it directly follows that for every player , and state , ; thus, . Consequently, we thus have that for every , , and so on. By induction it follows that for every , and every , , and thus we also have that .

Thus for every strategy profile that survives ISD, we have that and the state satisfy the required conditions.

(2) (1). Consider some knowledge structure appropriate for . Let denote the set of strategies for player surviving rounds of ISD (and define as the set of strategy profiles surviving ISD). We shall prove by induction that for any state (i.e., where everybody knows that everybody knows …( times) …that everyone is rational), we have . The base case () is proven by Theorem 18.1 above.

For the inductive step, assume the statement is true for , and let us prove it for . Consider some and some player . Note that if , then (since, by the definition of beliefs, ); consequently, as well. So, by the induction hypothesis, ; furthermore, for every , . Since , it follows by definition that is an action that is inside , but not strictly dominated by any action with respect to ; hence, (i.e., it will survive one more round). And since the above holds for all players, we have . So, for any , for any , implying that survives ISD.

■

18.3 An Epistemic Characterization of PNE

Can we hope to also get an epistemic characterization of PNE? To do this, we need to add additional assumptions about the knowledge of players. In particular, we will need to assume that everyone knows not only their own strategy, but also the strategies of others! Specifically, let be the event that all players know all players’ strategies; formally,

Theorem 18.3. Let be a finite normal-form game, and let be an action profile. Then the following statements are equivalent:

Proof. [Advanced] We prove equivalence by showing that (1) implies (2) implies (3) implies (1).

(1) (2). Consider a structure with just one state such that and . Clearly, at , and hold, and consequently, by induction, also .

(2) (3). Trivial (because implies ).

(3) (1). and taken together imply that for every player , player ’s strategy at is a best response with respect to ; thus, is a PNE.

■

18.4 Knowledge vs. Belief: Explaining Bubbles in the Market

So far in our discussion we have only considered a model of knowledge. We may also use a similar model to reason about belief; we can define a belief structure in exactly the same way as a knowledge structure, except that we remove the second-to-last condition in Definition 17.1—that is, we no longer require that in every state , players always need to consider possible. We say that a player believes if holds in every world considers possible, and we define a belief event in exactly the same ways as (except that it is defined on a “belief structure” rather than a “knowledge structure”).

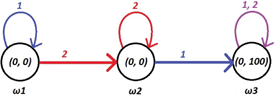

Note that once we drop the second-to-last condition in Definition 17.1, the two graph properties of knowledge networks discussed in Section 17.2 (i.e., self-loops and bidirectional edges) no longer need to hold for “belief networks”: self-loops are no longer required and as a consequence, neither are bidirectional edges. To see how this makes things different, let us once more consider an auction scenario with two players and a single item and the belief structure depicted in Figure 18.2. Assume that the true state of the world is . Player 1 here knows the true state of the world. However, player 2 has an incorrect belief: he believes that is the true state of the world. (Notice that there is no self-loop for player 2 at , so he does not even consider the true state possible; this could not have happened in a knowledge structure.) Thus, player 2 believes that player 1 believes that player 2 values the item at 100, when player 1 (correctly) believes that player 2 values it at zero!

Figure 18.2: A belief network for a second-price auction. Some states in a belief network (here, and ) lead to players having incorrect beliefs.

In other words, player 2 (incorrectly) believes that he is smarter than player 1, who (according to player 2) incorrectly believes that player 2 is foolishly valuing the good at 100. How much do you think an auctioneer could sell this item for in such a situation? Remember that not only everyone thinks that the item is worthless, but we also have that everyone believes that everyone thinks the item is worthless! But, as is turns out, just the fact that somebody believes that somebody else believes that the item is worth 100 means that a sufficiently clever auctioneer can sell it for close to 100, assuming that common belief of rationality holds (i.e., it is common belief that everyone is rational, where common belief is defined exactly as common knowledge but in belief structures). In essence, we get a “bubble” in the market where the item gets sold at a high price despite nobody actually having a high valuation for it.

We will briefly and informally explain how this can happen. Consider a standard second-price auction, but change it slightly so that the seller will also decide to forgo some small amount of money and distribute back to the players as a “participation reward”; the money gets split among the players based on how much the players bid (so that player who bid a lot gets a larger fraction of ). Intuitively, adding such a participation reward should make it worse for the seller, as he now loses out of the profit he makes from selling the item. But, as we shall see (and as is common in practice), adding small “welcome gifts” can be a way to extort more money from the buyers!

More precisely, in an auction with players, player gets as a participation reward where is the player’s bid; note that each such participation reward is smaller than and thus in total, the auctioneer never spends more than . Intuitively, however, this incentivizes players to bid high, especially if they are sure to never win in the auction (as in this case, they increase their participation reward). As we shall argue, in any outcome that is consistent with CKR, the object is sold for close to 100. The reasoning works as follows:

- We first claim that at , a rational player 2 must bid at least 100. To show this, note that if we had been running a standard second-price auction (without a participation reward), then player 2 clearly can never improve their utility by bidding less than 100 (since he will anyway end up paying the same price if he wins the auctions, and in case he loses, he gets a utility of ). Now, since the actual auction we are running has been modified to include the participation reward, note that by bidding less than 100, player 2 gets a strictly smaller part of the reward . Thus, we conclude that by bidding less than 100, player 2 gets strictly less in utility (since player 2’s utility is the utility he would have gotten in a standard second-price auction plus the participation reward).

- Now, in , since player 1 believes (incorrectly) that the true state is , a rational player 1 must bid at least 99: he knows that player 2 (being rational) bids at least 100, thus by bidding 99 he gets as much as possible of the participation reward.

- Thus, in the true state of the world, , player 2, who incorrectly believes that the true state is , must bid at least 98 (since he is sure that player 1 will bid at least 99).

- Finally, at , player 1, who correctly believes that the true state is must bid at least 97 (since he is sure that player 2 will bid at least 98).

We conclude that in the true state of the world, , player 1 will bid at least 97 and player 2 will bid at least 98; thus, the item will be sold for at least 97, and the auctioneer is guaranteed to receive a utility of at least for a worthless item!

Notes

Our treatment of the knowledge-based characterizations of ISD and PNE follows ideas from [TW88, AB95, HP09], but we explore them here in a somewhat different setting that enables a significantly simpler analysis. As far as we know, these characterizations are new (and our formalism is closest in spirit to [HP09, CMP16]).

Closely related characterization results was first proven by [TW88] and [AB95] relying on a different (stronger) notion of rationality. More precisely, in our treatment we consider a weak “possibilistic” notion of rationality. A stronger definition of rationality can be obtained by considering knowledge structures with probabilities: we no longer only have just a set of worlds players consider possible at each world, but we also assign probabilities to the worlds a player considers possible (e.g., a player may consider some worlds more likely than others). In such a setting, the most popular notion of rationality requires a player ’s strategy at to maximize expected utility given the distribution of strategies for players induced by their beliefs. CKR is characterized by ISD in this setting as long as we change the definition of ISD to also allow for domination by “mixed strategies” (that is, we remove actions that are strictly dominated by a probability distribution over actions)—this was the result first proved by [TW88]. An analogous characterizations of (mixed-strategy) Nash equilibria were obtained in [AB95].

The bubbles in the market example originates from the treatment in [CMP16] where a very similar auction mechanism is analyzed (but the actual example is new).