Chapter 17

Knowledge and Common Knowledge

As we have already seen in chapter 16, reasoning about players’ knowledge about other players’ knowledge was instrumental in understanding herding behavior. In this chapter, we introduce formal models for reasoning about such higher-level knowledge (and beliefs). But before doing so, let us first develop some more intuitions, through the famous “muddy children” puzzle.

17.1 The Muddy Children Puzzle

Suppose a group of children are in a room. Some of the children have mud on their foreheads; all of the children can see any other child’s forehead, but they are unable to see, feel, or otherwise detect whether they themselves have mud on their own forehead. Their father enters the room, announces that some of the children have mud on their foreheads, and asks if anyone knows for sure that they have mud on their forehead. All of the children say “no.”

The father asks the same question repeatedly, but the children continue to say “no,” until, suddenly, on the tenth round of questioning, all of the children with mud on their foreheads answer “yes”! How many children had mud on their foreheads?

The answer to this question is, in fact, 10. More generally, we can show the following (informally stated) claim:

Claim 17.1 (informal). All of the muddy children will say “yes” in, and not before, the $n$th round of questioning if and only if there are exactly $n$ muddy children.

Proof. (informal) We here provide an informal inductive argument appealing to “intuitions” about what it means to “know” something; later, we shall formalize a model of knowledge that will enable a formal proof. Let $P(n)$ be the statement that the claim is true for $n$ muddy children. That is, we’re trying to prove that $P(n)$ is true for all $n \geq 1$. We prove the claim by induction.

Base case: We begin by showing $P(1)$. Because the father mentions that there are some muddy children, if there is only one muddy child, they will see nobody else in the room with mud on their forehead and know in the first round that they are muddy. Conversely, if there are two or more muddy children, they are unable to discern immediately whether they have mud on their own forehead; all they know for now is that some children (which may or may not include themselves) are muddy.

The inductive step: Now assume that $P(k)$ is true for all $k \leq n$; we will show $P(n+1)$. Suppose there are exactly $n+1$ muddy children. Since there are more than $n$ muddy children, by the induction hypothesis nobody will say “yes” before round $n+1$. In that round, each muddy child sees $n$ other muddy children, and thus knows that there are either $n$ or $n+1$ muddy children in total. However, by the induction hypothesis, they are able to infer that, were there only $n$ muddy children, someone would have said “yes” in the previous round; since nobody has spoken yet, each muddy child is able to deduce that there are in fact $n+1$ muddy children, including themselves.

If there are strictly more than $n+1$ muddy children, however, then all children can tell that there are at least $n+1$ muddy children just by looking at the others; hence, by the induction hypothesis, they can infer from the start that nobody will say “yes” in round $n$. So in round $n+1$ they will have no more information than they did initially, and will be unable to tell whether they are muddy as a result.

■
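The informal argument can be checked by brute force for small groups. The following is a minimal sketch, not part of the original text: it assumes that every child reasons perfectly and that each round of “no” answers is heard by everyone. The names `knows_muddy` and `first_yes_round` are hypothetical helpers introduced only for this illustration.

```python
from itertools import product


def knows_muddy(world, i, possible):
    """True if child i can conclude, at `world`, that its own forehead is muddy."""
    # Child i sees every other forehead, so the only alternative it can
    # entertain is the world that differs from `world` on its own forehead.
    clean_version = world[:i] + (False,) + world[i + 1:]
    return world[i] and clean_version not in possible


def first_yes_round(actual):
    """Return (round number, children saying "yes") for the first "yes" round.

    `actual` is a tuple of booleans; actual[i] is True when child i is muddy.
    """
    n = len(actual)
    # The father's announcement rules out the world where nobody is muddy.
    possible = {w for w in product((False, True), repeat=n) if any(w)}
    if actual not in possible:
        raise ValueError("the announcement requires at least one muddy child")
    round_no = 1
    while True:
        yes = {i for i in range(n) if knows_muddy(actual, i, possible)}
        if yes:
            return round_no, yes
        # Everyone publicly said "no": discard every world in which
        # some child would have been able to say "yes" in this round.
        possible = {w for w in possible
                    if not any(knows_muddy(w, i, possible) for i in range(n))}
        round_no += 1


print(first_yes_round((True, True, False)))  # (2, {0, 1})
```

With two muddy children out of three, the first “yes” comes in round 2 and only from the muddy children, as the claim predicts.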

17.2 Kripke’s “Possible Worlds” Model

Let us now introduce a model of knowledge that allows us to formally reason about these types of problems. We use an approach first introduced by the philosopher Saul Kripke. To reason about beliefs and knowledge, the idea is to consider not only facts about the “actual” world we are in, but also to consider a set of “possible” worlds $\Omega$. Each possible world, $\omega \in \Omega$, specifies some “outcome” $s(\omega)$; think of the outcome as the set of “ground facts” that we care about. For instance, in the muddy children example, $s(\omega)$ specifies which children are muddy. Additionally, each world specifies “beliefs” for all of the players. In contrast to our treatment in chapter 16, we here focus on “possibilistic” (as opposed to “probabilistic”) beliefs: for each player $i$, player $i$’s beliefs at world $\omega$, denoted $P_i(\omega)$, are specified as a set of worlds; think of these as the worlds that player $i$ considers possible at $\omega$ (i.e., worlds that $i$ cannot rule out as being impossible).1 We now, intuitively, say that a player $i$ knows some statement $\phi$ at some world $\omega$ if $\phi$ is true in every world $i$ considers possible at $\omega$ (i.e., $\phi$ holds at all the worlds in $P_i(\omega)$).

Knowledge structures Let us turn to formalizing the possible worlds model, and this notion of knowledge.

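Definition 17.1. A (finite) knowledge structure is a tuple $M = (n, \Omega, X, s, \vec{P})$, where $\vec{P} = (P_1, \ldots, P_n)$, such that:

- $[n] = \{1, \ldots, n\}$ is a finite set of players.

- $\Omega$ is a finite set; we refer to $\Omega$ as the set of “possible worlds” or “possible states of the world.”

- $X$ is a set of outcomes.

- $s : \Omega \to X$ is a function that maps worlds $\omega \in \Omega$ to outcomes $s(\omega) \in X$.

- For all $i \in [n]$, $P_i : \Omega \to 2^{\Omega}$ maps worlds to sets of possible worlds; we refer to $P_i(\omega)$ as the beliefs of $i$ at $\omega$ (that is, these are the worlds that $i$ considers possible at $\omega$).

- For all $\omega \in \Omega$, $i \in [n]$, it holds that $\omega \in P_i(\omega)$. (That is, in every world $\omega$, players always consider that world possible.)

- For all $\omega \in \Omega$, $i \in [n]$, and $\omega' \in P_i(\omega)$, it holds that $P_i(\omega') = P_i(\omega)$. (That is, at every world $\omega$, in every world players consider possible, they have the same beliefs as in $\omega$.)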

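Definition 17.2. Given a knowledge structure $M = (n, \Omega, X, s, \vec{P})$, we define an event, $E$, in $M$ as a subset of states $E \subseteq \Omega$ (think of these as the set of states where “$E$ holds”). We say that $E$ holds at a world $\omega$ if $\omega \in E$. Given an event $E$, define the event $K_i^M E$ (“player $i$ knows $E$”) as the set of worlds $\omega \in \Omega$ such that $P_i(\omega) \subseteq E$. Whenever $M$ is clear from the context, we simply write $K_i E$. Finally, define the event $K^M E$ (“everyone knows $E$”) as $K^M E = \bigcap_{i \in [n]} K_i^M E$.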

The last two conditions in Definition 17.1 deserve some clarification. Given our definition of knowledge (Definition 17.2), the last condition in Definition 17.1 is equivalent to saying that players “know their beliefs”: it requires that at every world $\omega$, in every world $\omega'$ they consider possible (in $\omega$), their beliefs are the same as in $\omega$ (and thus at $\omega$, players “know their beliefs at $\omega$”).

The second-to-last condition, on the other hand, says that at any world $\omega$, players never rule out (the true world) $\omega$. Intuitively, if $\omega$ is the true state of the world, players can never have seen evidence that $\omega$ is impossible and thus they should never rule it out. In particular, this condition means that players can never know something that is not true: if something holds in every world a player considers possible, then it must hold in the actual world, since the actual world is deemed possible. While this condition is appropriate for defining knowledge, it may not always be the best way to model beliefs; indeed, sometimes people may be fully convinced of false statements! We will return to this point later when discussing the difference between knowledge and beliefs.

Knowledge partitions Let us point out a useful consequence of the two conditions we just discussed. A direct consequence of the last condition is that for each player $i$, and any two worlds $\omega, \omega' \in \Omega$, we have that either $P_i(\omega) = P_i(\omega')$ or $P_i(\omega)$ and $P_i(\omega')$ are disjoint. The second-to-last condition, in turn, implies that the beliefs of a player cover the full set of possible worlds. Thus, taken together, we have that the beliefs of a player partition the states of the world into disjoint “cells” within which the beliefs of the player are all the same; these cells are sometimes referred to as the knowledge partitions or knowledge cells of a player.

Knowledge networks To reason about knowledge structures it is convenient to represent them as “knowledge networks/graphs”: The nodes of the graph correspond to the possible states of the world, $\omega \in \Omega$; we label each node $\omega$ by the outcome, $s(\omega)$, in it. The edges in the graph represent the players’ beliefs: we draw a directed edge with label $i$ from node $\omega$ to node $\omega'$ if $\omega' \in P_i(\omega)$ (that is, if player $i$ considers $\omega'$ possible in $\omega$). Note that this graph has two important features:

- Self-loops: By the second-to-last condition in Definition 17.1, each node has a self-loop labeled by every player identity $i \in [n]$.

- Bidirectional edges: By a combination of the second-to-last and the last conditions, we have that every edge is bidirectional: if a player $i$ considers $\omega'$ possible at $\omega$ (and we draw an edge from $\omega$ to $\omega'$ with label $i$), then by the last condition (i.e., that players know their beliefs), player $i$ must consider possible the same worlds at $\omega$ and $\omega'$. By the second-to-last condition (i.e., that players never rule out the true state of the world), player $i$ must consider $\omega$ possible at $\omega$, and thus player $i$ must also consider $\omega$ possible at $\omega'$ (and consequently, we need to draw an edge from $\omega'$ to $\omega$ with label $i$).

To gain some familiarity with knowledge structures and their network representations, let us consider two examples. We first consider a simple example in the context of single-good auctions, and then return to the muddy children puzzle.

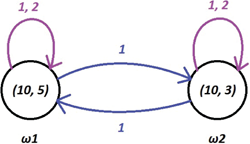

Example (A single-good auction) Consider a single-item auction with two buyers. Let the outcome space $X$ be the set of pairs $(v_1, v_2)$ (where $v_i$ specifies the valuation of player $i$). Consider a knowledge structure with just two possible worlds, $\omega_1$ and $\omega_2$, in which player 1’s valuation is the same value $v_1$ in both worlds, while player 2’s valuation is $v_2$ in $\omega_1$ and $3$ in $\omega_2$ (with $v_2 \neq 3$), and $P_1(\omega_1) = P_1(\omega_2) = \{\omega_1, \omega_2\}$, $P_2(\omega_1) = \{\omega_1\}$, and $P_2(\omega_2) = \{\omega_2\}$. See Figure 17.1 for an illustration of the knowledge network corresponding to this knowledge structure.

Figure 17.1: A knowledge network for a single-item auction, where the world state is dependent on player 2’s valuation. This captures the fact that player 2 is fully aware of his valuation, but player 1 is not.

Note that in both worlds, player 1 knows his own valuation ($v_1$), but he does not know player 2’s valuation: as far as he knows it may be either $v_2$ or $3$. In contrast, player 2 knows both his own and player 1’s valuation for the item in both worlds.

Also, note that if we were to change the structure so that $P_1(\omega_2) = \{\omega_2\}$, this would no longer be a valid knowledge structure, as the last condition in Definition 17.1 is no longer satisfied (since $\omega_2 \in P_1(\omega_1)$ but $P_1(\omega_2) \neq P_1(\omega_1)$); in particular, in $\omega_1$ player 1 would now consider it possible that he knows player 2’s valuation is 3 whereas he does not actually know it.
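The auction example can be encoded directly to check the two conditions of Definition 17.1 and to evaluate the “player knows” operator. The following is a minimal sketch: the dictionary encoding and the helper names `is_valid` and `knows` are assumptions made for illustration, and the valuations 10 and 5 are hypothetical placeholders (only the value 3 is taken from the discussion above).

```python
def is_valid(worlds, beliefs):
    """Check the last two conditions of Definition 17.1.

    beliefs[i][w] is the set of worlds player i considers possible at w.
    """
    for P in beliefs.values():
        for w in worlds:
            if w not in P[w]:                    # the true world is never ruled out
                return False
            if any(P[v] != P[w] for v in P[w]):  # players know their beliefs
                return False
    return True


def knows(P_i, event, worlds):
    """The event 'player i knows `event`': worlds w with P_i(w) contained in it."""
    return {w for w in worlds if P_i[w] <= event}


worlds = {"w1", "w2"}
# Hypothetical valuations (v1, v2); only the 3 in w2 comes from the text.
outcome = {"w1": (10, 5), "w2": (10, 3)}
beliefs = {
    1: {"w1": {"w1", "w2"}, "w2": {"w1", "w2"}},  # player 1 cannot tell the worlds apart
    2: {"w1": {"w1"}, "w2": {"w2"}},              # player 2 always knows the world
}

v2_is_3 = {w for w in worlds if outcome[w][1] == 3}
print(is_valid(worlds, beliefs))            # True
print(knows(beliefs[1], v2_is_3, worlds))   # set(): player 1 never knows it
print(knows(beliefs[2], v2_is_3, worlds))   # {'w2'}

# The modified structure discussed above: player 1's beliefs at w2 shrink to {w2}.
broken = {1: {"w1": {"w1", "w2"}, "w2": {"w2"}}, 2: beliefs[2]}
print(is_valid(worlds, broken))             # False
```

The modified structure fails the check because player 1 considers `w2` possible at `w1` while holding different beliefs in the two worlds.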

Note that a world determines not only players’ beliefs over outcomes (valuations, in the above example), but also players’ beliefs about what other players believe about outcomes. We refer to these beliefs about beliefs (or beliefs about beliefs about beliefs, etc.) as players’ higher-level beliefs. Such higher-level beliefs are needed to reason about the muddy children puzzle, as we shall now see.

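Example (the muddy children) Assume for simplicity that we have two children. To model the situation, consider an outcome space $X = \{M, C\}^2$ (i.e., an outcome specifies whether each of the children is muddy ($M$) or clean ($C$)), and the set of possible worlds $\Omega = \{\omega_1, \omega_2, \omega_3, \omega_4\}$ such that:

- $s(\omega_1) = (M, M)$;

- $s(\omega_2) = (M, C)$;

- $s(\omega_3) = (C, M)$;

- $s(\omega_4) = (C, C)$.

By the rules of the game, we have some restrictions on each player’s beliefs. Player 1’s beliefs are defined as follows:

- $P_1(\omega_1) = P_1(\omega_3) = \{\omega_1, \omega_3\}$ (player 1 knows player 2 is muddy, but cannot tell whether or not he himself is);

- $P_1(\omega_2) = P_1(\omega_4) = \{\omega_2, \omega_4\}$ (player 1 knows player 2 is clean, but cannot tell whether or not he himself is).

Analogously for player 2:

- $P_2(\omega_1) = P_2(\omega_2) = \{\omega_1, \omega_2\}$;

- $P_2(\omega_3) = P_2(\omega_4) = \{\omega_3, \omega_4\}$.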

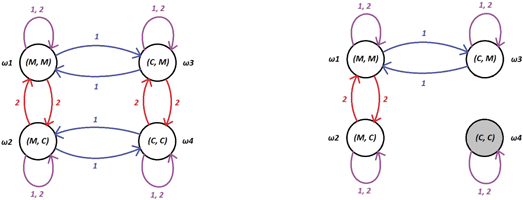

Figure 17.2: Left: the knowledge network for the muddy children example. Right: The knowledge network when the father announces that at least one child is muddy. Notice that, unless the state is $\omega_1$ (both children muddy), one of the two children is now able to determine the current state.

The knowledge network corresponding to this situation is depicted in Figure 17.2. To reason about this game, define the event $M_i$ as the set of worlds where player $i$ is muddy, and let the event $E = M_1 \cup M_2$ (that is, the event that someone is muddy). Consider now a situation where both children are muddy; that is, the true state of the world is $\omega_1$. Notice that

$$\omega_1 \in K_1 E$$

since player 1 knows that someone (player 2) is muddy. (Recall that $K_1 E$ denotes the set of states where player 1 knows that the event $E$ holds.) Similarly,

$$\omega_1 \in K_2 E.$$

Furthermore, note that for both players $i \in \{1, 2\}$,

$$\omega_1 \in \neg K_i M_i,$$

where for any event $E$, $\neg E$ denotes the complement of the event $E$, since nobody initially knows whether they are muddy or not.

17.3 Common Knowledge

Does the father’s announcement that there is some muddy child tell the children something that they did not already know? At first sight it may seem like it does not: the children already knew that some child is muddy! However, since the announcement was public, they do actually learn something new, as we shall now see.

Before the announcement, player 1 considers it possible that player 2 considers it possible that the true state of the world is $\omega_4$ (the world where both children are clean) and thus that nobody is muddy. But after the announcement, this is no longer possible: under no circumstances can $\omega_4$ be deemed possible; that is, in the knowledge network, we should now remove all arrows that point to $\omega_4$, as seen in the right of Figure 17.2. In particular, with $E$ denoting the event that someone is muddy and $\omega_1$ the true state (both children muddy), we now have that everyone knows that everyone knows that someone is muddy (which previously was not the case); that is,

$$\omega_1 \in K K E.$$

In fact, since the announcement was public, the fact that “someone is muddy” becomes commonly known—that is, everyone knows it, everyone knows that everyone knows it, everyone knows that everyone knows that everyone knows it, and so on. We formalize this notion in the straightforward way:

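Definition 17.3. Define the event $K^k E$ (“level-$k$ knowledge of $E$ holds”) as follows:

$$K^0 E = E, \qquad K^{k+1} E = K K^k E.$$

Define the event $C E$ (“$E$ is common knowledge”) as follows:

$$C E = \bigcap_{k \geq 0} K^k E.$$

So, after the announcement, we have

$$\omega_1 \in C E.$$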

The important takeaway here is that:

- if a statement gets communicated in private, the statement becomes known to the receiver; whereas,

- if the statement is publicly announced, the statement gets commonly known among the recipients.

There is an interesting graph-theoretic way to characterize common knowledge using the knowledge network corresponding to the knowledge structure:

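Proposition 17.1. Given a knowledge structure $M = (n, \Omega, X, s, \vec{P})$, an event $E$, and a state $\omega \in \Omega$, we have that common knowledge of $E$ holds at $\omega$ (i.e., $\omega \in C E$) if and only if $E$ holds at every state that is reachable from $\omega$ in the knowledge network induced by $M$.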

Proof. We prove by induction that $K^k E$ holds at some state $\omega$ if and only if $E$ holds at every state that is reachable through a path of length $k$ from $\omega$. The base case ($k = 0$) is trivial. To prove the induction step, consider some state $\omega$.

We first show that if $\omega \in K^{k+1} E$, then $E$ must hold at every state that can be reached through a path of length $k+1$ from $\omega$. Consider some length-$(k+1)$ path $(\omega_0, \omega_1, \ldots, \omega_{k+1})$ with $\omega_0 = \omega$. By the definition of $K^{k+1} E$, we have that $\omega_1 \in K^k E$, and thus by the induction hypothesis we have that $\omega_{k+1} \in E$ (as $\omega_{k+1}$ can be reached through a length-$k$ path, namely $(\omega_1, \ldots, \omega_{k+1})$, from $\omega_1$).

We next show that if $E$ holds at every state that can be reached through a path of length $k+1$ from $\omega$, then $\omega \in K^{k+1} E$. To show that $\omega \in K^{k+1} E$, we need to show that for every $i \in [n]$, and every $\omega' \in P_i(\omega)$, we have that $\omega' \in K^k E$. Consider any such state $\omega'$. By our assumption that $E$ holds at every state that can be reached through a path of length $k+1$ from $\omega$, it follows that $E$ also holds at every state that can be reached through a path of length $k$ from $\omega'$ (as any such state can be reached through a path of length $k+1$ from $\omega$). By the induction hypothesis, it thus follows that $\omega' \in K^k E$.

■
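Proposition 17.1 can be checked on the two-children network by computing common knowledge in two ways: by graph reachability, and by iterating the “everyone knows” operator until it stabilizes. The following is a minimal sketch; the string names for worlds (such as `"MM"` for both children muddy) and all helper names are assumptions made for illustration.

```python
from collections import deque


def reachable(start, beliefs):
    """All worlds reachable from `start` by following any player's edges."""
    seen, queue = {start}, deque([start])
    while queue:
        w = queue.popleft()
        for P in beliefs.values():
            for v in P[w]:
                if v not in seen:
                    seen.add(v)
                    queue.append(v)
    return seen


def everyone_knows(event, beliefs, worlds):
    """The event KE: worlds where every player's beliefs lie inside `event`."""
    return {w for w in worlds if all(P[w] <= event for P in beliefs.values())}


def common_knowledge(event, beliefs, worlds):
    """Compute E, KE, KKE, ... until the set stops shrinking."""
    current = set(event)
    while True:
        smaller = everyone_knows(current, beliefs, worlds)
        if smaller == current:
            return current
        current = smaller


def restrict(beliefs, worlds):
    """Drop all worlds outside `worlds` (a public announcement)."""
    return {i: {w: P[w] & worlds for w in worlds} for i, P in beliefs.items()}


all_worlds = {"MM", "MC", "CM", "CC"}  # first letter: child 1, second: child 2
before = {
    1: {"MM": {"MM", "CM"}, "CM": {"MM", "CM"}, "MC": {"MC", "CC"}, "CC": {"MC", "CC"}},
    2: {"MM": {"MM", "MC"}, "MC": {"MM", "MC"}, "CM": {"CM", "CC"}, "CC": {"CM", "CC"}},
}
someone_muddy = {"MM", "MC", "CM"}
after_worlds = all_worlds - {"CC"}
after = restrict(before, after_worlds)

print("MM" in common_knowledge(someone_muddy, before, all_worlds))   # False
print("MM" in common_knowledge(someone_muddy, after, after_worlds))  # True
```

Before the announcement, the all-clean world is reachable from `"MM"`, so “someone is muddy” is not common knowledge there; once that world is removed, it is.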

Back to the muddy children Let us return to the muddy children puzzle. Does the announcement of the father enable anyone to know whether they are muddy? The answer is no. In fact, by inspecting the knowledge network resulting from removing $\omega_4$ (the world where both children are clean), we still have, at the true state $\omega_1$ and for $i \in \{1, 2\}$,

$$\omega_1 \in \neg K_i M_i.$$

So, everyone will reply “no” to the first question. How do these announcements (of the answers “no”) change the players’ knowledge? The players will now know that states $\omega_2$ and $\omega_3$ (the worlds where exactly one child is muddy) are impossible, since $\omega_2 \in K_1 M_1$ and $\omega_3 \in K_2 M_2$; hence, if either of these states were true, one of the two children would have answered “yes” to the first question.

So, when the father asks the second time, the graph is reduced to only the state $\omega_1$, and so both children know that they are muddy and can answer “yes.” The same analysis can be applied to a larger number of players; by using an inductive argument similar to the one at the start of the chapter, we can prove using this formalism that after $k$ questions all states where $k$ or fewer children are muddy have been removed. And so, in any state where there exist $m$ muddy children, all of them will respond “yes” to the $m$th question.

17.4 Can We Agree to Disagree? [Advanced]

Consider some knowledge structure $M = (n, \Omega, X, s, \vec{P})$, and let $f$ be some probability mass function over the set of states $\Omega$; think of $f$ as modeling players’ “prior” probabilistic beliefs “at birth” before they receive any form of information/signals about the state of the world. This probability mass function is referred to as the common prior over states, as it is common to all players.

In a given world $\omega \in \Omega$, a player $i$ may have received additional information and then potentially “knows” that not every state in $\Omega$ is possible; they now only consider states in $P_i(\omega)$ possible. Consequently, we can now define a player $i$’s (subjective) posterior beliefs in $\omega$ by conditioning $f$ on the set of states $P_i(\omega)$. Concretely, given some event $H$,

- Let $\Pr_i[H] = \nu$ (read “$i$ assigns probability $\nu$ to the event $H$”) denote the set of states $\omega$ such that $\Pr[H \mid P_i(\omega)] = \nu$; that is, the set of states $\omega$ where

$$\frac{\sum_{\omega' \in H \cap P_i(\omega)} f(\omega')}{\sum_{\omega' \in P_i(\omega)} f(\omega')} = \nu$$

holds.

A natural question that arises is whether it can be common knowledge that two players disagree on the probability of some event holding. Aumann’s “agreement theorem” shows that this cannot happen. Formally,

Theorem 17.1. Let $M = (n, \Omega, X, s, \vec{P})$ be a knowledge structure, and let $f$ be a common prior over $\Omega$. Suppose there exists some world $\omega \in \Omega$ such that

$$\omega \in C\big((\Pr_i[H] = \nu_i) \cap (\Pr_j[H] = \nu_j)\big).$$

Then, $\nu_i = \nu_j$.

Before proving this theorem, let us first state a simple lemma about probabilities:

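Lemma 17.1. Let $F_1, \ldots, F_m$ be disjoint events and let $F = F_1 \cup \cdots \cup F_m$ (that is, $F_1, \ldots, F_m$ partition, or “tile,” the set $F$). Then, for any event $H$, if for all $k \in [m]$ we have that $\Pr[H \mid F_k] = \nu$, it follows that $\Pr[H \mid F] = \nu$.

Proof. The lemma follows directly by expanding out the definition of conditional probabilities. In particular, by Claim A.4, we have:

$$\Pr[H \mid F] = \frac{\Pr[H \cap F]}{\Pr[F]} = \frac{\sum_{k=1}^{m} \Pr[H \mid F_k] \Pr[F_k]}{\Pr[F]} = \nu \cdot \frac{\sum_{k=1}^{m} \Pr[F_k]}{\Pr[F]} = \nu,$$

which concludes the proof.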

■

We now turn to the proof of the agreement theorem.

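Proof of Theorem 17.1. Let $E$ denote the event that $i$ assigns probability $\nu_i$ to $H$ and $j$ assigns probability $\nu_j$ to $H$; that is, $E = (\Pr_i[H] = \nu_i) \cap (\Pr_j[H] = \nu_j)$. Consider some state $\omega$ where $E$ is common knowledge; that is,

$$\omega \in C E.$$

As noted above (Proposition 17.1), this means that $E$ holds at every state that is “reachable” from $\omega$ in the network graph; let $\Omega'$ denote the set of states that are reachable from $\omega$.

Let $\omega_1, \ldots, \omega_m$ denote a sequence of states such that the beliefs of $i$ in those states “tile” all of $\Omega'$; that is,

$$\Omega' = P_i(\omega_1) \cup \cdots \cup P_i(\omega_m),$$

and for any two $k \neq l$, we have that $P_i(\omega_k)$ is disjoint from $P_i(\omega_l)$. Since, as noted above, the beliefs of a player partition the states of the world, such a set of states is guaranteed to exist. Since $E$ holds at every state in $\Omega'$, we have that for every $k \in [m]$,

$$\Pr[H \mid P_i(\omega_k)] = \nu_i.$$

By Lemma 17.1 (since the beliefs “tile” $\Omega'$), it follows that $\Pr[H \mid \Omega'] = \nu_i$. But, by the same argument applied to player $j$, it also follows that $\Pr[H \mid \Omega'] = \nu_j$, and thus we have that $\nu_i = \nu_j$.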

■
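To see the agreement theorem at work, one can compute posteriors on a small structure and test whether a disagreement is ever common knowledge. The following is a minimal sketch under assumed data: the four worlds, the uniform prior, the two partitions, and the event `H` are all hypothetical choices made for illustration, as are the helper names.

```python
from fractions import Fraction


def posterior(prior, cell, event):
    """Pr[event | cell] under the common prior."""
    return sum(prior[w] for w in cell & event) / sum(prior[w] for w in cell)


def reachable(start, beliefs):
    """All worlds reachable from `start` in the knowledge network."""
    seen, frontier = {start}, [start]
    while frontier:
        w = frontier.pop()
        for P in beliefs.values():
            for v in P[w] - seen:
                seen.add(v)
                frontier.append(v)
    return seen


def cells_to_beliefs(cells):
    """Turn a partition into the map world -> cell containing that world."""
    return {w: set(cell) for cell in cells for w in cell}


worlds = {1, 2, 3, 4}
prior = {w: Fraction(1, 4) for w in worlds}          # hypothetical uniform prior
beliefs = {
    "i": cells_to_beliefs([{1, 2}, {3, 4}]),
    "j": cells_to_beliefs([{1, 2, 3}, {4}]),
}
H = {1, 4}

post = {p: {w: posterior(prior, P[w], H) for w in worlds}
        for p, P in beliefs.items()}


def disagreement_is_common_knowledge(w):
    """Do i and j disagree at w, with their exact posteriors commonly known?"""
    same_posteriors = {v for v in worlds
                       if post["i"][v] == post["i"][w]
                       and post["j"][v] == post["j"][w]}
    return (post["i"][w] != post["j"][w]
            and reachable(w, beliefs) <= same_posteriors)


print(post["i"][1], post["j"][1])                                    # 1/2 1/3
print(any(disagreement_is_common_knowledge(w) for w in worlds))      # False
```

At world 1 the players do disagree (1/2 versus 1/3), but world 4, where player $j$ assigns a different probability, is reachable, so the disagreement is not common knowledge.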

Let us remark that it suffices to consider a knowledge structure with just two players—thus, when we say that it is common knowledge that the players disagree on the probability they assign to some event, it suffices to say that it is common knowledge only among them. In other words, the players cannot “agree that they disagree” on the probability they assign to the event.

17.5 The “No-Trade” Theorem [Advanced]

So far, when we have been discussing markets and auctions, we have not considered the value the seller has for the item they are selling, and thus whether the seller is willing to go through with the sale. This was actually without loss of generality, since the seller could simply also act as an additional buyer to “buy-back” the item in case nobody is bidding high enough.

However, in our treatment so far we assume that (1) the players always know how much the item is worth to them, and (2) the players may have different private valuations of items. Indeed, the reason the trade takes place is because of the difference in these private valuations. For instance, if the seller of a house in NYC is moving to California, the house is worth less to him than to a buyer who wants to live in NYC.

Let us now consider a different scenario. We have some financial instrument (e.g., a stock) with some true “objective” value (based on the dividends that the stock will generate). Players, however, have uncertainty about what the value of the instrument is. To model this, consider now a random variable $V$ on the probability space $(\Omega, f)$; think of $V$ as the value of the financial instrument. (Note that in every state $\omega$, $V(\omega)$ is some fixed value; players, however, are uncertain about this value since they do not know what the true state of the world is.) Assume that one of the players, say $i$, owns the financial instrument and wants to sell it to player $j$. When can such trades take place? A natural condition for a trade to take place is that there exists some price $p$ such that $j$’s expected valuation for the instrument is at least $p$ and $i$’s expected valuation for the instrument is less than $p$ (otherwise, if $i$ is “risk-neutral,” $i$ would prefer to hold on to it). In other words, we say that the instrument defined by random variable $V$ is $p$-tradable between $i$ and $j$ at $\omega$ if $\mathbb{E}[V \mid P_j(\omega)] \geq p$ and $\mathbb{E}[V \mid P_i(\omega)] < p$. Let $\mathsf{trade}^V_{i,j}(p)$ denote the event that the instrument (modeled by $V$) is $p$-tradable between $i$ and $j$.

Using a similar proof to the agreement theorem, we can now obtain a surprising no-trade theorem, which shows that it cannot be common knowledge that an instrument is tradable! The intuition for why this theorem holds is that if I think the instrument is worth at least $p$, and then find out that you are willing to sell the instrument at $p$ (and thus must think it is worth less than $p$), I should update my beliefs with the new information that you want to sell, which will lower my own valuation.

Theorem 17.2. Let $M = (n, \Omega, X, s, \vec{P})$ be a knowledge structure, let $f$ be a common prior over $\Omega$, and let $V$ be a random variable over $\Omega$. There does not exist some world $\omega \in \Omega$ and price $p$ such that

$$\omega \in C\, \mathsf{trade}^V_{i,j}(p).$$

Proof. Assume for contradiction that there exists some $\omega \in C\, \mathsf{trade}^V_{i,j}(p)$. Again, let $\Omega'$ denote the set of states that are reachable from $\omega$, and let $\omega_1, \ldots, \omega_m$ denote a set of states such that the beliefs of $i$ in those states “tile” all of $\Omega'$. Since $\mathsf{trade}^V_{i,j}(p)$ holds at every state in $\Omega'$, we have that for every $k \in [m]$,

$$\mathbb{E}[V \mid P_i(\omega_k)] < p.$$

By expanding out the definition of conditional expectation (using Claim A.6), we get:

$$\mathbb{E}[V \mid \Omega'] = \sum_{k=1}^{m} \mathbb{E}[V \mid P_i(\omega_k)] \Pr[P_i(\omega_k) \mid \Omega'] < p \sum_{k=1}^{m} \Pr[P_i(\omega_k) \mid \Omega'] = p.$$

But, by applying the same argument to player $j$, we instead get that

$$\mathbb{E}[V \mid \Omega'] \geq p,$$

which is a contradiction.

■
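The no-trade theorem can be illustrated the same way as the agreement theorem. The following is a minimal sketch under assumed data: the worlds, the uniform prior, the instrument values, the two partitions, and the names `seller` and `buyer` (standing for the owner and the prospective purchaser) are hypothetical choices made for illustration.

```python
from fractions import Fraction


def cond_expectation(prior, value, cell):
    """Expected value of the instrument conditioned on the states in `cell`."""
    return sum(prior[w] * value[w] for w in cell) / sum(prior[w] for w in cell)


def reachable(start, beliefs):
    """All worlds reachable from `start` in the knowledge network."""
    seen, frontier = {start}, [start]
    while frontier:
        w = frontier.pop()
        for P in beliefs.values():
            for v in P[w] - seen:
                seen.add(v)
                frontier.append(v)
    return seen


def cells_to_beliefs(cells):
    return {w: set(cell) for cell in cells for w in cell}


worlds = {1, 2, 3, 4}
prior = {w: Fraction(1, 4) for w in worlds}   # hypothetical uniform prior
value = {1: 0, 2: 10, 3: 10, 4: 0}            # hypothetical instrument values
beliefs = {
    "seller": cells_to_beliefs([{1, 2}, {3, 4}]),
    "buyer": cells_to_beliefs([{1, 2, 3}, {4}]),
}


def tradable(p):
    """Worlds where the buyer values the instrument at >= p and the seller at < p."""
    return {w for w in worlds
            if cond_expectation(prior, value, beliefs["buyer"][w]) >= p
            and cond_expectation(prior, value, beliefs["seller"][w]) < p}


print(tradable(6))  # {1, 2, 3}
# Tradability is never common knowledge, at any world and any integer price tried.
print(any(reachable(w, beliefs) <= tradable(p)
          for p in range(11) for w in worlds))  # False
```

At price 6 the instrument is tradable in worlds 1, 2, and 3, yet world 4 (where the buyer values it at 0) is reachable from each of them, so tradability is not common knowledge.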

So, how should we interpret this surprising result? Obviously people are trading financial instruments! We outline a few reasons why such trades may be taking place.

- We are assuming that both players perceive the value of the instrument as the expectation of its actual value (where the expectation is taken according to the players’ beliefs); that is, we assume both players are risk-neutral. But, in reality, different players may have different “risk profiles,” and may thus be willing to trade even if they have exactly the same beliefs. For instance, an institutional investor (who is risk-neutral) would be very happy to buy an instrument that pays a high value with some probability and a low value otherwise, for a price $p$ below its expected value, but a small investor (who is risk-averse) may be very happy to agree to sell such an instrument for $p$ (thus, agreeing to take a “loss” in expected profits for a sure gain).

- Another reason we may see trades taking place is that the common knowledge assumption may be too strong. While it is natural to assume that I must know that you want to trade with me in order for the trade to take place, it is not clear that we actually must have common knowledge of the fact that we both agree to the trade; thus trades can take place before common knowledge of the trade taking place “has occurred.”

- Finally, and perhaps most importantly, the theory assumes that all players are rational. In reality, there are lots of noise-traders whose decision to trade may be “irrational” or “erratic”; noise-traders do not necessarily trade based on their expected valuation of the instrument—in fact, such a valuation may not even be well-defined to them. Indeed, many private persons buying a stock (or index fund) probably have not done enough research to even establish a reasonable belief about the value of the company (or companies in the case of an index fund), but rather buy based on the “speculative” belief that the market will go up.

17.6 Justified True Belief and the Gettier Problems

In our treatment of knowledge and beliefs, we are assuming that the knowledge structure is exogenously given—for instance, in the muddy children example, it was explicitly given as part of the problem description. Coming up with the right knowledge structure for a situation, however, is nontrivial.

In fact, even just defining what it means for a player to know something is a classic problem in philosophy; without a clear understanding of what it means for a player to know some statement $\phi$, we cannot come up with a knowledge structure where $\phi$ holds in all the worlds the player considers possible. The classic way of defining knowledge is through the so-called justified true belief (JTB) paradigm: according to it, you know $\phi$ if (a) you believe $\phi$, (b) you have a “reason” to believe it (i.e., the belief is justified), and (c) $\phi$ is actually true.

However, there are several issues with this approach, as demonstrated by the now-famous “Gettier problems”: Let us say that you walk into a room with a thermometer. You observe that the thermometer says 70 degrees, and consequently you believe that the temperature is 70 degrees; furthermore, the temperature in the room is 70 degrees. According to the JTB paradigm, you would then know that the temperature is 70 degrees, since (a) you believe it, (b) you have a reason for doing so (you read it off the thermometer), and (c) the temperature indeed is 70 degrees. Indeed, if the thermometer is working, then this seems reasonable.

But what if, instead, it just so happened that the thermometer was broken but stuck on 70 degrees (by a fluke)? In this case, would you still “know” that it is 70 degrees in the room? Most people would argue that you do not, but according to the JTB paradigm, you do “know” it. As this discussion shows, even just defining what it means to “know” something is nontrivial.

Notes

The muddy children puzzle is from [Lit53]. As mentioned above, our approach to modeling beliefs and knowledge follows that of the philosopher Saul Kripke [Kri59]. A similar approach was introduced independently, but subsequently, by the game-theorist Robert Aumann [Aum76]. (Aumann received the Nobel Prize in Economic Sciences in 2005.)

Our treatment most closely follows the works of Kripke [Kri59], Hintikka [Hin62], and Halpern and Moses [HM90]. The analysis of the muddy children puzzle is from [HM90]. An extensive treatment of knowledge and common knowledge can be found in [FHMV95].

Aumann’s agreement theorem was proven in [Aum76] and the “no-trade theorem” was proven by Milgrom and Stokey [MS82] (although our formalization of this theorem is somewhat different).

The JTB approach is commonly attributed to the works of Plato (from approximately 2,400 years ago), but it has also been argued that Plato himself already pointed out issues with this approach; the “Gettier problems” were introduced by the philosopher Gettier [Get63].

1Later on, we will be able to extend the model to also incorporate probabilistic beliefs over worlds.