Motion-driven Concatenative Synthesis of Cloth Sounds

SIGGRAPH 2012

Abstract

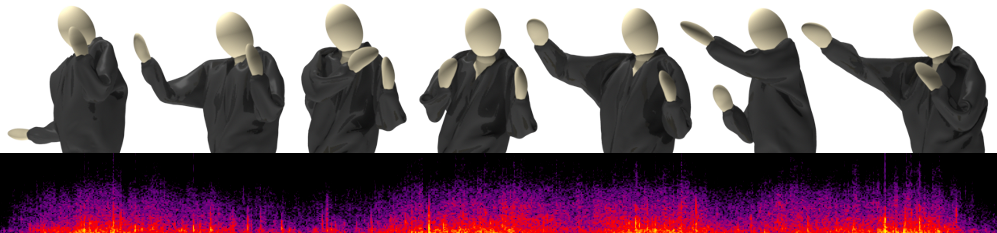

We present a practical data-driven method for automatically synthesizing plausible soundtracks for physics-based cloth animations

running at graphics rates. Given a cloth animation, we analyze the

deformations and use motion events to drive crumpling and friction

sound models estimated from cloth measurements. We synthesize

a low-quality sound signal, which is then used as a target signal for

a concatenative sound synthesis (CSS) process. CSS selects a sequence of microsound units, very short segments, from a database

of recorded cloth sounds, which best match the synthesized target

sound in a low-dimensional feature-space after applying a hand-tuned warping function. The selected microsound units are con-

catenated together to produce the final cloth sound with minimal

filtering. Our approach avoids expensive physics-based synthesis

of cloth sound, instead relying on cloth recordings and our motion-driven CSS approach for realism. We demonstrate its effectiveness

on a variety of cloth animations involving various materials and

character motions, including first-person virtual clothing with binaural sound.

Links

- Paper (18M)

- Supplemental Video (192MB)

- Supplemental Data (195MB) - Contains crumpling samples, frictional sound models, database recordings, and target signal videos for all materials in the paper examples. Also includes a video of a typical manual correspondence session.

Citation

Steven S. An, Doug L. James, and Steve Marschner, Motion-driven Concatenative Synthesis of Cloth Sounds,

ACM Transaction on Graphics (SIGGRAPH 2012), August, 2012.

Video

Acknowledgements

We would like to thank anonymous reviewers for helpful feedback,

Sean Chen for the measurement device circuitry, the Clark Hall Machine Shop, Carol Krumhansl for the use of her sound isolation

room, Taylan Cihan for early recording assistance, Tianyu Wang

for signal processing discussions, and Noah Snavely for thoughts

on shape matching. The data used in this project was obtained from

mocap.cs.cmu.edu. The database was created with funding from

NSF EIA-0196217. DLJ acknowledges early discussions with Dinesh Pai and Chris Twigg on cloth sounds. We acknowledge funding and support from the National Science Foundation (CAREER-0430528, HCC-0905506, IIS-0905506), fellowships from the Alfred P. Sloan Foundation and the John Simon Guggenheim Memorial Foundation, and donations from Pixar and Autodesk. This research was conducted in conjunction with the Intel Science and

Technology Center-Visual Computing.

Any opinions, findings,

and conclusions or recommendations expressed in this material are

those of the authors and do not necessarily reflect the views of the

National Science Foundation or others.