Publications

Counterfactual Risk Minimization: Learning from Logged Bandit Feedback. [abstract, WWW Companion 2015] [paper, ICML2015] [extended, JMLR2015].

Adith Swaminathan and Thorsten Joachims.

The logs of an interactive learning system contain valuable information about the system's performance and user experience.

However they are biased (actions preferred by the system are over-represented) and incomplete (user feedback for actions not taken by the system is unavailable).

So they cannot be used to train and evaluate better systems through supervised learning.

We developed a new learning principle and an efficient algorithm that re-uses such logs to solve structured prediction problems.

The key insight was to reason about the variance introduced when fixing the biased logs, and to use an efficient conservative approximation of this variance

so that we can solve the problem at scale (software available online).

The Self-Normalized Estimator for Counterfactual Learning. NIPS 2015.

Adith Swaminathan and Thorsten Joachims.

We identified an interesting, new kind of overfitting in the batch learning from logged bandit feedback scenario which we called propensity overfitting.

The conventional counterfactual risk estimator ("Inverse Propensity Scoring" --- IPS) is very vulnerable to this overfitting when optimized over rich hypothesis spaces.

We identified one property, equivariance, that guards against this problem and motivated the use of the self-normalized risk estimator for this problem.

Off-Policy Evaluation for Slate Recommendation. ICML 2016 Personalization Workshop.

Adith Swaminathan, Akshay Krishnamurthy, Alekh Agarwal, Miroslav Dudík, John Langford, Damien Jose, Imed Zitouni.

Counterfactual techniques based on the standard "Inverse Propensity Scoring" (IPS) trick fail catastrophically when the number of actions that a system can take is

large --- e.g., when recommending slates in an information retrieval application with combinatorially many rankings of items.

We developed an estimator for evaluating slate recommenders that exploits combinatorial structure when constructing a slate and dominates the performance of IPS-based methods.

Recommendations as Treatments: Debiasing Learning and Evaluation. ICML 2016.

Tobias Schnabel, Adith Swaminathan, Ashudeep Singh, Navin Chandak, Thorsten Joachims

The Netflix Prize was a challenge to predict how a population of users would rate an inventory of items,

using user-volunteered item-ratings. However, there are severe biases --- “Missing Not At Random” (MNAR) --- in this volunteered data.

We developed the first principled discriminative approach to this MNAR problem, showed that it works reliably on two user-volunteered ratings datasets,

and provided an implementation online.

Unbiased Ranking Evaluation on a Budget. WWW Companion 2015.

Tobias Schnabel, Adith Swaminathan and Thorsten Joachims.

Evaluating structured prediction systems is hard and costly. We re-wrote several popular evaluation metrics for these systems as Monte Carlo estimates

and used ideas from importance sampling to derive a simple, efficient, and unbiased strategy that also generates re-usable judgements.

Unbiased Comparative Evaluation of Ranking Functions. ICTIR 2016.

(Best Presentation Award)

Tobias Schnabel, Adith Swaminathan, Peter Frazier and Thorsten Joachims.

The Monte Carlo estimation view of ranking evaluation we developed above unified several prior approaches to building re-usable test collections.

We developed new estimators and principled document sampling strategies that substantially improve statistical efficiency in several comparative evaluation scenarios

(e.g. when compiling a leaderboard of competing rankers).

Unbiased Learning-to-Rank with Biased Feedback. WSDM 2017.

(Best Paper Award)

Thorsten Joachims, Adith Swaminathan, Tobias Schnabel.

Can we avoid relying on randomization when collecting user feedback for training learning to rank (L2R) algorithms?

We can, by piggybacking on the randomness in user actions!

User feedback is typically biased, so we re-purposed user click models as propensity estimators to obtain "de-biased" user feedback.

These propensity-weighted click-logs were then used to train standard L2R algorithms, and showed very strong results on the arXiv search engine.

Temporal corpus summarization using submodular word coverage. CIKM 2012.

Ruben Sipos, Adith Swaminathan, Pannaga Shivaswamy and Thorsten Joachims.

Evolving corpora can be summarized through timelines indicating the influential elements.

We created interesting timelines of articles in the ACL anthology and NIPS conference proceedings

over a span of 15 years using landmark documents, influential authors and topic key-phrases.

We achieved this by formulating a sub-modular objective extending the coverage problem over words

(traditional summarization) to incorporate coverage across time.

Beyond myopic inference in Big Data pipelines. KDD 2013.

Karthik Raman, Adith Swaminathan, Johannes Gehrke and Thorsten Joachims.

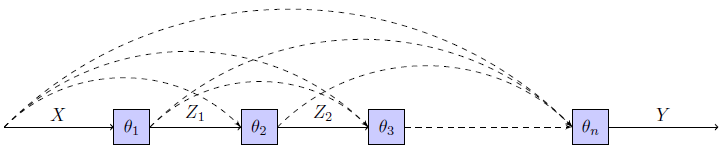

Pipelines constructed using modular components have very brittle performance:

errors in earlier components cascade through the pipeline causing

catastrophic failures in the eventual output.

We explored simple ideas from Probabilistic Graphical Models to make

the inference procedure more robust, while still using

off-the-shelf components to compose pipelines.

Mining videos from the web for electronic textbooks. ICFCA 2014.

Rakesh Agrawal, Maria Christoforaki, Sreenivas Gollapudi, Anitha Kannan, Krishnaram Kenthapadi and Adith Swaminathan.

There is a wealth of educational content available online, but the primary teaching aid

for the vast majority of students (e.g. in India and China) continues to be the textbook.

Recognizing how textbook sections are organized and mining them for concepts using Formal Concept Analysis,

we automatically retrieved appropriate videos from Youtube Edu.

Using transcripts made available by educators to remain accessible to deaf students,

we side-stepped the challenge of automatic audio transcription or content-based video retrieval.