Chapter 4

Coordination in Social Networks

Recall the iPhone/Android game we considered in the introduction. We now have the necessary tools for analyzing it. We begin by considering a simple form of this game on a social network described by an undirected graph.

4.1 Plain Networked Coordination Games

Given some undirected graph , consider a game being played by the nodes of the graph (i.e., the nodes represent the players), defined as follows. Each pair of adjacent nodes participate in a two-player “coordination game” (to be defined shortly) and the utility of a node is defined as the sum of the utilities of all games it participated in. Additionally, we require each node to only select a single action (to be used in all the games it participates in).

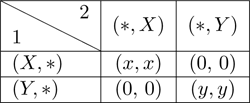

A bit more precisely, each node in the graph selects a single action (a “product”)—either or —and participates in the following coordination game (where both players get the same utility in every outcome) with each of its neighbors (assume ):

- If they “match,” they get the same positive utility or ;

- If they “mismatch,” they get utility .

Note that the utility, , of matching on may be different from the utility, , of matching on . Without loss of generality, we assume ; that is, product is at least as good as product . The utility of a node is then defined to be the sum of its utilities in all the coordination games it participates in. Formally,

Definition 4.1. Given an undirected graph , we say that the game is a plain networked coordination game induced by and the coordination utility if:

- , ,

- , where is the set of neighbors of the node in .

In the sequel, to simplify notation, we will have some particular plain networked coordination game in mind, and we will let denote the utility of coordinating at (i.e., ) and the utility of coordinating at (i.e., ).

A simple decision rule: The Adoption threshold Consider a node that has neighbors (friends), a fraction of whom choose and the remaining fraction of whom choose . Then ’s utility for choosing is and its utility for choosing is . What action should take to maximize its utility (i.e., to best respond to the actions of the other players)?

- Choose if —that is, .

- Choose if —that is, .

- Choose either or if .

So, no matter what the number of ’s neighbors is, the decision ultimately only depends on the fraction of its neighbors choosing or (and the game utilities). We refer to the ratio as the (global) adoption threshold for product .

4.2 Convergence of BRD

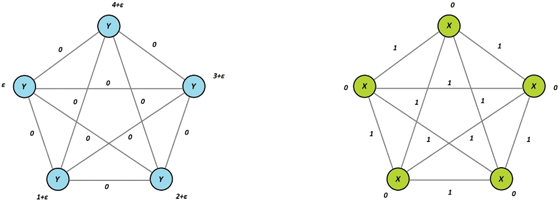

We now turn to the question of analyzing what equilibria look like in these games. Clearly, everyone choosing the same product is a PNE (everyone choosing is the “better” equilibrium, but everyone choosing is still a PNE). But there are other equilibria as well, such as the ones illustrated in Figure 4.1.

Figure 4.1: Illustrations of equilibria in a plain networked coordination game. The left example is an equilibrium when the adoption threshold is or more; the right example shows how to sustain a “nontrivial” equilibrium even when the adoption threshold is smaller, namely, (or more).

Intuitively, the reason why these “nontrivial” equilibria arise is that there is some network structure that “blocks” the better action from spreading to more nodes in the network. We will return to an analysis of this phenomenon in chapter 5; for now, we will consider the question of whether we can converge to an equilibrium using best-response dynamics.

As we observed in the previous chapter, BRD in a game converges if and only if has an ordinal potential function. Here, given a plain coordination game , we will consider the social welfare function given by

Whenever the game is clear from context, we omit it as a superscript for and . Notice that by expanding out the definition of , we get

(4.1)

Let us now prove that is an ordinal potential function of the game.

Claim 4.1. is an ordinal potential function for any plain networked coordination game .

Proof. Consider an action profile and some player who can improve their utility by deviating to ; that is,

(4.2)

We need to show that , or equivalently that . Notice that when considering , the only games that are affected are those between and the neighbors of , . So, by Equation 4.1, the difference in the potential is

which by Equation 4.2 is strictly positive.

■

Thus, by Theorem 3.1 (which proved that BRD converges if the game has an ordinal potential function), we directly get the following theorem.

Theorem 4.1. BRD converges in every plain networked coordination game.

Socially optimal outcomes We say that an outcome is socially optimal if it is an outcome that maximizes social welfare; that is, the social welfare in the outcome is at least as high as the social welfare in any other outcome . In other words, is socially optimal in if

Note that there may not necessarily exist a unique outcome that maximizes social welfare, so several outcomes may be socially optimal.

Let us also remark that if social welfare is an ordinal potential function (as we showed was the case for plain networked coordination games), then whatever outcome we start off with, if people deviate to make themselves better off, they also make the outcome better for “the world in aggregate” (although some players can get worse off). In particular, this implies that if we start out with a socially optimal outcome, BRD will stay there. Note that this is not always the case: for instance in the Prisoner’s Dilemma, the socially optimal outcome is for both players to cooperate, but BRD moves away from this outcomes (as it is not a PNE).

The fact that this happens here, however, should not be too surprising: if , there is a unique outcome that maximizes SW (namely, everyone choosing ) and this outcome is a PNE, thus BRD will stay there; if , there are two outcomes (everyone choosing or everyone choosing ), which maximize SW, both of which are PNE.

4.3 Incorporating Intrinsic Values

So far we have assumed that players’ utilities depend only on their coordination utilities; in particular, this implies that everyone has the same “intrinsic value” for each product. In reality, people may have different intrinsic value for different products (e.g., in the absence of network effects, some people naturally prefer iPhones and others Androids).

To model this, we can consider a more general class of games where utility is defined as follows:

(4.3)

Here, denotes the intrinsic value of to player . Formally, the notion of a networked coordination game is defined just as in Definition 4.1, except that we now also parametrize the game by an intrinsic value function for each node and use Equation 4.3 to define utility.

Notice that nodes can no longer employ the same simple decision rule of checking whether the fraction of its neighbors playing exceeds some fixed global threshold —rather, each node has its own subjective adoption threshold that depends on the number of ’s neighbors and its intrinsic value function (Exercise: Derive what the subjective adoption threshold is). Also, notice that it is now no longer clear what equilibria look like, or even that they exist, since there might exist a conflict between the intrinsic value of an action to a player and the desire to coordinate with their friends!

Example 4.1. Consider the simple star graph in Figure 4.2 with nodes, consisting of a “central” node connected to neighbors, and consider the networked coordination game induced by this graph and the following coordination and intrinsic utilities (where is some arbitrarily small constant):

- Let .

- For all other nodes , and .

- Let (i.e., in the game as described above).

Figure 4.2: The star graph described in the example, with the equilibrium outcome highlighted ( in blue, in green).

So every node’s coordination game indicates no network preference between and . But intrinsically “strongly” prefers , while all other nodes intrinsically “weakly” prefer .

What happens if everyone chooses in this game?

- has maximized their intrinsic utility () and maximized coordination utility (1 on each of edges, for a total of ), so there is no incentive to switch.

- Other nodes currently have no intrinsic utility for and get 1 in coordination utility, so it benefits them to switch to instead, receiving in intrinsic utility and losing 1 in coordination utility.

- This outcome has social welfare , but it is not an equilibrium (since nodes gain by switching actions).

What happens if everyone chooses ?

- Neighbors of have maximized their intrinsic utility () and maximized coordination utility (1), so there is no incentive for them to switch.

- currently has no intrinsic utility and in coordination utility (1 on each edge), so it benefits to switch to instead, receiving in intrinsic utility and losing in coordination utility.

- This outcome has social welfare , but it also is not an equilibrium.

In fact, if we apply the BRD process we will always up end in a outcome where chooses and the other nodes choose , no matter where we start. This follows from the fact that is strictly dominant for and is strictly dominant for all the other nodes; thus playing and everyone else playing is the only PNE (and by Claim 1.5, BRD quickly converges to it).

But in this outcome, there is no “coordination” utility; receives in intrinsic utility and the other nodes receive each, for a total of only in social welfare. There is actually quite a significant gap—a factor of close to —between the equilibrium and the “coordination” outcomes where everyone plays either or . In fact, it is not hard to see that the outcome where everyone plays is the only socially optimal outcome in this game; we leave it as an exercise to prove this. We refer to the existence of such a gap as an inefficiency of equilibria (i.e., that selfish behavior leads to “inefficient” outcomes).

Furthermore, notice that this gap is essentially independent of the number of nodes, , as long as . Thus, an even simpler example illustrating this gap is obtained by simply considering the case when —that is, we are simply playing a coordination game between two players. (We consider the slightly more complicated star example as it illustrates the issue even in a connected graph with a large number of nodes.)

Can we still prove that equilibria always exist networked coordination games (with intrinsic values) by showing that best-response dynamics converge? Social welfare is no longer an ordinal potential function; as a counterexample, consider the outcome in the graph above where all nodes choose . If switches to , improving their own utility, the social welfare actually decreases by (from to ), even though actually only increases their utility by (which can be arbitrarily small)!

However, we can choose a better potential function that is ordinal; namely:

That is, we sum coordination utilities over every edge once (rather than twice as in the definition of social welfare; see Equation 4.1), and add the intrinsic values for each node.

Theorem 4.2. BRD converges in every networked coordination game.

Proof. Let be above-described potential function; by Theorem 3.1, it suffices to show that it is ordinal. Consider an action profile and some player who can improve their utility by deviating to ; that is,

We will show that . Once again, in considering , note that only coordination games between and and the intrinsic value for are affected by changing to . So,

which concludes the proof.

■

4.4 The Price of Stability

As we saw in the earlier example, BRD might decrease social welfare—in particular, even a single player best responding once can significantly bring down the social welfare. A natural question thus arising is: How bad can the “gap” between the social welfare in the “best” equilibrium (i.e., the PNE with the largest social welfare) and in the socially optimal outcome be?

More formally, we denote by

the maximum social welfare (MSW) obtainable in the game . In games with non-negative utilities (such as networked coordination games), we refer to the ratio between the MSW and the SW of the best PNE as the price of stability in the game.

Definition 4.2. The price of stability in a game with non-negative utilities is defined as

where denotes the set of PNE in .

Roughly speaking, the price of stability measures by how much selfish behavior necessarily degrades the “efficiency” of outcomes. (There is also a related notion of price of anarchy, which is defined as the ratio between MSW and the worst PNE—this notion instead measures by how much selfish behavior can, in the most pessimistic case, degrade performance. In the sequel, however, we will focus only on studying the price of stability.)

As we now show, selfish behavior cannot degrade performance by more than a factor 2.

Theorem 4.3. For every networked coordination game , there exists a PNE in such that

(In other words, the price of stability in is at most 2.)

Proof. Observe that for the potential function defined above, for every ,

Now, pick any outcome that maximizes (and thus achieves a SW of MSW). Then run BRD starting from until it converges to some outcome profile —as shown in Theorem 4.2 it will always converge, and this final outcome is a PNE. While SW may decrease at each step, as shown in the proof of Theorem 4.2 can only increase. Hence, we have

as desired.

■

Recall that our “star graph” exhibited an example of a game with a gap of close to between the best equilibrium and the socially optimal outcome, whereas Theorem 4.3 shows that the gap can never be more than 2. It turns out that the gap of is actually tight, but showing this requires a slightly more elaborate analysis appealing to iterated strict dominance.

Theorem 4.4. For every , there exists some -player networked coordination game such that for every PNE in we have that

(In other words, the price of stability can be arbitrarily close to 2.)

Proof. Let be a complete graph on nodes. Let and for every player , let . (See Figure 4.3.) Let ; note that , and so . Let us next analyze PNE in this game. We will show that is the only strategy profile that survives iterated strict dominance (ISD); by Claim 1.3 (showing that if a unique strategy survives ISD, it must be the unique NE of the game), it thus follows that is also the only PNE of the game.

Figure 4.3: The construction presented in Theorem 4.4 for , detailing the intrinsic utilities (next to the nodes) and coordination utilities (on the edges) achieved in (left) the equilibrium and (right) the outcome which maximizes social welfare.

Showing that only survives ISD We will show by induction that after the round of ISD, players must have removed action . The base case () is trivial. For the induction step, assume the claim is true for round and let us show it for round . In the round, by the induction hypothesis, players can only be playing . Therefore, player can get utility at most by playing (since the intrinsic value of is 0, and they can can coordinate with at most players); on the other hand, by playing , player is guaranteed to get (in intrinsic value); thus, is strictly dominated and must be removed, which proves the induction step.

Concluding the price of stability bound Note that

Thus,

By choosing an appropriately small , this value can be made arbitrarily close to 1/2.

■

4.5 Incorporating Strength of Friendship

We finally consider an even more general networked coordination model where we place a “weight” on each (undirected) edge —think of this weight as the “strength of the friendship” (measured, for example, by how many minutes we spend on the phone with each other, or how many messages we send to each other)—and now also weigh the coordination utility of the game between and by . That is,

We note that the potential function, as well as social welfare, arguments made above (i.e., Theorem 4.2 and Theorem 4.3) directly extend to this more general model, by defining

Note, however, that a player’s decision whether to play is no longer just a function of the fraction of their neighbors playing ; rather, we now need to consider a weighted subjective threshold where node switches to whenever the fraction of their neighbors weighted by exceeds .

Notes

The notion of networked coordination games (the way we consider them) was introduced by Young [You02]. Young also showed the existence of PNE in such games.

The gap between the maximum social welfare and the social welfare in equilibria (i.e., the “inefficiency” of equilibria) was first studied by Koutsoupias and Papadimitriou [KP09], but the notion considered there—“price of anarchy”—considered the gap between MSW and the worst PNE. In contrast, the notion of price of stability (which we considered here) considers the gap between MSW and the best PNE. This notion was first studied in [SM03], and the term “price of stability” was first coined in [ADK08]; [ADK08] was also the first paper to study price of stability using potential functions. The results on price of stability in coordination games that we have presented here are from Morgan and Pass [MP16].

It turns out that the star graph example we presented (and its gap of ) is also tight in some respect: Note that in that example, the coordination game is “coordination symmetric” in the sense that ; for such games, Theorem 4.3 can be strengthened to show that the price of stability never exceeds ; see [MP16] for more details.