|

I am a PhD candidate in the Department of Computer Science at Cornell University, advised by Professor Bharath Hariharan. Prior to Cornell, I received my bachelor's degree in Computer Science and Mathematics from the University of Michigan, Ann Arbor.

Email / CV / Google Scholar / GitHub / LinkedIn / Research Statement |

|

Research

My research lies at the intersection of computer vision and machine learning. Specifically, I work on building perception systems that are broadly useful across problem domains (e.g., remote sensing, medical imagery, self-driving vehicles). Toward this goal, I have identified three major problems: label efficiency (STARTUP, GRAFT), deployment to novel domains (Rote-DA), and trustworthiness. Most of my past work has focused on the first two problems. I am currently investigating how to use multimodal sensory input to improve the label efficiency of perception models and how to build trustworthy specialist perception models from large-scale frontier models. (See my research statement for more!)

Below is a list of my papers. (*) indicates equal contribution. Representative papers are highlighted. |

|

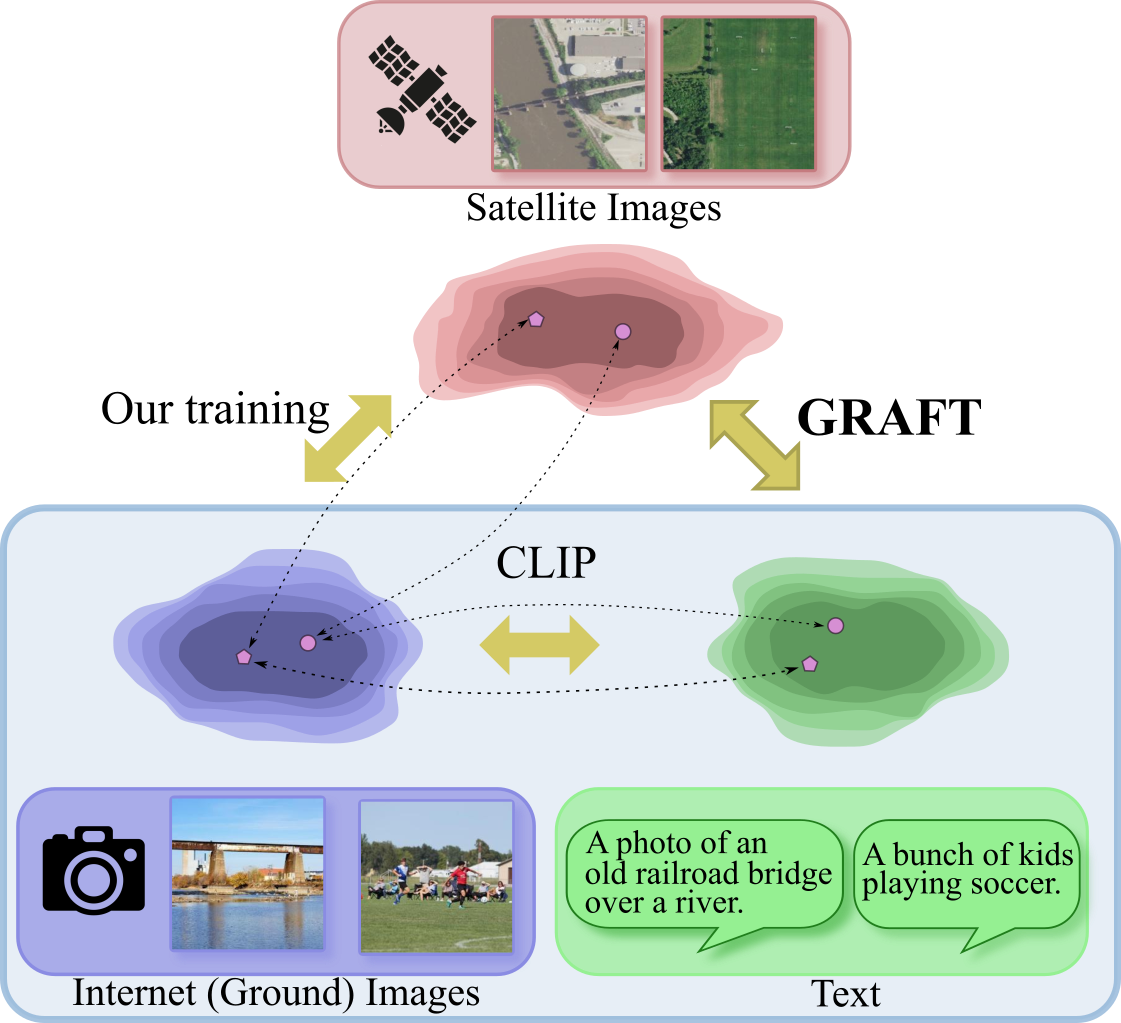

Remote Sensing Vision-Language Foundation Models without Annotations via Ground Remote Alignment

Utkarsh Mall*, Cheng Perng Phoo*, Meilin Kelsey Liu, Carl Vondrick, Bharath Hariharan, Kavita Bala

International Conference on Learning Representations (ICLR), 2024.

TLDR: We use ground images as an intermediary to connect satellite imagery to natural language (encoded using CLIP), yielding VLMs without textual annotations. |

|

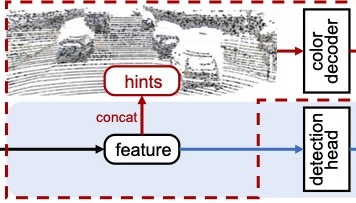

Pre-training LiDAR-based 3D Object Detectors through Colorization

Tai-Yu Pan, Chenyang Ma, Tianle Chen, Cheng Perng Phoo, Katie Z Luo, Yurong You, Mark Campbell, Kilian Q Weinberger, Bharath Hariharan, Wei-Lun Chao

International Conference on Learning Representations (ICLR), 2024.

TLDR: We pre-train a point cloud detector by tasking it with filling in the missing colors within the point cloud. |

|

Reward Finetuning for Faster and More Accurate Unsupervised Object Discovery

Katie Z Luo*, Zhenzhen Liu*, Xiangyu Chen*, Yurong You, Sagie Benaim, Cheng Perng Phoo, Mark Campbell, Wen Sun, Bharath Hariharan, Kilian Q. Weinberger

Conference on Neural Information Processing Systems (NeurIPS), 2023.

TLDR: We reframe object discovery as an RL problem and design a reward function to enable faster and more accurate discovery of objects in driving scenes without human supervision. |

|

Emergent Correspondence from Image Diffusion

Luming Tang*, Menglin Jia*, Qianqian Wang*, Cheng Perng Phoo, Bharath Hariharan

Conference on Neural Information Processing Systems (NeurIPS), 2023.

TLDR: Features from off-the-shelf image diffusion models can be used to identify semantic and geometric correspondence without further training. |

|

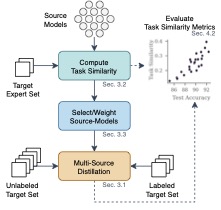

Distilling from Similar Tasks for Transfer Learning on a Budget

Kenneth Borup, Cheng Perng Phoo, Bharath Hariharan

International Conference on Computer Vision (ICCV), 2023.

TLDR: We construct label- and compute-efficient models by identifying and distilling from suitable pre-trained models. |

|

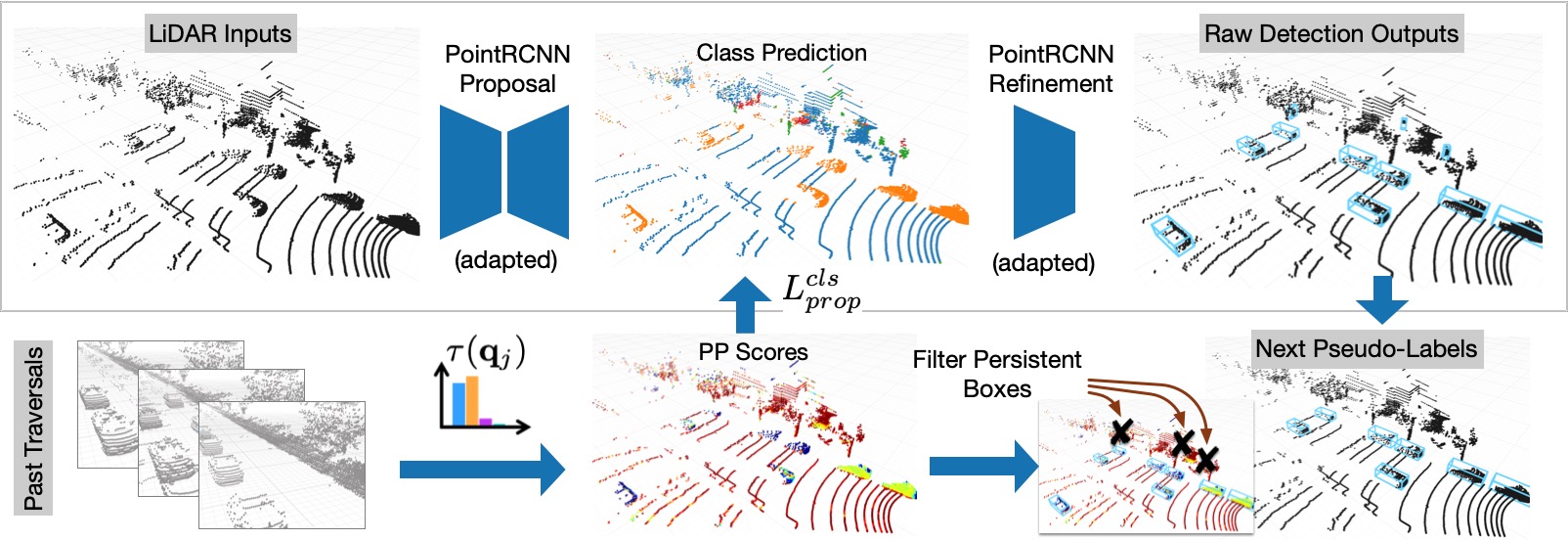

Unsupervised Adaptation from Repeated Traversals for Autonomous Driving

Yurong You*, Cheng Perng Phoo*, Katie Z Luo*, Travis Zhang, Wei-Lun Chao, Bharath Hariharan, Mark Campbell, Kilian Q. Weinberger

Conference on Neural Information Processing Systems (NeurIPS), 2022.

Code

TLDR: Unlabeled LiDAR scans from repeated traversals can be used to disambiguate foreground and background objects, yielding cleaner signals for self-training adaptation. |

|

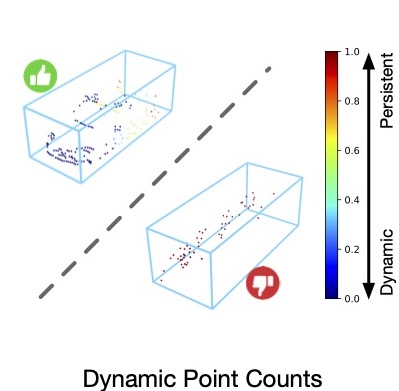

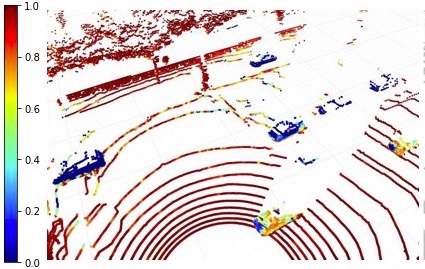

Learning to Detect Mobile Objects from LiDAR Scans Without Labels

Yurong You*, Katie Z Luo*, Cheng Perng Phoo, Wei-Lun Chao, Wen Sun, Bharath Hariharan, Mark Campbell, Kilian Q. Weinberger

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022.

Code

TLDR: Comparing unlabeled LiDAR scans from multiple traversals of the same location can uncover dynamic LiDAR points, which can then be used to train a mobile object detector in an unsupervised/self-supervised manner. |

|

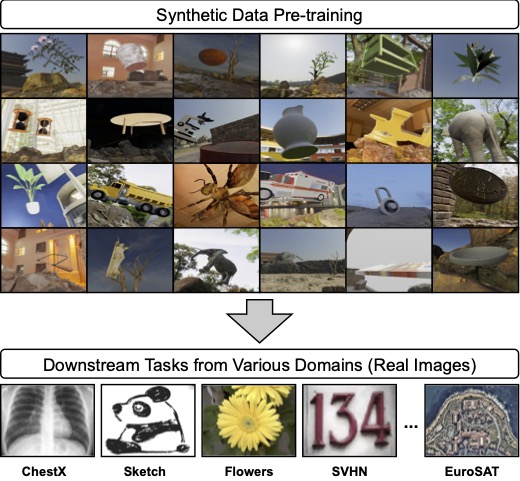

Task2Sim: Towards Effective Pre-training and Transfer from Synthetic Data

Samarth Mishra, Rameswar Panda, Cheng Perng Phoo, Chun-Fu (Richard) Chen, Leonid Karlinsky, Kate Saenko, Venkatesh Saligrama, Rogerio S. Feris

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022.

Code

TLDR: Different downstream tasks require different representations pre-trained on synthetic data generated using different configurations (lighting, object poses, etc.). We use reinforcement learning to learn a policy that maps a compact task representation to the appropriate synthetic data configuration. |

|

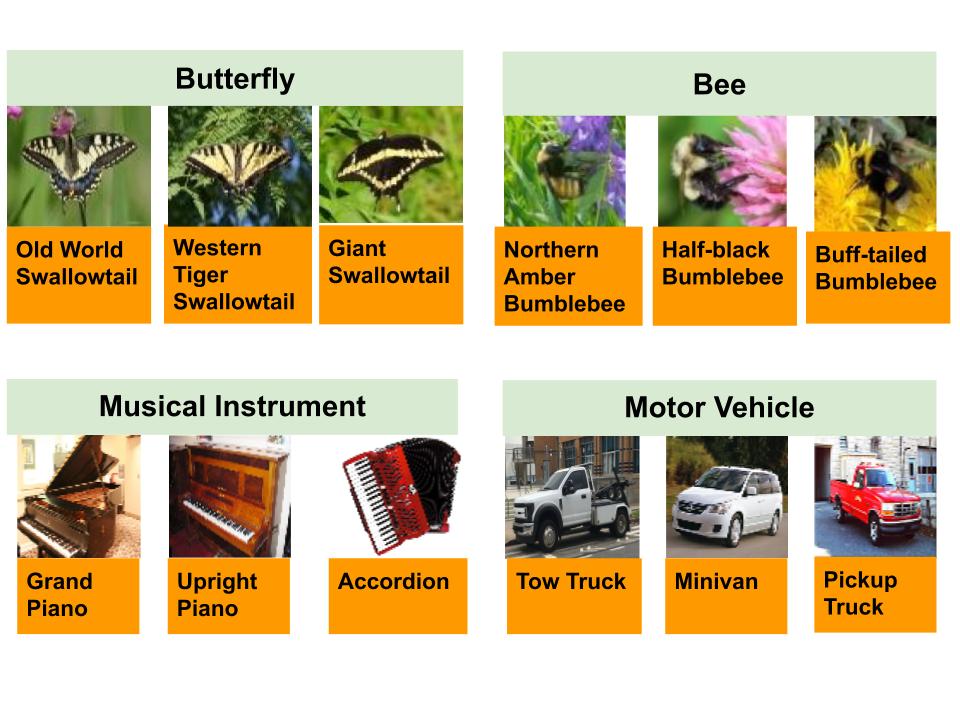

Coarsely-labeled Data for Better Few-shot Transfer

Cheng Perng Phoo, Bharath Hariharan

International Conference on Computer Vision (ICCV), 2021.

Code

TLDR: Coarsely-labeled data can be cheap to acquire and can be used to learn a better representation for few-shot learning. |

|

Self-training for Few-shot Transfer Across Extreme Task Differences

Cheng Perng Phoo, Bharath Hariharan

International Conference on Learning Representations (ICLR), 2021. Oral (53/2997 submissions).

Code

TLDR: We can build strong neural representations for novel domains by (self-)training students to replicate pseudo-labels produced by a teacher from another, unrelated problem domain. |

|

Predicting Risk of Sport-Related Concussion in Collegiate Athletes and Military Cadets: A Machine Learning Approach Using Baseline Data from the CARE Consortium Study

Joel Castellanos, Cheng Perng Phoo, James T. Eckner, Lea Franco, Steven P. Broglio, Mike McCrea, Thomas McAllister, Jenna Wiens

Sports Medicine Journal, 2020.

TLDR: Baseline tests conducted on college athletes and military cadets before each semester may contain information for identifying those who are at a higher risk of experiencing a concussion. |

|

Heart Sound Classification based on Temporal Alignment Techniques

Jose Javier Gonzalez, Cheng Perng Phoo, Jenna Wiens

Computing in Cardiology (CinC), 2016.

Code

TLDR: We use temporal alignment techniques such as dynamic time warping to extract features from heart sound recordings for identifying patients at risk of adverse cardiovascular outcomes.

Awards/Services/Experiences

|

|

Many thanks to Jon Barron for the awesome template! Some of the icons used in this website are from flaticon. |