Summary

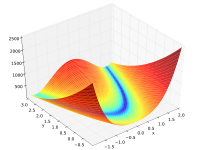

Surrogate methods (also called response surface methods) fit a model function (the surrogate) to sampled values of the objective function. The surrogate is then used to guide where the algorithm should sample next. Our Python Surrogate Optimization Toolbox (PySOT) implements several parallel surrogate optimization methods on a variety of computing platforms, using our package of Python Optimization Asynchronous Plumbing (POAP) to handle the details of asking workers for function evaluations. Our methods support asynchronous parallel execution, which is of particular interest when potentially incomplete function information (e.g., upper or lower bounds) can be obtained at much less expense than a full function evaluation.
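The fit-then-sample loop described above can be sketched in a few lines of NumPy. This is a minimal serial illustration, not PySOT's or POAP's API: the function names `fit_cubic_rbf` and `surrogate_minimize`, the cubic radial basis function with a linear tail, the candidate-perturbation scheme, and the 0.8/0.2 weighting are all assumptions chosen for the example.

```python
import numpy as np

def fit_cubic_rbf(X, y):
    """Fit a cubic RBF interpolant with a linear tail to data (X, y)."""
    n, d = X.shape
    r = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    P = np.hstack([np.ones((n, 1)), X])            # linear polynomial tail
    A = np.block([[r ** 3, P], [P.T, np.zeros((d + 1, d + 1))]])
    rhs = np.concatenate([y, np.zeros(d + 1)])
    # Least squares keeps the sketch robust if A is (nearly) singular.
    coef = np.linalg.lstsq(A, rhs, rcond=None)[0]
    lam, c = coef[:n], coef[n:]

    def surrogate(Z):
        Z = np.atleast_2d(Z)
        rz = np.linalg.norm(Z[:, None, :] - X[None, :, :], axis=2)
        return rz ** 3 @ lam + c[0] + Z @ c[1:]

    return surrogate

def _unit(v):
    """Rescale a vector to [0, 1]; a constant vector maps to zeros."""
    span = v.max() - v.min()
    return (v - v.min()) / span if span > 0 else np.zeros_like(v)

def surrogate_minimize(f, lower, upper, n_init=8, n_iter=30, n_cand=200, seed=0):
    """Hypothetical serial surrogate loop: fit, pick a candidate, evaluate."""
    rng = np.random.default_rng(seed)
    lower, upper = np.asarray(lower, float), np.asarray(upper, float)
    d = lower.size
    # Initial design: uniform random samples inside the box.
    X = lower + (upper - lower) * rng.random((n_init, d))
    y = np.array([f(x) for x in X])
    for _ in range(n_iter):
        s = fit_cubic_rbf(X, y)
        # Candidates: Gaussian perturbations of the best point so far.
        best = X[np.argmin(y)]
        cand = best + 0.1 * (upper - lower) * rng.standard_normal((n_cand, d))
        cand = np.clip(cand, lower, upper)
        dist = np.linalg.norm(cand[:, None, :] - X[None, :, :], axis=2).min(axis=1)
        # Prefer low predicted value, with a bonus for being far from old samples.
        score = 0.8 * _unit(s(cand)) + 0.2 * (1.0 - _unit(dist))
        score[dist < 1e-8] = np.inf                # skip re-sampling old points
        x_new = cand[np.argmin(score)]
        X = np.vstack([X, x_new])
        y = np.append(y, f(x_new))                 # the expensive evaluation
    i = np.argmin(y)
    return X[i], y[i]

if __name__ == "__main__":
    x_best, y_best = surrogate_minimize(
        lambda x: float(np.sum((x - 0.3) ** 2)), [-1, -1], [1, 1])
    print(x_best, y_best)
```

In a parallel setting, the single call to `f(x_new)` is the step that would be handed off to workers, with the surrogate refit as results arrive.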

Related

Papers

Best student paper.

@inproceedings{2015-middleware,
  author = {Gencer, Adam Efe and Sirer, Emin Gun and Van Renesse, Robbert and Bindel, David},
  title = {Configuring Distributed Computations Using Response Surfaces},
  booktitle = {Proceedings of Middleware 2015},
  month = dec,
  year = {2015},
  notable = {Best student paper.},
  doi = {10.1145/2814576.2814730}
}

Abstract:

Configuring large distributed computations is a challenging task. Efficiently executing distributed computations requires configuration tuning based on careful examination of application and hardware properties. Considering the large number of parameters and the impracticality of using trial and error in a production environment, programmers tend to make these decisions based on their experience and rules of thumb. Such configurations can lead to underutilized and costly clusters, and missed deadlines.

In this paper, we present a new methodology for determining desired hardware and software configuration parameters for distributed computations. The key insight behind this methodology is to build a response surface that captures how applications perform under different hardware and software configurations. Such a model can be built through iterated experiments using the real system, or, more efficiently, using a simulator. The resulting model can then generate recommendations for configuration parameters that are likely to yield the desired results even if they have not been tried either in simulation or in real life. The process can be iterated to refine previous predictions and achieve better results.

We have implemented this methodology in a configuration recommendation system for MapReduce 2.0 applications. Performance measurements show that representative applications achieve up to $5 \times$ performance improvement when they use the recommended configuration parameters compared to the default ones.

Talks

Software for Parallel Global Optimization

Symposium for Christine Shoemaker

rbf globalopt • meeting local invited

RBF Response Surfaces with Inequality Constraints

SIAM CSE

rbf • minisymposium external organizer

Radial Basis Function Interpolation with Bound Constraints

Cornell SCAN Seminar

rbf • seminar local