Field-Guide-Inspired Zero-Shot Learning

Utkarsh Mall, Bharath Hariharan, Kavita Bala

Cornell University

In ICCV 2021

Abstract

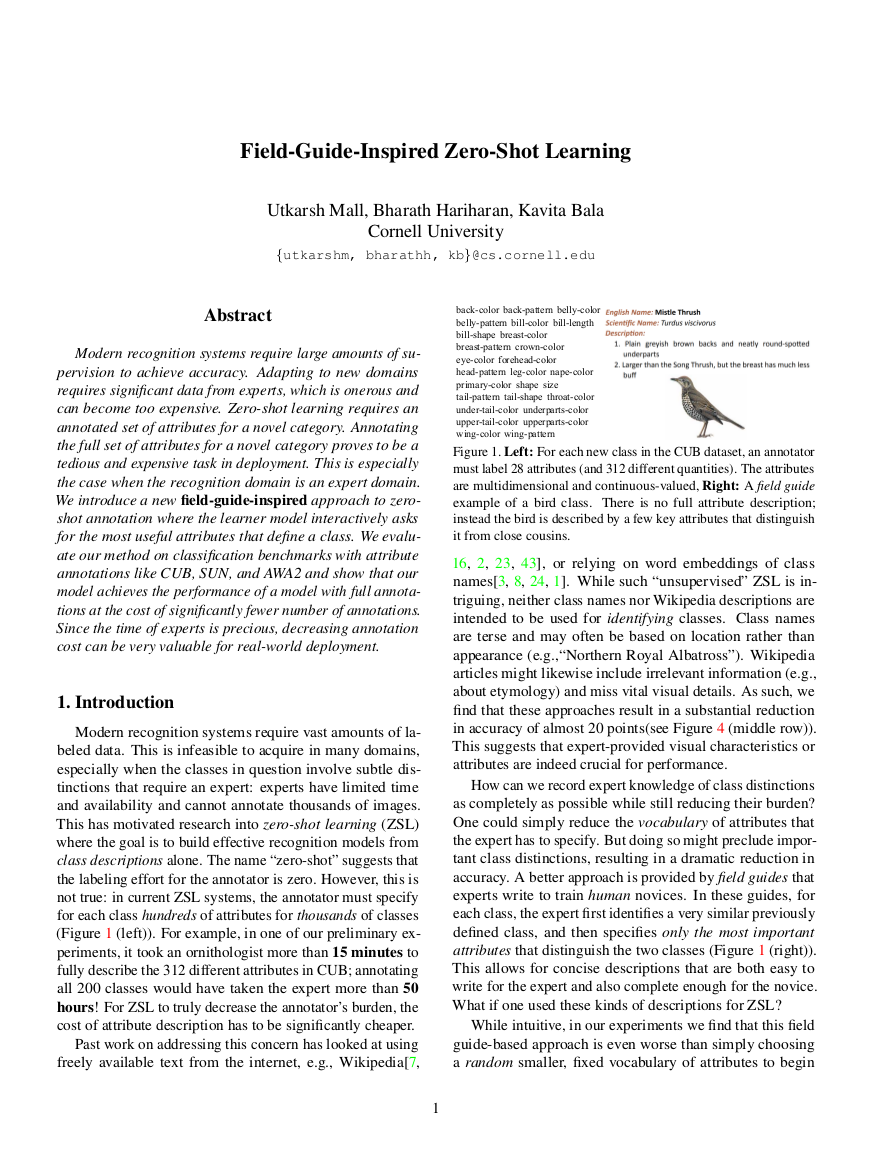

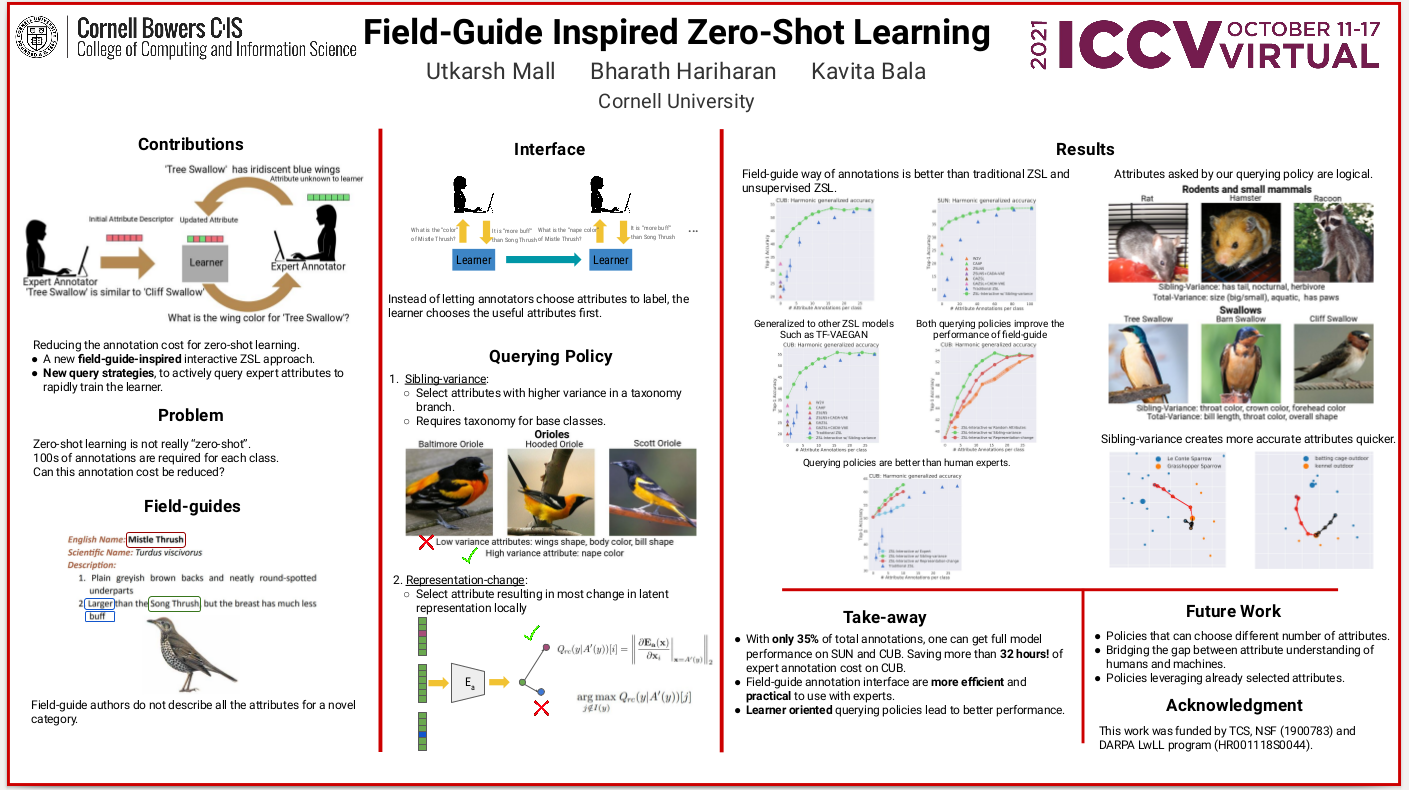

Modern recognition systems require large amounts of supervision to achieve high accuracy. Adapting them to new domains requires significant data from experts, which is onerous and can become prohibitively expensive. Zero-shot learning requires an annotated set of attributes for each novel category, but annotating the full set of attributes for a novel category is itself a tedious and expensive task in deployment. This is especially true when the recognition domain is an expert domain. We introduce a new field-guide-inspired approach to zero-shot annotation in which the learner model interactively asks for the attributes that are most useful for defining a class. We evaluate our method on classification benchmarks with attribute annotations, such as CUB, SUN, and AWA2, and show that our model achieves the performance of a model with full annotations while using significantly fewer annotations. Since experts' time is precious, decreasing annotation cost can be very valuable for real-world deployment.
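As a rough illustration of the zero-shot setting described above, each class (including a novel one) can be represented by an attribute vector, and an image is assigned to the class whose attribute vector is most compatible with its features. The sketch below assumes a bilinear compatibility score, a common formulation in attribute-based zero-shot learning that is not necessarily the model used in the paper; the function name `zero_shot_predict` and the projection matrix `W` are hypothetical.

```python
import numpy as np

def zero_shot_predict(image_features, W, class_attributes):
    """Assign each image to the class with the highest bilinear compatibility.

    image_features:   (num_images, d) image embeddings
    W:                (d, num_attributes) hypothetical learned projection
    class_attributes: (num_classes, num_attributes) one attribute vector per class,
                      which may include novel classes never seen during training
    """
    # Compatibility F(x, c) = x^T W a_c for every image/class pair.
    scores = image_features @ W @ class_attributes.T  # (num_images, num_classes)
    # Predict the most compatible class for each image.
    return scores.argmax(axis=1)
```

Because a novel class enters only through its row in `class_attributes`, the quality of that attribute vector directly limits recognition, which is why the cost of annotating it matters.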

Overview of our field-guide-inspired workflow. (a) An expert annotator introduces the learner to a novel class by first providing a similar base class. (b) The learner then interactively asks for the values of different attributes using an acquisition function. (c) The expert annotator provides the values of those attributes, and the learner updates its state and class description.
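The three steps in the caption can be sketched as a simple query loop, assuming attribute vectors stored as NumPy arrays and an expert simulated by a callable. The function names, their parameters, and the default variance-based acquisition rule are hypothetical stand-ins chosen for illustration; the acquisition functions studied in the paper may differ.

```python
import numpy as np

def variance_acquisition(base_attributes, description, queried):
    """Hypothetical acquisition rule: score each attribute by its variance across base classes."""
    # This simple default ignores `description`; a state-dependent rule could use it.
    return base_attributes.var(axis=0)

def acquire_class_description(base_attributes, init_class, expert_answer, budget,
                              acquisition=variance_acquisition):
    """Build an attribute description of a novel class under a query budget.

    base_attributes: (num_base_classes, num_attributes) base-class attribute vectors
    init_class:      index of the similar base class named by the expert
    expert_answer:   callable mapping an attribute index to its value for the novel class
    budget:          maximum number of attribute queries to the expert
    """
    num_attributes = base_attributes.shape[1]
    # (a) Initialize the novel-class description from the similar base class (a copy).
    description = base_attributes[init_class].astype(float)
    queried = np.zeros(num_attributes, dtype=bool)
    order = []
    for _ in range(min(budget, num_attributes)):
        # (b) Score the attributes and exclude those already asked about.
        scores = np.asarray(acquisition(base_attributes, description, queried), dtype=float)
        scores = np.where(queried, -np.inf, scores)
        j = int(np.argmax(scores))
        # (c) The expert supplies the value and the learner updates its description.
        description[j] = expert_answer(j)
        queried[j] = True
        order.append(j)
    return description, order

base = np.array([[1.0, 0.0, 0.5], [0.0, 0.5, 0.5], [1.0, 1.0, 0.5]])
truth = np.array([0.0, 0.0, 1.0])
desc, order = acquire_class_description(base, init_class=2,
                                        expert_answer=lambda j: truth[j], budget=2)
```

With a budget of two, the loop queries attributes 0 and 1 (the ones that vary most across the toy base classes) and keeps the base-class value for attribute 2. Passing a different `acquisition` callable swaps in another selection strategy without changing the loop.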

Paper

[pdf] [arXiv] [supplementary pdf]

Utkarsh Mall, Bharath Hariharan, and Kavita Bala. "Field-Guide-Inspired Zero-Shot Learning". In ICCV, 2021.

@inproceedings{fgzsl-21,
  title={Field-Guide-Inspired Zero-Shot Learning},
  author={Mall, Utkarsh and Hariharan, Bharath and Bala, Kavita},
  booktitle={ICCV},
  year={2021}
}