I graduated in August 2015 and joined Amazon as a Research Scientist, where I am working on an exciting new project centered on computer vision. I completed my PhD in the Department of Computer Science at Cornell University, where I was advised by Ashutosh Saxena and worked on understanding human environments and activities to enable assistive robots to work with and around people. My research interests lie at the intersection of machine learning, computer vision and robotics.

My research focuses on developing machine learning techniques for understanding human environments and activities from visual data, which would enable new applications such as healthcare monitoring systems, self-driving cars, and robot assistants. My research is summarized by the following projects.

An important aspect of human perception is anticipation, which we use extensively in our day-to-day activities when interacting with other humans as well as with our surroundings. Anticipating which activities a human will do next (and how they will do them) can enable an assistive robot to plan ahead for reactive responses in human environments. We propose a graphical model that captures the rich context of activities and object affordances, and obtains a distribution over a large space of future human activities.

Publications: TPAMI'14, RSS'13 (best student paper award), ICML'13

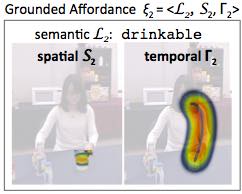

Objects in human environments support various functionalities which govern how people interact with their environments in order to perform tasks. In this work, we discuss how to represent and learn a functional understanding of an environment. Such an understanding is useful for many applications such as activity detection and assistive robotics.

Publications: ECCV'14

Being able to detect and recognize human activities is essential for several applications, including smart homes and personal assistive robotics. In this work, we perform detection and recognition of unstructured human activity in unstructured environments. We use an RGB-D sensor (Microsoft Kinect) as the input sensor, and compute a set of features based on human pose and motion, as well as on image and point-cloud information.

Publications: IJRR'13

We consider the problem of semantic scene labeling from RGB-D data for personal robots. Given a co-registered RGB and depth image pair as input, a colored 3D point cloud can be easily obtained. We formulate a Conditional Random Field model that captures various features and contextual relations, including local visual appearance and shape cues, object co-occurrence relationships and geometric relationships.

Publications: NIPS'11, IJRR'12
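
As a rough illustration of the first step mentioned above, obtaining a colored point cloud from a co-registered RGB and depth pair, the following sketch back-projects each pixel with a standard pinhole camera model. The function name `backproject` and the intrinsics `fx`, `fy`, `cx`, `cy` are assumptions made for illustration. They are not taken from the project code, and real values depend on the sensor calibration.

```python
from typing import List, Sequence, Tuple

# One colored 3D point: (x, y, z, r, g, b). Hypothetical layout for this sketch.
ColoredPoint = Tuple[float, float, float, int, int, int]


def backproject(
    depth: Sequence[Sequence[float]],
    rgb: Sequence[Sequence[Tuple[int, int, int]]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[ColoredPoint]:
    """Turn a co-registered depth/RGB pair into a list of colored 3D points.

    Assumes a pinhole camera with focal lengths (fx, fy) and principal
    point (cx, cy), with depth[v][u] giving the distance along the optical
    axis for pixel column u and row v. Pixels with non-positive depth are
    treated as missing readings and skipped.
    """
    points: List[ColoredPoint] = []
    for v, (depth_row, rgb_row) in enumerate(zip(depth, rgb)):
        for u, (z, (r, g, b)) in enumerate(zip(depth_row, rgb_row)):
            if z <= 0:
                continue  # no valid depth reading at this pixel
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z, r, g, b))
    return points
```

Each resulting point carries both geometry and color, which is the kind of input from which appearance, shape and geometric-relationship features could then be computed.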

"Anticipating Human Activities using Object Affordances for Reactive Robotic Response", Hema S. Koppula and Ashutosh Saxena. In IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2015 (Earlier best student paper award at RSS'13). [pdf, project page, code/data]

"Modeling 3D Environments through Hidden Human Context", Yun Jiang, Hema S. Koppula and Ashutosh Saxena. Conditionally accepted in IEEE Transactions on Pattern Analysis and Machine Intelligence (PAMI), 2015. [pdf, project page]

"Learning Human Activities and Object Affordances from RGB-D Videos", Hema S. Koppula, Rudhir Gupta and Ashutosh Saxena. In International Journal of Robotics Research (IJRR), 2013. [pdf, project page, code/data]

"Contextually Guided Semantic Labeling and Search for Three-dimensional Point Clouds", Hema S. Koppula, Abhishek Anand, Thorsten Joachims and Ashutosh Saxena. In International Journal of Robotics Research (IJRR), 2013. [pdf, project page, code/data]

"Car That Knows Before You Do: Anticipating Maneuvers via Learning Temporal Driving Models", Ashesh Jain, Hema S. Koppula, Bharad Raghavan, Ashutosh Saxena. In International Conference on Computer Vision (ICCV), 2015. [pdf, project page]

"Recurrent Neural Networks for Driver Activity Anticipation via Sensory-Fusion Architecture", Ashesh Jain, Avi Singh, Hema S. Koppula, Shane Soh, Ashutosh Saxena. Tech Report (under review), September 2015. [arXiv, code]

"Physically Grounded Spatio-Temporal Object Affordances", Hema S. Koppula and Ashutosh Saxena. In European Conference on Computer Vision (ECCV), 2014. [pdf, project page]

"Anticipatory Planning for Human-Robot Teams", Hema S. Koppula, Ashesh Jain and Ashutosh Saxena. In International Symposium on Experimental Robotics (ISER), 2014. [pdf, project page]

"Anticipating Human Activities using Object Affordances for Reactive Robotic Response", Hema S. Koppula and Ashutosh Saxena. In Robotics: Science and Systems (RSS), 2013. (oral, best student paper) [pdf, project page, code/data]

"Learning Spatio-Temporal Structure from RGB-D Videos for Human Activity Detection and Anticipation", Hema S. Koppula and Ashutosh Saxena. In International Conference on Machine Learning (ICML), 2013. [pdf, project page]

"Hallucinated Humans as the Hidden Context for Labeling 3D Scenes", Yun Jiang, Hema S. Koppula and Ashutosh Saxena. In Conference on Computer Vision and Pattern Recognition (CVPR), 2013. (oral) [pdf, project page]

"Semantic Labeling of 3D Point Clouds for Indoor Scenes", Hema S. Koppula, Abhishek Anand, Thorsten Joachims and Ashutosh Saxena. In Neural Information Processing Systems (NIPS), 2011. [pdf, project page, code/data]

"Alignment of Short Length Parallel Corpora with an Application to Web Search", Jitendra Ajmera, Hema S. Koppula, Krishna P. Leela, Shibnath Mukherjee and Mehul Parsana. In ACM International Conference on Information and Knowledge Management (CIKM), 2010. [pdf]

"Learning URL Patterns for Webpage De-Duplication", Hema S. Koppula, Krishna P. Leela, Amit Agarwal, Krishna Prasad Chitrapura, Sachin Garg and Amit Sasturkar. In ACM International Conference on Web Search and Data Mining (WSDM), 2010. [pdf]

"URL Normalization for De-duplication of Web Pages", Amit Agarwal, Hema S. Koppula, Krishna P. Leela, Krishna P. Chitrapura, Sachin Garg and Pavan Kumar. In ACM International Conference on Information and Knowledge Management (CIKM), 2009. [pdf]

"Anticipating the Future By Constructing Human Activities using Object Affordances", Hema S. Koppula and Ashutosh Saxena. In NIPS workshop on Constructive Machine Learning, 2013. [pdf]

"Anticipating Human Activities using Object Affordances for Reactive Robotic Response", Hema S. Koppula and Ashutosh Saxena. In ICML workshop on Robot Learning, 2013. [pdf]

"Labeling 3D scenes for Personal Assistant Robots", Hema S. Koppula, Abhishek Anand, Thorsten Joachims, and Ashutosh Saxena. In RSS workshop on RGB-D Cameras, 2011. [pdf]

"Growth of the Flickr Social Network", Alan Mislove, Hema S. Koppula, Krishna P. Gummadi, Peter Druschel, and Bobby Bhattacharjee. In Proceedings of the 1st ACM SIGCOMM Workshop On Social Networks (WOSN), 2008. [pdf]

Anticipating Activities for Reactive Robotic Response

(Finalist for Best Video Award at IROS'13)

Descriptive Labelling of Activities

Contextually Guided Scene Understanding

Collaborative Planning for Human Robot Teams