We've been studying the characteristics of parallel network traffic between two clusters communicating across high-speed, long-distance links. This type of traffic is becoming more commonplace as retailers, banks and government agencies start using multiple data centers for their applications. The move towards multiple data centers is driven by a desire (or regulatory requirement) for disaster tolerance, by the need to reduce latency to end users, or by simple economics: running multiple data centers may be easier than running one larger one.

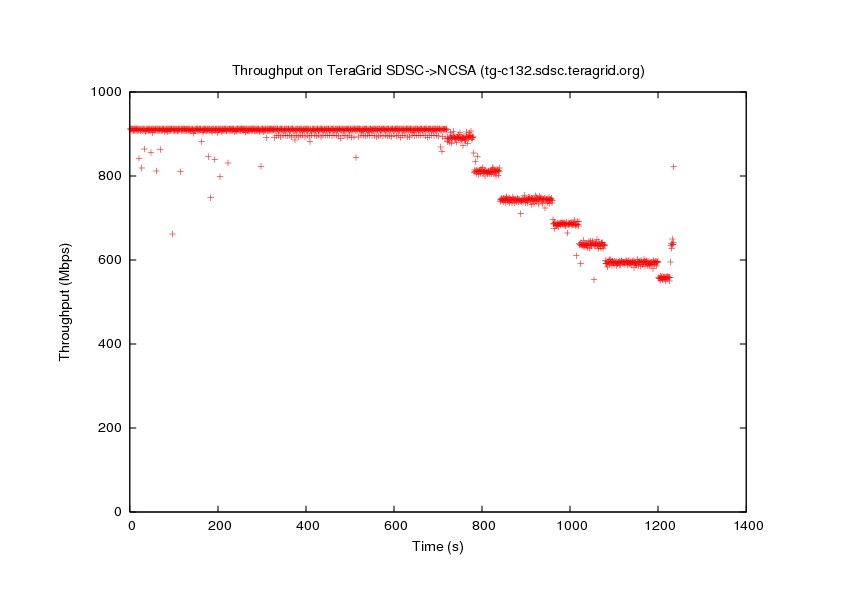

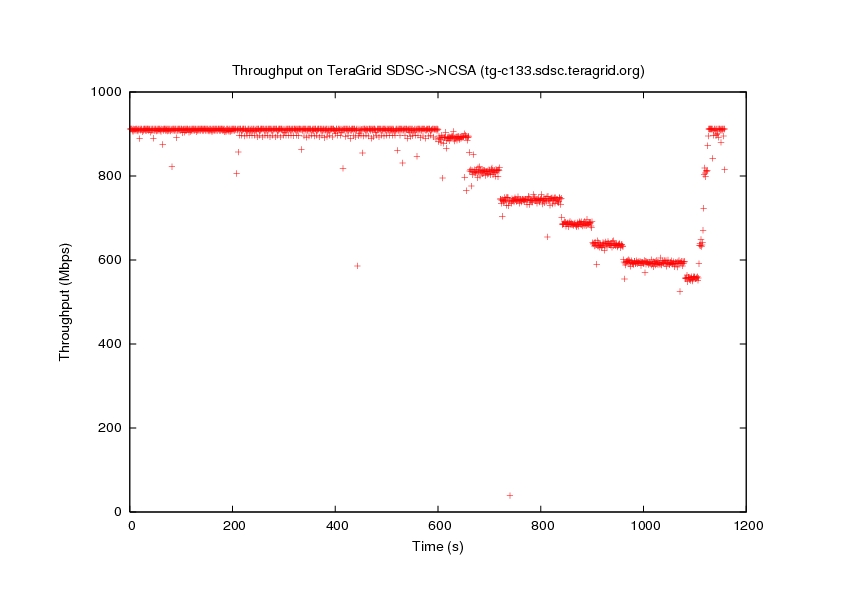

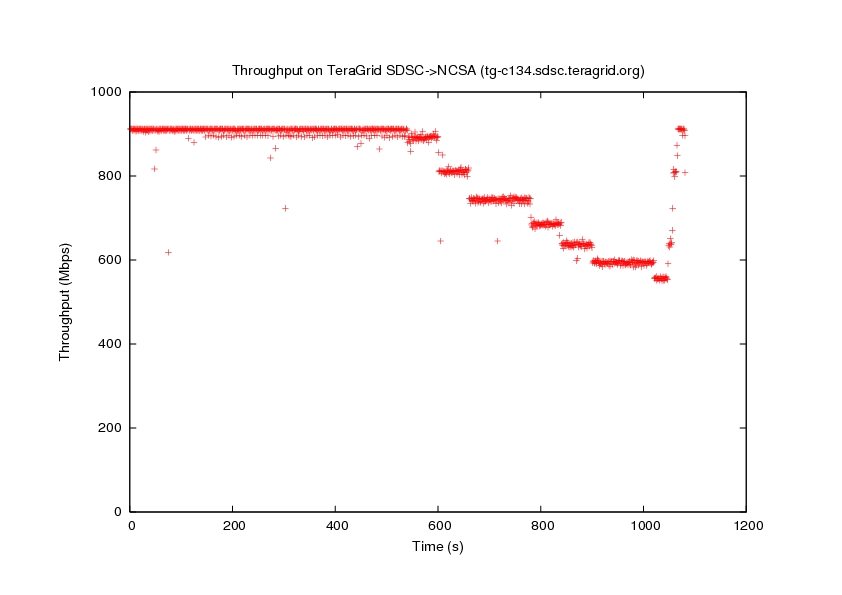

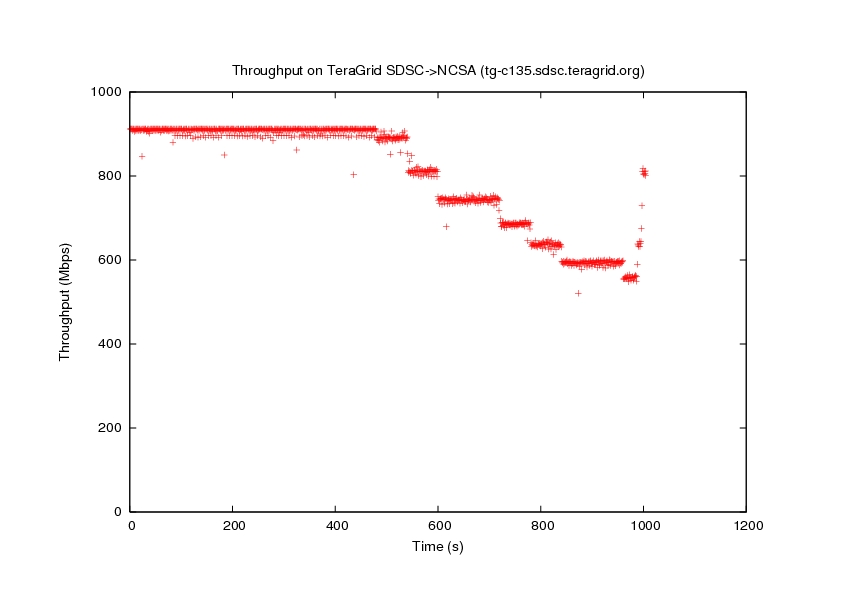

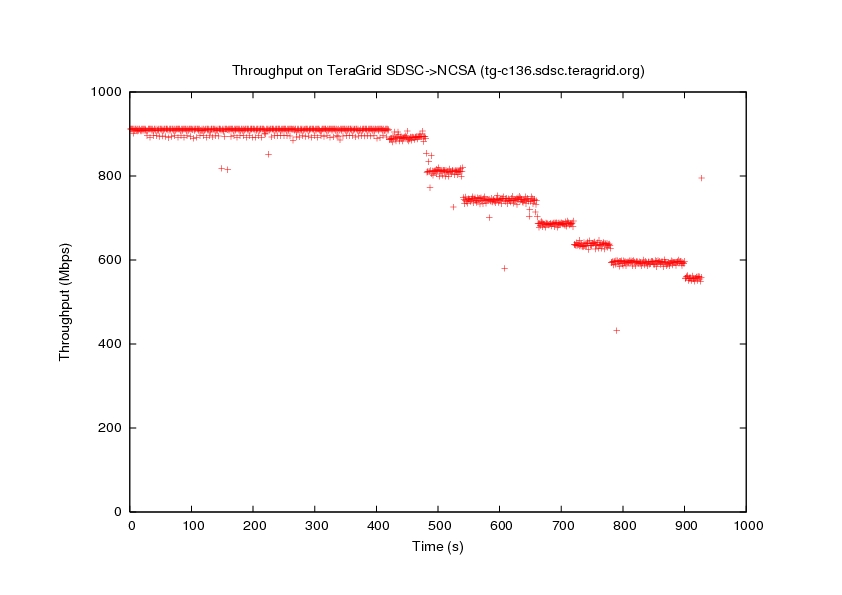

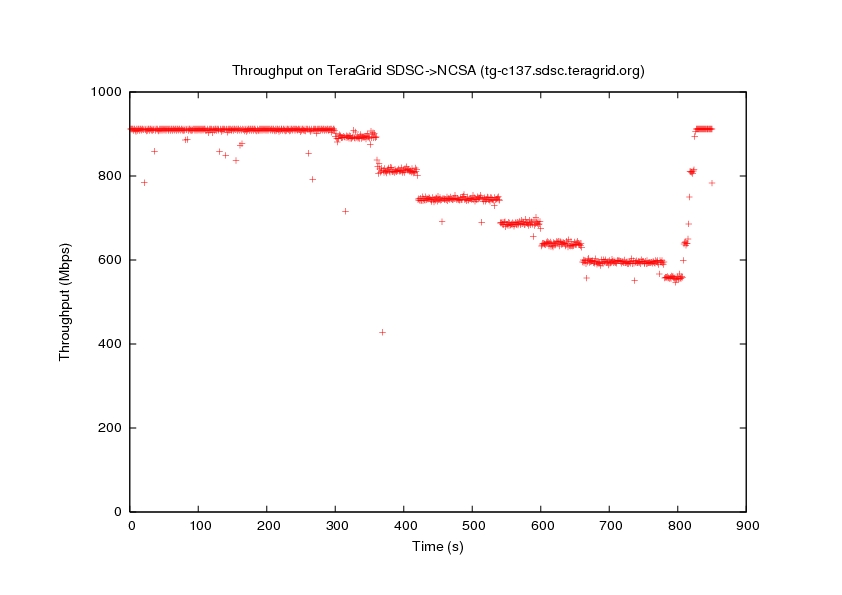

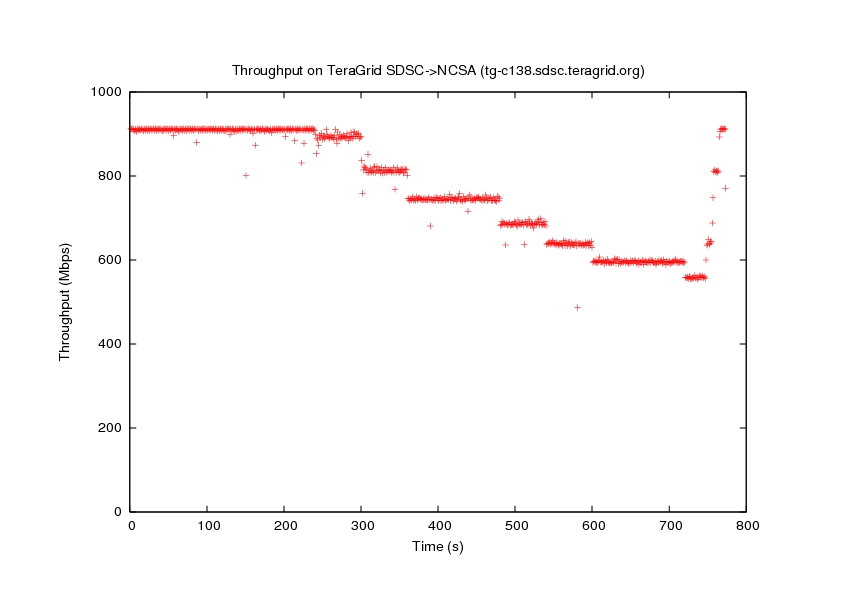

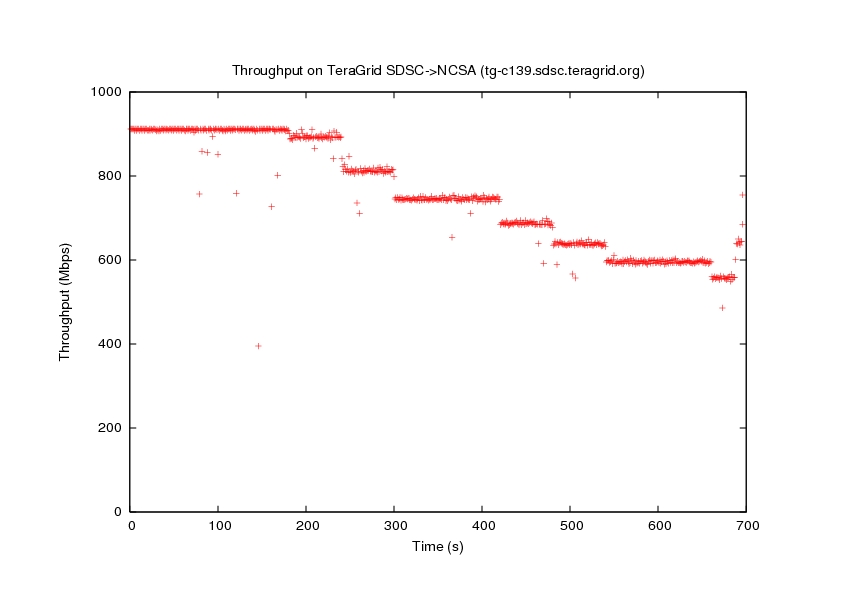

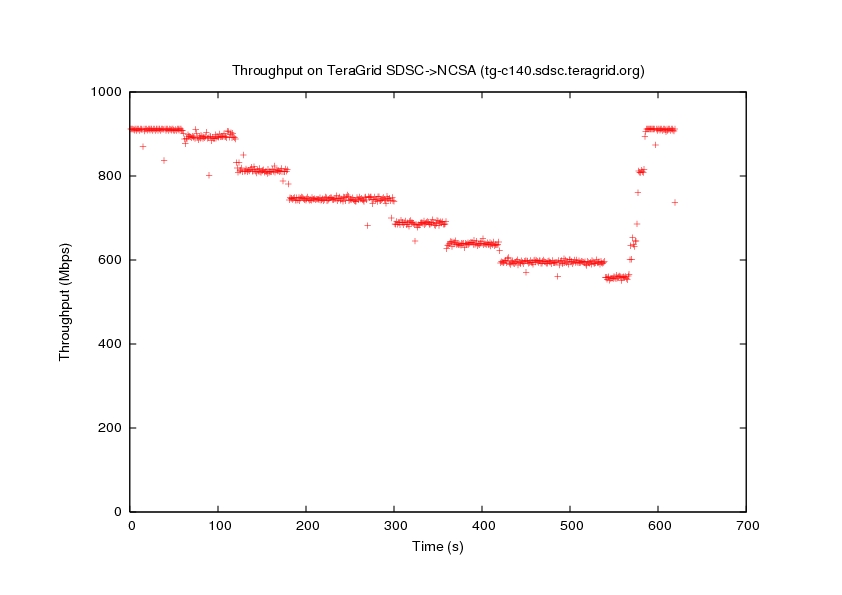

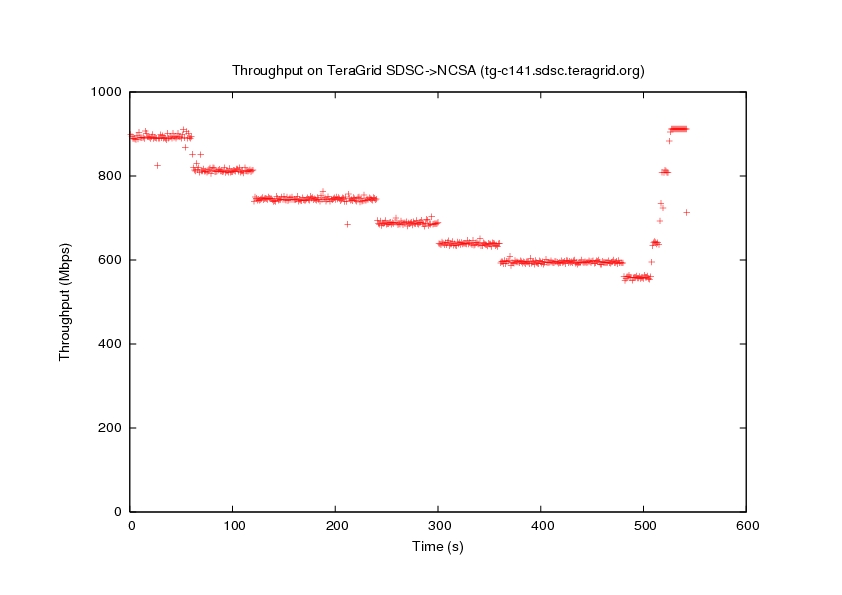

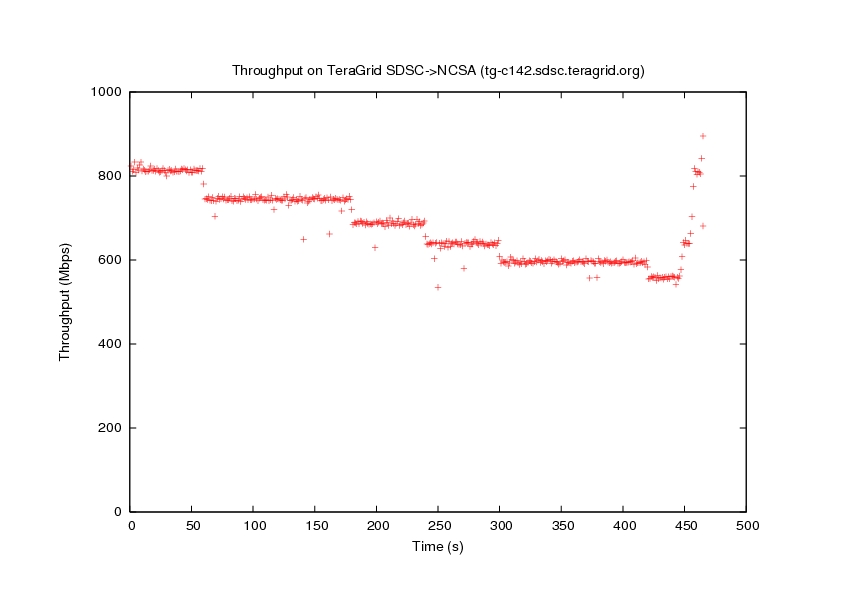

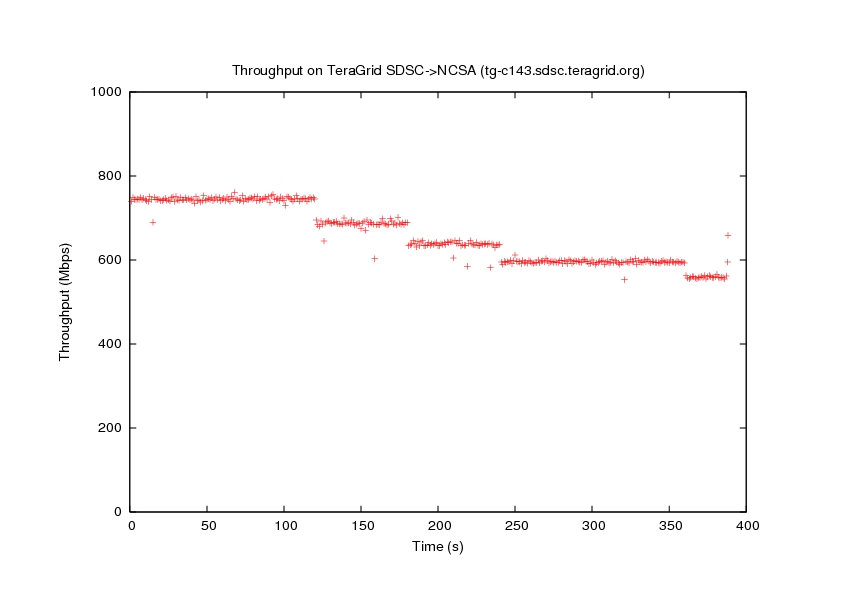

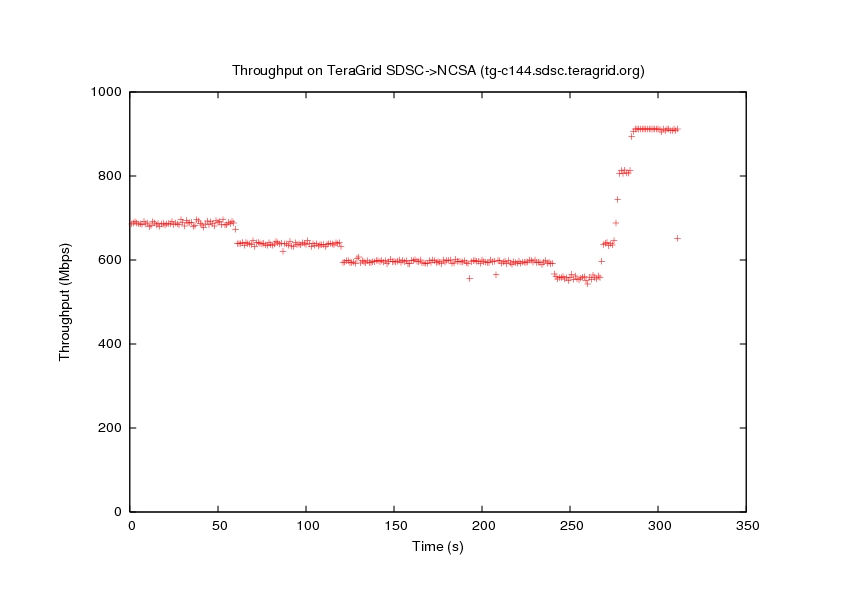

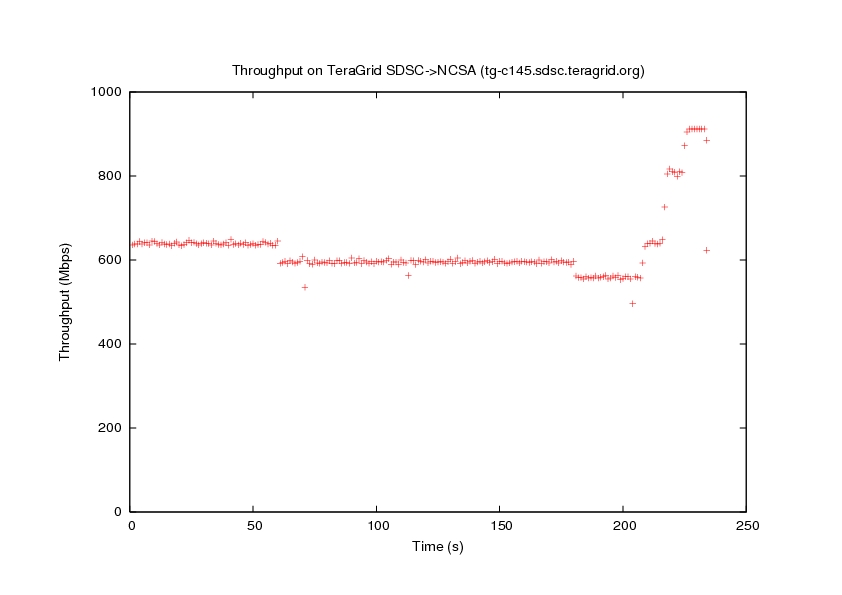

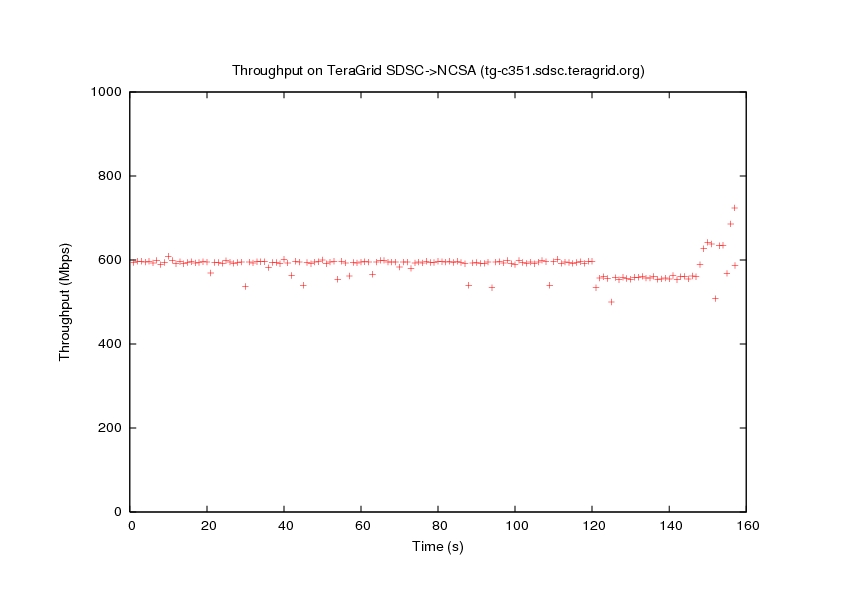

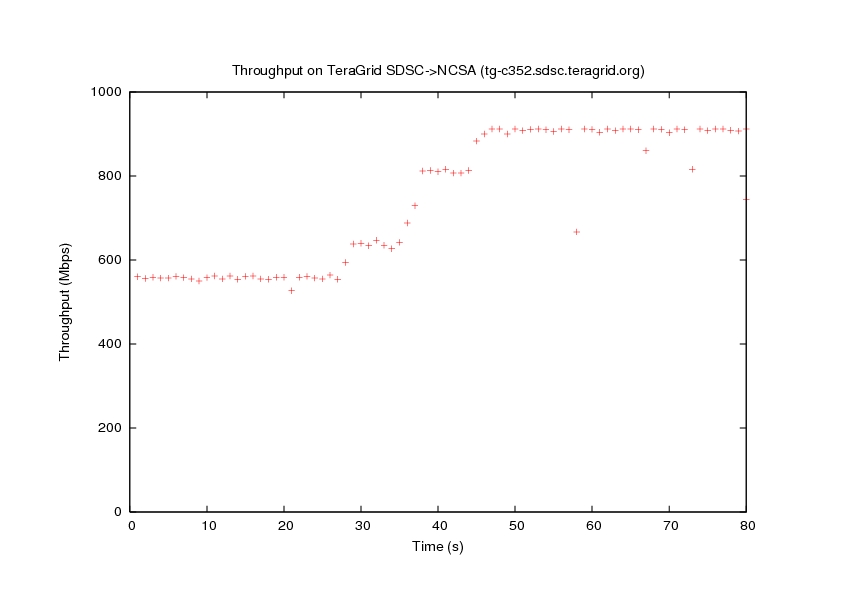

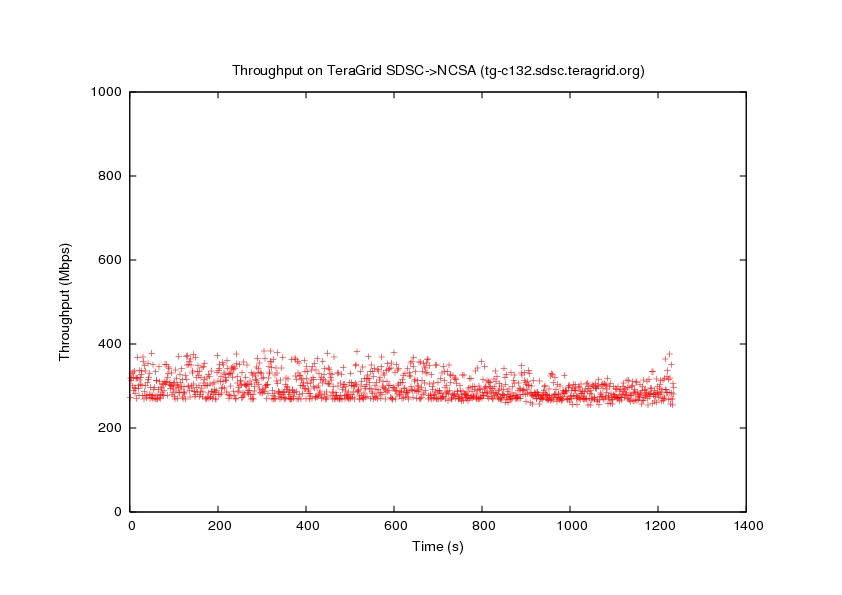

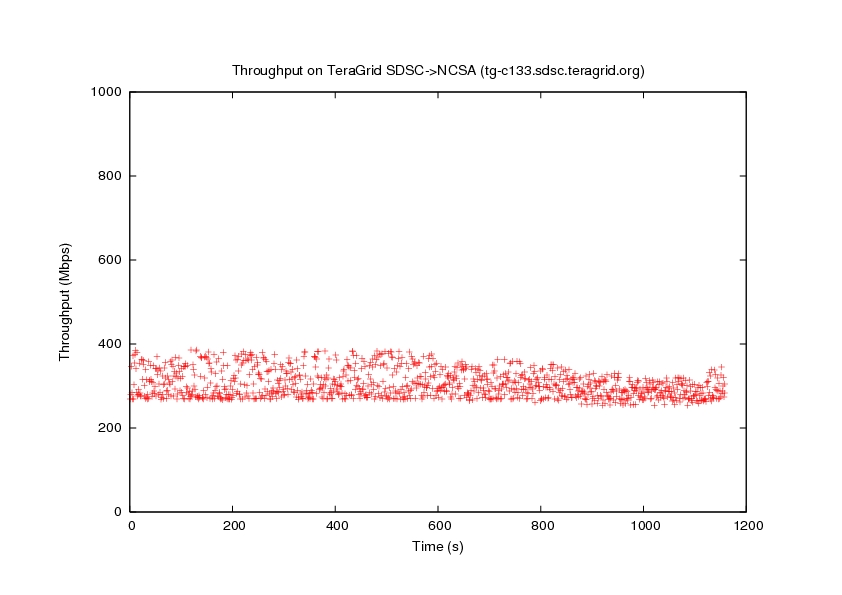

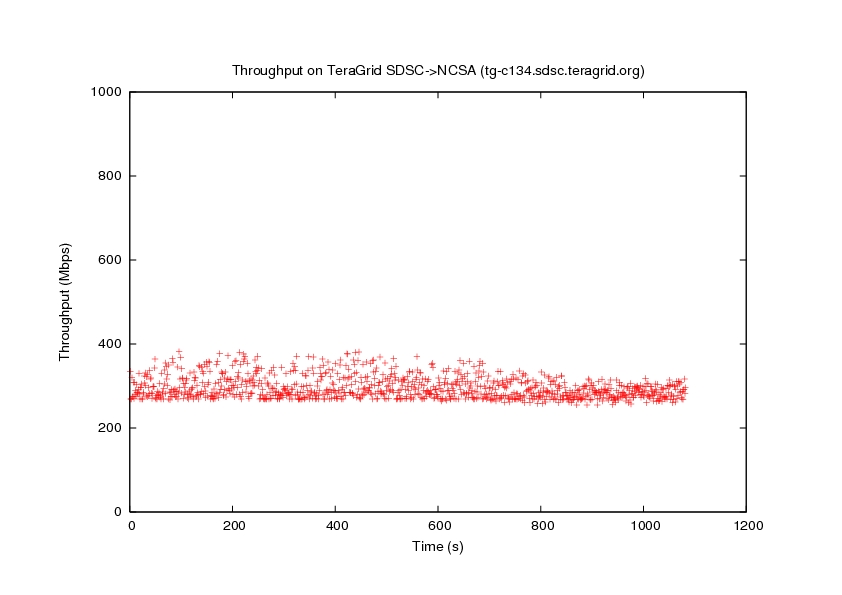

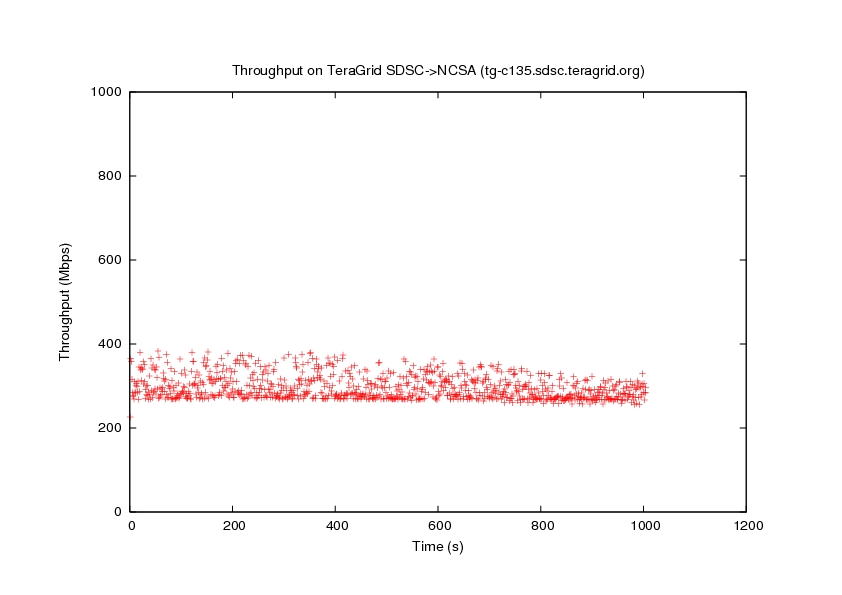

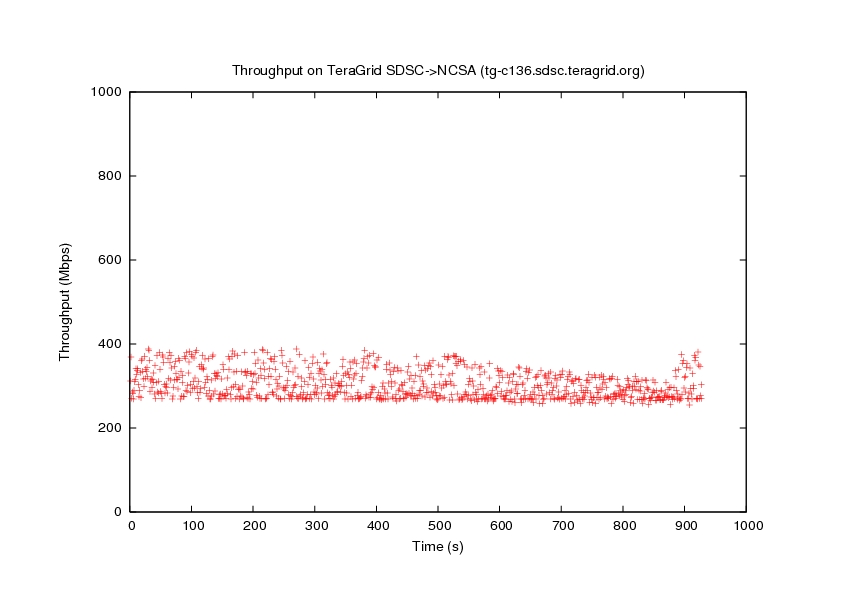

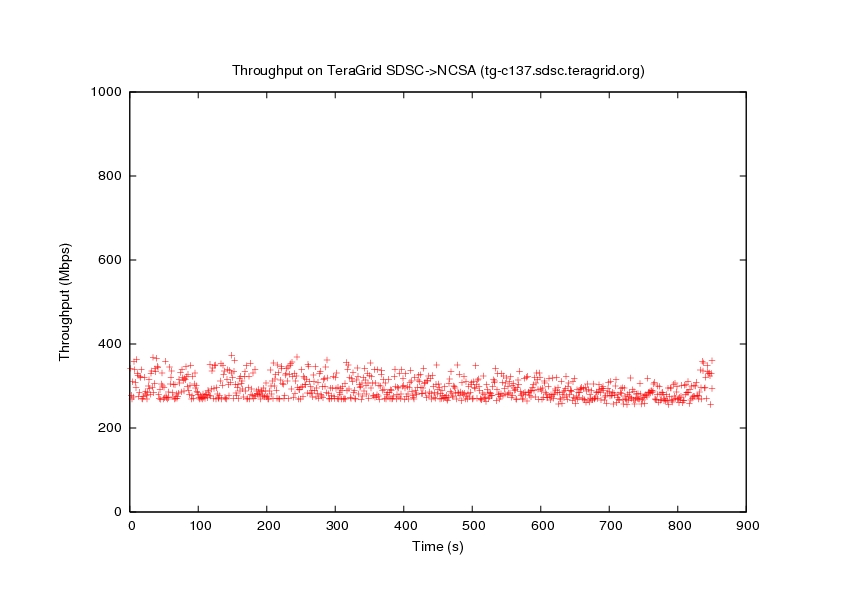

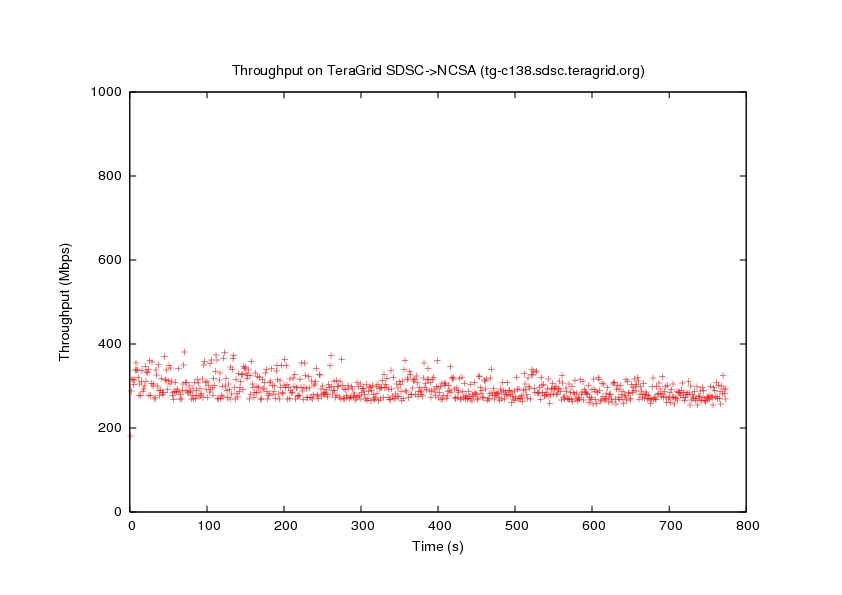

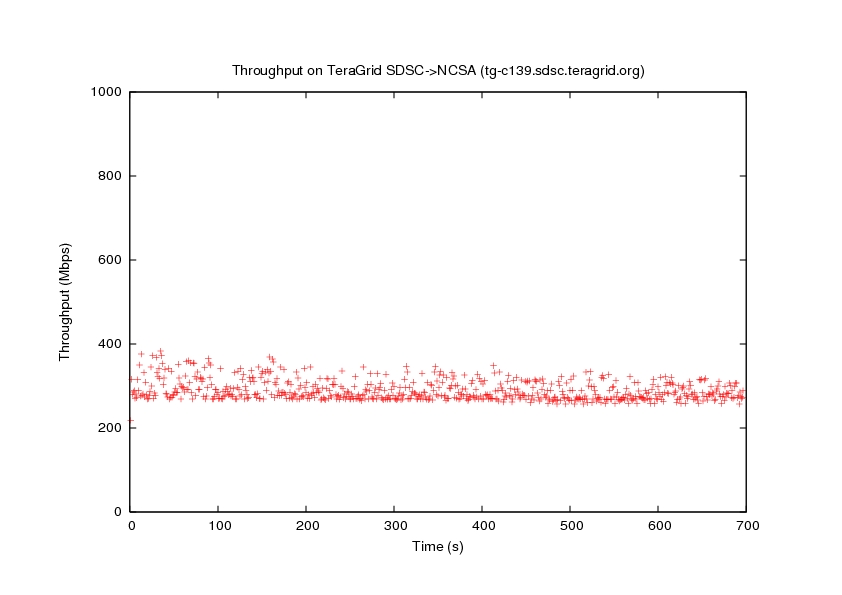

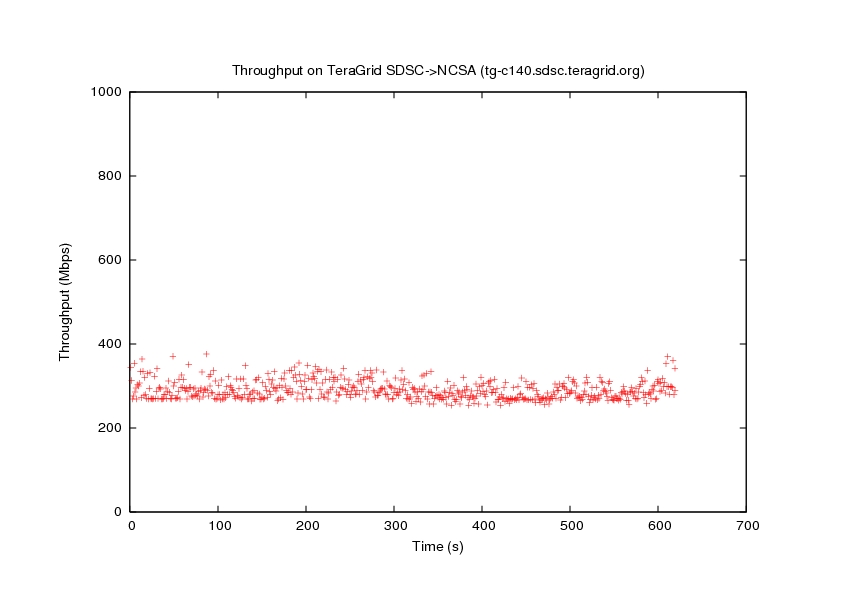

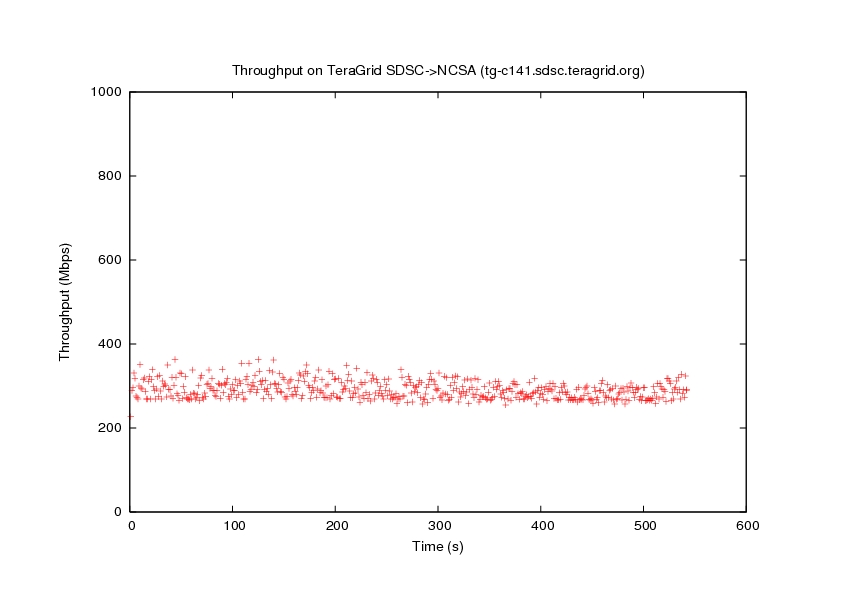

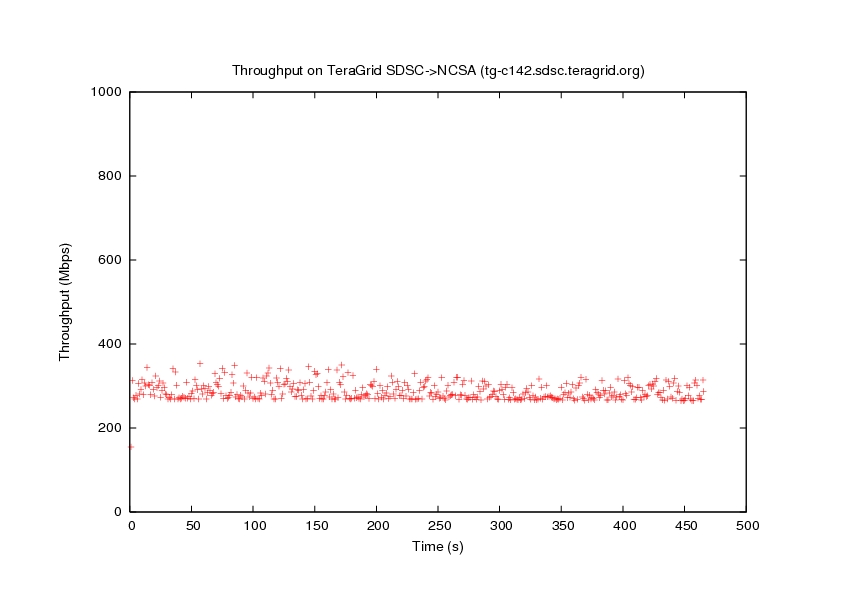

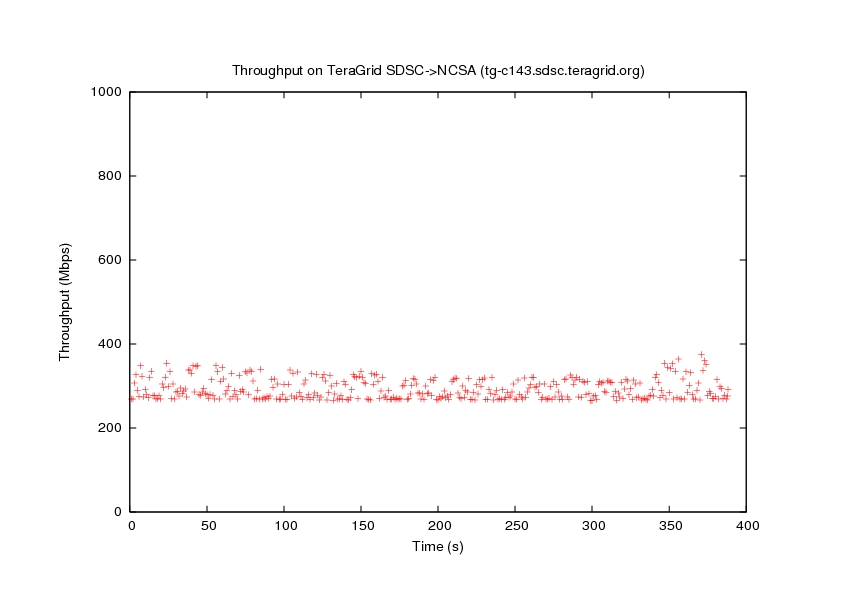

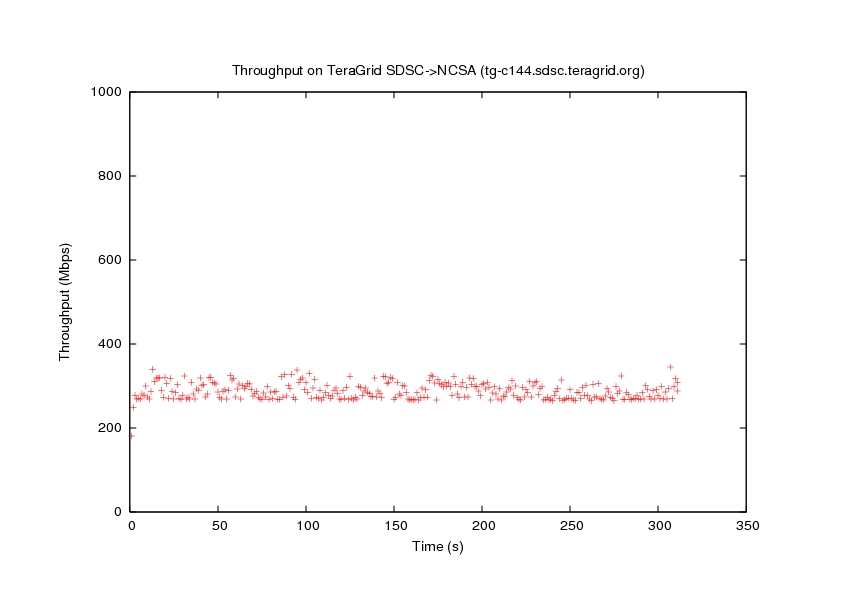

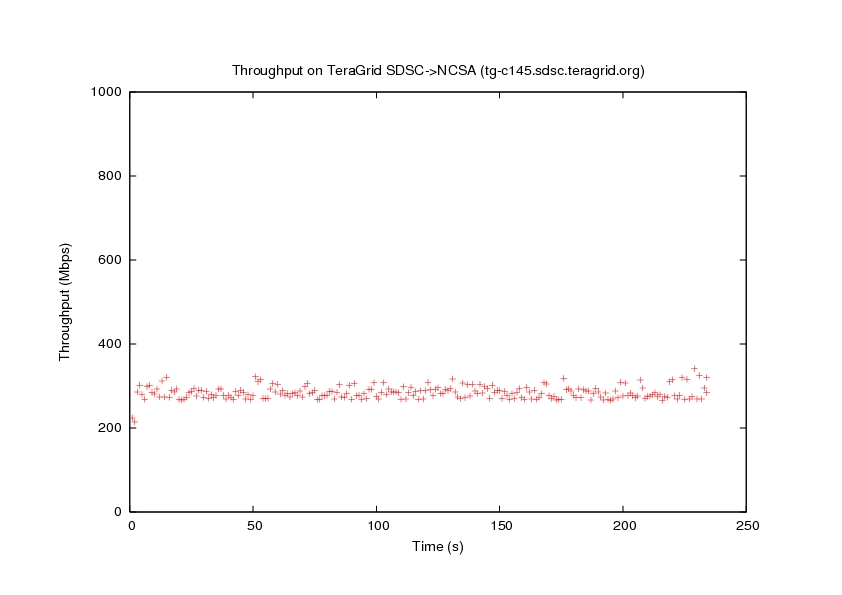

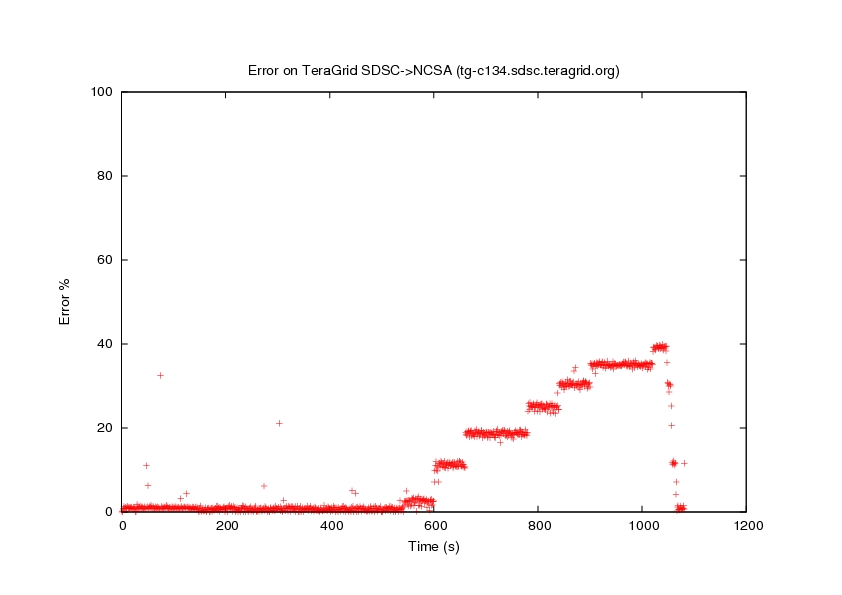

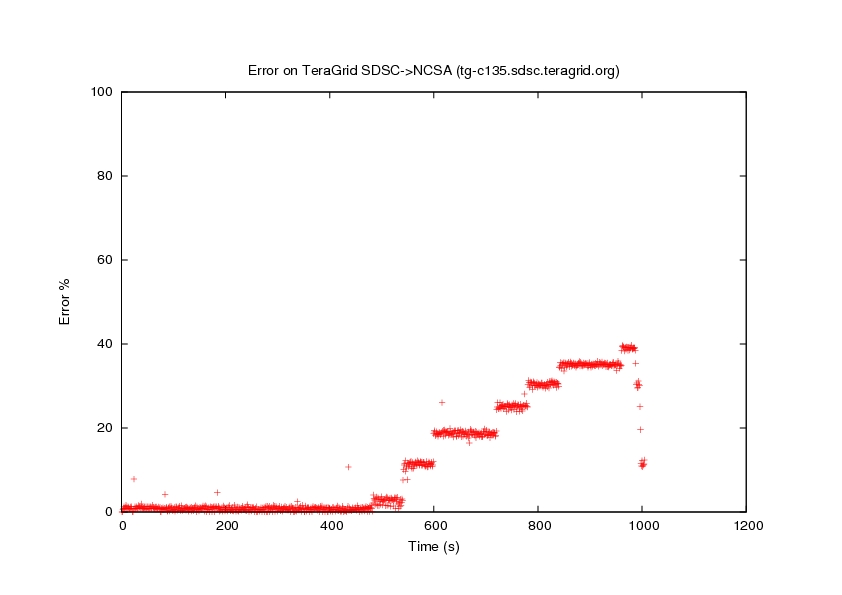

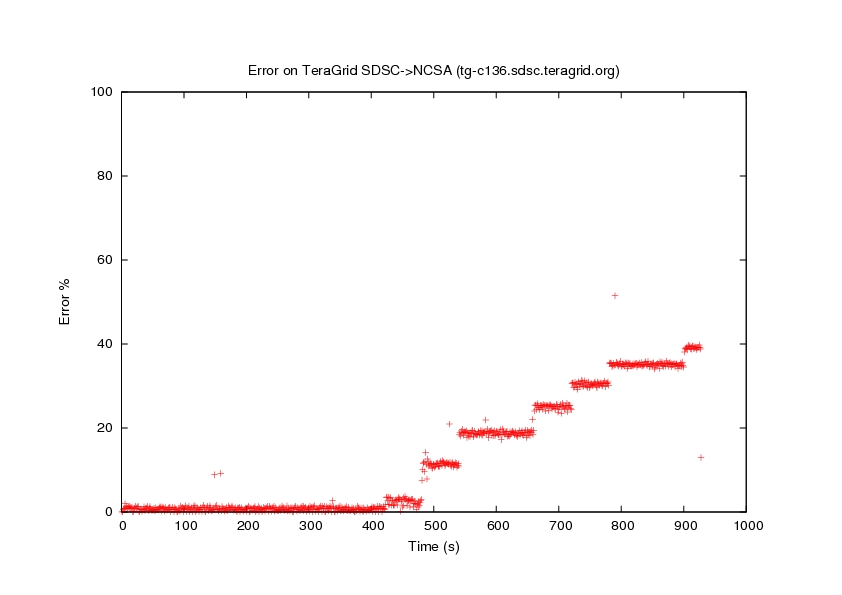

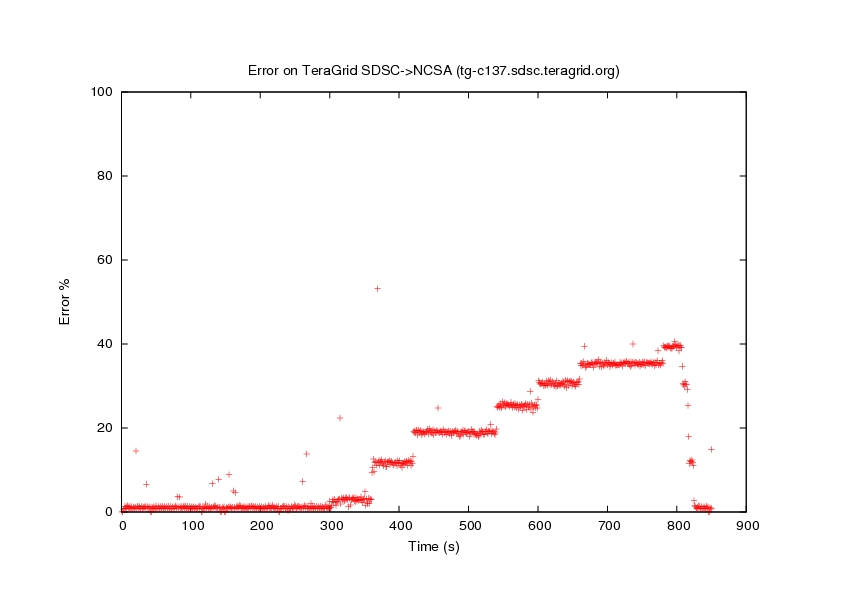

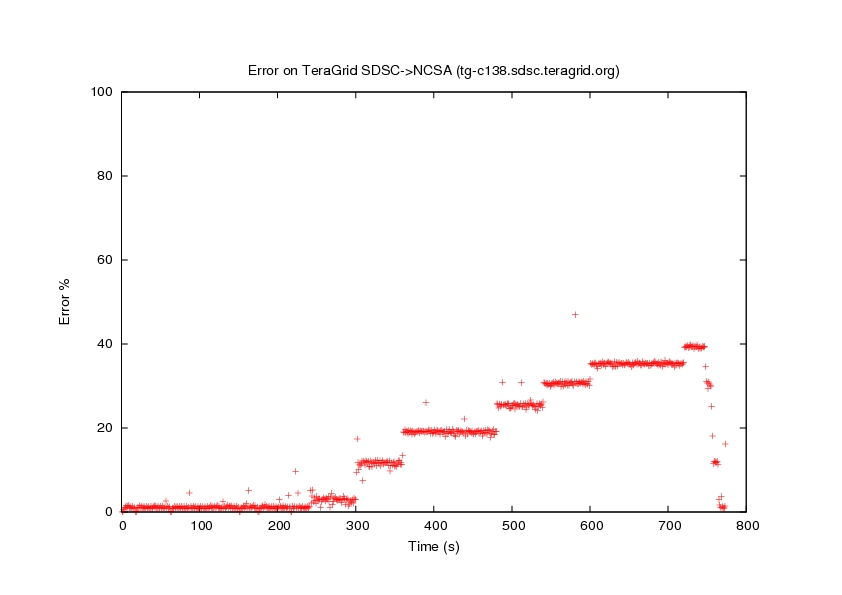

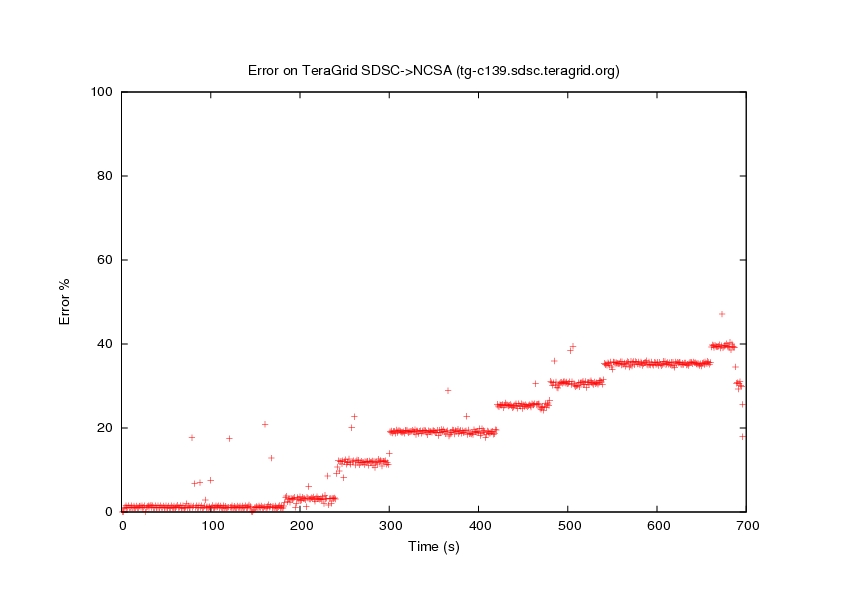

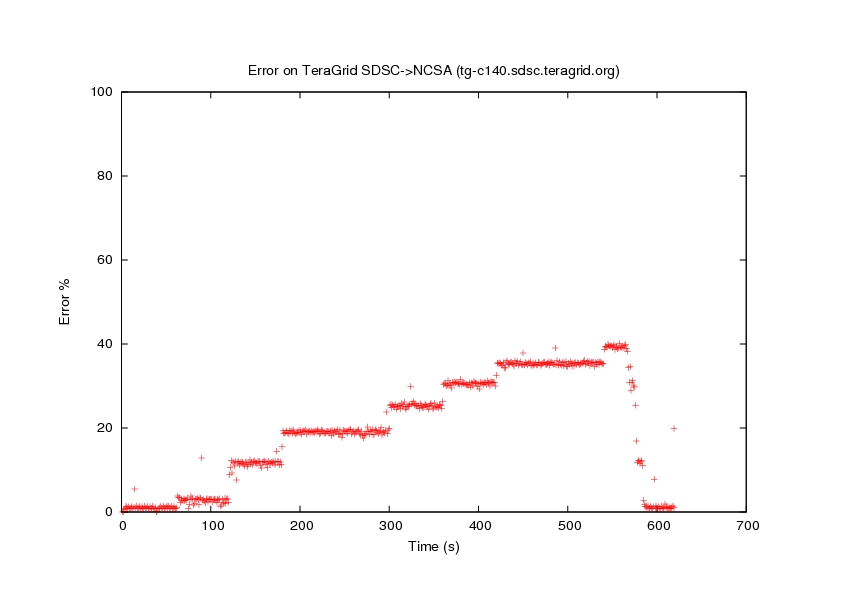

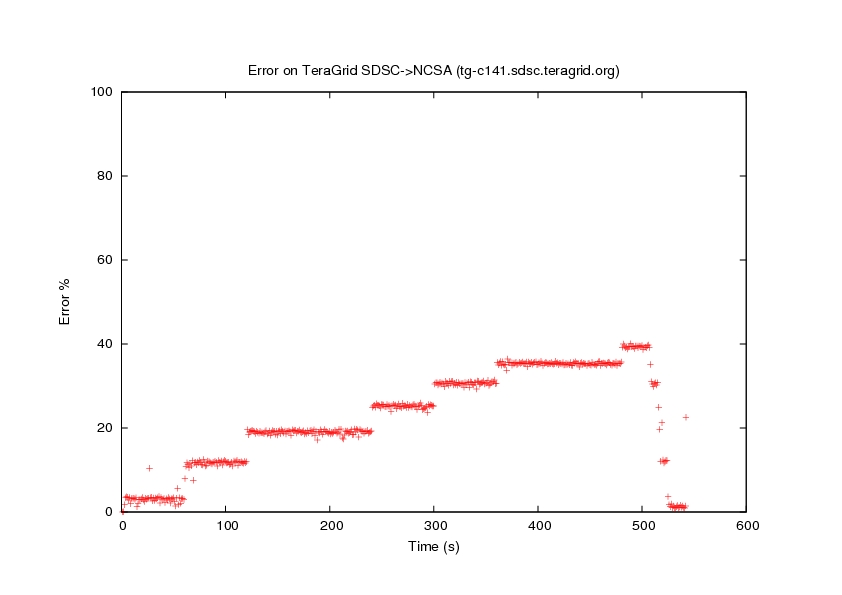

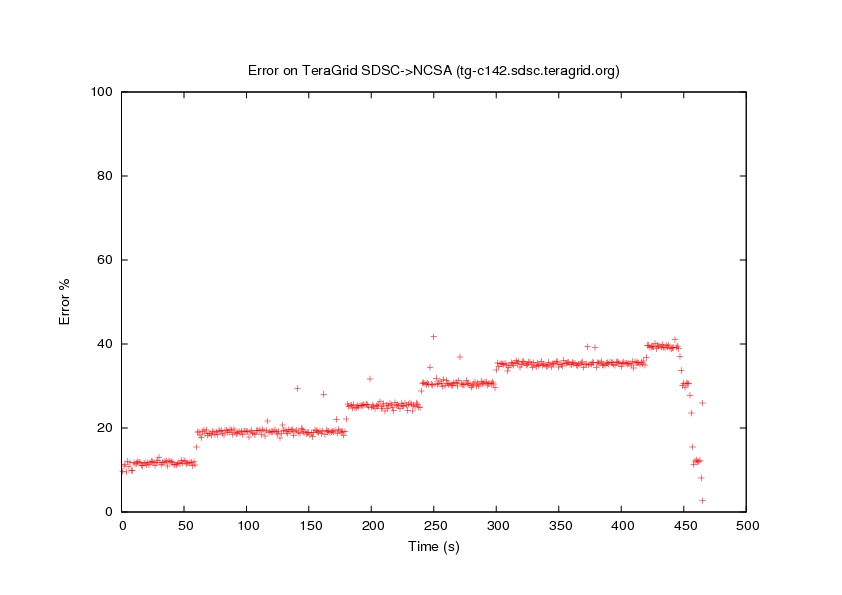

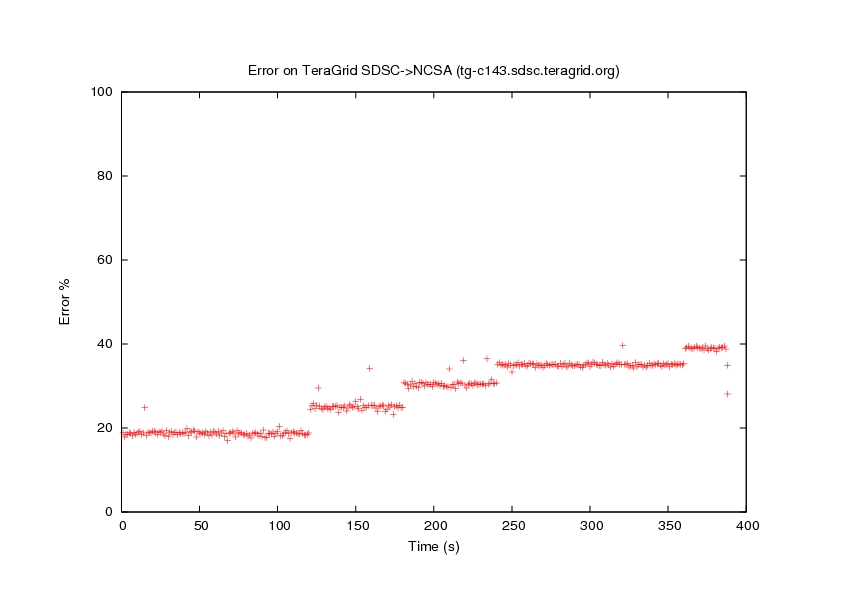

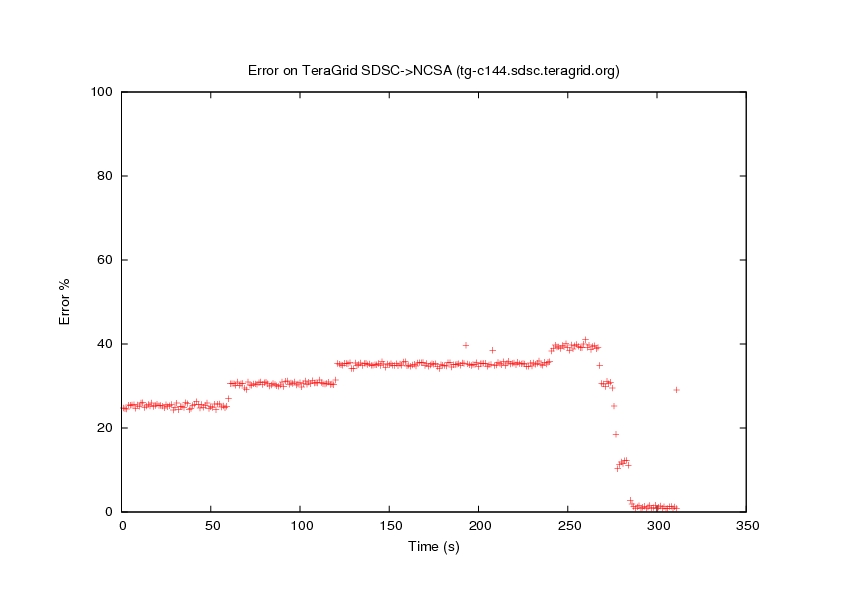

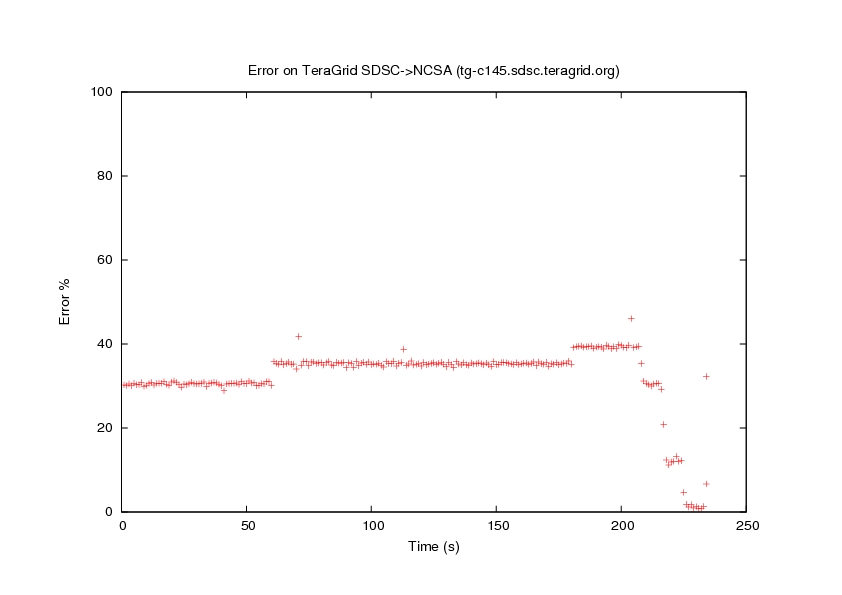

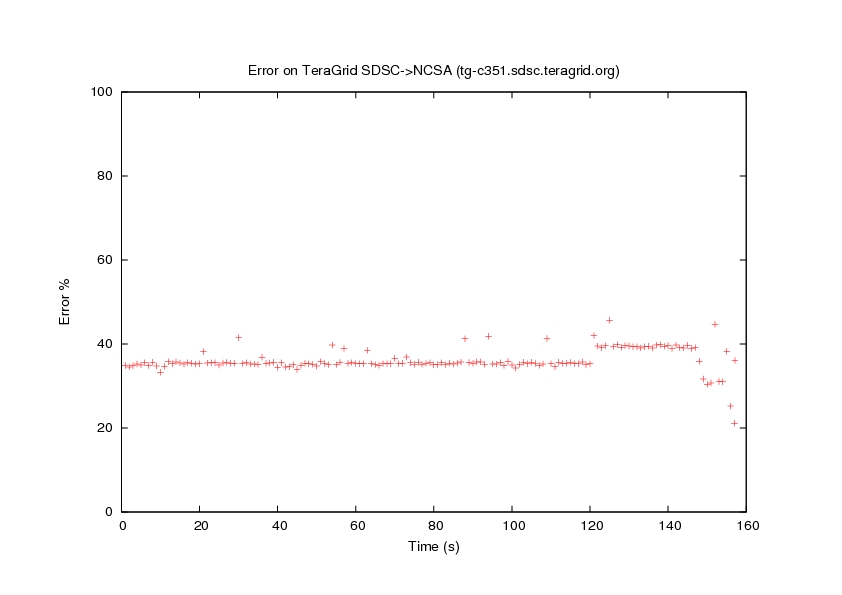

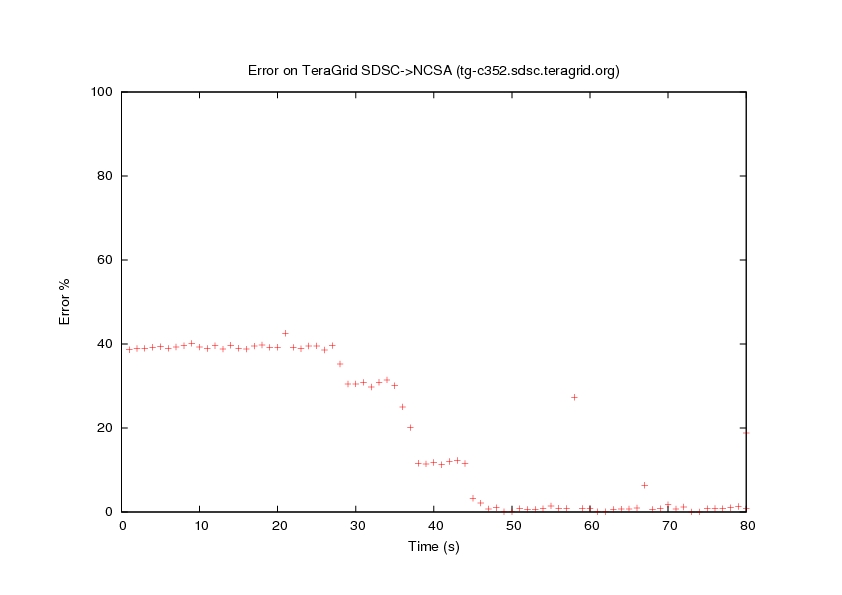

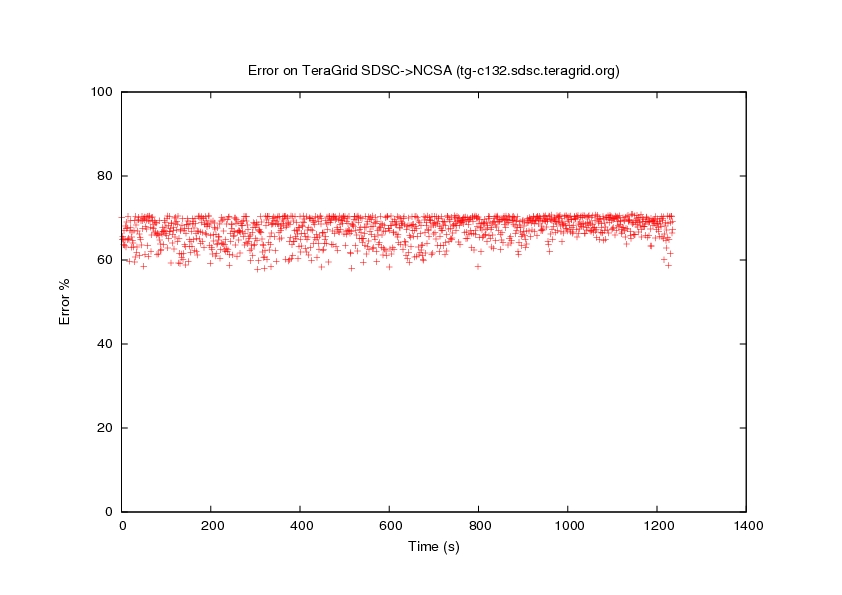

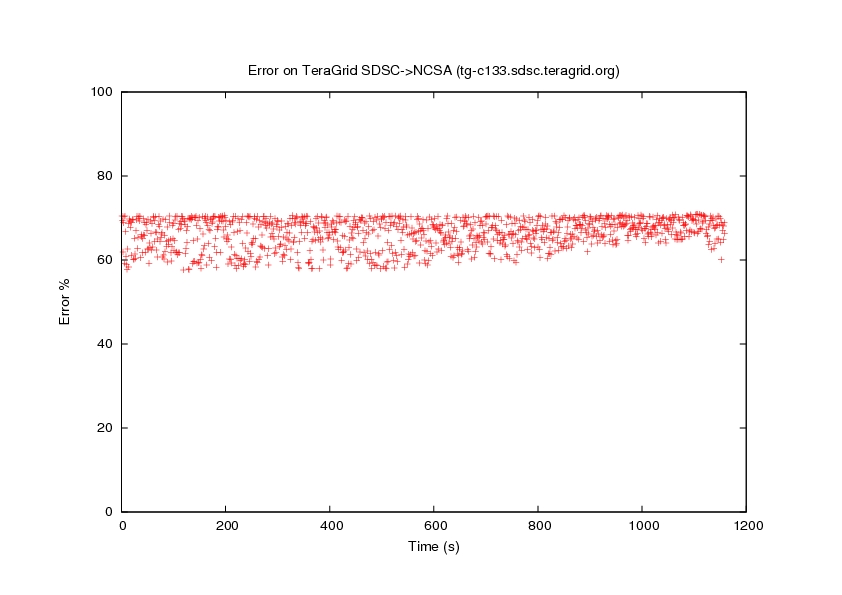

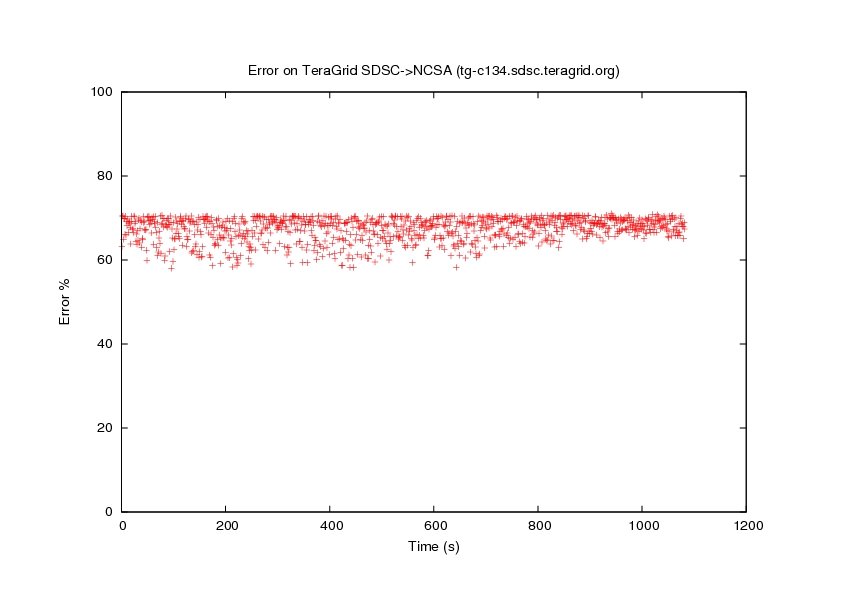

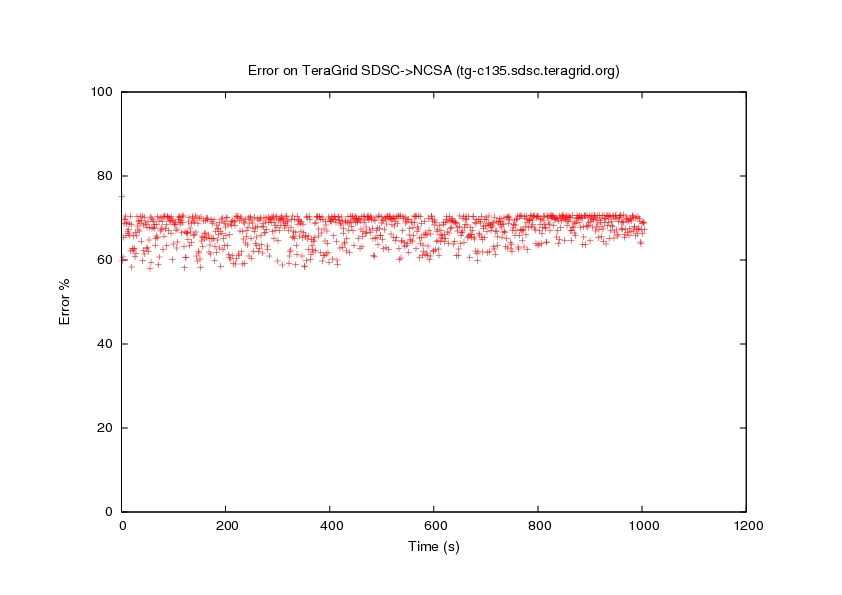

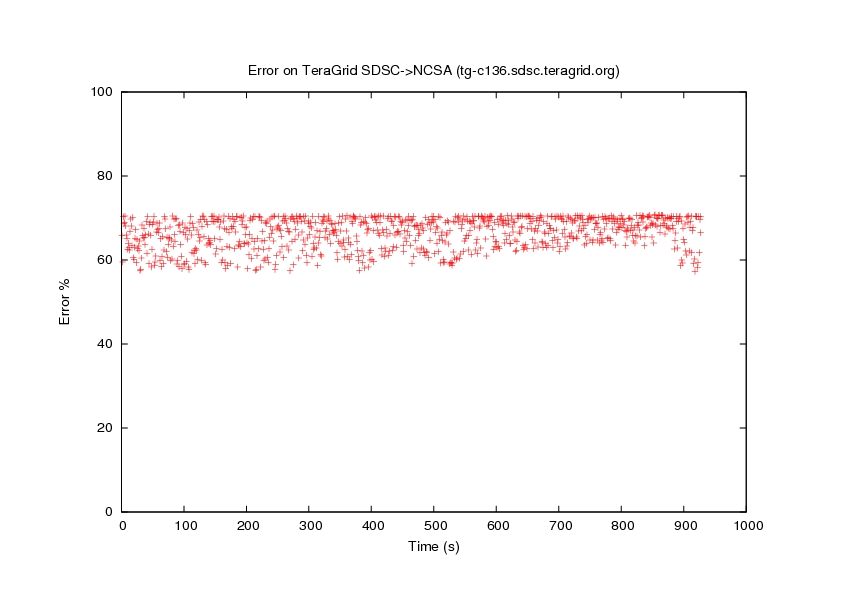

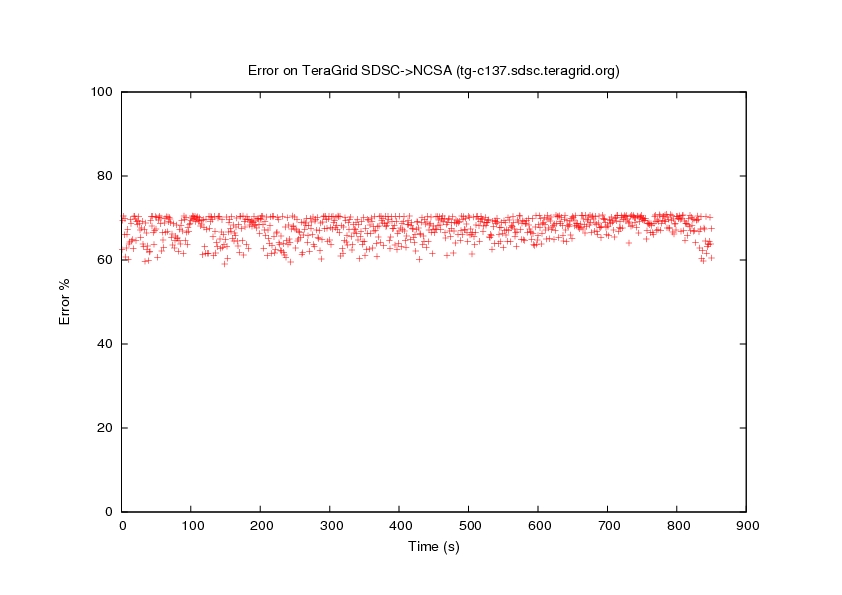

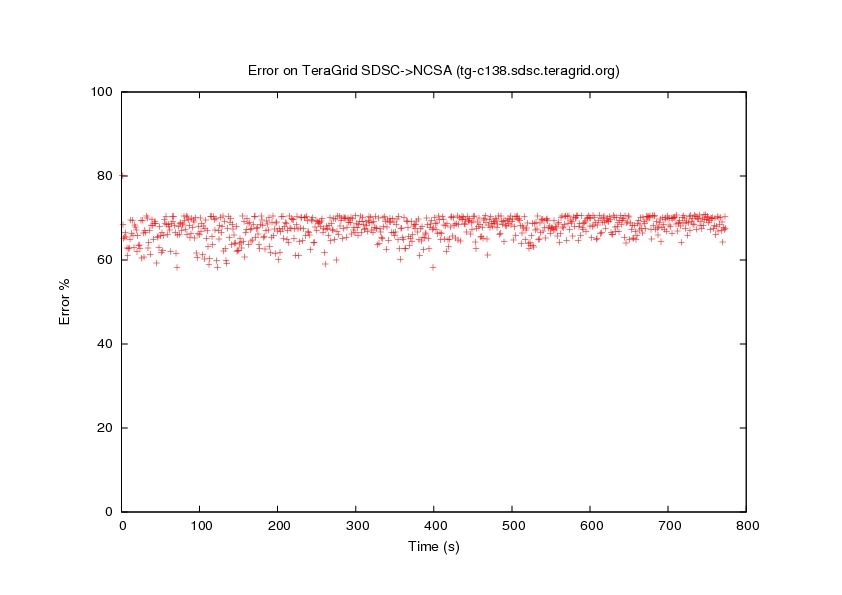

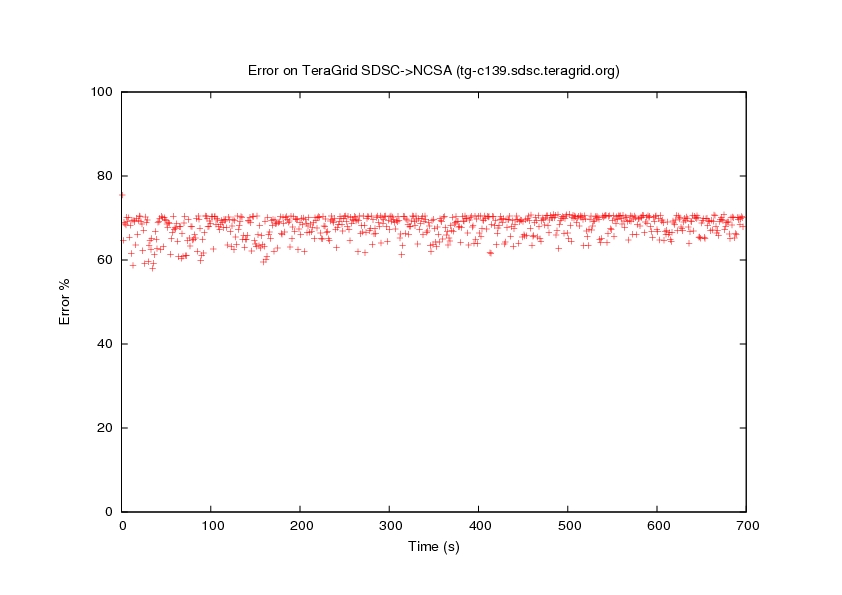

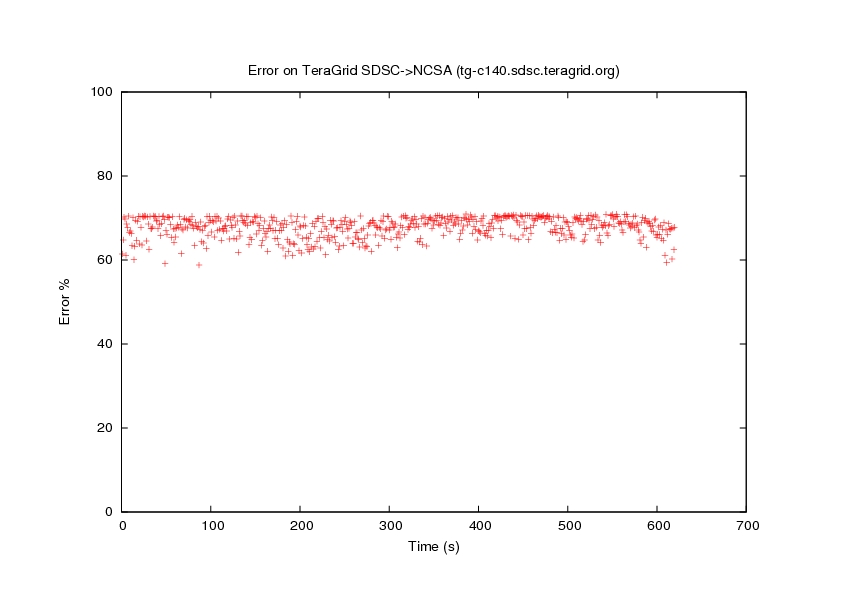

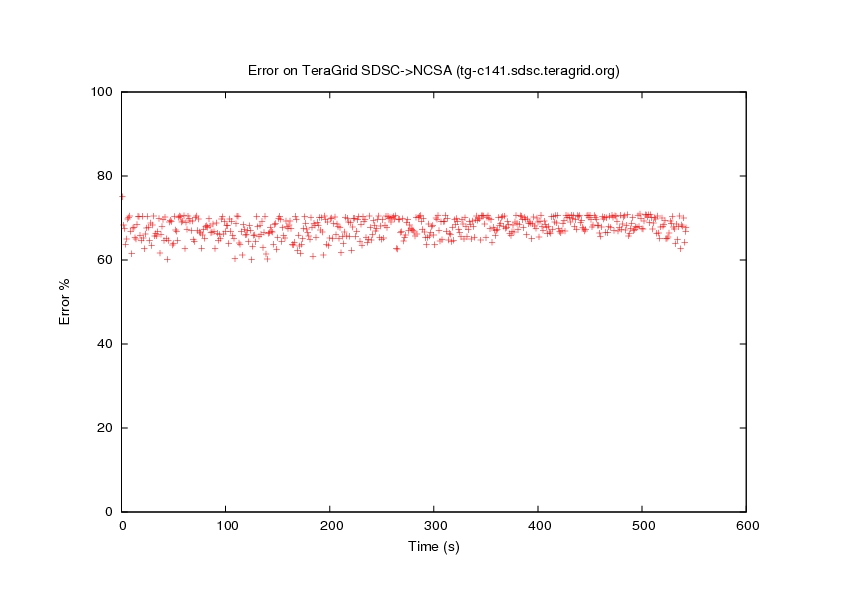

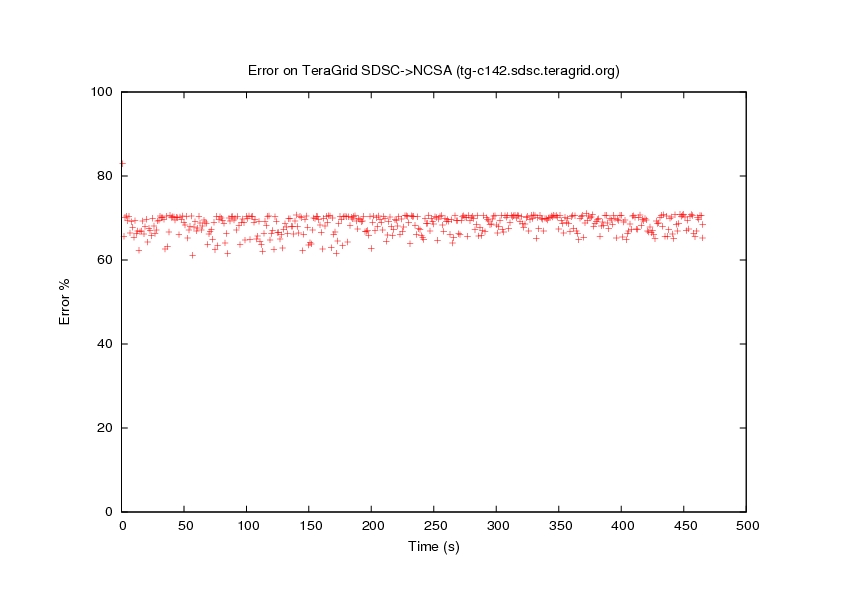

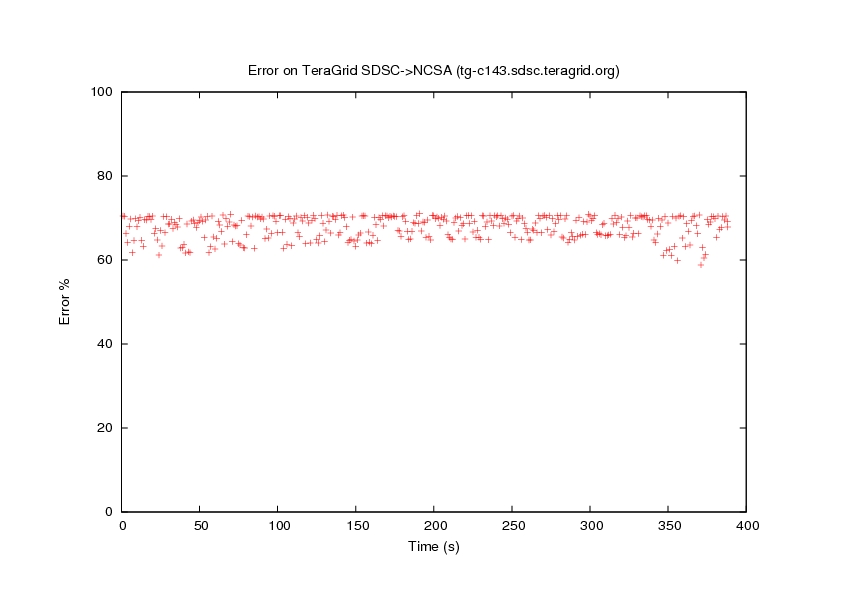

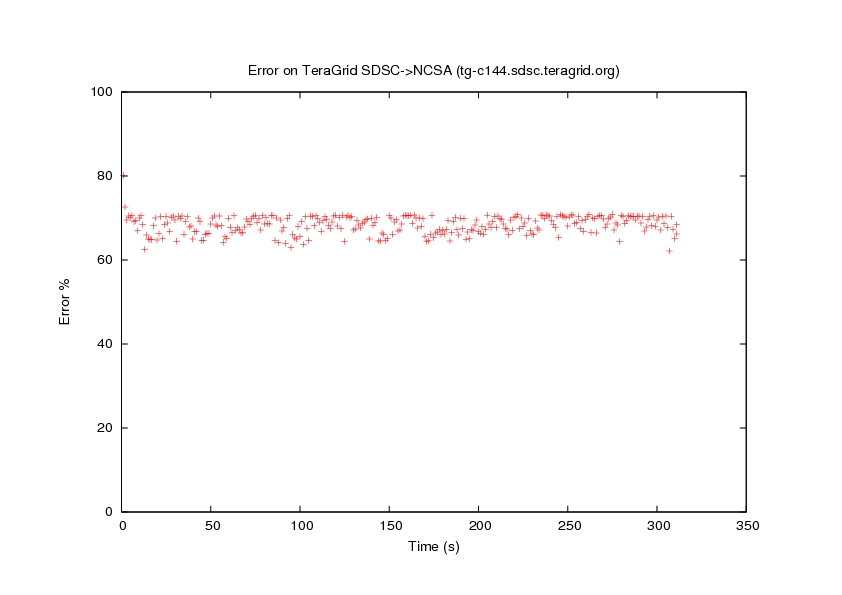

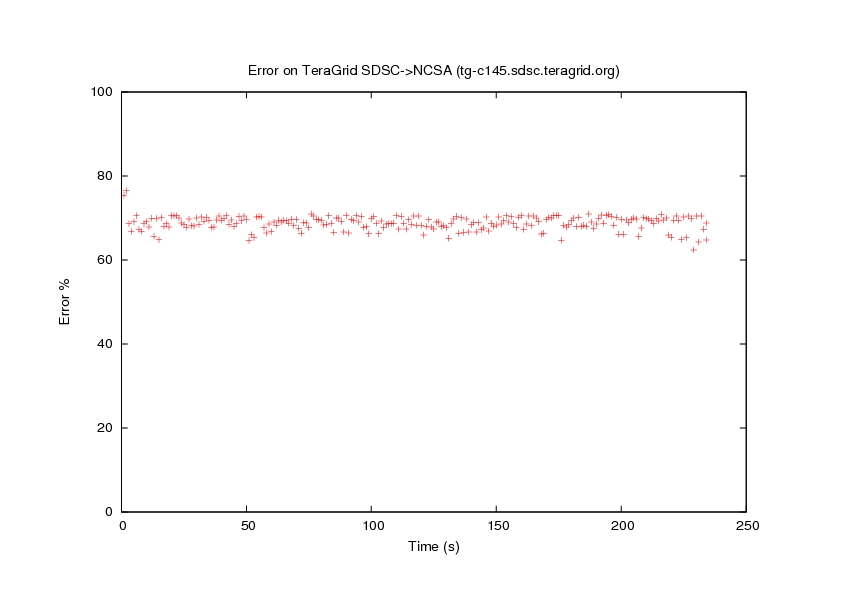

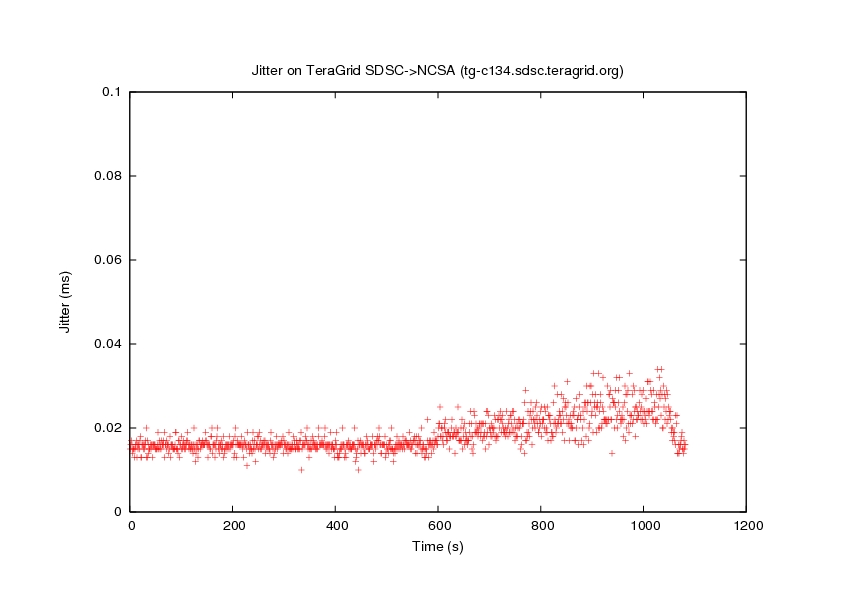

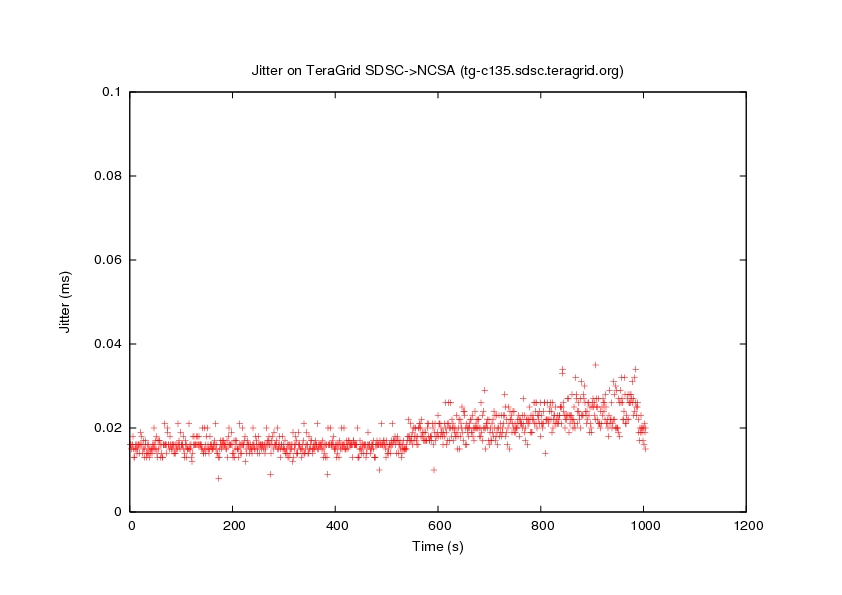

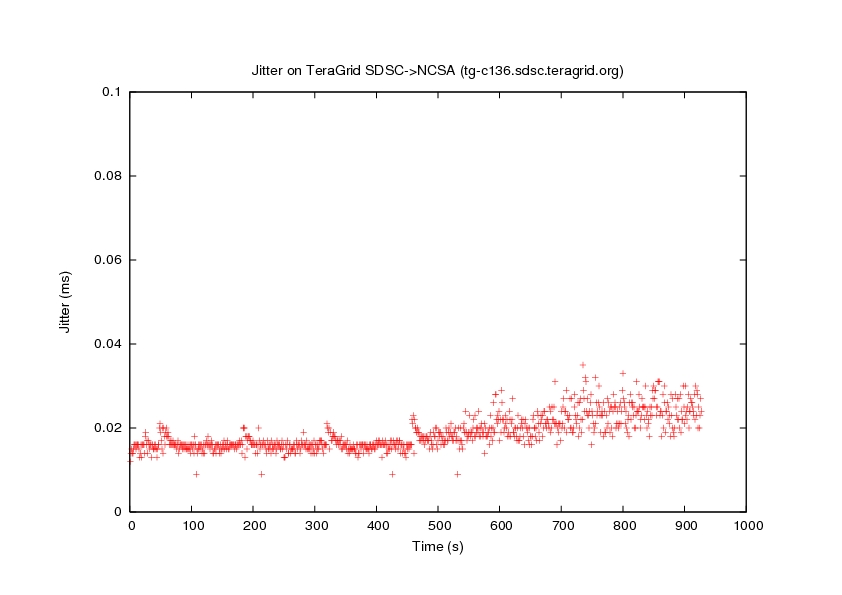

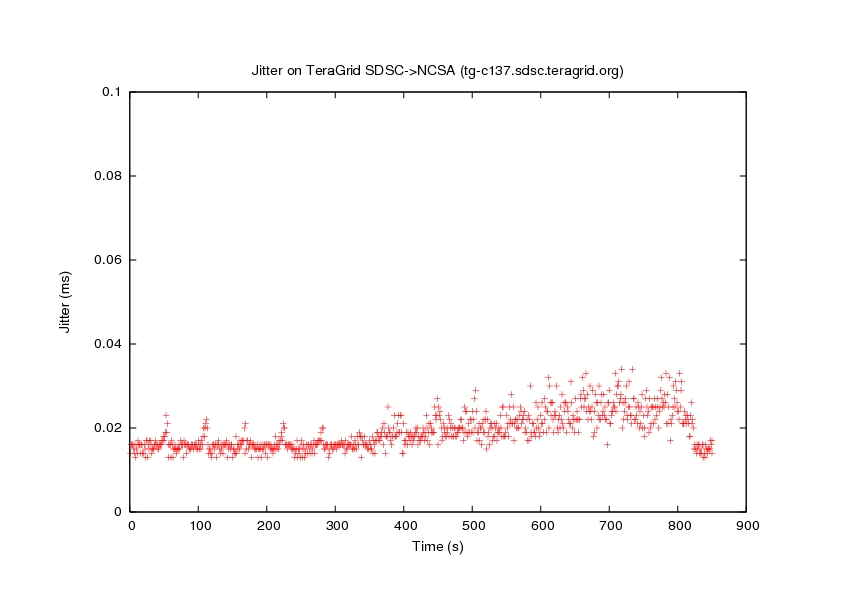

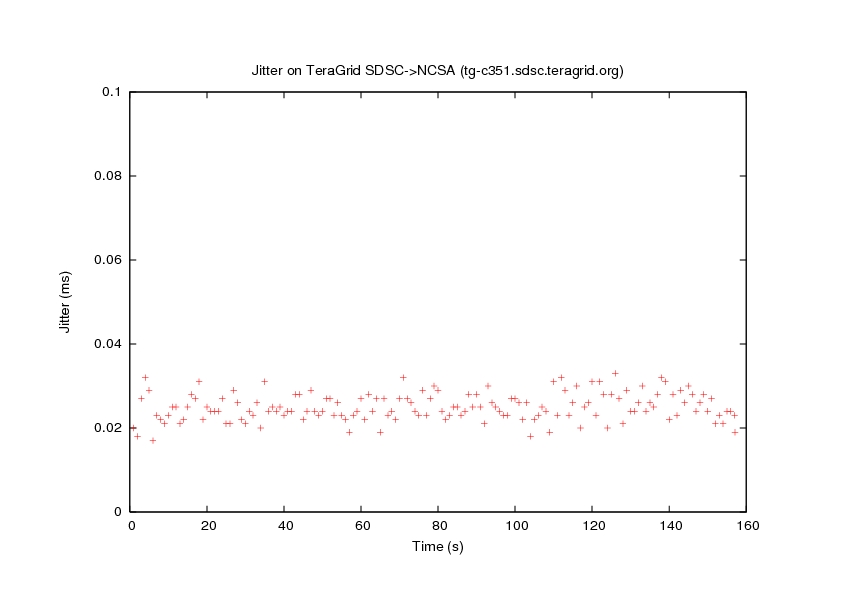

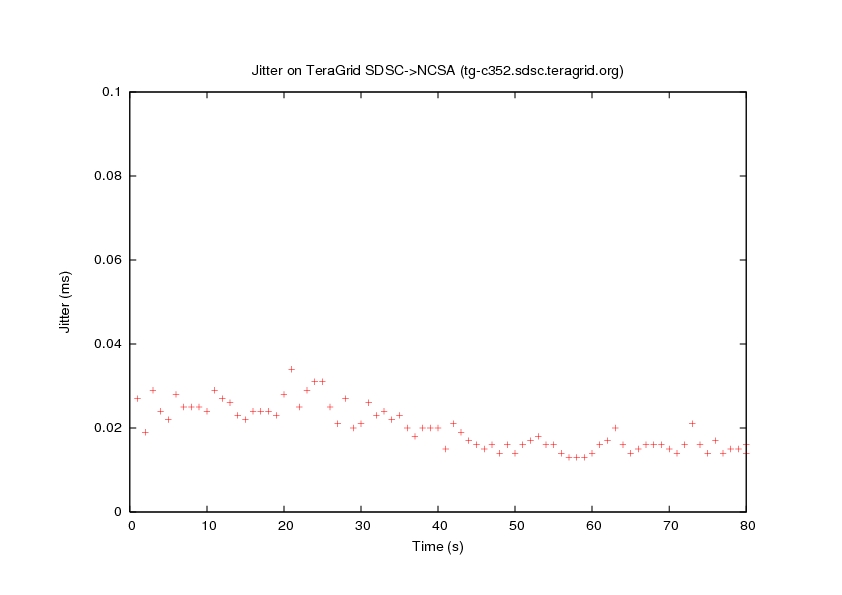

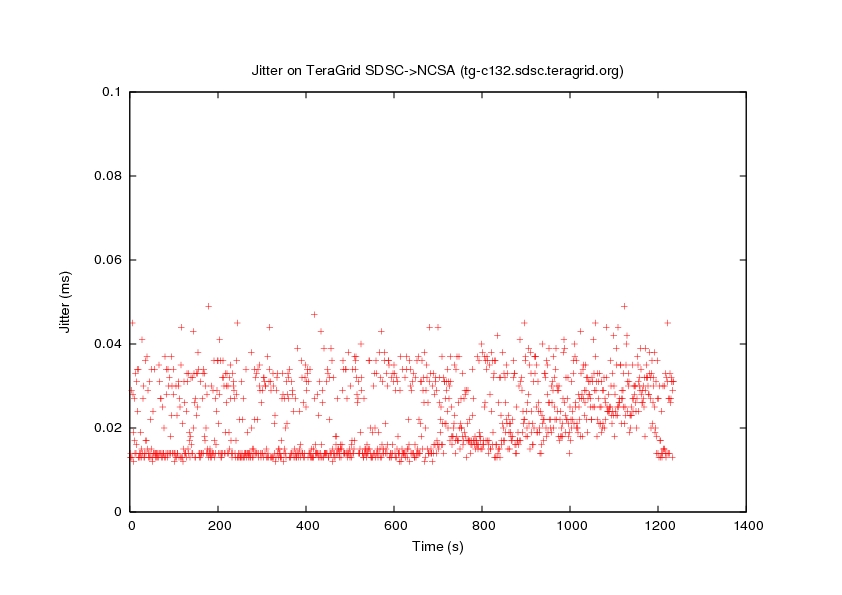

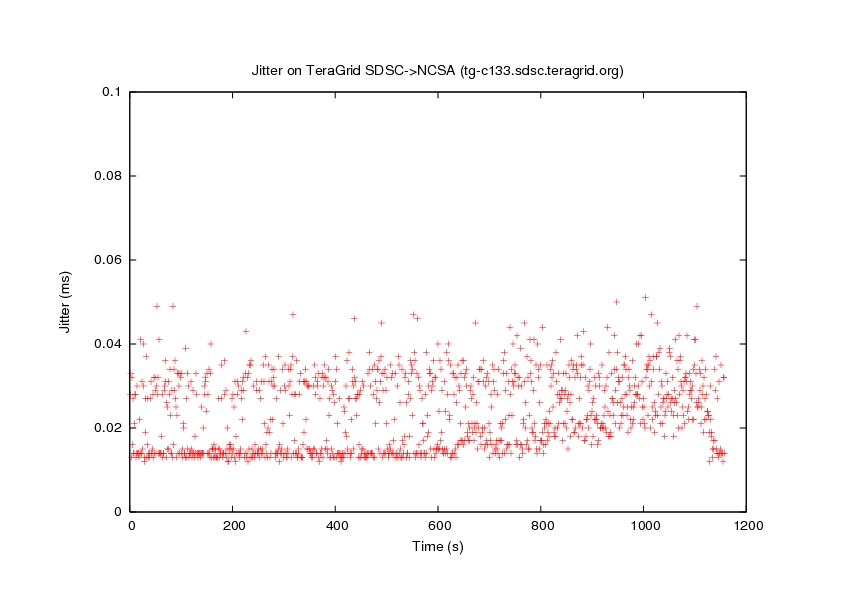

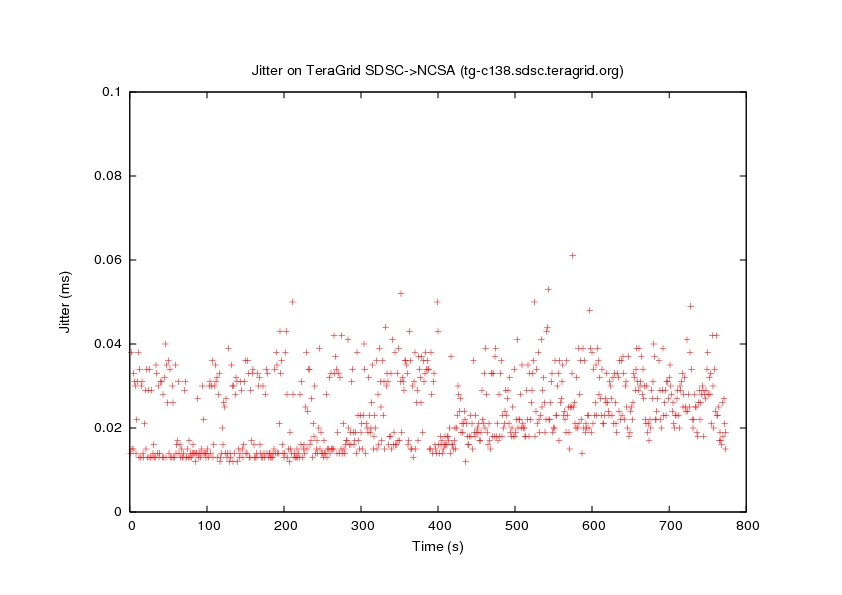

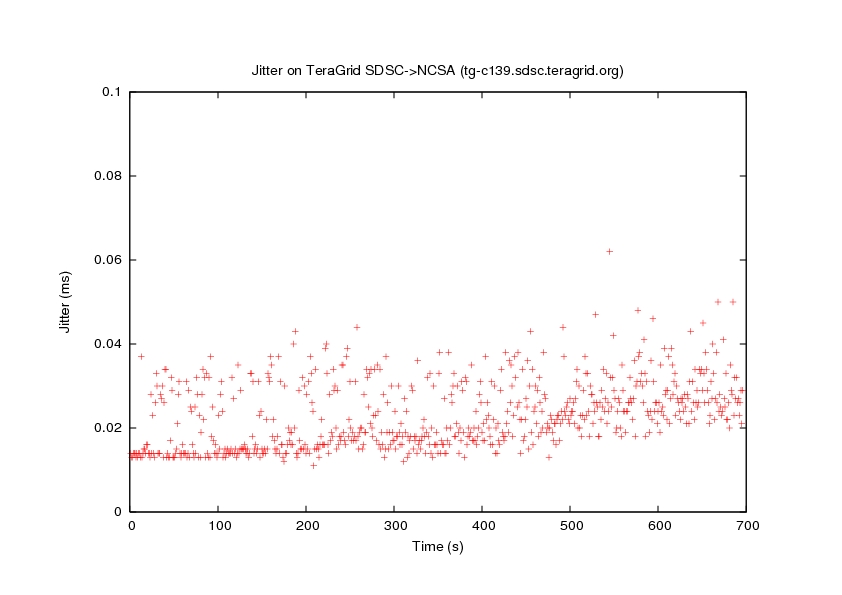

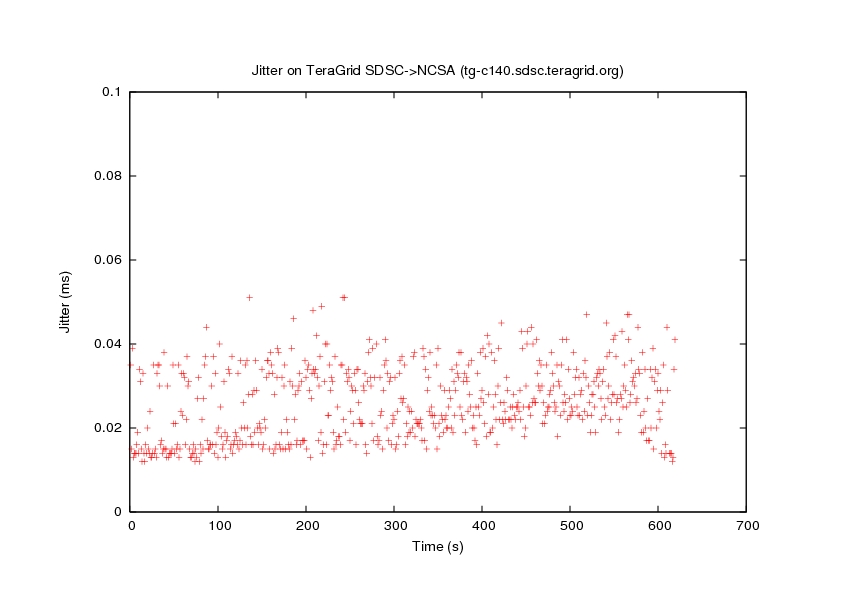

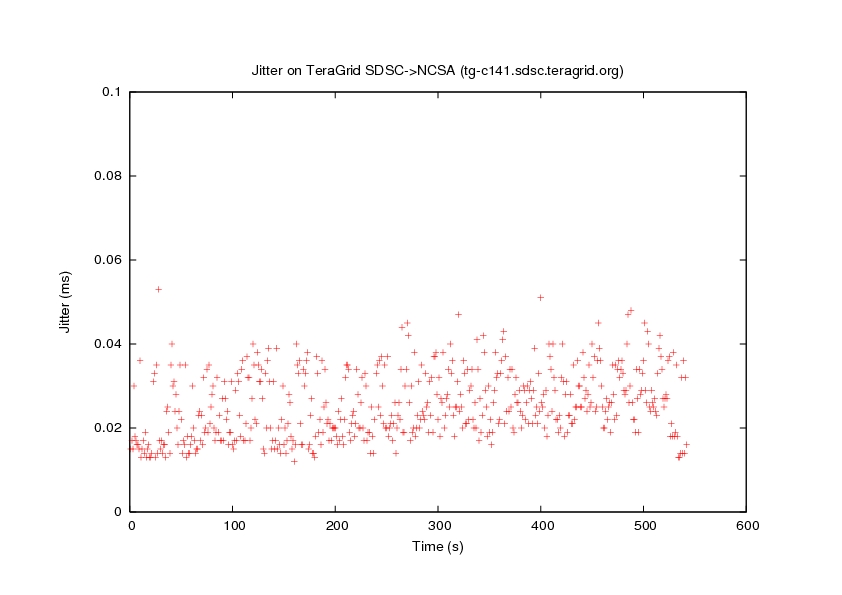

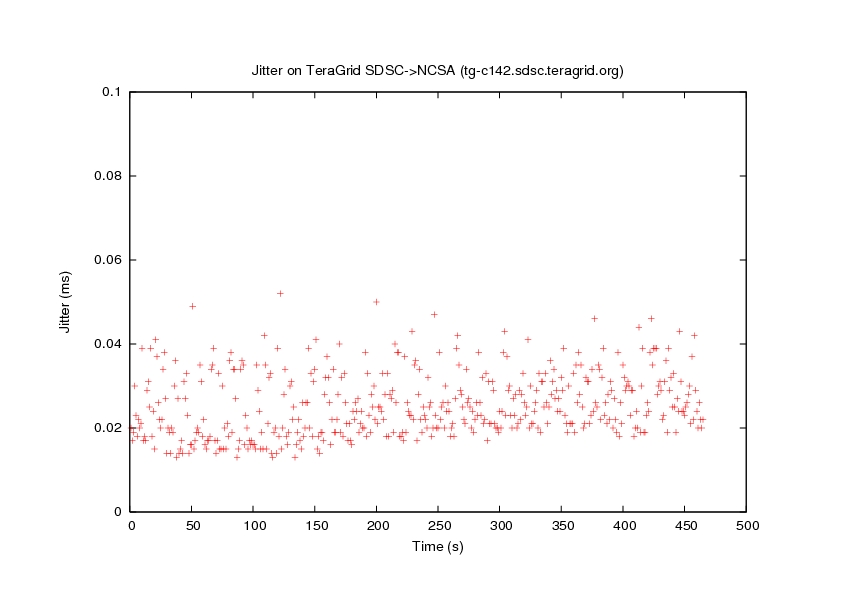

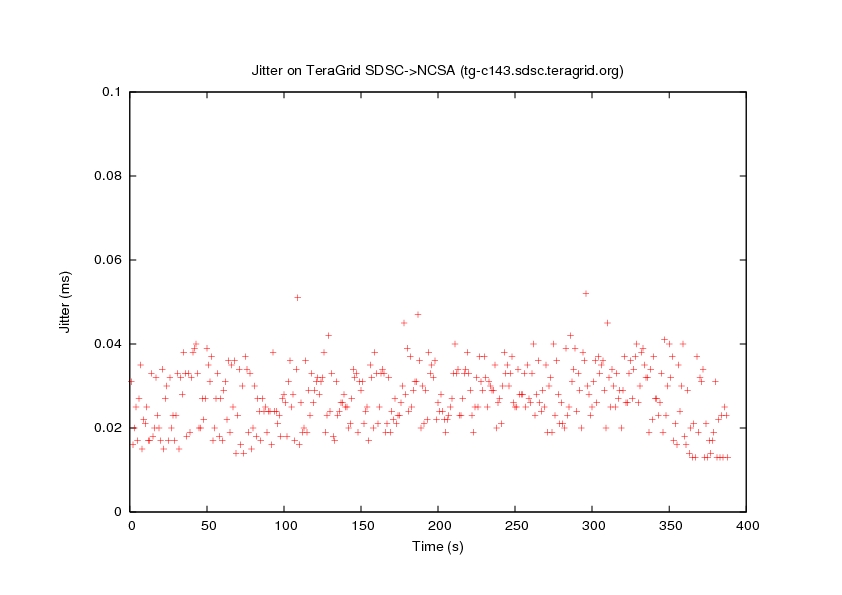

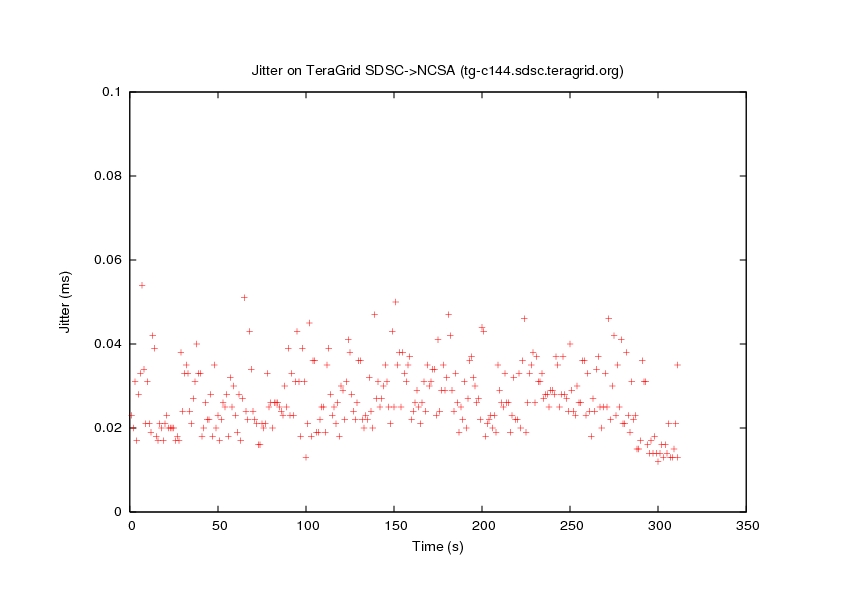

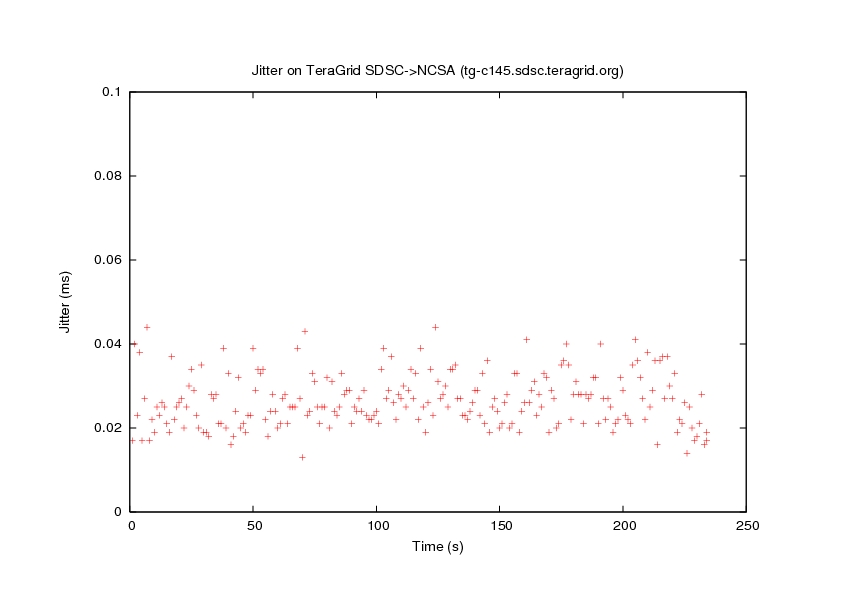

The experimental study was run between two TeraGrid sites on multiple dates in January-March 2007. TeraGrid is a fully operational, nationwide collection of supercomputers and high-performance clusters, interconnected by networks running at more than 10 Gbps.

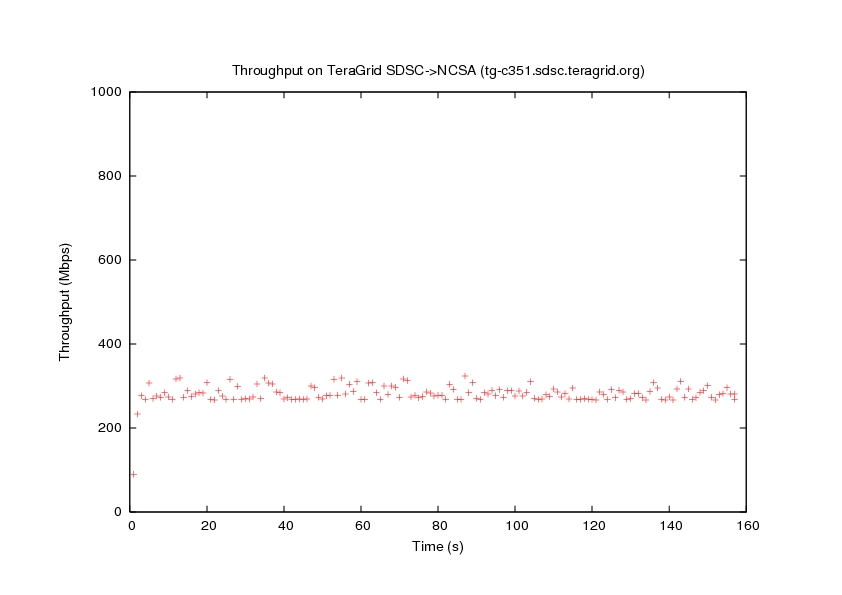

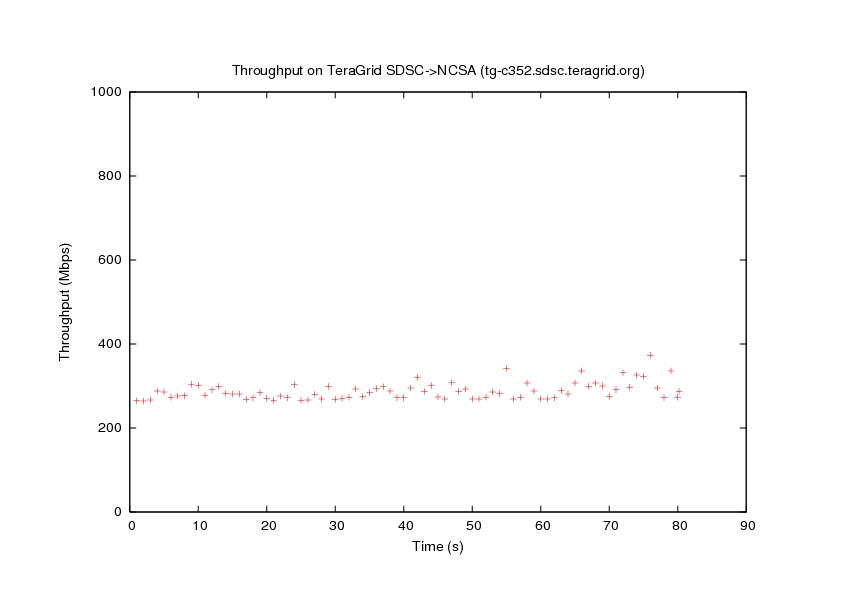

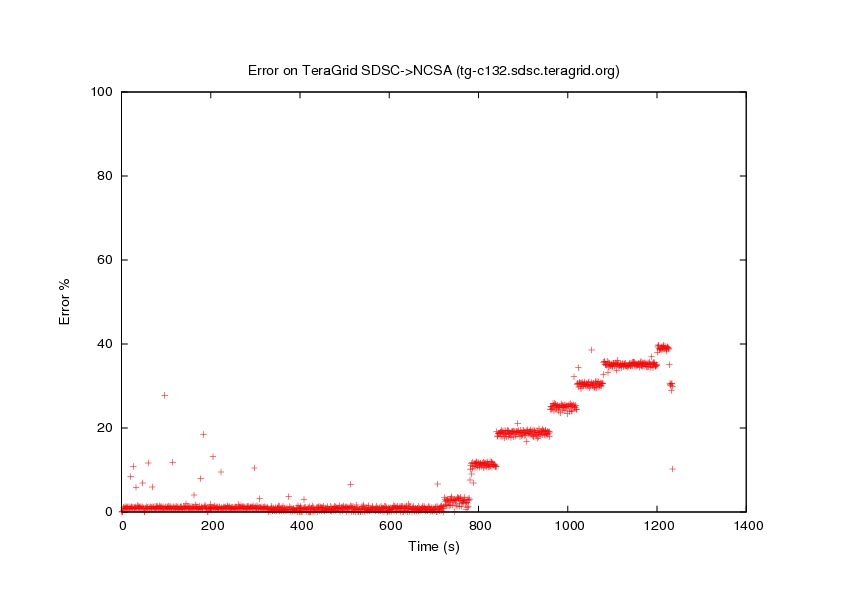

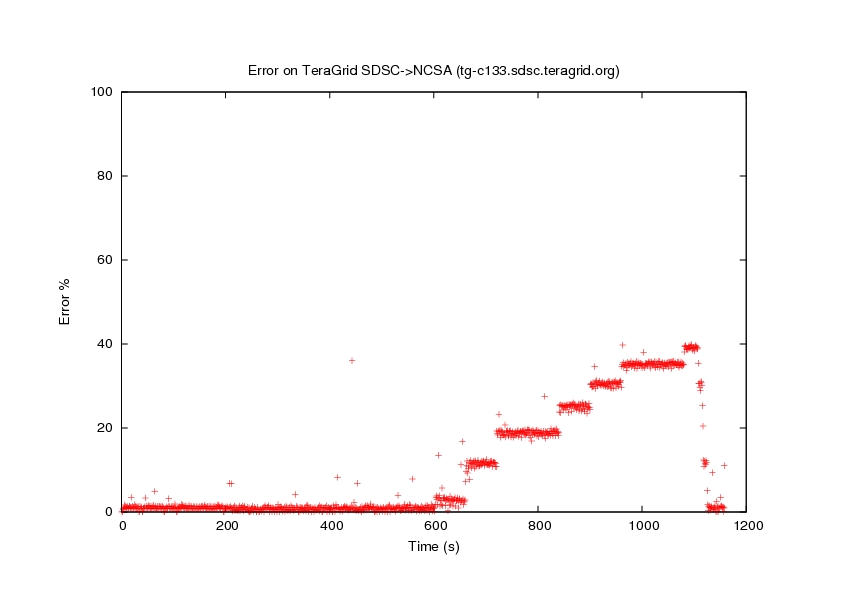

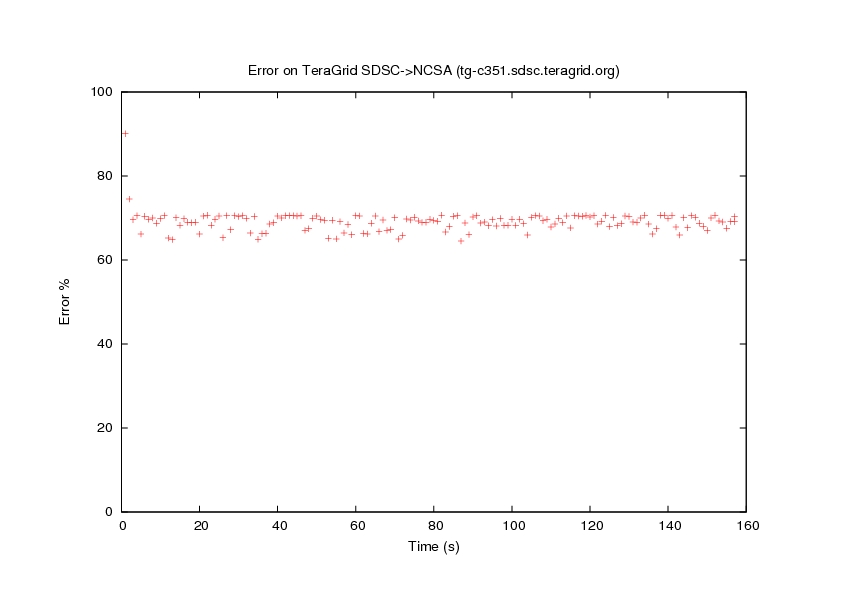

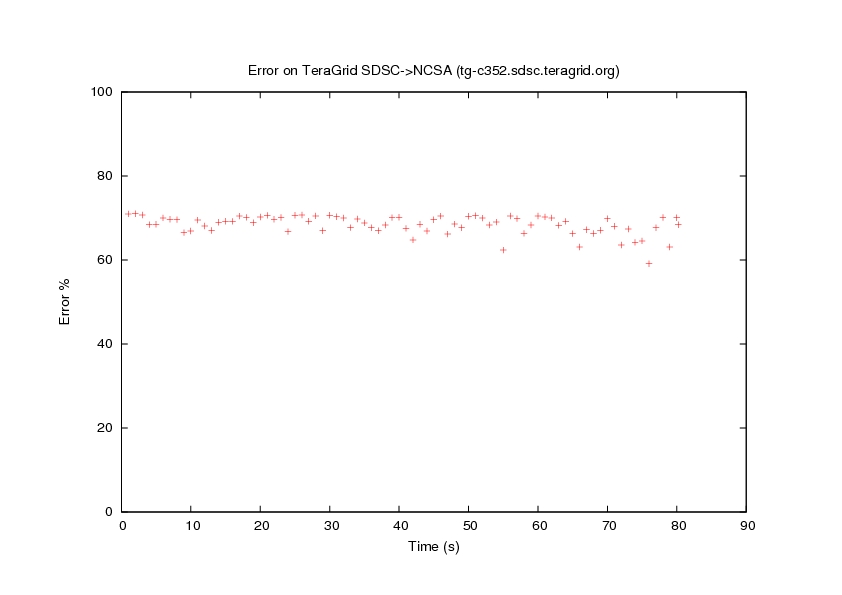

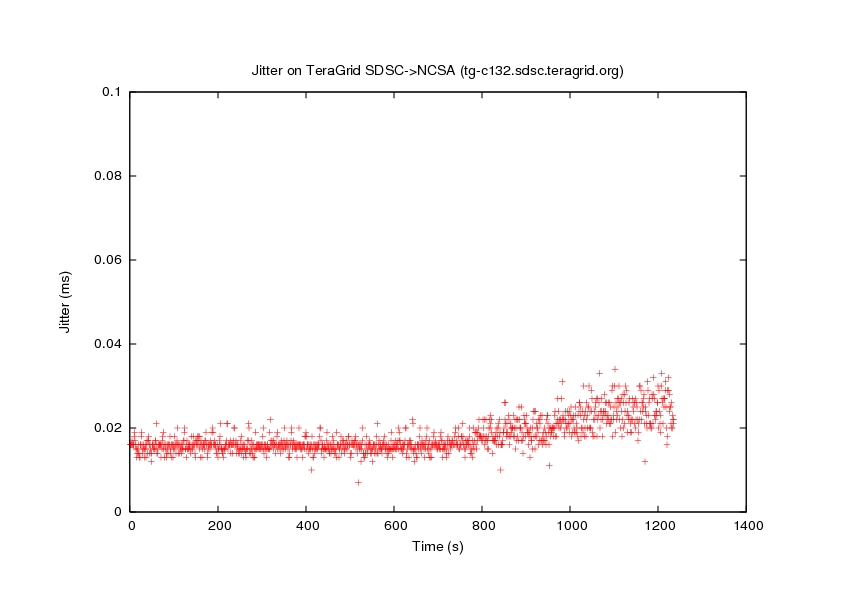

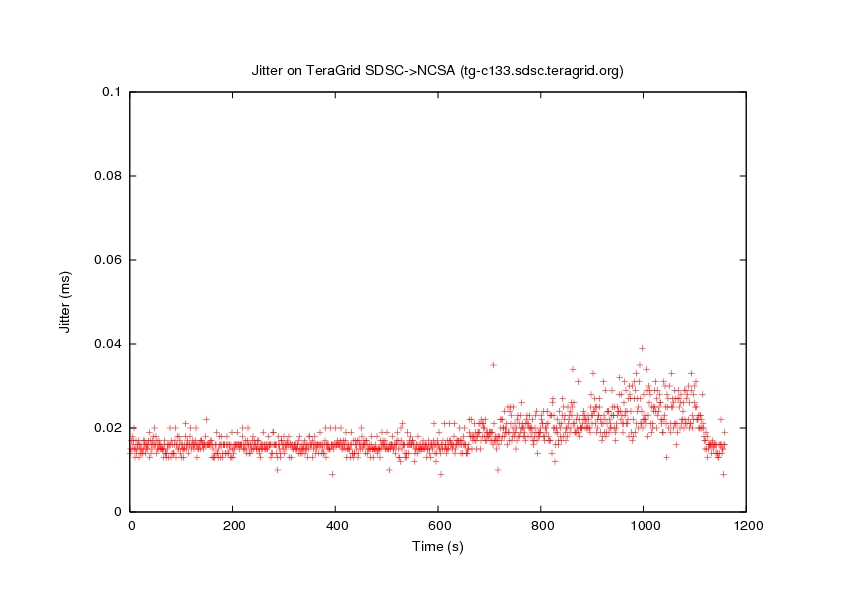

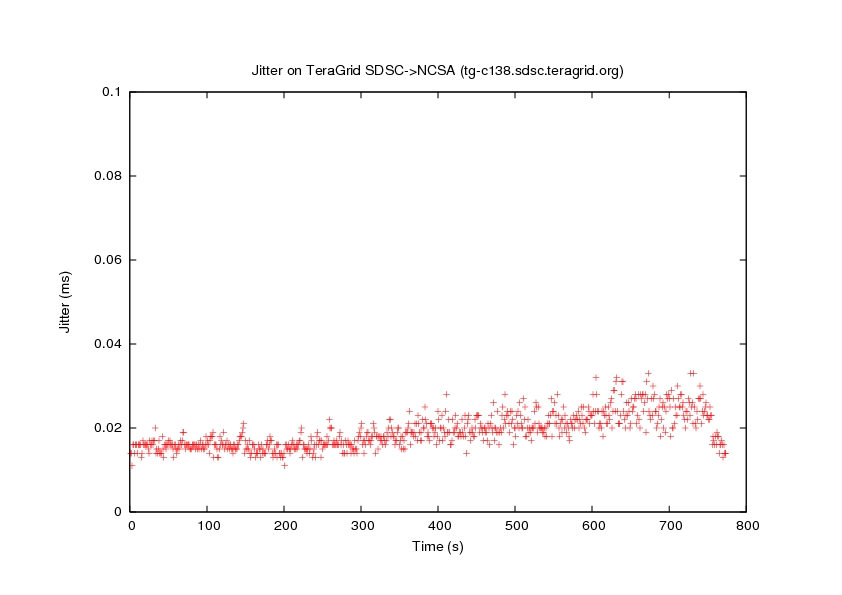

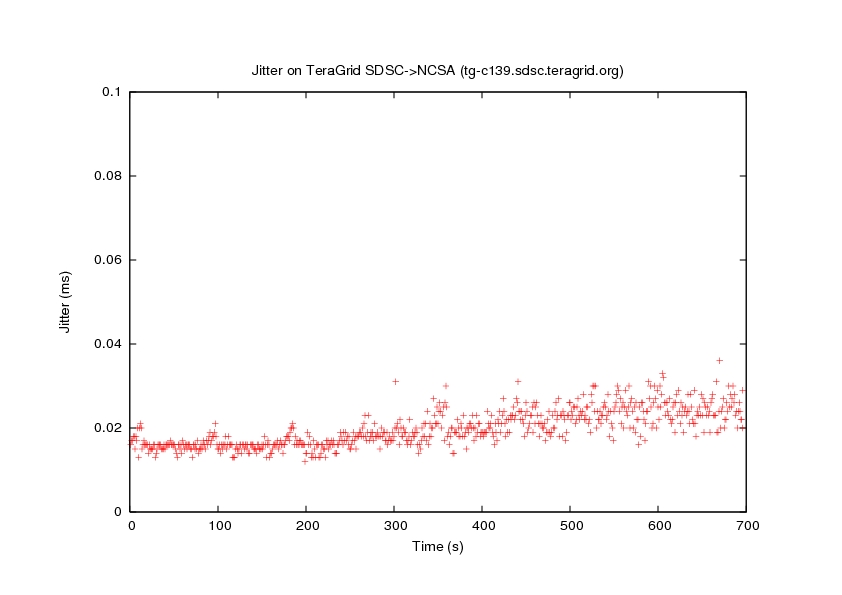

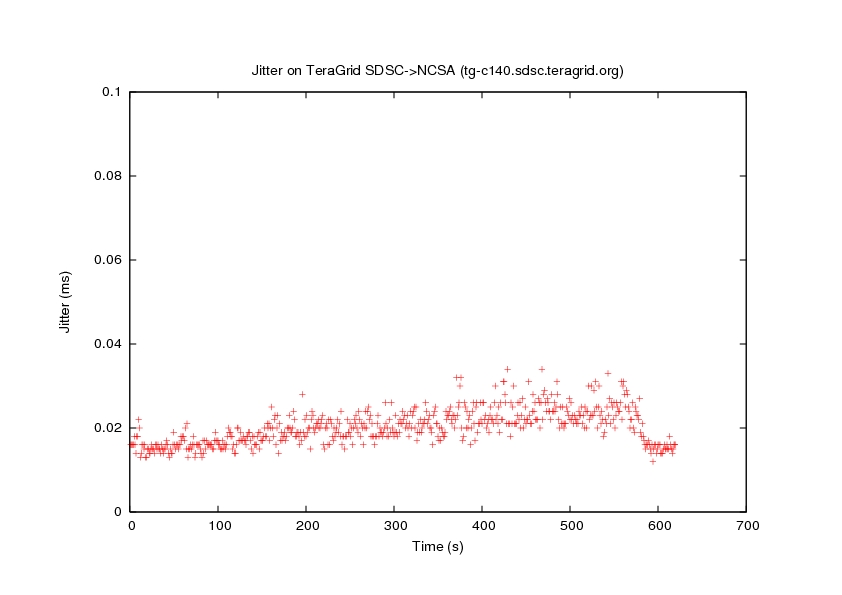

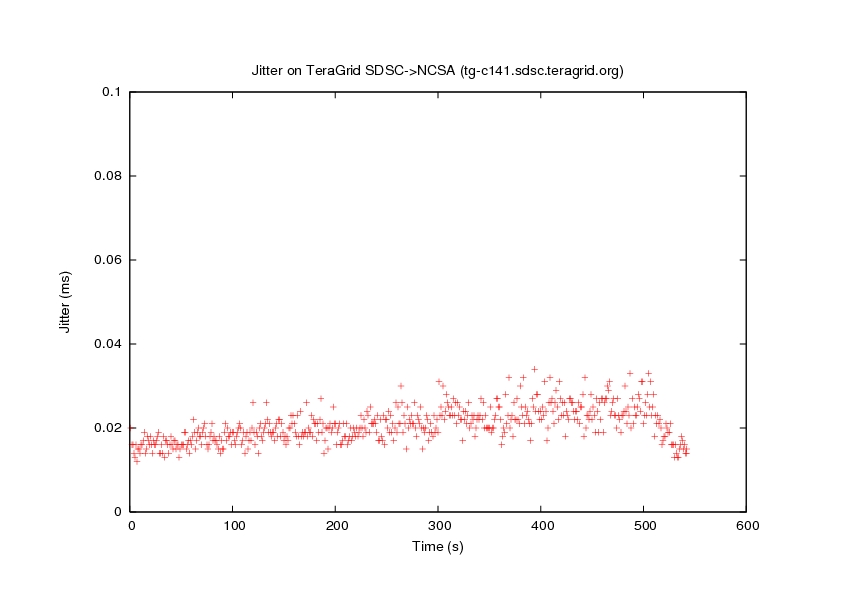

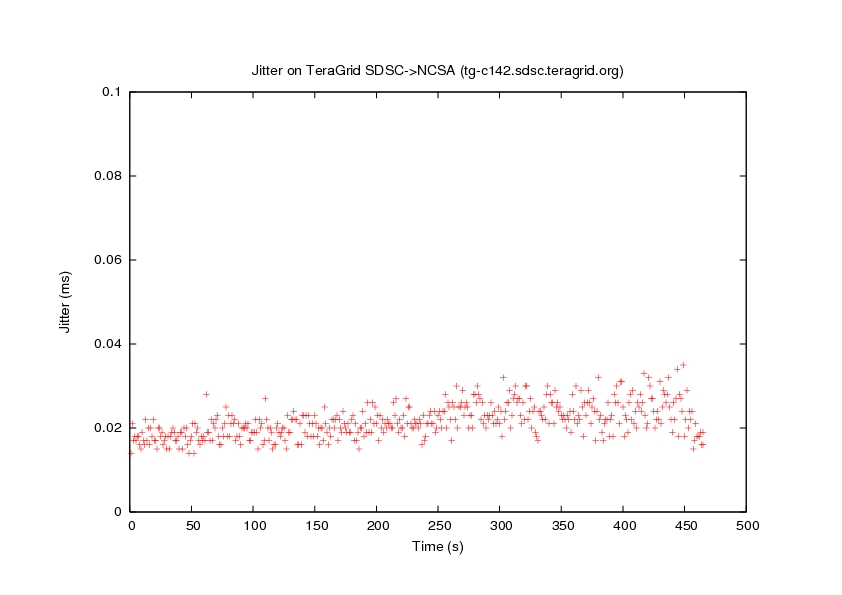

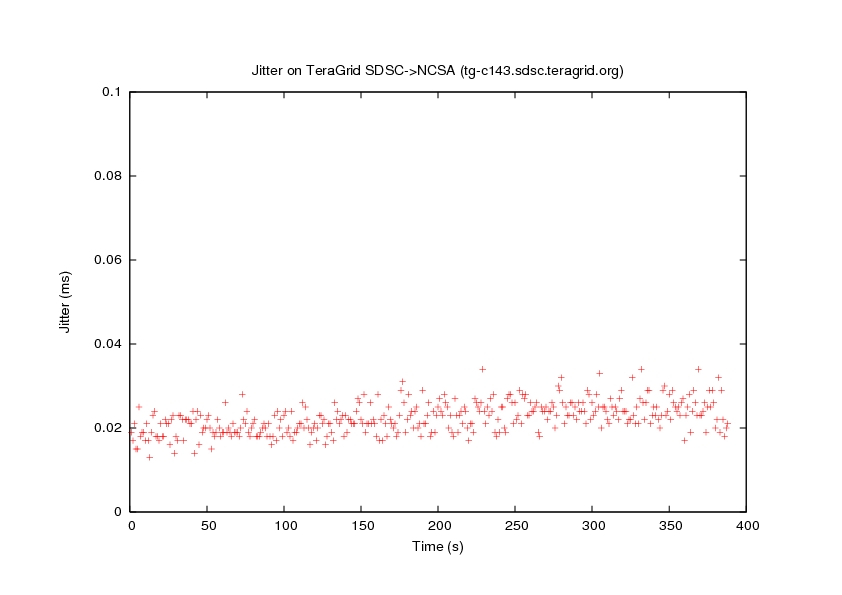

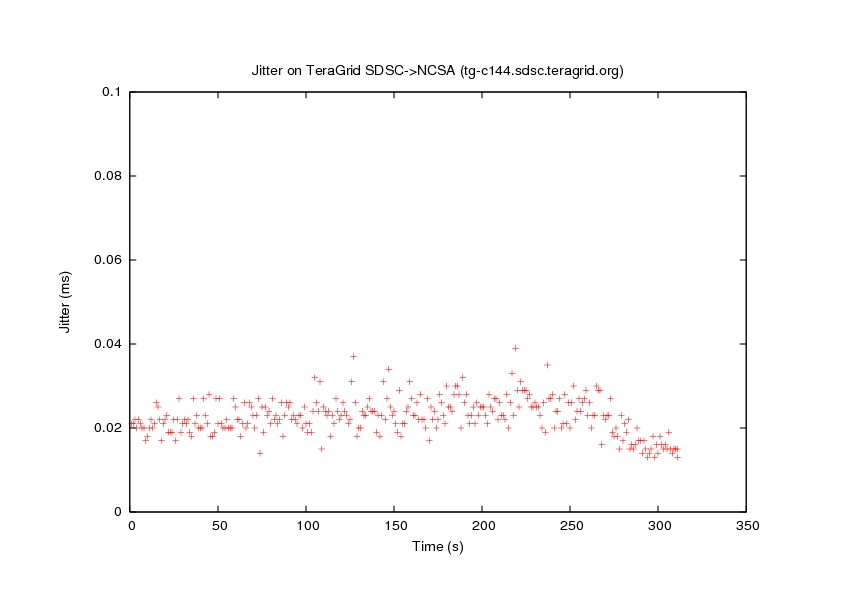

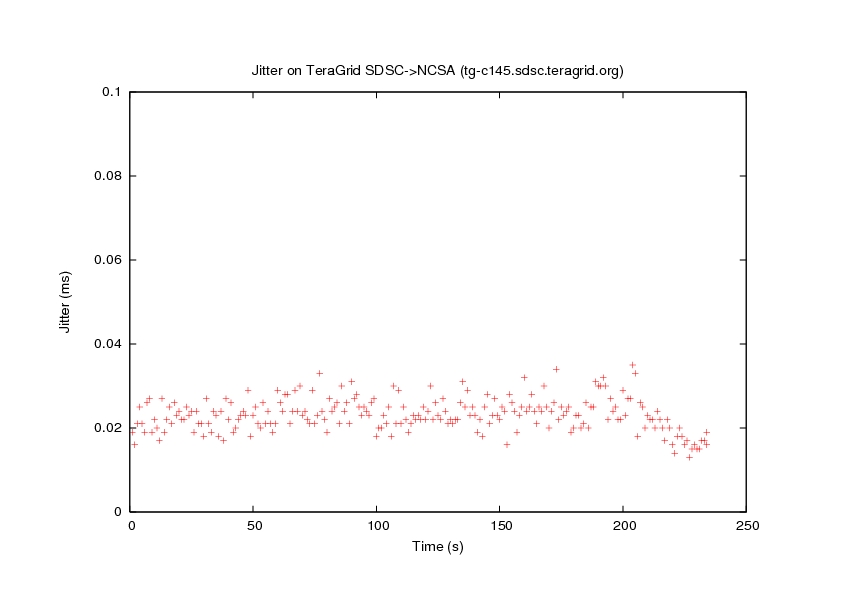

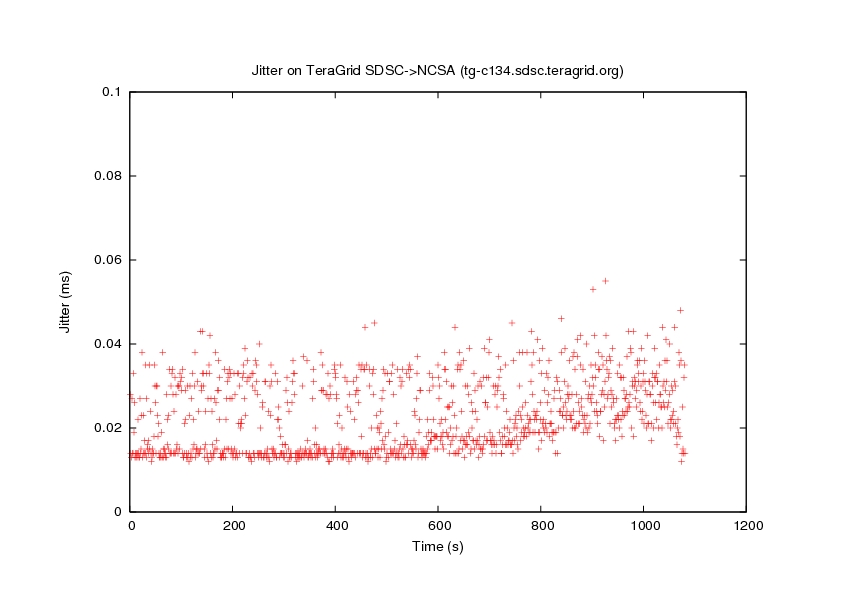

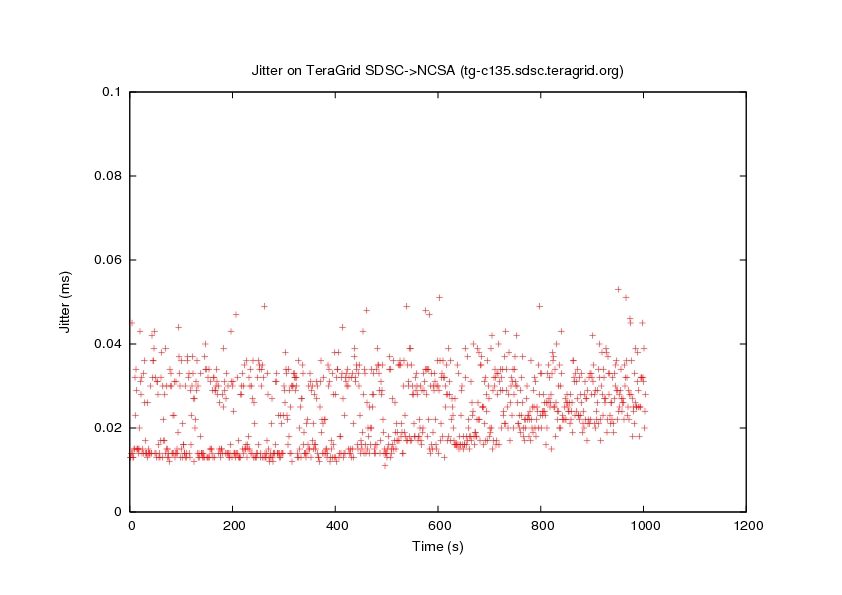

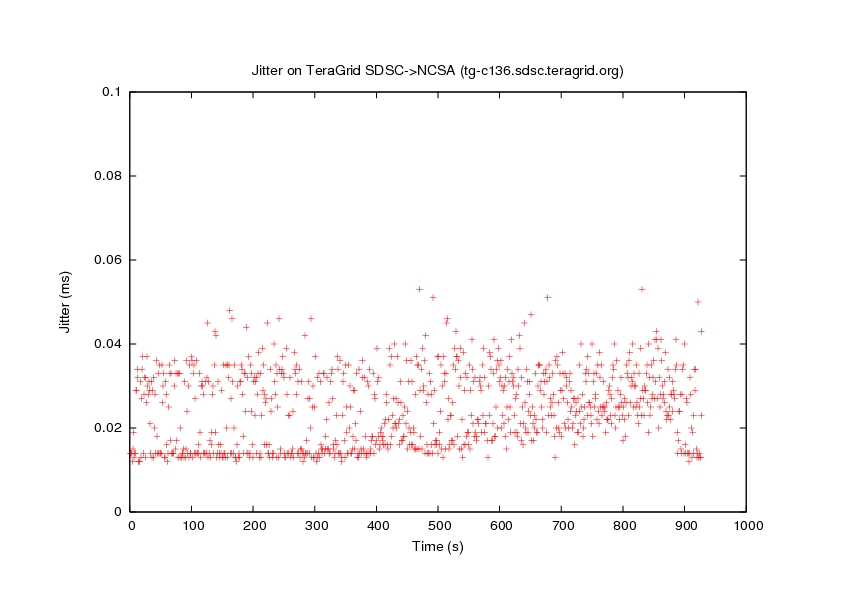

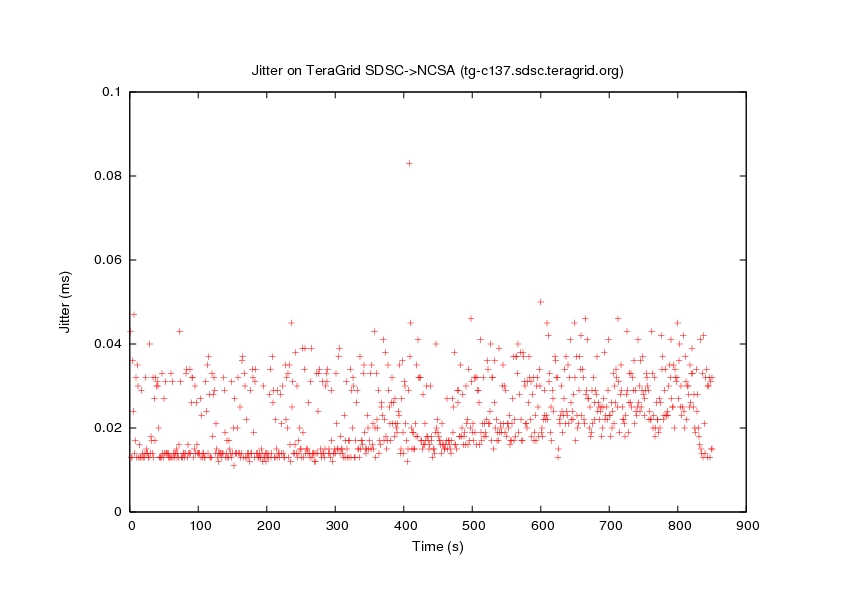

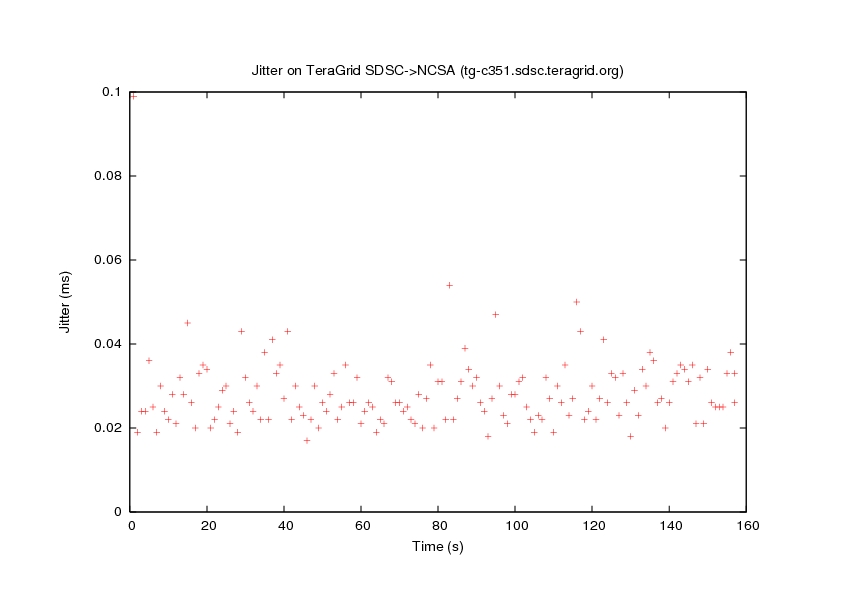

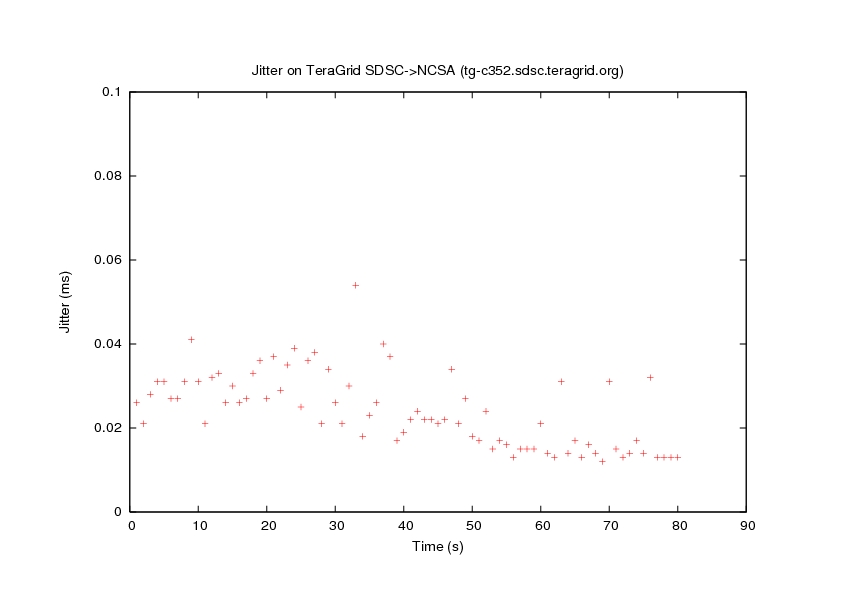

The two clusters chosen for our experiments were hosted at the San Diego Supercomputer Center (SDSC) in California and the National Center for Supercomputing Applications (NCSA) in Illinois. The two sites are roughly 1679 miles (2703 km) apart. During the experiments we measured an average RTT of around 60 ms.

The experiments were run with between 2 and 16 nodes at each site. Each node is an Intel Xeon machine with at least 4 GB of RAM, 1 Gbps Ethernet, and a 4 Gbps Myrinet connection (the latter is used by the cluster-internal storage area network), and each was dedicated to the experiment. The nodes at each site are interconnected with a Cisco [[big honking thing - check]] with an internal switching capacity of [[verify]] Gbps. Because the nodes are switched, the inter-cluster cross traffic from other users should be minimal.
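To put the 60 ms RTT and the 1 Gbps per-node Ethernet links in perspective, here is a rough back-of-the-envelope sketch in Python. It computes the bandwidth-delay product of a single node-to-node flow and a lower bound on the RTT implied by the 2703 km distance. The function names are ours, and the propagation speed in fiber (about 200,000 km/s, roughly two-thirds of the speed of light in vacuum) is an assumption, not something we measured.

```python
# Back-of-the-envelope numbers for one node-to-node flow.
# RTT, distance and link speed come from the text above;
# FIBER_KM_PER_S is an assumed typical value, not a measurement.

RTT_S = 0.060            # measured average round-trip time (about 60 ms)
LINK_BPS = 1e9           # per-node Ethernet speed (1 Gbps)
DISTANCE_KM = 2703       # approximate SDSC-NCSA distance
FIBER_KM_PER_S = 2.0e5   # assumed signal speed in optical fiber


def bdp_bytes(bandwidth_bps, rtt_s):
    """Bandwidth-delay product: bytes in flight needed to keep the link full."""
    return bandwidth_bps * rtt_s / 8


def min_rtt_s(distance_km, speed_km_per_s=FIBER_KM_PER_S):
    """Lower bound on RTT if the fiber ran the given distance with no detours."""
    return 2 * distance_km / speed_km_per_s


if __name__ == "__main__":
    print(f"BDP per flow: {bdp_bytes(LINK_BPS, RTT_S) / 1e6:.1f} MB")
    print(f"Propagation-only RTT bound: {min_rtt_s(DISTANCE_KM) * 1e3:.0f} ms")
```

Under these assumptions a single flow needs roughly 7.5 MB in flight to fill a 1 Gbps link, and the propagation-only bound comes out to about 27 ms. The gap between that bound and the measured 60 ms is plausible given that the actual path is longer than the point-to-point distance and passes through several routers.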

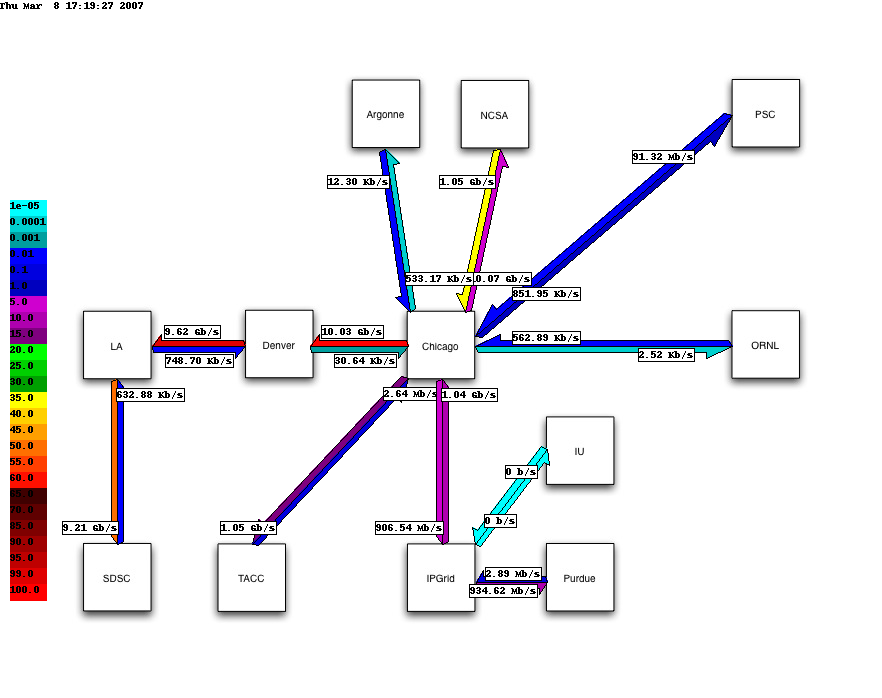

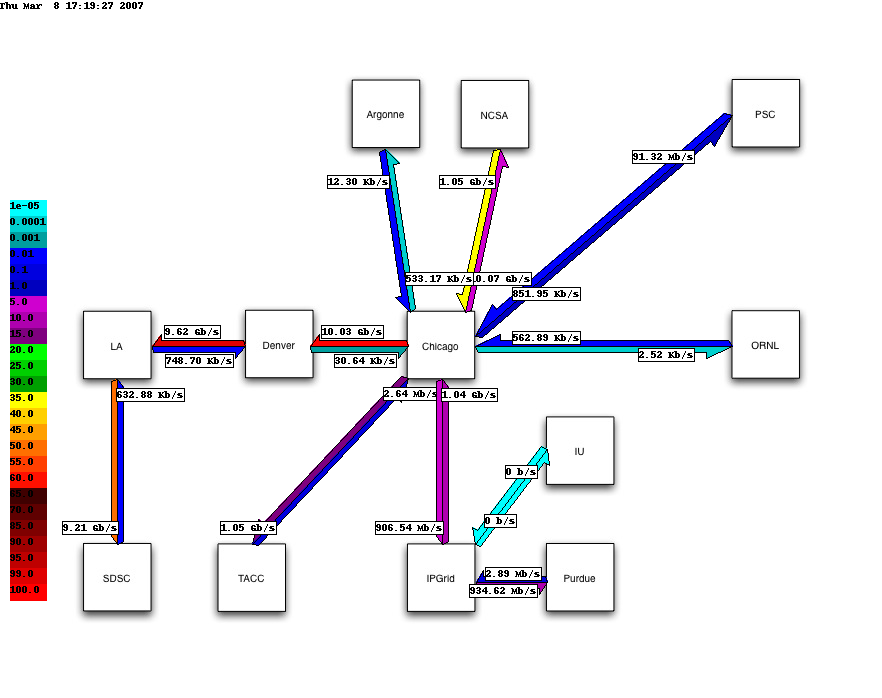

The following is a snapshot of the network backbone (including the network throughput) connecting the SDSC and NCSA sites during one of our experiments. The network path is: SDSC->LA->Denver->Chicago->NCSA.

For traffic generation I had to hack iperf version 2 slightly to record trains of losses (runs of consecutive lost packets) on the link. I used two main traffic types: Parallel and Single Receiver. In the Parallel setup I paired a single sender node at SDSC with a single receiver node at NCSA, and then varied the number of pairs. In the Single Receiver setup I picked a single receiver node at NCSA, and then varied the number of sender nodes at SDSC connected to it. These two scenarios should capture the most common communication patterns between clusters of machines.
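As an illustration only, the two traffic patterns and the notion of a loss train can be sketched in a few lines of Python. This is not the modified iperf code: the host names are hypothetical, and `loss_trains` assumes each packet carries a sequence number and that the receiver logs the numbers it saw, which is our simplification of how such runs could be reconstructed.

```python
# Illustrative sketch: flow layouts for the two traffic types and
# loss-train extraction from received sequence numbers.
# Host names are hypothetical placeholders.

def parallel_flows(senders, receivers, pairs):
    """Parallel: sender i talks only to receiver i, for the first `pairs` nodes."""
    return list(zip(senders[:pairs], receivers[:pairs]))


def single_receiver_flows(senders, receiver, n_senders):
    """Single Receiver: the first `n_senders` senders all target one receiver."""
    return [(s, receiver) for s in senders[:n_senders]]


def loss_trains(received_ids):
    """Return (first_lost_id, run_length) for each run of consecutive missing ids.

    Only gaps between the lowest and highest received id are detected.
    """
    ids = sorted(set(received_ids))
    trains = []
    for prev, cur in zip(ids, ids[1:]):
        gap = cur - prev - 1
        if gap > 0:
            trains.append((prev + 1, gap))
    return trains


if __name__ == "__main__":
    sdsc = [f"sdsc-node{i}" for i in range(16)]   # hypothetical names
    ncsa = [f"ncsa-node{i}" for i in range(16)]   # hypothetical names
    print(parallel_flows(sdsc, ncsa, 4))
    print(single_receiver_flows(sdsc, ncsa[0], 4))
    print(loss_trains([0, 1, 2, 5, 6, 9]))
```

In the last call, packets 3-4 and 7-8 are missing, so the function reports two trains of length 2. Recording run lengths rather than a single loss percentage is what lets bursty loss be told apart from isolated drops.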

Last update: 14th March 2007