In this assignment you will implement Monte Carlo direct illumination in a ray tracing renderer. The assignment is to be done in pairs and is due in two weeks, on March 14.

The framework I am handing out is a very minimal Java ray tracer (just a few hours' coding away from the framework we use for assignment 1 in CS465). It supports illumination from a background and from Lambertian emitting surfaces. It renders direct illumination only and does so using uniform sampling (with respect to projected solid angle) over the hemisphere at the shading point.
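For reference, here is a minimal sketch of what sampling uniformly with respect to projected solid angle can look like. It is not taken from the framework: the class and method names are hypothetical, and directions are assumed to live in a local frame whose z axis is the surface normal. The idea is to pick a point uniformly on the unit disk and project it up onto the hemisphere, which gives a density of cos(theta)/pi with respect to ordinary solid angle.

```java
final class ProjectedSolidAngleSampling {

    /**
     * Maps a point (u1, u2) in the unit square to a unit direction on the
     * hemisphere around +z. The point is first placed uniformly on the unit
     * disk and then lifted onto the hemisphere.
     */
    static double[] sample(double u1, double u2) {
        double r = Math.sqrt(u1);               // uniform in area on the disk
        double phi = 2.0 * Math.PI * u2;
        double x = r * Math.cos(phi);
        double y = r * Math.sin(phi);
        double z = Math.sqrt(Math.max(0.0, 1.0 - u1)); // lift onto the hemisphere
        return new double[] { x, y, z };
    }

    /** Density of the direction w with respect to solid angle: cos(theta) / pi. */
    static double pdfSolidAngle(double[] w) {
        return w[2] > 0.0 ? w[2] / Math.PI : 0.0;
    }
}
```

With this density the cosine factor in the reflection integral cancels against the PDF, so for a Lambertian surface each sample's weight is just the reflectance times the incoming radiance.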

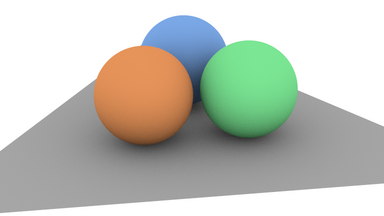

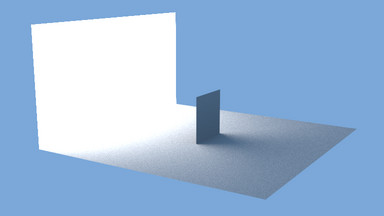

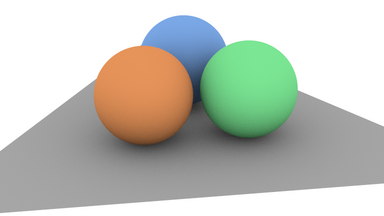

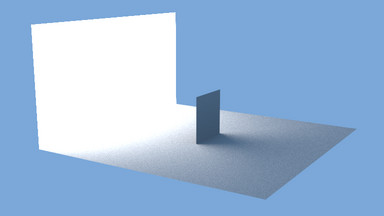

This sampling scheme is pretty inefficient for most scenes, but it does work. Here are two images generated from the test scenes three-spheres.xml and perp-lum.xml with 3600 samples per pixel:

At this (absurd) number of samples per pixel, the spheres image is pretty nicely converged but the other one still has noticeable noise.

One notable omission from the ray tracer is any kind of acceleration structure. This means you don't want to render any scenes with more than a dozen or so primitives. It would be a simple matter to retrofit an acceleration structure that someone wrote for assignment 5 in CS465 onto this renderer, and if you do that I'd encourage you to share it with the others in the class.

Your assignment is to extend the ray tracer in four ways:

Adding this, without thinking about sampling, is a simple engineering exercise: you read in the map, and then do a texture lookup whenever a ray hits the background. Nearest-neighbor interpolation is fine—the point is illumination, not seeing the background directly.

The standard source for these maps is Paul Debevec's collection of light probe images. Probably the easiest to work with are the .pfm images with the cross-cube layout.

Using the map as a probability distribution from which to draw samples is not entirely straightforward. We'll talk some more about the options in class.
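One possible option, sketched below, is to treat the map as a discrete distribution over its pixels: give each pixel a non-negative weight, build a cumulative table, and invert it with a binary search. This is only one of several approaches and not necessarily the one we will settle on. The class name and the flattened weight array are hypothetical, and the choice of weight (for example luminance times the solid angle the pixel subtends) is left to the caller.

```java
final class DiscretePixelDistribution {
    private final double[] pmf; // normalized probability of each pixel
    private final double[] cdf; // cdf[i] = pmf[0] + ... + pmf[i]

    DiscretePixelDistribution(double[] weights) {
        int n = weights.length;
        double total = 0.0;
        for (double w : weights) total += w;
        if (!(total > 0.0)) {
            throw new IllegalArgumentException("weights must have a positive sum");
        }
        pmf = new double[n];
        cdf = new double[n];
        double running = 0.0;
        for (int i = 0; i < n; i++) {
            pmf[i] = weights[i] / total;
            running += pmf[i];
            cdf[i] = running;
        }
    }

    /** Returns the first pixel index i with u < cdf[i], for u in [0, 1). */
    int sample(double u) {
        int lo = 0, hi = cdf.length - 1;
        while (lo < hi) {
            int mid = (lo + hi) >>> 1;
            if (u < cdf[mid]) hi = mid; else lo = mid + 1;
        }
        return lo;
    }

    /** Probability with which sample() returns pixel i. */
    double probability(int i) {
        return pmf[i];
    }
}
```

The value returned by `probability` is a discrete probability, not a density over directions. To use it in the estimator you would divide by the solid angle the chosen pixel subtends, assuming the direction is then picked uniformly within that pixel.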

Evaluating the microfacet model is old hat by now. Generating samples exactly according to the BRDF is not really possible; instead, generate samples by drawing normals at random from the microfacet distribution. You will have to figure out exactly what your PDF is, but this is not too hard.
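To make the PDF bookkeeping concrete, here is a rough sketch that assumes a Beckmann microfacet distribution with roughness `alpha`. That choice is an assumption for illustration only; if your model uses a different distribution, the sampling formula changes but the structure stays the same. All names are hypothetical, and vectors are in a local frame with z along the surface normal. The sketch draws a normal h with density D(h) cos(theta_h), reflects the outgoing direction about it, and converts the density of h into a density of the incident direction by dividing by 4 |wo . h|.

```java
final class MicrofacetNormalSampling {

    /** Beckmann distribution D(h) as a function of cos(theta_h) and roughness alpha. */
    static double beckmannD(double cosThetaH, double alpha) {
        if (cosThetaH <= 0.0) return 0.0;
        double c2 = cosThetaH * cosThetaH;
        double tan2 = (1.0 - c2) / c2;
        double a2 = alpha * alpha;
        return Math.exp(-tan2 / a2) / (Math.PI * a2 * c2 * c2);
    }

    /** Draws a microfacet normal h with density D(h) cos(theta_h) over solid angle. */
    static double[] sampleNormal(double u1, double u2, double alpha) {
        // Invert the CDF, which is 1 - exp(-tan^2(theta_h) / alpha^2).
        double tan2 = -alpha * alpha * Math.log(1.0 - u1);
        double cosTheta = 1.0 / Math.sqrt(1.0 + tan2);
        double sinTheta = Math.sqrt(Math.max(0.0, 1.0 - cosTheta * cosTheta));
        double phi = 2.0 * Math.PI * u2;
        return new double[] { sinTheta * Math.cos(phi), sinTheta * Math.sin(phi), cosTheta };
    }

    /** Mirror direction of wo about h: wi = 2 (wo . h) h - wo. */
    static double[] reflect(double[] wo, double[] h) {
        double d = dot(wo, h);
        return new double[] {
            2.0 * d * h[0] - wo[0], 2.0 * d * h[1] - wo[1], 2.0 * d * h[2] - wo[2]
        };
    }

    /** Density of wi over solid angle: D(h) cos(theta_h) / (4 |wo . h|). */
    static double pdfIncident(double[] wo, double[] wi, double alpha) {
        double hx = wo[0] + wi[0], hy = wo[1] + wi[1], hz = wo[2] + wi[2];
        double len = Math.sqrt(hx * hx + hy * hy + hz * hz);
        if (len == 0.0) return 0.0;
        double[] h = { hx / len, hy / len, hz / len }; // half-vector of wo and wi
        double woDotH = Math.abs(dot(wo, h));
        if (woDotH == 0.0) return 0.0;
        return beckmannD(h[2], alpha) * h[2] / (4.0 * woDotH);
    }

    private static double dot(double[] a, double[] b) {
        return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
    }
}
```

Note that `pdfIncident` recomputes the half-vector from the two directions rather than reusing the sampled normal, so it can also be evaluated for a direction that was generated some other way. A reflected direction that ends up below the surface should simply contribute zero.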

The only hard thing about stratification is bookkeeping. I have made a start by including the Sampler interface (with a trivial implementation). For this assignment you won't need much machinery, since there are only three 2D domains to stratify over: the pixel, the light sources, and the BRDF. By passing the sampler around, you can ensure that these three domains are each stratified and that the 6D pattern is

Once you have managed to propagate 2D random seeds to the right places, each of your sample-generation methods will just warp the square onto the appropriate domain in a way that observes the desired PDF.
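As a starting point for the 2D seeds themselves, here is a small sketch of jittered (stratified) sampling on the unit square. It does not use the Sampler interface from the framework, whose methods are not described here; the class and method names are hypothetical. Each of the n x n grid cells gets exactly one random point, and an optional shuffle reorders the points so that separate 2D sets (pixel, light, BRDF) need not be paired cell for cell.

```java
import java.util.Random;

final class JitteredSamples {

    /** Returns n*n points in the unit square, one per cell of an n x n grid. */
    static double[][] generate(int n, Random rng) {
        double[][] pts = new double[n * n][2];
        for (int j = 0; j < n; j++) {
            for (int i = 0; i < n; i++) {
                pts[j * n + i][0] = (i + rng.nextDouble()) / n; // jitter within column i
                pts[j * n + i][1] = (j + rng.nextDouble()) / n; // jitter within row j
            }
        }
        return pts;
    }

    /** Fisher-Yates shuffle of the point order, done in place. */
    static void shuffle(double[][] pts, Random rng) {
        for (int k = pts.length - 1; k > 0; k--) {
            int m = rng.nextInt(k + 1);
            double[] tmp = pts[k];
            pts[k] = pts[m];
            pts[m] = tmp;
        }
    }
}
```

For example, 3600 samples per pixel corresponds to `generate(60, rng)`. Each returned point can then be handed to a warping function that maps the square onto the pixel, a light source, or the hemisphere of directions.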

Once all the other machinery is in place, this is pretty straightforward. You need to make sure you can correctly evaluate all your PDFs, even for a point that you didn't generate from that PDF.
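The reason every PDF has to be evaluable at every sample is that the weight given to a sample depends on how likely each strategy was to produce it. As an illustration, the sketch below uses the balance heuristic for two strategies, say light sampling and BRDF sampling. The choice of heuristic is an assumption on my part for this example, and the names are hypothetical. Both densities must be expressed in the same measure (for instance, both over solid angle) before they are combined.

```java
final class MisWeights {

    /**
     * Balance-heuristic weight for a sample drawn from the strategy with
     * density pdfUsed, when a second strategy with density pdfOther could
     * have produced the same direction.
     */
    static double balance(double pdfUsed, double pdfOther) {
        double sum = pdfUsed + pdfOther;
        return sum > 0.0 ? pdfUsed / sum : 0.0;
    }

    /**
     * Weighted contribution of one sample: weight * integrand / pdfUsed,
     * which simplifies to integrand / (pdfUsed + pdfOther).
     */
    static double contribution(double integrand, double pdfUsed, double pdfOther) {
        double sum = pdfUsed + pdfOther;
        return sum > 0.0 ? integrand / sum : 0.0;
    }
}
```

For a direction drawn from the light, `pdfUsed` is the light-sampling density and `pdfOther` is the BRDF-sampling density evaluated at that same direction, and vice versa for a direction drawn from the BRDF. The two weights for any given direction sum to one, which is what keeps the combined estimator unbiased.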