Project 5 Part A: The Power of Diffusion Models!

Implement and deploy diffusion models for image generation. The first part of a larger project.

Hole Filling

"Make it Real"

A Lithograph of a Waterfall

A Lithograph of a Skull

An Oil Painting of an Old Man

An Oil Painting of People Around a Fire

Key Information

| Assigned | Thursday, April 17, 2025 (fork the starter code from Part A: GitHub Classroom, and Part B: GitHub Classroom) |

| Due |

Part A: Friday, April 25, 2025 (early due date): submit to

your Part A GitHub repo and CMSX by 8:00 PM Part B: Tuesday, May 6, 2025 (Extended): submit to your Part B GitHub repo and CMSX by 11:59 PM |

| Code Submission |

For each part, submit the following to your GitHub Classroom repository:

- Completed notebook file ( .ipynb) with figures and results- Python backup file ( .py) with your final implementation

Also save your Colab notebook with figures and results as a PDF and upload it to the corresponding CMSX assignment for Part A and Part B. |

| Individual Project | This project must be completed individually (no group work). |

Detailed Instructions for Part A

Overview

In Part A, you’ll get hands-on experience with pre-trained diffusion models. You’ll implement the sampling loop and apply the model to creative image generation tasks such as inpainting and optical illusions.

This part is designed to help you understand how diffusion models work by exploring their behavior step by step. All work will be completed in a provided Colab notebook, available from GitHub Classroom.

Submission Instructions

Please submit the following to your Part A GitHub Classroom repository:

- The completed notebook file (

.ipynb) with your figures, results, and explanations - A Python backup file (

.py) with your final implementation

In addition, save your Colab notebook as a PDF and upload it to the CMSX assignment for Part A.

Tip: Start early! This part involves running pre-trained models, which may take time to execute on Colab.

Part 0: Setup

Gaining Access to DeepFloyd

We are going to use the DeepFloyd

IF diffusion model.

DeepFloyd is a two stage model trained by Stability AI.

The first stage produces images of size

- Make a Hugging Face account and log in.

- Accept the license on the model card of DeepFloyd/IF-I-XL-v1.0. Accepting the license on the stage I model card will auto accept for the other IF models.

- Log in locally by entering your Hugging Face Hub access token below. You should be able to find and create tokens here.

Disclaimer about Text Embeddings

DeepFloyd was trained as a text-to-image model, which takes text prompts as input and outputs images that are aligned with the text. Throughout this notebook, you will see that we ask you to generate with the prompt "a high quality photo". We want you to think of this as a "null" prompt that doesn't have any specific meaning, and is simply a way for the model to do unconditional generation. You can view this as using the diffusion model to "force" a noisy image onto the "manifold" of real images.

In the later sections, we will guide this project with a more detailed text prompt.

Downloading Precomputed Text Embeddings

Because the text encoder is very large, and barely fits on a free tier

Colab GPU, we have precomputed a couple of text embeddings for you to

try.

You can download the .pth file here.

This should hopefully save some headaches from GPU out of memory errors.

At the end of part A of the project, we provide you code if you want to

try your own text prompts. If you'd like, you can pay $10 for Colab Pro

and avoid needing to load the two models on different sessions.

In the notebook, we instantiate DeepFloyd's stage_1 and

stage_2 objects used for generation, as well as several

text

prompts for sample generation.

- For the 3 text prompts that we provide, display the caption and the

output of the model. Reflect on the quality of the outputs and their

relationships to the text prompts. Make sure to try at least 2

different

num_inference_stepsvalues. - Report the random seed that you're using here. You should use the same seed all subsequent parts.

Part 1: Sampling Loops

In this part of the problem set, you will write your own "sampling loops" that use the pretrained DeepFloyd denoisers. These should produce high quality images such as the ones generated above.You will then modify these sampling loops to solve different tasks such as inpainting or producing optical illusions.

Diffusion Models Primer

Starting with a clean image,

A diffusion model tries to reverse this process by denoising the image.

By giving a diffusion model a noisy

To generate images from the diffusion model (sampling), we start with

pure noise at timestep

For the DeepFloyd models,

The exact amount of noise added at each step is dictated by noise

coefficients,

1.1 Implementing the Forward Process

A key part of diffusion is the forward process, which takes a clean image and adds noise to it. In this part, we will write a function to implement this. The forward process is defined by:

which is equivalent to computing

You will need to use the alphas_cumprod variable, which

contains the

Deliverables

- Implement the

noisy_im = forward(im, t)function - Show the test image at noise level [250, 500, 750].

Hints

- The

torch.randn_likefunction is helpful for computing - Use the

alphas_cumprodvariable, which contains an array of the hyperparameters, withalphas_cumprod[t]corresponding to

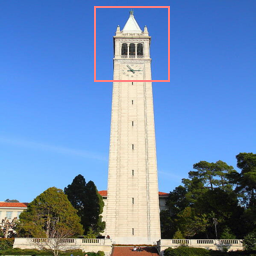

Cornell Tower

Noisy Cornell Tower at t=250

Noisy Cornell Tower at t=500

Noisy Cornell Tower at t=750

1.2 Classical Denoising

Let's try to denoise these images using classical methods. Again, take noisy images for timesteps [250, 500, 750], but use Gaussian blur filtering to try to remove the noise. Getting good results should be quite difficult, if not impossible.

Deliverables- For each of the 3 noisy test images from the previous part, show your best Gaussian-denoised version side by side.

-

torchvision.transforms.functional.gaussian_bluris useful. Here is the documentation.

Noisy Cornell Tower at t=250

Noisy Cornell Tower at t=500

Noisy Cornell Tower at t=750

Gaussian Blur Denoising at t=250

Gaussian Blur Denoising at t=500

Gaussian Blur Denoising at t=750

1.3 One-Step Denoising

Now, we'll use a pretrained diffusion model to denoise. The actual

denoiser can be found at stage_1.unet.

This is a UNet that has already been trained on a very, very

large dataset of

Because this diffusion model was trained with text conditioning, we

also need a text prompt embedding. We provide the embedding for the

prompt "a high quality photo" for you to use. Later on, you can

use your own text prompts.

Deliverables

- For the 3 noisy images from 1.2 (t = [250, 500, 750]):

- Using the UNet, denoise the image by estimating the noise.

- Estimate the noise in the new noisy image, by passing it

through

stage_1.unet - Remove the noise from the noisy image to obtain an estimate of the original image.

- Visualize the original image, the noisy image, and the estimate of the original image

Hints

- When removing the noise, you can't simply subtract the noise

estimate. Recall that in equation A.2 we need to scale the noise. Look

at equation A.2 to figure out how we predict

- You will probably have to wrangle tensors to the correct device

and

into the correct data types. The functions

.to(device)and.half()will be useful. The denoiser is loaded ashalfprecision (to save memory). - The signature for the unet is

stage_1.unet(image, t, encoder_hidden_states=prompt_embeds, return_dict=False). You need to pass in the noisy image, the timestep, and the prompt embeddings. Thereturn_dictargument just makes the output nicer. - The unet will output a tensor of shape (1, 6, 64, 64). This is because DeepFloyd was trained to predict the noise as well as variance of the noise. The first 3 channels is the noise estimate, which you will use. The second 3 channels is the variance estimate which you may ignore.

- To save GPU memory, you should wrap all of your code in a

with torch.no_grad():context. This tells torch not to do automatic differentiation, and saves a considerable amount of memory.

Noisy Cornell Tower at t=250

Noisy Cornell Tower at t=500

Noisy Cornell Tower at t=750

One-Step Denoised Cornell Tower at t=250

One-Step Denoised Cornell Tower at t=500

One-Step Denoised Cornell Tower at t=750

1.4 Iterative Denoising

In part 1.3, you should see that the denoising UNet does a much better job of projecting the image onto the natural image manifold, but it does get worse as you add more noise. This makes sense, as the problem is much harder with more noise!

But diffusion models are designed to denoise iteratively. In this part we will implement this.

In theory, we could start with noise

It turns out, we can actually speed things up by skipping steps. The rationale for why this is possible is due to a connection with differential equations. It's a tad complicated, and not within scope for this course, but if you're interested you can check out this excellent article.

To skip steps we can create a new list of timesteps that we'll call

strided_timesteps, which does just this.

strided_timesteps will

correspond to the noisiest image (and thus the largest strided_timesteps[-1] will correspond to a clean image.

One

simple way of constructing this list is by introducing a regular

stride

step (e.g. stride of 30 works well).

On the ith denoising step we are at strided_timesteps[i], and want to get to strided_timesteps[i+1] (from more noisy to less noisy).

To

actually do this, we have the following formula:

where:

alphas_cumprod, as explained above.

add_variance, to do this for you.

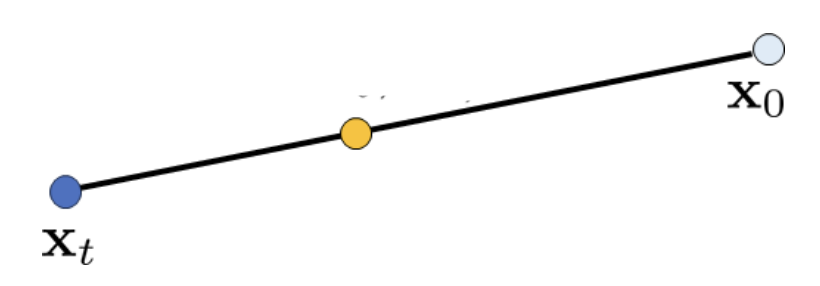

You can think of this as a linear interpolation between the signal and

noise:

Interpolation

First, create the list strided_timesteps. You should

start at timestep 990, and take step sizes of size 30 until you arrive at

0. After completing the problem set, feel free to try different

"schedules" of timesteps.

Also implement the function iterative_denoise(image,

i_start), which takes a noisy image image, as well

as a starting index i_start. The function should denoise

an image starting at timestep timestep[i_start], applying

the above formula to obtain an image at timestep t' =

timestep[i_start + 1], and repeat iteratively until we arrive at

a clean image.

Add noise to the test image im to timestep

timestep[10] and display this image. Then run the

iterative_denoise function on the noisy image, with

i_start = 10, to obtain a clean image and display it. Please

display every 5th image of the denoising loop. Compare this to the

"one-step" denoising method from the previous section, and to gaussian

blurring.

Deliverables

Usingi_start = 10:

- Create

strided_timesteps: a list of monotonically decreasing timesteps, starting at 990, with a stride of 30, eventually reaching 0. Also initialize the timesteps using the functionstage_1.scheduler.set_timesteps(timesteps=strided_timesteps) - Show the noisy image every 5th loop of denoising (it should gradually become less noisy)

- Show the final predicted clean image, using iterative denoising

- Show the predicted clean image using only a single denoising step, as was done in the previous part. This should look much worse.

- Show the predicted clean image using gaussian blurring, as was done in part 1.2.

- Complete the

iterative_denoisefunction

- Remember, the unet will output a tensor of shape (1, 6, 64, 64).

This is because DeepFloyd was trained to predict the noise as well as

variance of the noise. The first 3 channels is the noise estimate,

which you will use here.

The second 3 channels is the variance estimate which you will pass to

the

add_variancefunction - Read the documentation for the

add_variancefunction to figure out how to use it to add the - Depending on if your final images are torch tensors or numpy arrays, you may need to modify the `show_images` call a bit.

Noisy Cornell Tower at t=90

Noisy Cornell Tower at t=240

Noisy Cornell Tower at t=390

Noisy Cornell Tower at t=540

Noisy Cornell Tower at t=690

Original

Iteratively Denoised Cornell Tower

One-Step Denoised Cornell Tower

Gaussian Blurred Cornell Tower

1.5 Diffusion Model Sampling

In part 1.4, we use the diffusion model to denoise an image. Another

thing

we can do with the iterative_denoise function is to

generate

images from scratch. We can do this by setting i_start = 0

and passing in random noise. This effectively denoises pure noise.

Please

do this, and show 5 results of "a high quality photo".

Deliverables

- Show 5 sampled images.

Hints

- Use

torch.randnto make the noise. - Make sure you move the tensor to the correct device and correct data

type by calling

.half()and.to(device). - The quality of the images will not be spectacular, but should be reasonable images. We will fix this in the next section with CFG.

Sample 1

Sample 2

Sample 3

Sample 4

Sample 5

1.6 Classifier-Free Guidance (CFG)

You may have noticed that the generated images in the prior section are not very good, and some are completely non-sensical. In order to greatly improve image quality (at the expense of image diversity), we can use a technicque called Classifier-Free Guidance.

In CFG, we compute both a conditional and an unconditional noise

estimate. We denote these

Please implement the iterative_denoise_cfg function,

identical to the iterative_denoise function but using

classifier-free guidance.

To get an unconditional noise estimate, we can just pass an empty prompt

embedding to the diffusion model (the model was trained to predict an

unconditional noise estimate when given an empty text prompt).

Disclaimer

Disclaimer

Before, we used "a high quality photo" as a "null"

condition.

Now, we will use the actual "" null prompt for

unconditional

guidance for CFG. In the later part, you should always use

""

null prompt for unconditional guidance and use "a high quality

photo" for unconditional generation.

Deliverables

- Implement the

iterative_denoise_cfgfunction - Show 5 images of

"a high quality photo"with a CFG scale of

Hints

- You will need to run the UNet twice, once for the conditional prompt embedding, and once for the unconditional

- The UNet will predict both a conditional and an unconditional

variance. Just use the conditional variance with the

add_variancefunction. - The resulting images should be much better than those in the prior section.

Sample 1 with CFG

Sample 2 with CFG

Sample 3 with CFG

Sample 4 with CFG

Sample 5 with CFG

1.7 Image-to-image Translation

Note: You should use CFG from this point forward.In part 1.4, we take a real image, add noise to it, and then denoise. This effectively allows us to make edits to existing images. The more noise we add, the larger the edit will be. This works because in order to denoise an image, the diffusion model must to some extent "hallucinate" new things -- the model has to be "creative." Another way to think about it is that the denoising process "forces" a noisy image back onto the manifold of natural images.

Here, we're going to take the original test image, noise it a little, and force it back onto the image manifold without any conditioning. Effectively, we're going to get an image that is similar to the test image (with a low-enough noise level). This follows the SDEdit algorithm.

To start, please run the forward process to get a noisy test image, and

then run the iterative_denoise_cfg function using a

starting

index of [1, 3, 5, 7, 10, 20] steps and show the results, labeled with

the

starting index. You should see a series of "edits" to the original

image,

gradually matching the original image closer and closer.

- Edits of the test image, using the given prompt at noise levels [1,

3, 5, 7, 10, 20] with text prompt

"a high quality photo" - Edits of 2 of your own test images, using the same procedure.

Hints

- You should have a range of images, gradually looking more like the original image

SDEdit with i_start=1

SDEdit with i_start=3

SDEdit with i_start=5

SDEdit with i_start=7

SDEdit with i_start=10

SDEdit with i_start=20

Cornell Tower

1.7.1 Editing Hand-Drawn and Web Images

This procedure works particularly well if we start with a nonrealistic image (e.g. painting, a sketch, some scribbles) and project it onto the natural image manifold.

Please experiment by starting with hand-drawn or other non-realistic images and see how you can get them onto the natural image manifold in fun ways.

We provide you with 2 ways to provide inputs to the model:

- Download images from the web

- Draw your own images

Please find an image from the internet and apply edits exactly as above. And also draw your own images, and apply edits exactly as above. Feel free to copy the prior cell here. For drawing inspiration, you can check out the examples on this project page.

Deliverables

- 1 image from the web of your choice, edited using the above method for noise levels [1, 3, 5, 7, 10, 20] (and whatever additional noise levels you want)

- 2 hand drawn images, edited using the above method for noise levels [1, 3, 5, 7, 10, 20] (and whatever additional noise levels you want)

- We provide you with preprocessing code to convert web images to the format expected by DeepFloyd

- Unfortunately, the drawing interface is hardcoded to be 300x600 pixels, but we need a square image. The code will center crop, so just draw in the middle of the canvas.

Bear at i_start=1

Bear at i_start=3

Bear at i_start=5

Bear at i_start=7

Bear at i_start=10

Bear at i_start=20

Bear

House at i_start=1

House at i_start=3

House at i_start=5

House at i_start=7

House at i_start=10

House at i_start=20

Original House Sketch

1.7.2 Inpainting

We can use the same procedure to implement inpainting (following the RePaint paper). That is,

given an image

To do this, we can run the diffusion denoising loop. But at every step,

after obtaining

Essentially, we leave everything inside the edit mask alone, but we

replace everything outside the edit mask with our original image -- with

the correct amount of noise added for timestep

Please implement this below, and edit the picture to inpaint the top of the Cornell Tower.

Deliverables

- A properly implemented

inpaintfunction - The test image inpainted (feel free to use your own mask)

- 2 of your own images edited (come up with your own mask)

- look at the results from this paper for inspiration

Hints

- Reuse the

forwardfunction you implemented earlier to implement inpainting - Because we are using the diffusion model for tasks it was not trained for, you may have to run the sampling process a few times before you get a nice result.

- You can copy and paste your iterative_denoise_cfg function. To get inpainting to work should only require (roughly) 1-2 additional lines and a few small changes.

Cornell Tower

Mask

Hole to Fill

Cornell Tower Inpainted

1.7.3 Text-Conditional Image-to-image Translation

Now, we will do the same thing as SDEdit, but guide the

projection with a text prompt. This is no longer pure

"projection to the natural image manifold" but also adds control using

language. This is simply a matter of changing the prompt from

"a high quality photo" to any of the precomputed prompts we

provide you (if you want to use your own prompts, see appendix).

- Edits of the test image, using the given prompt at noise levels [1, 3, 5, 7, 10, 20]

- Edits of 2 of your own test images, using the same procedure

- The images should gradually look more like original image, but also look like the text prompt.

Snowy Mountain at noise level 1

Snowy Mountain at noise level 3

Snowy Mountain at noise level 5

Snowy Mountain at noise level 7

Snowy Mountain at noise level 10

Snowy Mountain at noise level 20

Cornell Tower

1.8 Visual Anagrams

In this part, we are finally ready to implement Visual

Anagrams and create optical illusions with diffusion models. In

this part, we will create an image that looks like "an oil

painting of people around a campfire", but when flipped upside

down will reveal "an oil painting of an old man".

To do this, we will denoise an image "an oil painting of an old man", to obtain noise estimate

"an oil painting of people around a campfire", to get noise

estimate

The full algorithm will be:

where UNet is the diffusion model UNet from before,

- Correctly implemented

visual_anagramsfunction - A visual anagram where on one orientation

"an oil painting of people around a campfire"is displayed and, when flipped,"an oil painting of an old man"is displayed. - 2 more illusions of your choice that change appearance when you flip it upside down.

- You may have to run multiple times to get a really good result for the same reasons as above.

An Oil Painting of an Old Man

An Oil Painting of People around a Campfire

1.9 Hybrid Images

In this part we'll implement Factorized Diffusion and create hybrid images just like in project 2.

In order to create hybrid images with a diffusion model we can use a

similar technique as above. We will create a composite noise estimate

where UNet is the diffusion model UNet,

- Correctly implemented

make_hybridsfunction - An image that looks like a

skullfrom far away but awaterfallfrom close up - 2 more hybrid images of your choosing.

- use

torchvision.transforms.functional.gaussian_blur - You may have to run multiple times to get a really good result for the same reasons as above

Hybrid image of a skull and a waterfall

Extra Credit: Create Something Cool!

Use what you’ve learned in this project to create something cool. Show your code and the resulting image(s) directly in the notebook. Have fun!

Optional: Using your own Prompts and Upsampling Generations

We provide you with code in the notebook to use your own prompts and upsample your generations!

Acknowledgements

This project was a joint effort by Daniel Geng, Ryan Tabrizi, and Hang Gao, advised by Liyue Shen, Andrew Owens, and Alexei Efros.

Last updated 16 April 2025