Last time we talked about parallel computing in machine learning, which allows us to take advantage of the parallel capabilities of our hardware to substantially speed up training and inference.

This is an instance of the general principle: Use algorithms that fit your hardware, and use hardware that fits your algorithms.

But compute is only half the story of making algorithms that fit the hardware.

How data is stored and accessed can be just as important as how it is processed.

This is especially the case for machine learning tasks, which often run on very large datasets that can push the limits of the memory subsystem of the hardware.

Today, we'll be talking about how memory affects the performance of the machine learning pipeline.

How do modern CPUs handle memory?

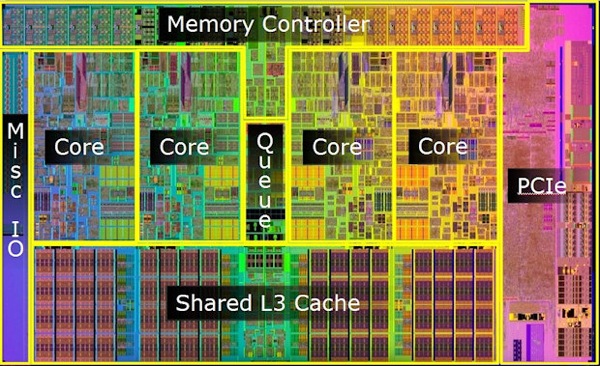

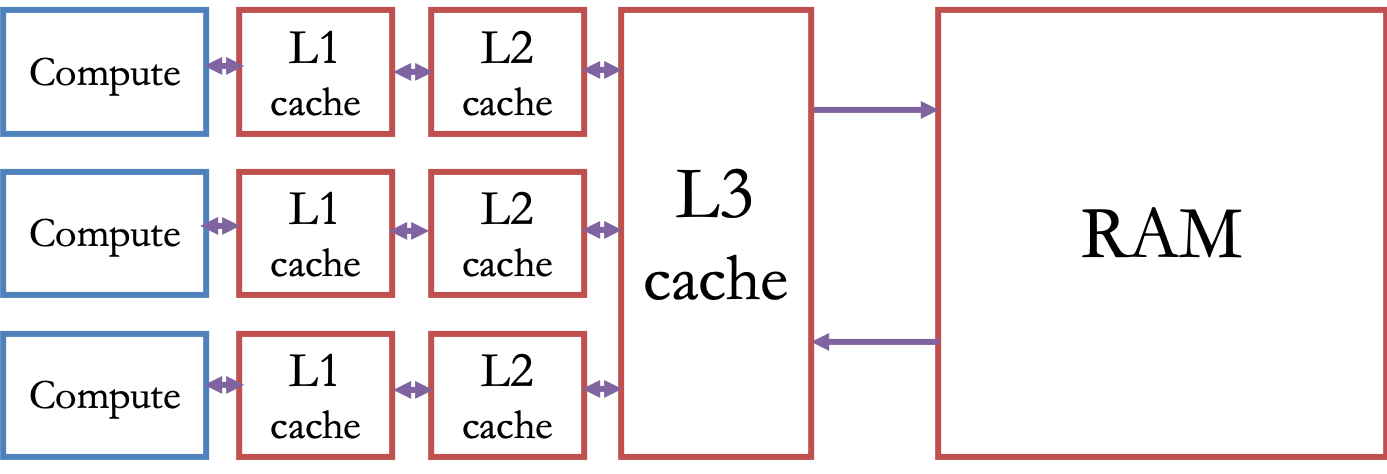

CPUs have a deep cache hierarchy. In fact, many CPUs are mostly cache by area.

The motivation for this was the ever-increasing gap between the speed at which the arithmetic units on the CPU could execute instructions and the time it took to read/write data to system memory.

Without some faster cache to temporarily store data, the performance of the CPU would be bottlenecked by the cost of reading and/or writing to RAM after every instruction.

But CPUs also have caches

Caches are small and fast memories that are located physically on the CPU chip, and which mirror data stored in RAM so that it can be accessed more quickly by the CPU.

The usual setup of memory on a CPU

- a fast L1 cache (typically about 32 KB) on each core

- a somewhat slower, but larger L2 cache (e.g. 256 KB) on each core

- an even slower and even larger L3 cache (e.g. 2 MB/core) shared among cores

- DRAM: off-chip memory

- Persistent storage: a hard disk or flash drive
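To make these sizes concrete, here is a minimal sketch that checks which cache level a matrix of floats could fit in. The capacities are just the example numbers from the list above (real CPUs vary), and `matrix_bytes` and `smallest_cache_level` are hypothetical helper names, not part of any library.

```c
#include <stddef.h>

/* Example capacities taken from the list above; real CPUs vary. */
#define L1_BYTES (32u * 1024u)           /* about 32 KB per core */
#define L2_BYTES (256u * 1024u)          /* about 256 KB per core */
#define L3_BYTES (2u * 1024u * 1024u)    /* about 2 MB per core, shared */

/* Bytes needed to store a rows x cols matrix of floats. */
size_t matrix_bytes(size_t rows, size_t cols) {
    return rows * cols * sizeof(float);
}

/* Returns 1, 2, or 3 for the smallest cache level whose example capacity
   can hold `bytes`, or 0 if the data would only fit in DRAM. */
int smallest_cache_level(size_t bytes) {
    if (bytes <= L1_BYTES) return 1;
    if (bytes <= L2_BYTES) return 2;
    if (bytes <= L3_BYTES) return 3;
    return 0;
}
```

Under these assumed sizes (and 4-byte floats), a 512 x 512 float matrix takes 1 MiB, so it fits in neither L1 nor L2, only in a core's share of L3.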

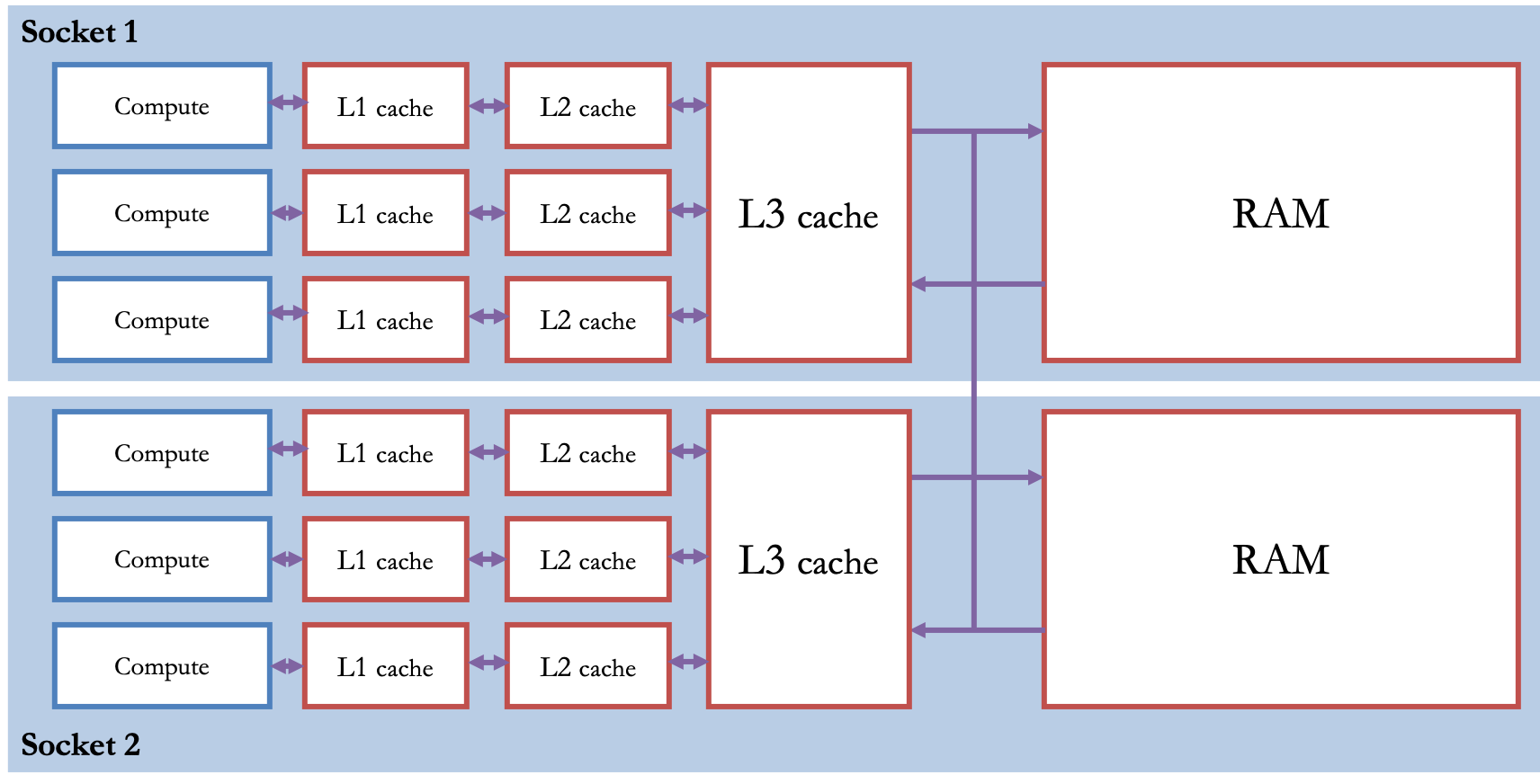

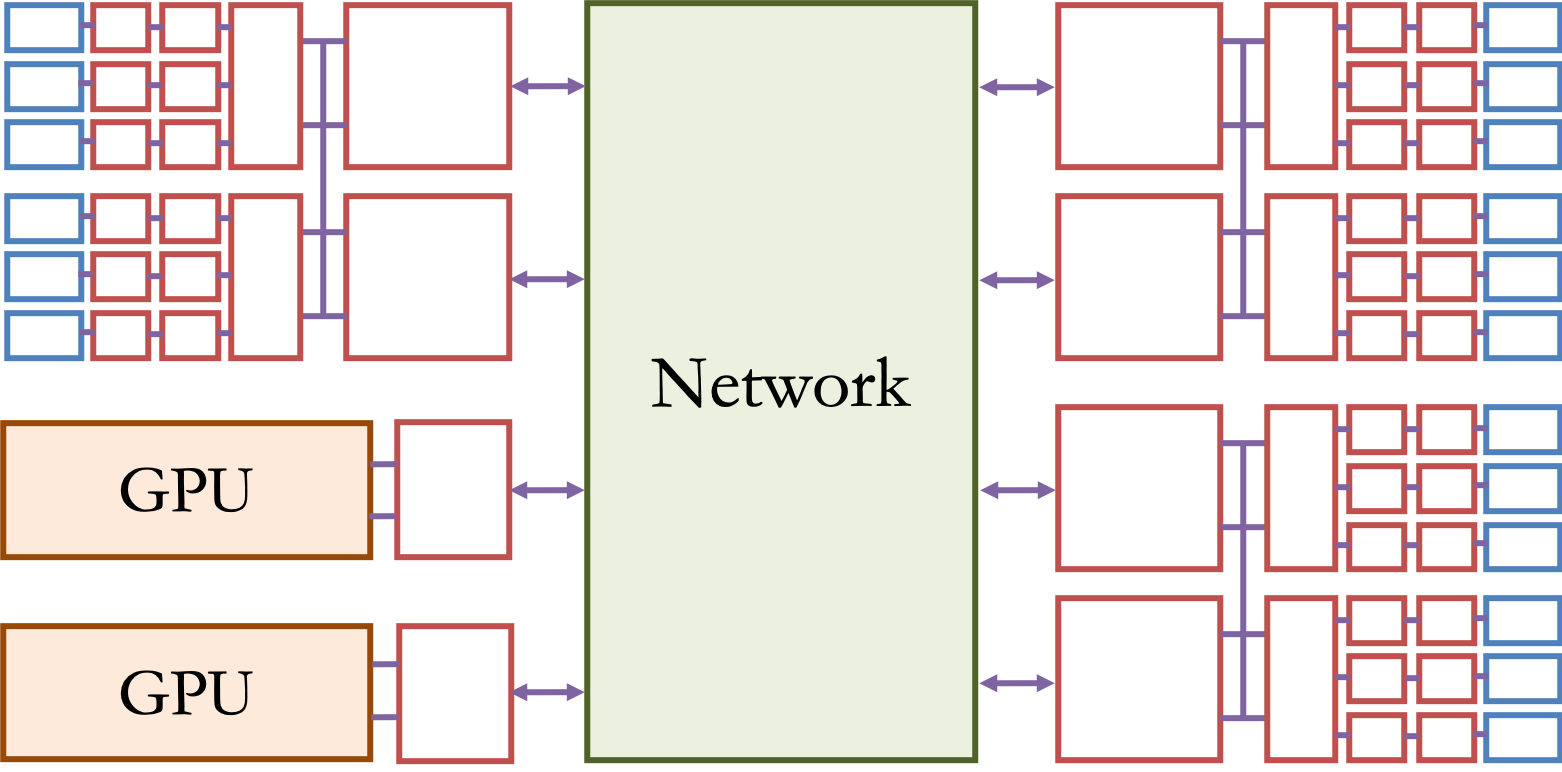

A model of a multi-socket computer

Multiple CPU chips on the same motherboard communicate with each other through physical connections on the motherboard.

The full view across multiple machines

Zooming out further, multiple machines communicate with each other over a network.

One important thing to notice here:

As we zoom out, much more of this diagram is "memory" boxes than compute boxes.

Hand-wavy consequence: as we scale up, the effect of memory becomes more and more important.

Another important take-away:

Memory has a hierarchical structure

Memories lower in the hierarchy are faster, but smaller

Memories higher in the hierarchy are larger, but slower, and are often shared among many compute units

Two ways to measure performance of a part of the memory hierarchy.

Latency: how much time does it take to access data at a new address in memory?

Throughput (a.k.a. bandwidth): how much data total can we access in a given length of time?
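A common first-order way to combine the two metrics is to model the time for one transfer as latency plus size divided by bandwidth. The sketch below assumes that simple model; the function name and the numbers in the note after it are illustrative, not measurements of any real machine.

```c
/* First-order cost model (an assumption, not a measurement):
   time = latency + bytes / bandwidth. */
double transfer_time_seconds(double latency_s,
                             double bandwidth_bytes_per_s,
                             double bytes) {
    return latency_s + bytes / bandwidth_bytes_per_s;
}
```

With a hypothetical 100 ns latency and 10 GB/s bandwidth, a 64-byte read is dominated by latency (about 106 ns), while a 1 MiB read is dominated by bandwidth (about 105 microseconds).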

We saw these metrics earlier when evaluating the effect of parallelism.

Ideally, we'd like all of our memory accesses to go to the fast L1 cache, since it has high throughput and low latency.

What prevents this from happening in a practical program?

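The L1 cache is tiny compared to the data most programs touch, so not everything can live there at once. Result: the hardware needs to decide what is stored in the cache at any given time.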

It wants to avoid, as much as possible, a situation in which the processor needs to access data that's not stored in the cache—this is called a cache miss.

Hardware uses two heuristics:

The principle of temporal locality: if a location in memory is accessed, it is likely that that location will be accessed again in the near future.

The principle of spatial locality: if a location in memory is accessed, it is likely that other nearby locations will be accessed in the near future.
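As a small illustration of spatial locality, the sketch below sums the same row-major matrix in two ways. Both functions compute the same value; the only difference is the order in which memory is touched. The function names are made up for this example.

```c
#include <stddef.h>

/* Sum a rows x cols row-major matrix, walking memory contiguously. */
double sum_row_order(const float *a, size_t rows, size_t cols) {
    double total = 0.0;
    for (size_t i = 0; i < rows; i++) {
        for (size_t j = 0; j < cols; j++) {
            total += a[i * cols + j];   /* consecutive accesses are 1 element apart */
        }
    }
    return total;
}

/* Same sum, but consecutive accesses are cols elements apart. */
double sum_col_order(const float *a, size_t rows, size_t cols) {
    double total = 0.0;
    for (size_t j = 0; j < cols; j++) {
        for (size_t i = 0; i < rows; i++) {
            total += a[i * cols + j];   /* stride of cols elements */
        }
    }
    return total;
}
```

For large matrices we would expect `sum_row_order` to make better use of the cache, since each cache line it loads is fully used before moving on. How big the gap is depends on the hardware and the compiler.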

Memory Locality

Temporal locality and spatial locality are both types of memory locality.

We say that a program has good spatial locality and/or temporal locality and/or memory locality when it conforms to these heuristics.

When a program has good memory locality, it makes good use of the caches available on the hardware.

In practice, the throughput of a program is often substantially affected by the cache, and can be improved by increasing locality.

Prefetching

A third important heuristic used by both the hardware and the compiler to improve cache performance is prefetching.

Prefetching loads data into the cache before it is ever accessed, and is particularly useful when the program or the hardware can predict what memory will be used ahead of time.
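Here is a minimal sketch of software prefetching, for a case where the program knows its future accesses (they are listed in an index array) but the hardware would struggle to guess them. It uses `__builtin_prefetch`, which is a GCC/Clang builtin rather than standard C. The function name and `PREFETCH_DISTANCE` are assumptions made for this example, and the distance is a guess, not a tuned value.

```c
#include <stddef.h>

/* How many iterations ahead to prefetch; an illustrative guess. */
#define PREFETCH_DISTANCE 8

/* Sum data[idx[0]], data[idx[1]], ... while hinting upcoming loads. */
double gather_sum_prefetch(const float *data, const size_t *idx, size_t count) {
    double total = 0.0;
    for (size_t t = 0; t < count; t++) {
        if (t + PREFETCH_DISTANCE < count) {
            /* rw = 0 (read), locality = 3 (keep in all cache levels). */
            __builtin_prefetch(&data[idx[t + PREFETCH_DISTANCE]], 0, 3);
        }
        total += data[idx[t]];
    }
    return total;
}
```

The prefetch is only a hint: it does not change the result, and whether it helps at all depends on the access pattern and the machine.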

Question: What can we do in the ML pipeline to increase locality and/or enable prefetching?

DEMO

A matrix multiply of $A \in \mathbb{R}^{m \times n}$ and $B \in \mathbb{R}^{n \times p}$, producing output $C \in \mathbb{R}^{m \times p}$, can be written by running

$$ C_{i,k} \mathrel{+}= A_{i,j} \cdot B_{j,k} $$

for each value of $i \in \{1, \ldots, m\}$, $j \in \{1, \ldots, n\}$, and $k \in \{1, \ldots, p\}$. The natural way to do this is with three for loops. But what order should we run these loops? And how does the way we store $A$, $B$, and $C$ affect performance?

```c
#include <stdlib.h>
#include <stdio.h>
#include <math.h>
#include <time.h>
#include <sys/time.h>

/* Storage layout of each matrix, selected at compile time.
   A is m x n, B is n x p, C is m x p; X_(row, col) indexes one element. */
#ifdef A_COL_MAJOR
#define A_(i,j) A[(j)*m + (i)]
#define A_NAME Ac
#else
#define A_(i,j) A[(i)*n + (j)]
#define A_NAME Ar
#endif

#ifdef B_COL_MAJOR
#define B_(i,j) B[(j)*n + (i)]
#define B_NAME Bc
#else
#define B_(i,j) B[(i)*p + (j)]
#define B_NAME Br
#endif

#ifdef C_COL_MAJOR
#define C_(i,j) C[(j)*m + (i)]
#define C_NAME Cc
#else
#define C_(i,j) C[(i)*p + (j)]
#define C_NAME Cr
#endif

/* Loop nest order, selected at compile time.
   i_/m_ name the outermost loop variable and bound, k_/p_ the innermost. */
#ifdef LOOP_IJK
#define i_ i
#define m_ m
#define j_ j
#define n_ n
#define k_ k
#define p_ p
#define LOOP_IDXS ijk
#endif

#ifdef LOOP_JIK
#define i_ j
#define m_ n
#define j_ i
#define n_ m
#define k_ k
#define p_ p
#define LOOP_IDXS jik
#endif

#ifdef LOOP_IKJ
#define i_ i
#define m_ m
#define j_ k
#define n_ p
#define k_ j
#define p_ n
#define LOOP_IDXS ikj
#endif

#ifdef LOOP_JKI
#define i_ j
#define m_ n
#define j_ k
#define n_ p
#define k_ i
#define p_ m
#define LOOP_IDXS jki
#endif

#ifdef LOOP_KIJ
#define i_ k
#define m_ p
#define j_ i
#define n_ m
#define k_ j
#define p_ n
#define LOOP_IDXS kij
#endif

#ifdef LOOP_KJI
#define i_ k
#define m_ p
#define j_ j
#define n_ n
#define k_ i
#define p_ m
#define LOOP_IDXS kji
#endif

/* Build a function name such as test_ijk_ArBrCr from the settings above. */
#define PASTER(A, B, C, D, E, F) A ## B ## C ## D ## E ## F
#define FUNCTION_NAME(LI, AN, BN, CN) PASTER( test_ , LI , _ , AN , BN , CN )

double FUNCTION_NAME(LOOP_IDXS,A_NAME,B_NAME,C_NAME) (int m, int n, int p, int num_runs) {
    float* A = malloc(sizeof(float) * m * n);
    float* B = malloc(sizeof(float) * n * p);
    float* C = malloc(sizeof(float) * m * p);

    for (int i = 0; i < m*n; i++) {
        A[i] = rand();
    }
    for (int i = 0; i < n*p; i++) {
        B[i] = rand();
    }
    for (int i = 0; i < m*p; i++) {
        C[i] = 0.0;
    }

    double average_time = 0.0;
    for (int iters = 0; iters < num_runs; iters++) {
        double cpu_time_used;   /* wall-clock seconds for this run */
        long start, end;        /* wall-clock timestamps in milliseconds */
        struct timeval timecheck;

        gettimeofday(&timecheck, NULL);
        start = (long)timecheck.tv_sec * 1000 + (long)timecheck.tv_usec / 1000;

        /* The loop nest under test; its order is set by the LOOP_* macros. */
        for (int i_ = 0; i_ < m_; i_++) {
            for (int j_ = 0; j_ < n_; j_++) {
                for (int k_ = 0; k_ < p_; k_++) {
                    C_(i,k) += A_(i,j) * B_(j,k);
                }
            }
        }

        gettimeofday(&timecheck, NULL);
        end = (long)timecheck.tv_sec * 1000 + (long)timecheck.tv_usec / 1000;

        cpu_time_used = (end - start)*0.001;
        average_time += cpu_time_used;
        printf("time elapsed: %f seconds\n", cpu_time_used);
    }
    printf("\naverage time: %lf seconds\n\n", average_time / num_runs);

    /* Sum the output so the compiler cannot discard the computation. */
    float rv = 0.0;
    for (int i = 0; i < m; i++) {
        for (int k = 0; k < p; k++) {
            rv += C[i*p + k];
        }
    }
    printf("digest: %e\n", rv);

    free(A);
    free(B);
    free(C);

    return average_time / num_runs;
}
```

```julia
using Libdl
# open a dynamic library that links to the C code
tm_lib = Libdl.dlopen("lecture16-demo/test_memory.lib");

function test_mmpy(loop_order::String, Amaj::String, Bmaj::String, Cmaj::String, m, n, p, num_runs)
    @assert(loop_order in ["ijk","ikj","jki","jik","kij","kji"])
    @assert(Amaj in ["r","c"])
    @assert(Bmaj in ["r","c"])
    @assert(Cmaj in ["r","c"])
    f = Libdl.dlsym(tm_lib, "test_$(loop_order)_A$(Amaj)B$(Bmaj)C$(Cmaj)")
    ccall(f, Float64, (Int32, Int32, Int32, Int32), m, n, p, num_runs)
end
```

```
test_mmpy (generic function with 1 method)
```

```julia
d = 512;
num_runs = 10;
measurements = []
for loop_order in ["ijk","ikj","jki","jik","kij","kji"]
    for Am in ["r","c"]
        for Bm in ["r","c"]
            for Cm in ["r","c"]
                push!(measurements, (loop_order * "_" * Am * Bm * Cm, test_mmpy(loop_order, Am, Bm, Cm, d, d, d, num_runs)));
            end
        end
    end
end
```

Output for the first configuration (`ijk_rrr`):

```
time elapsed: 0.011000 seconds
time elapsed: 0.011000 seconds
time elapsed: 0.009000 seconds
time elapsed: 0.009000 seconds
time elapsed: 0.009000 seconds
time elapsed: 0.009000 seconds
time elapsed: 0.009000 seconds
time elapsed: 0.009000 seconds
time elapsed: 0.009000 seconds
time elapsed: 0.009000 seconds

average time: 0.009400 seconds

digest: 1.547224e+27
```

The other 47 configurations print the same pattern (ten `time elapsed` lines, an `average time`, and a `digest`). Their average times are collected in `measurements` and listed in the sorted summary below.

```julia
m_sorted = [(m,t) for (t,m) in sort([(t,m) for (m,t) in measurements])]
for (m,t) in m_sorted
    println("$m --> $t");
end
```

```
ijk_crr --> 0.009399999999999999
ijk_rrr --> 0.009400000000000002
kji_ccc --> 0.0095
kji_crc --> 0.0096
jik_crr --> 0.011599999999999997
jik_rrr --> 0.011599999999999997
jki_ccc --> 0.011599999999999997
jki_crc --> 0.011599999999999997
ijk_ccr --> 0.0754
kji_rrc --> 0.07559999999999999
ijk_rcr --> 0.07659999999999999
kji_rcc --> 0.0774
ikj_rcc --> 0.0819
kij_rcc --> 0.08199999999999999
kij_rcr --> 0.08209999999999999
jik_ccr --> 0.0823
ikj_rcr --> 0.08279999999999998
jki_rrc --> 0.08299999999999999
jki_rcc --> 0.0831
jik_rcr --> 0.08339999999999999
kij_rrc --> 0.12969999999999998
kij_rrr --> 0.12969999999999998
ikj_rrc --> 0.1297
kij_ccr --> 0.1297
ikj_ccc --> 0.13090000000000002
ikj_ccr --> 0.1333
kij_ccc --> 0.1427
ikj_rrr --> 0.14310000000000003
ikj_crc --> 0.1474
kij_crr --> 0.1485
ikj_crr --> 0.1487
kij_crc --> 0.1495
jik_crc --> 0.4316000000000001
jki_ccr --> 0.43550000000000005
jik_rrc --> 0.4393999999999999
ijk_crc --> 0.43950000000000006
kji_crr --> 0.44020000000000004
jki_crr --> 0.44079999999999997
kji_ccr --> 0.44159999999999994
ijk_rrc --> 0.44419999999999993
kji_rrr --> 0.4913
jik_ccc --> 0.49169999999999997
ijk_ccc --> 0.4925
jki_rcr --> 0.4941
jki_rrr --> 0.4958
jik_rcc --> 0.49640000000000006
ijk_rcc --> 0.49660000000000004
kji_rcr --> 0.4977999999999999
```

```julia
maximum(t for (m,t) in m_sorted) / minimum(t for (m,t) in m_sorted)
```

```
52.95744680851064
```

An over $50 \times$ difference just from accessing memory in a different order!

Scan order

Scan order refers to the order in which the training examples are used in a learning algorithm.

Using a non-random scan order is an option that can sometimes improve performance by increasing memory locality. Usually it helps more with convergence!

Here are a few scan orders that people use:

Random sampling with replacement (a.k.a. random scan): every time we need a new sample, we pick one at random from the whole training dataset.

Random sampling without replacement: every time we need a new sample, we pick one at random and then discard it (it won't be sampled again). Once we've gone through the whole training set, we replace all the samples and continue.

Sequential scan (a.k.a. systematic scan): sample the data in the order in which it appears in memory. When you get to the end of the training set, restart at the beginning.

Shuffle-once: at the beginning of execution, randomly shuffle the training data. Then sample the data in that shuffled order. When you get to the end of the training set, restart at the beginning.

Random reshuffling: at the beginning of execution, randomly shuffle the training data. Then sample the data in that shuffled order. When you get to the end of the training set, reshuffle the training set, then restart at the beginning.
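The difference between sequential scan, shuffle-once, and random reshuffling can be sketched as different ways of preparing the visiting order for each epoch. This is a minimal sketch under stated assumptions: the function names are hypothetical, the order is stored as an array of example indices, and `rand()` is used for brevity even though it is not a high-quality random source.

```c
#include <stdlib.h>
#include <stddef.h>

/* Fill order[0..n-1] with 0, 1, ..., n-1 (sequential scan order). */
void fill_sequential(size_t *order, size_t n) {
    for (size_t i = 0; i < n; i++) {
        order[i] = i;
    }
}

/* Fisher-Yates shuffle in place. Using rand() % i has a slight modulo
   bias, which is fine for a sketch but not for careful experiments. */
void shuffle_in_place(size_t *order, size_t n) {
    for (size_t i = n; i > 1; i--) {
        size_t j = (size_t)rand() % i;   /* j is in [0, i-1] */
        size_t tmp = order[i - 1];
        order[i - 1] = order[j];
        order[j] = tmp;
    }
}

/* Prepare the visiting order for one epoch.
   reshuffle_each_epoch = 0: shuffle-once (shuffle only before epoch 0).
   reshuffle_each_epoch = 1: random reshuffling (shuffle before every epoch). */
void prepare_epoch(size_t *order, size_t n, int epoch, int reshuffle_each_epoch) {
    if (epoch == 0) {
        fill_sequential(order, n);
    }
    if (epoch == 0 || reshuffle_each_epoch) {
        shuffle_in_place(order, n);
    }
}
```

Note that visiting examples through an index array still jumps around in memory. To get the locality benefit of shuffle-once, we would physically permute the training examples once, so that every later epoch reads memory sequentially.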

How does the memory locality of these different scan orders compare?

- Worst memory locality: random sampling with and without replacement

- Okay memory locality: random reshuffling

- Very good: shuffle once

- Best: sequential scan

Two of these scan orders are actually statistically equivalent! Which ones?

- Random sampling w/o replacement and random reshuffling

How does the statistical performance of these different scan orders compare?

random reshuffling = w/o replacement > shuffle once > sequential scan > random with replacement

A good first choice when compute is light and the model is simple: shuffle once

Generally it performs quite well statistically (although it might have weaker theoretical guarantees), and it has good memory locality.

Another good choice: without-replacement sampling

This is particularly good when you're doing some sort of data augmentation, since you can construct the without-replacement minibatches on-the-fly.

Memory and sparsity

How does the use of sparsity impact the memory subsystem?

Two major effects:

- Sparsity lowers the total amount of memory in use by the program.

- Sparsity lowers the memory locality.

  - Why? Accesses are not dense and so are less predictable.
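Both effects show up in a sparse matrix-vector product. The sketch below assumes the matrix is stored in compressed sparse row (CSR) form, which is one common sparse format and not necessarily the one any particular ML library uses. The function and parameter names are made up for this example.

```c
#include <stddef.h>

/* y = A * x for an m-row sparse matrix A in CSR form.
   row_ptr has m + 1 entries; col_idx and vals each have row_ptr[m] entries,
   so only the nonzeros of A are stored. */
void csr_matvec(size_t m, const size_t *row_ptr, const size_t *col_idx,
                const float *vals, const float *x, float *y) {
    for (size_t i = 0; i < m; i++) {
        float acc = 0.0f;
        for (size_t k = row_ptr[i]; k < row_ptr[i + 1]; k++) {
            /* vals and col_idx are read sequentially, but x is read at
               data-dependent positions, which is harder to cache or prefetch. */
            acc += vals[k] * x[col_idx[k]];
        }
        y[i] = acc;
    }
}
```

Memory use scales with the number of nonzeros rather than with the full matrix size, but the indirect access `x[col_idx[k]]` is exactly the kind of unpredictable access that lowers locality.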

What else can we do to lower the total memory usage of the machine learning pipeline?