Introduction to Computer Vision (CS4670), Fall 2010

Final Project

Assigned: Sunday, October 24

Proposal Due: Friday, October 29 by 11:59pm (via CMS)

Status Report: Friday, November 19, 11:59pm

Final Presentation Slides Due: Sunday, December 12, 7:59pm

Final Presentation: Monday, December 13, 9-11:30am, Location: Phillips 203

Writeup/Code Due: Tuesday, December 14, 11:59pm

Quick Links: Synopsis and Guidelines | Requirements | Resources | Final Project Ideas!

Synopsis and Guidelines

The final project is an open-ended project in mobile computer vision. In teams of two or three, you will come up with a project idea (some ideas to get you started are described below), then implement it on a Nokia N900. Your project must involve some kind of demo that you can show in class, either through a live demo or a video that you have created of the system in action. The project could be a new research project, or a reimplementation of an existing system, but must involve a non-trivial implementation of a computer vision algorithm or system. Teams of three will be expected to devise and implement a more ambitious project than teams of two.

The goals of the project are (1) to learn more about a subfield of computer vision, and (2) to get more hands-on experience with computer vision on mobile devices.

How ambitious/difficult should your project be? Each team member should count on committing at least twice as much work as in Project 2ab.

Accordingly, you won't be able to implement something arbitrarily ambitious, but please feel free to use your imagination when coming up with projects, and to implement a prototype system that could be extended in interesting ways. As part of the project, you can use any capability that you can think of. For instance, the phone comes with wireless, a touch screen, accelerometer, GPU, GPS, and other bells and whistles. You can set up a remote server that listens for requests from the phone, and runs some vision algorithm on the server. You can use Google Streetview, or any other existing API on the Web (as long as you still implement something interesting yourselves).
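As a rough illustration of the remote-server option mentioned above, here is a minimal sketch using only the Python standard library. Everything in it is an assumption made for illustration: the names `analyze_image`, `VisionHandler`, and `make_server` are hypothetical, the phone is assumed to POST raw 8-bit grayscale pixel bytes, and the "vision algorithm" is a placeholder that only computes mean brightness. A real project would substitute its own algorithm and image format.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def analyze_image(pixels: bytes) -> dict:
    """Placeholder vision step (hypothetical): mean brightness of raw 8-bit pixels."""
    if not pixels:
        return {"num_pixels": 0, "mean_brightness": None}
    return {"num_pixels": len(pixels), "mean_brightness": sum(pixels) / len(pixels)}


class VisionHandler(BaseHTTPRequestHandler):
    """Handles POST requests whose body is the raw pixel data sent by the phone."""

    def do_POST(self):
        # Read exactly as many bytes as the client says it sent.
        length = int(self.headers.get("Content-Length", 0))
        body = self.rfile.read(length)

        # Run the (placeholder) algorithm and reply with a small JSON result.
        reply = json.dumps(analyze_image(body)).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(reply)))
        self.end_headers()
        self.wfile.write(reply)


def make_server(port: int = 8000) -> HTTPServer:
    """Build a server listening on all interfaces; the port number is an assumption."""
    return HTTPServer(("", port), VisionHandler)
```

Calling `make_server(8000).serve_forever()` would start listening for requests. The phone side would then only need an HTTP client, which keeps the heavy computation off the device at the cost of network latency.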

Requirements

Proposal

Each team will turn in an approximately one-page proposal describing their project. It should specify:

- Your team members

- Project goals. Be specific. Describe what the inputs to the system are, and what the outputs will be.

- Brief description of your approach. If you are implementing or extending a previous method, give the reference and web link to the paper.

- Will you be using helper code (e.g., available online) or will you implement it all yourself?

- Breakdown--what will each team-member do? Ideally, everyone should do something imaging/vision related (it's not good for one team member to focus purely on user-interface, for instance).

- Special equipment that will be needed. We may be able to help with servers, extra cameras, etc.

Turn in the proposal via CMS by Thursday, October 28 (by 11:59pm).

Status Report

Each team will turn in a one page status report for their project on Friday, November 19 by 11:59pm. This report should present your progress to date, including preliminary results, as well as any problems that you are encountering.

Final Presentation

Each group will give a short (10 minute) PowerPoint presentation on their project to the class. Details will be announced closer to the time of the presentation. Your final presentation slides should be uploaded to CMS. Your presentation is expected to include some kind of (canned or live) demo.

Final Writeup

Turn in a web writeup describing your problem and approach. This writeup should include the following:

- title, team members

- short intro

- related work, with references to papers, web pages

- technical description including algorithm

- experimental results

- discussion of results, strengths/weaknesses, what worked, what didn't

- future work and what you would do if you had more time

Code

In addition to the writeup, you will be turning in the code associated with the project.

Resources

Coming soon...

Final Project Ideas!

Here are several ideas that would make appropriate final projects. Feel free to choose variations of these or to devise your own projects that are not on this list. We're happy to meet with you to discuss any of these (or other) project ideas in more detail--if you can't make office hours, just email the instructor to set up a meeting.

- Nokia Goggles Lite. Write an app that can recognize some limited class of objects, like all book covers or all DVDs, all artwork, etc. You may need to write a scraper to download large sets of images from Amazon, for instance, in order to create the database. You'll probably also need to create a server for this project.

- Computational Photography App. Computational photography (which we will talk about in class) uses computation combined with imaging to create better images (your panorama stitcher in Project 2 is an example of a computational photography application). A mobile device---combining a camera with computation---is an ideal platform for computational photography. Devise and implement a computational photography app on the N900. Here are some possibilities:

- HDR (high dynamic range) imaging. Cameras are limited in the dynamic range (i.e., the range of intensities of light hitting the sensor) they can capture in a single photo; it is hard to capture very bright and very dark intensities in the same photo. However, if we take multiple photos with different exposures, we can combine them together to produce an HDR (high dynamic range) image. You can see many examples of HDR images on Flickr. Write an app for taking multiple photos with different exposures and combining them on the phone. See a related project from Li Zhang here. You can also get inspiration from the iPhone's version of this app, reviewed here on Ars Technica.

- 360 panorama capture. Extend your panorama app in Project 2b to create an entire 360 panorama on the phone (see the Monster Bells on the Project 2b page). Alternatively, make a real-time panorama capture app as described in this project, or as implemented in the N900 QuickPanorama app.

- Flash-no flash. Use the flash in the N900 to capture flash-no flash pairs, then combine them into beautiful images, as described in this project.

- Video stabilization. Use optical flow to create a real-time image stabilizing algorithm.

- Image deblurring. Can you use the phone's accelerometer to help with image deblurring? See this project.

- Something else cool. Take a look at the Frankencamera project for more ideas for computational photography apps.

- Location-based games. The instructor is working on PhotoCity, a game for photographing all of Cornell. Part of this game is an app that runs on a mobile device. Build an N900 version of this app. For more details, talk to the instructor.

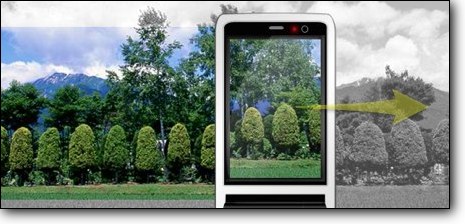

- Location recognition and augmented reality. Create a Cornell campus app that recognizes what building you are in front of by taking a photo and comparing it to a large database of Cornell images. If you are interested in this project, please talk to the instructor (who has tens of thousands of images of Cornell that could be used as a database). Use this in a campus tour, which displays additional information on top of the photo, such as the name of the building and a link to Wikipedia.

- Misc. Augmented Reality or Recognition App. There are many things you could do here. Take a picture of an airplane flying overhead, and automatically highlight the plane, along with the flight number, by connecting with an online flight database (and estimating the rough location and orientation of the phone). You could write a barcode scanner, and display useful information on an image of a product. You could write a vision-based Sudoku-capture app for taking a photo of a Sudoku puzzle and converting it to a digital version. And so on.

- Digital object insertion. Build an app to track the pose of a camera, and insert a digital 3D object into the real scene (as viewed through the phone). This makes the phone into an interface for viewing a virtual 3D object by simply walking around it.

- Face recognition. Write an app that can take a photo of a person, recognize them, and display their name.

- Artistic image filtering. Create an image filtering app that in real-time applies an interesting (non-linear) filter to the stream of images. For instance, you could apply a cartooning effect, as in this project on real-time video abstraction.

- Vision-based user interface. Write an app that will implement a phone UI based on computer vision (this is most useful for a phone with a front-facing camera, e.g. an iPhone 4, but we can at least prototype one with the N900). It might track features on your face, for instance, or recognize gestures, in order to activate certain UI commands (e.g., "raise left eyebrow" might push the "1" button on the dialpad; you can probably think of much more useful ideas). This could be used as an interface for impaired users, or as an interface to a new game (imagine using your face to control a game character).

- Stereo/structure from motion. Use the camera to capture two images, then run stereo on them to produce an image with a depth map. You'll first need to estimate the fundamental matrix (F-matrix) between the two images.

- Autofocus. Create a camera app that implements autofocus by recognizing faces in the image.
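To make the HDR idea from the computational photography list more concrete, here is a minimal sketch of merging several exposures into one relative radiance estimate per pixel. It rests on simplifying assumptions that are not part of the assignment: the images are already aligned, pixel values are linear in scene radiance and scaled to [0, 1] (no camera response curve is recovered), and the exposure times are known. The function names `hat_weight` and `merge_exposures` are hypothetical.

```python
from typing import List, Sequence


def hat_weight(value: float) -> float:
    """Trust mid-range pixels most; near-black and saturated pixels get a tiny weight."""
    return max(1e-3, 1.0 - abs(2.0 * value - 1.0))


def merge_exposures(
    images: Sequence[Sequence[float]], exposure_times: Sequence[float]
) -> List[float]:
    """Merge aligned exposures (flat lists of linear values in [0, 1]) into radiance.

    Each exposure gives a radiance estimate value / time; the estimates are
    combined with a weighted average so that clipped pixels barely contribute.
    """
    if not images or len(images) != len(exposure_times):
        raise ValueError("need one exposure time per image")

    radiance = []
    for i in range(len(images[0])):
        weighted_sum = 0.0
        weight_total = 0.0
        for image, time in zip(images, exposure_times):
            weight = hat_weight(image[i])
            weighted_sum += weight * image[i] / time
            weight_total += weight
        radiance.append(weighted_sum / weight_total)
    return radiance


# Simulate three exposures of a scene whose true radiances span a wide range.
true_radiance = [0.5, 4.0, 40.0]
times = [1.0, 0.1, 0.01]
shots = [[min(1.0, r * t) for r in true_radiance] for t in times]
merged = merge_exposures(shots, times)
```

In this simulated example the longest exposure saturates on the two brighter pixels, yet the merged values stay close to the true radiances because the shorter exposures dominate the weighted average there. Displaying the result on a phone screen would additionally require tone mapping, which this sketch does not cover.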