Synchronization

Synchronization and happens-before

As we have seen, a write to memory by one thread (e.g., an assignment to an instance variable) is not necessarily seen by a later read from the same location by another thread. Java, like most programming languages, offers only a weak consistency model that does not guarantee that every write is seen by every later read. When the consistency model does order two operations, guaranteeing that the effects of the first are visible to the second, we say that the first operation has a happens-before relationship to the second. A write that happens-before a read is guaranteed to be seen by the read (though the read may also return the result of an even later write). Conversely, a read that happens-before a write is guaranteed not to see the value written by that write.

Release consistency

Different weak consistency models make different guarantees about happens-before relationships. However, a useful least common denominator is release consistency. It guarantees that any operation a thread performs on a location before releasing a lock happens-before any operation by another thread on the same location after a later acquisition of the same lock. In other words, each release of a lock happens-before every later acquisition of that lock.

For example, suppose we have two threads, T1 and T2, as depicted in the timelines below. Thread T1 performs some set of updates during period A, then releases a mutex m, and then performs some updates during period B. Thread T2 executes and at some point tries to acquire the mutex m, forcing it to wait until T1 has released it. In this case, period A happens-before T2's execution after acquiring the mutex. Therefore, before acquiring the mutex, T2 is not guaranteed to see any of the updates by T1, but may see some arbitrary subset of them. After acquiring the mutex, T2 is guaranteed to see all of T1's updates during period A, and may see some arbitrary subset of the updates during period B.

Figure 1: Guarantees made by release consistency

Thus, to make sure that updates done to shared state by one thread are seen by other threads, all accesses to the shared state must be guarded by the same lock.
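As a minimal sketch of this rule (the class SharedCounter and its method names are hypothetical, chosen for illustration), every read and write of count below happens inside a synchronized method, so both threads use the same lock: the counter object itself. Dropping synchronized from increment() would allow lost updates, and the final count could then come out below 2n.

```java
// Shared state that is only ever touched while holding the counter's own
// lock, so every increment is seen by later reads in any thread.
class SharedCounter {
    private int count = 0; // guarded by "this"

    synchronized void increment() {
        count++;
    }

    synchronized int get() {
        return count;
    }

    // Runs two threads that each increment the counter n times and
    // returns the final count (read under the same lock).
    static int runTwoThreads(int n) throws InterruptedException {
        SharedCounter c = new SharedCounter();
        Runnable work = () -> {
            for (int i = 0; i < n; i++) c.increment();
        };
        Thread t1 = new Thread(work);
        Thread t2 = new Thread(work);
        t1.start();
        t2.start();
        t1.join();
        t2.join();
        return c.get();
    }
}
```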

Barriers

In scientific computing applications, barriers are another popular way to ensure that updates by one thread or set of threads are seen by other threads. A barrier is created with a specified number of threads that must reach the barrier. Each thread that reaches the barrier will block until the specified number of threads have all reached it, at which point all the threads unblock and are able to go forward. All operations in all threads that occur before the barrier is reached are guaranteed to happen-before all operations that occur after the barrier. Barriers make it easy to divide up a parallel computation into a series of communicating stages.

The Java system library provides a barrier abstraction, java.util.concurrent.CyclicBarrier. An instance of this class is created with the number of threads that are expected to reach the barrier. A thread "reaches the barrier" by calling the barrier's await() method. This causes it to block there until the required number of threads reach the barrier, at which point all the waiting threads unblock and resume execution at the instruction following the await() call. The barrier then resets and can be used again.

Barriers also help ensure a consistent view of memory. Once threads waiting at a barrier unblock, they are guaranteed to see all the memory updates that other threads performed before reaching the barrier. The barrier style of computation allows a set of threads to divide up work and make progress, then exchange information via a barrier.
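The sketch below shows this two-stage style with CyclicBarrier, assuming a toy workload (summing 1 to 1000) and hypothetical names BarrierDemo, partial and total. Each worker writes only its own slot of partial before calling await(); after the barrier, each worker reads both slots, relying on the barrier's memory guarantee rather than on a lock.

```java
import java.util.concurrent.BrokenBarrierException;
import java.util.concurrent.CyclicBarrier;

// Two-stage computation: in stage 1 each worker fills its own slot of
// partial[]; after the barrier, each worker combines both slots.
class BarrierDemo {
    static long[] run() throws InterruptedException {
        final int workers = 2;
        long[] partial = new long[workers]; // stage-1 results, one slot per worker
        long[] total = new long[workers];   // stage-2 results, one slot per worker
        CyclicBarrier barrier = new CyclicBarrier(workers);
        Thread[] threads = new Thread[workers];
        for (int w = 0; w < workers; w++) {
            final int id = w;
            threads[w] = new Thread(() -> {
                // Stage 1: worker 0 sums 1..500, worker 1 sums 501..1000.
                long sum = 0;
                for (int i = id * 500 + 1; i <= (id + 1) * 500; i++) sum += i;
                partial[id] = sum;
                try {
                    barrier.await(); // block until both workers finish stage 1
                } catch (InterruptedException | BrokenBarrierException e) {
                    return; // give up if the barrier cannot complete
                }
                // Stage 2: the other worker's pre-barrier write is visible here.
                total[id] = partial[0] + partial[1];
            });
            threads[w].start();
        }
        for (Thread t : threads) t.join();
        return total;
    }
}
```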

Monitors

The monitor pattern is another way to manage synchronization. It builds synchronization into objects: a monitor is an object with a built-in lock on which all of the monitor's methods are synchronized. This design is easy to achieve in Java, because every object can be used as a lock and the synchronized keyword enforces the monitor pattern; Java objects are designed to be used as monitors. A monitor can also have some number of condition variables, which we'll return to shortly.

The only objects that should be shared between threads are therefore immutable objects and objects protected by locks. Objects protected by locks include both monitors and objects encapsulated inside monitors, since objects encapsulated inside monitors are protected by their locks.
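A small monitor in this style might look like the following sketch; EventLog is a hypothetical class. The ArrayList is not thread-safe on its own, but because it never escapes the monitor, every access to it happens under the monitor's lock. The snapshot() method hands out an immutable copy (List.copyOf, available since Java 10), which is safe to share without locking.

```java
import java.util.ArrayList;
import java.util.List;

// A monitor: every method synchronizes on the object's built-in lock.
// The list is encapsulated (never handed out), so the monitor's lock
// protects it even though ArrayList itself is not thread-safe.
class EventLog {
    private final List<String> entries = new ArrayList<>();

    synchronized void add(String entry) {
        entries.add(entry);
    }

    synchronized int size() {
        return entries.size();
    }

    // Returns an immutable copy rather than the internal list, so the
    // result can be shared between threads without holding the lock.
    synchronized List<String> snapshot() {
        return List.copyOf(entries);
    }
}
```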

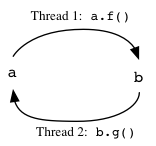

Deadlock

Monitors ensure consistency of data. But the locking they engage in can cause deadlock, a condition in which each thread in a group is waiting to acquire a lock held by another thread in the group, so none of them can proceed. For example, consider two monitors a and b of classes A and B, respectively, where a.f() calls b.g() and vice versa:

    class A {
        B b; // the monitor b
        synchronized void f() { b.g(); }
    }

    class B {
        A a; // the monitor a
        synchronized void g() { a.f(); }
    }

    Thread 1: a.f();
    Thread 2: b.g();

Suppose two threads try to call a.f() and b.g(), respectively. The two threads can acquire the locks on a and b, respectively, and then deadlock, each trying to acquire the lock the other holds. We can represent this situation as a graph with an edge from each thread to the lock it is waiting for and from each lock to the thread holding it; the deadlock shows up as a cycle in the graph.
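To see the cycle in a running program, the sketch below reproduces the a/b pattern with two plain lock objects standing in for the monitors, then asks the JVM for deadlocked threads through ThreadMXBean.findMonitorDeadlockedThreads() in java.lang.management. DeadlockDemo and lockInOrder are hypothetical names, and the CountDownLatch exists only to force the bad interleaving so the demo behaves the same way on every run.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadMXBean;
import java.util.concurrent.CountDownLatch;

class DeadlockDemo {
    // Provokes the a/b deadlock and returns how many threads the JVM
    // reports as deadlocked on monitors (0 if none found in ~5 seconds).
    static int provokeAndDetect() throws InterruptedException {
        Object a = new Object();
        Object b = new Object();
        CountDownLatch bothHoldFirstLock = new CountDownLatch(2);

        Thread t1 = new Thread(() -> lockInOrder(a, b, bothHoldFirstLock));
        Thread t2 = new Thread(() -> lockInOrder(b, a, bothHoldFirstLock));
        // Daemon threads, so the stuck pair does not keep the JVM alive.
        t1.setDaemon(true);
        t2.setDaemon(true);
        t1.start();
        t2.start();

        ThreadMXBean mx = ManagementFactory.getThreadMXBean();
        for (int attempt = 0; attempt < 100; attempt++) {
            long[] ids = mx.findMonitorDeadlockedThreads(); // null if no cycle
            if (ids != null) return ids.length;
            Thread.sleep(50);
        }
        return 0;
    }

    private static void lockInOrder(Object first, Object second, CountDownLatch latch) {
        synchronized (first) {
            latch.countDown();
            try {
                latch.await(); // wait until the other thread holds its first lock
            } catch (InterruptedException e) {
                return;
            }
            synchronized (second) {
                // never reached: each thread waits for the lock the other holds
            }
        }
    }
}
```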

To avoid creating cycles in the graph, the usual approach is to define an ordering < on locks and acquire any needed locks in an order consistent with <. For example, we might have a lock ordering in which a < b. In this scenario, a thread running a method of b could not be waiting for the lock on a: it already holds the lock on b, which is higher in the ordering, so it must have acquired the lock on a first.

In general, a thread may acquire a lock on (synchronize on) an object only if that object comes later in the lock ordering than all locks that the thread already holds.
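A common way to apply such an ordering, sketched here with a hypothetical Account class, is to derive it from a unique field such as an id. A transfer needs two account locks, so it always takes the lower-id lock first, whichever direction the money moves. Two threads transferring in opposite directions therefore cannot each hold one lock while waiting for the other.

```java
// Accounts are ordered by id; code that needs two account locks always
// acquires the lower-id lock first, so no cycle of waiting can form.
class Account {
    final int id;         // position in the lock ordering (assumed unique)
    private long balance; // guarded by this account's lock

    Account(int id, long balance) {
        this.id = id;
        this.balance = balance;
    }

    synchronized long balance() {
        return balance;
    }

    static void transfer(Account from, Account to, long amount) {
        Account first = (from.id < to.id) ? from : to;
        Account second = (from.id < to.id) ? to : from;
        synchronized (first) {      // lower in the ordering
            synchronized (second) { // higher in the ordering
                from.balance -= amount;
                to.balance += amount;
            }
        }
    }
}
```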

Locking level

To prevent deadlock, we can impose a requirement that, when a method is called, the caller holds only locks up to a certain level in the order <. This requirement becomes part of the precondition of the method and is called the locking level of the method: it specifies the highest lock in the lock ordering that may be held when the method is called.

For example, suppose we have a < b in the lock ordering, and we annotate the methods f() and g() with locking levels saying that the highest lock that may be held is the corresponding lock (a for f(), b for g()). The call to a.f() in B.g() violates the locking level of f(), since the caller already holds b, which is above a; so we can see that it might result in deadlock.

    class A {
        B b; // the monitor b
        /** Locking Level a */
        synchronized void f() { b.g(); }
    }

    class B {
        A a; // the monitor a
        /** Locking Level b */
        synchronized void g() { a.f(); }
    }
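Locking levels are usually documented rather than checked, as in the comments above. As an illustration only, the hypothetical LeveledLock class below checks the rule at run time: each thread keeps a stack of the levels it holds, and a request for a lock whose level is not above the top of that stack is rejected with an exception instead of risking deadlock. It assumes locks are released in the reverse order of acquisition and, unlike synchronized, it does not allow re-entering a lock that is already held.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical helper: a lock tagged with its level in the ordering <.
class LeveledLock {
    // Levels currently held by this thread; the top is the highest,
    // because levels are only ever pushed in increasing order.
    private static final ThreadLocal<Deque<Integer>> HELD =
            ThreadLocal.withInitial(ArrayDeque::new);

    private final int level;
    private final ReentrantLock lock = new ReentrantLock();

    LeveledLock(int level) {
        this.level = level;
    }

    void lock() {
        Deque<Integer> held = HELD.get();
        if (!held.isEmpty() && held.peek() >= level) {
            throw new IllegalStateException("lock order violation: level " + level
                    + " requested while holding level " + held.peek());
        }
        lock.lock();
        held.push(level);
    }

    // Assumes LIFO release: the lock being released is the last one acquired.
    void unlock() {
        HELD.get().pop();
        lock.unlock();
    }
}
```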

Locks are not enough for waiting

Locks block threads from making progress, but in general, they are not a sufficiently powerful mechanism for blocking threads. More generally, we may want to block a thread until some condition becomes true. Examples of such situations are (1) when we want to communicate information between threads (which may need to block until some information becomes available) and (2) when we want to implement our own lock abstractions.

One such abstraction we might want to build is a barrier, because for simple uses of concurrency, barriers make it easy to build race-free, deadlock-free code.

For example, suppose we want to run two threads in parallel to compute some results and wait until both results are available. We might define a class WorkerPair that spawns two worker threads:

    class WorkerPair implements Runnable {
        int done; // number of threads that have finished
        Object result;

        WorkerPair() {
            done = 0;
            new Thread(this).start();
            new Thread(this).start();
        }

        public void run() {
            doWork();
            synchronized(this) {
                done++;
                result = ...
            }
        }

        // not synchronized, to allow concurrent execution
        public void doWork() {
            // use synchronized methods here
        }

        Object getResult() {
            while (done < 2) {} // oops: wasteful!
            return result; // oops: not synchronized!
        }
    }

We might then use this code as follows:

    WorkerPair w = new WorkerPair();
    Object o = w.getResult();

As the comments in the code suggest, there are two serious problems with the getResult implementation. First, the loop on done < 2 will waste a lot of time and energy. This is called busy waiting and should be avoided. Second, there is no synchronization ensuring that updates to done and result are seen; without it, the loop is not even guaranteed to observe done changing.

How can we fix this? We cannot simply make getResult() synchronized, because the waiting loop would then hold the lock of w indefinitely, blocking the final assignments to done and result in the run method. A lock alone gives us no way to wait, without holding it, until done becomes 2.

Condition variables

A good solution to this problem is to use a condition variable, which is a mechanism for blocking a thread until some condition becomes true.

While monitors in general may have multiple condition variables, every Java object implicitly has a single condition variable tied to its lock. It is accessed using the wait() and notifyAll() methods. (There is also a notify() method, but usually notifyAll() is more useful and foolproof.)

The wait() method is used when a thread wants to wait for some condition to become true. It may only be called while the lock is held; otherwise it throws IllegalMonitorStateException. The act of calling wait() atomically releases the lock and blocks the current thread on the condition variable. The thread normally wakes up and resumes executing only when notifyAll() or notify() is called on that object, although an interrupt or an occasional spurious wakeup can also end the wait.

Java also has a version of wait() that includes a timeout period, after which it will automatically wake itself up.

Note that a thread waiting on a condition variable via wait() will not wake up simply because some other thread has released the lock. The other thread must call notifyAll() or notify().

Another thread should call the notifyAll() method when the condition of the condition variable becomes true. Its effect is to wake up all threads waiting on that condition variable. When a thread wakes up from wait(), it immediately tries to acquire the lock. Only one thread can win; the others block, waiting for the winner to release the lock. Eventually they acquire the lock, though there is no guarantee that the condition is true when any of the threads awake.

After a thread calls wait(), the condition it is waiting for might be true when wait() returns. But it need not be. Some other thread might have been scheduled first and may have made the condition false. So wait() is usually called in a loop, like so:

    while (!condition) wait();

Failure to test the condition after wait() leads to what is called a wakeup-waiting race, in which threads awakened by notifyAll() race to observe the condition as true. The winners of the race can then spoil things for later awakeners.
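Combining this loop with the timeout version of wait() takes a little care, because wait(0) means "wait until notified" (no timeout at all) and each wakeup should only wait for the time that remains. A sketch, using a hypothetical ReadyFlag monitor:

```java
// Hypothetical monitor with a boolean flag; awaitReady() waits on the
// object's built-in condition variable but gives up after a timeout.
class ReadyFlag {
    private boolean ready = false; // guarded by "this"

    synchronized void markReady() {
        ready = true;
        notifyAll(); // wake every thread blocked in awaitReady()
    }

    // Returns true if the flag became true within timeoutMillis.
    synchronized boolean awaitReady(long timeoutMillis) throws InterruptedException {
        long deadline = System.nanoTime() + timeoutMillis * 1_000_000L;
        while (!ready) { // recheck after every wakeup, including spurious ones
            long remainingMillis = (deadline - System.nanoTime()) / 1_000_000L;
            if (remainingMillis <= 0) return false; // timed out; avoids wait(0)
            wait(remainingMillis); // releases the lock while blocked
        }
        return true;
    }
}
```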

Using condition variables, we can correctly implement getResult() as follows, using a loop to avoid wakeup-waiting races:

    synchronized Object getResult() throws InterruptedException {
        while (done < 2) wait();
        return result;
    }

With this implementation, the lock is not held while the thread waits.

The implementation of run is also modified to call notifyAll():

    ...
    synchronized(this) {
        done++;
        result = ...
        if (done == 2) notifyAll();
    }

In Java, the call to notifyAll() must be made while the lock is held. Waiting threads will awaken but will immediately block trying to acquire the lock. Once the notifying thread releases the lock, one of the waiting threads will win the race and acquire it. In fact, since each awakened thread will test the condition for itself, we need not even test it before calling notifyAll():

    ...
    synchronized(this) {
        done++;
        result = ...
        notifyAll();
    }
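Putting the pieces together, a filled-in version of WorkerPair might look like the sketch below. The work itself is a hypothetical stand-in (each worker sums one half of 1..n), and the constructor parameter n and the field nextHalf are additions for illustration. What matters is that doWork() runs without the lock, while done and result are touched only under it, and getResult() waits in a loop.

```java
// Filled-in WorkerPair: workers compute without the lock, record their
// result under the lock and notify; getResult() waits in a loop.
class WorkerPair implements Runnable {
    private final int n;      // hypothetical input: sum the integers 1..n
    private int nextHalf = 0; // which half the next worker takes; guarded by this
    private int done = 0;     // number of workers that have finished; guarded by this
    private long result = 0;  // sum of the partial results; guarded by this

    WorkerPair(int n) {
        this.n = n;
        // Thread.start() happens-before the new thread's actions, so the
        // workers see n even though they are started from the constructor.
        new Thread(this).start();
        new Thread(this).start();
    }

    public void run() {
        int half;
        synchronized (this) {
            half = nextHalf++; // first worker gets 0, second gets 1
        }
        long partial = doWork(half); // runs without holding the lock
        synchronized (this) {
            done++;
            result += partial;
            notifyAll(); // waiters recheck done < 2 themselves
        }
    }

    // Hypothetical work: sum one half of the range 1..n.
    private long doWork(int half) {
        int lo = (half == 0) ? 1 : n / 2 + 1;
        int hi = (half == 0) ? n / 2 : n;
        long sum = 0;
        for (int i = lo; i <= hi; i++) sum += i;
        return sum;
    }

    synchronized long getResult() throws InterruptedException {
        while (done < 2) wait(); // the lock is released while waiting
        return result;
    }
}
```

Usage mirrors the original: new WorkerPair(1000).getResult() blocks until both halves are in and then returns their sum.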

Java objects also have a notify() method that wakes just one thread instead of all of them. The use of notify() is error-prone and usually should be avoided.
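The same wait()/notifyAll() pattern is enough to build the barrier abstraction mentioned earlier. The SimpleBarrier class below is a hypothetical sketch, not a replacement for CyclicBarrier (for example, it does not handle interrupted or timed-out parties). It counts generations so that a thread waiting in one round is released when that round completes, even if fast threads have already started arriving for the next round.

```java
// Hypothetical reusable barrier built only from a Java object's built-in
// lock and condition variable (wait/notifyAll).
class SimpleBarrier {
    private final int parties;
    private int arrived = 0;     // threads that have arrived this round; guarded by this
    private long generation = 0; // incremented each time the barrier trips; guarded by this

    SimpleBarrier(int parties) {
        this.parties = parties;
    }

    synchronized void await() throws InterruptedException {
        long myGeneration = generation;
        arrived++;
        if (arrived == parties) {
            arrived = 0;  // reset for the next round
            generation++; // releases everyone waiting on myGeneration
            notifyAll();
            return;
        }
        // Wait for this round's generation to pass, rather than testing
        // arrived, so early arrivals for the next round cannot confuse us.
        while (generation == myGeneration) wait();
    }
}
```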

In general, a monitor may have multiple conditions under which it wants to wake up threads. Given that a Java object has only one built-in condition variable, how can this be managed? One possibility is to use Condition objects (the interface java.util.concurrent.locks.Condition), each created from a java.util.concurrent.locks.ReentrantLock by calling its newCondition() method. A second easy technique is to combine all the multiple conditions into one condition variable that represents the boolean disjunction of all of them. A notifyAll() is sent whenever any of the conditions might become true; threads awakened by notifyAll() then test to see if their particular condition has become true; otherwise, they go back to sleep.

As an example of this disjunction pattern, here is another use of condition variables: a blocking queue. The put method blocks whenever it would put too many elements into the queue, waiting for another thread to take an element out. The take method blocks whenever there is no element to take. The same condition variable keeps track of two conditions at the same time: queue full or queue empty. It is always the responsibility of the waiting thread to check that its own condition is true.

    import java.util.LinkedList;
    import java.util.Queue;

    public class BlockingQueue<T> {
        private Queue<T> queue = new LinkedList<T>();
        private int capacity;

        public BlockingQueue(int capacity) {
            this.capacity = capacity;
        }

        public synchronized void put(T element) throws InterruptedException {
            while (queue.size() == capacity) {
                wait(); // queue full: wait for a take
            }
            queue.add(element);
            notifyAll();
        }

        public synchronized T take() throws InterruptedException {
            while (queue.isEmpty()) {
                wait(); // queue empty: wait for a put
            }
            T item = queue.remove();
            notifyAll();
            return item;
        }
    }
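For comparison, the first option mentioned above, separate condition objects, can be sketched with ReentrantLock and two Condition objects from java.util.concurrent.locks; TwoConditionQueue is a hypothetical name. Because each condition variable now has only one kind of waiter (putters wait on notFull, takers on notEmpty), waking a single thread with signal() is safe here. With the single shared condition variable above, notify() could wake a thread of the wrong kind.

```java
import java.util.ArrayDeque;
import java.util.Queue;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

// Bounded queue with one condition variable per condition, instead of a
// single built-in condition variable standing for their disjunction.
class TwoConditionQueue<T> {
    private final Queue<T> queue = new ArrayDeque<>(); // null elements not allowed
    private final int capacity;
    private final ReentrantLock lock = new ReentrantLock();
    private final Condition notFull = lock.newCondition();  // putters wait here
    private final Condition notEmpty = lock.newCondition(); // takers wait here

    TwoConditionQueue(int capacity) {
        this.capacity = capacity;
    }

    void put(T element) throws InterruptedException {
        lock.lock();
        try {
            while (queue.size() == capacity) notFull.await(); // releases the lock
            queue.add(element);
            notEmpty.signal(); // any one waiting taker can now proceed
        } finally {
            lock.unlock();
        }
    }

    T take() throws InterruptedException {
        lock.lock();
        try {
            while (queue.isEmpty()) notEmpty.await(); // releases the lock
            T item = queue.remove();
            notFull.signal(); // any one waiting putter can now proceed
            return item;
        } finally {
            lock.unlock();
        }
    }
}
```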

Using background threads with JavaFX

In JavaFX, any background work should be done not by the Application thread, but rather in a separate thread. If the Application thread is busy doing work instead of handling user interface events, the UI becomes unresponsive. However, UI nodes are not thread-safe, so only the Application thread may access the component hierarchy.

The Task class encapsulates useful functionality for starting up background threads and for obtaining results from them. This is easier than coding up your own mechanism using locks and condition variables. The key methods are these:

Task.java

Some of the methods are designed to be used within the implementation of the task, and others are designed to be used by client code in other threads, to control the task and to interact with it.

To compute something of type V in the background, a subclass of Task<V> is defined that overrides the method call(). Because a Task is a Runnable, the task can be started by creating a new thread to run it:

    Thread th = new Thread(task);
    th.start();

The work done by the task is defined in the call() method; it should simply return the desired result at the end of the method in the usual way. Notice that the call() method is not supposed to be called by clients or by any subclass code; instead, it is automatically called by the run() method of the task.

To report progress back to the Application thread, call() may also invoke updateProgress(). When the task completes by returning a value of type V from the call() method, the event handler h registered by calling setOnSucceeded(h) is invoked in the Application thread.

It is possible for a task to be canceled by calling the cancel() method; however, it is incumbent on the implementation of the task to check periodically whether the task has been canceled by using the isCancelled() method.

By listening to the property progressProperty(), client code in the Application thread can keep track of the progress of the task and update the GUI to reflect how far along the task is. The task can also communicate back to the Application thread by using the method Platform.runLater(), but this approach may couple the task implementation with the GUI more than is desirable.