CCS'16 Reviewing Process

Shai Halevi

Christopher Kruegel

Andrew Myers

Problem: Scale

- 831 submitted papers

- 2.5K total reviews written

- 141 program committee members (from 20 countries)

- 360 external reviewers

- ~17 papers reviewed per PC member

- 137 (=16.5%) papers accepted (32 conditionally)

- 60 days
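As a quick sanity check on the figures above, the acceptance rate and the implied average number of reviews per submission can be recomputed. This is a minimal sketch. It assumes that "2.5K" means exactly 2,500 reviews, although the slide rounds that number.

```python
# Figures from the slide; TOTAL_REVIEWS assumes "2.5K" means exactly 2,500.
SUBMITTED = 831
ACCEPTED = 137
TOTAL_REVIEWS = 2500


def acceptance_rate_percent(accepted: int, submitted: int) -> float:
    """Return the acceptance rate as a percentage, rounded to one decimal place."""
    return round(100 * accepted / submitted, 1)


def reviews_per_paper(total_reviews: int, submitted: int) -> float:
    """Return the average number of reviews per submission, rounded to one decimal."""
    return round(total_reviews / submitted, 1)


if __name__ == "__main__":
    print(acceptance_rate_percent(ACCEPTED, SUBMITTED))  # 16.5
    print(reviews_per_paper(TOTAL_REVIEWS, SUBMITTED))   # 3.0
```

The 16.5% matches the slide. The result of roughly three reviews per paper is only as precise as the rounded review count.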

Schedule

How to assign reviews in 2 days?

Changes

- 2 PC Chairs → 3 PC Chairs

- Multiple rounds of reviewing (two)

- Automatic review assignments

Automatic review assignments

- Previous practice: reviewers express preferences on all papers, and the conference system tries to match reviewers to papers accordingly. Problem: there is not enough time to scan 831 papers, and it is too much effort.

- New experiment: use machine learning to assign reviews automatically (the Toronto Paper Matching System, TPMS, run by Laurent Charlin at U. Toronto)

- Reviewers provide a link to their list of publications instead of preferences; publication PDFs are gathered by crawling web sites.

- TPMS extracts text from all publications and converts it to a “bag of words”.

- An unsupervised machine learning algorithm generates preference scores based on the similarity between documents.

- Assignments are chosen to optimize preference scores while respecting conflicts.
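The matching steps above can be sketched in a few lines. This is not TPMS itself. As a stand-in for its unsupervised model, the sketch scores each (reviewer, paper) pair by cosine similarity between bag-of-words vectors. It then assigns reviews greedily, starting with the highest score, while skipping conflicts. The per-paper limit (`reviews_per_paper`) and per-reviewer limit (`max_load`) are hypothetical parameters, and so are all the function names. A greedy pass only approximates the optimization described on the slide. A real system would more likely solve the assignment exactly, for example as a linear program or a min-cost flow.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Lowercase the text and count alphabetic tokens (a crude bag of words)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def score_matrix(reviewer_pubs: dict, papers: dict) -> dict:
    """Map (reviewer, paper) to the similarity between the reviewer's pooled
    publication text and the submission text."""
    reviewer_bows = {r: bag_of_words(" ".join(pubs)) for r, pubs in reviewer_pubs.items()}
    paper_bows = {p: bag_of_words(text) for p, text in papers.items()}
    return {(r, p): cosine(rb, pb)
            for r, rb in reviewer_bows.items()
            for p, pb in paper_bows.items()}


def greedy_assign(scores: dict, conflicts: set,
                  reviews_per_paper: int, max_load: int) -> dict:
    """Give each paper up to reviews_per_paper reviewers, visiting pairs from
    highest to lowest score, skipping conflicted pairs and full reviewers."""
    assignment = {paper: [] for (_, paper) in scores}
    load = Counter()
    for (reviewer, paper), _ in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
        if (reviewer, paper) in conflicts:
            continue  # never assign a conflicted reviewer
        if len(assignment[paper]) < reviews_per_paper and load[reviewer] < max_load:
            assignment[paper].append(reviewer)
            load[reviewer] += 1
    return assignment


if __name__ == "__main__":
    # Toy data (hypothetical): two reviewers, two submissions.
    reviewer_pubs = {
        "alice": ["lattice based encryption schemes", "zero knowledge proofs"],
        "bob": ["fuzzing binaries for memory corruption", "malware detection"],
    }
    papers = {
        "p1": "a new lattice encryption scheme with proofs",
        "p2": "fuzzing kernel drivers to find memory corruption",
    }
    scores = score_matrix(reviewer_pubs, papers)
    print(greedy_assign(scores, conflicts={("bob", "p1")},
                        reviews_per_paper=1, max_load=1))
    # {'p1': ['alice'], 'p2': ['bob']}
```

The greedy pass can leave a paper short of reviewers when conflicts and load limits interact. An exact optimizer avoids this whenever a feasible assignment exists.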

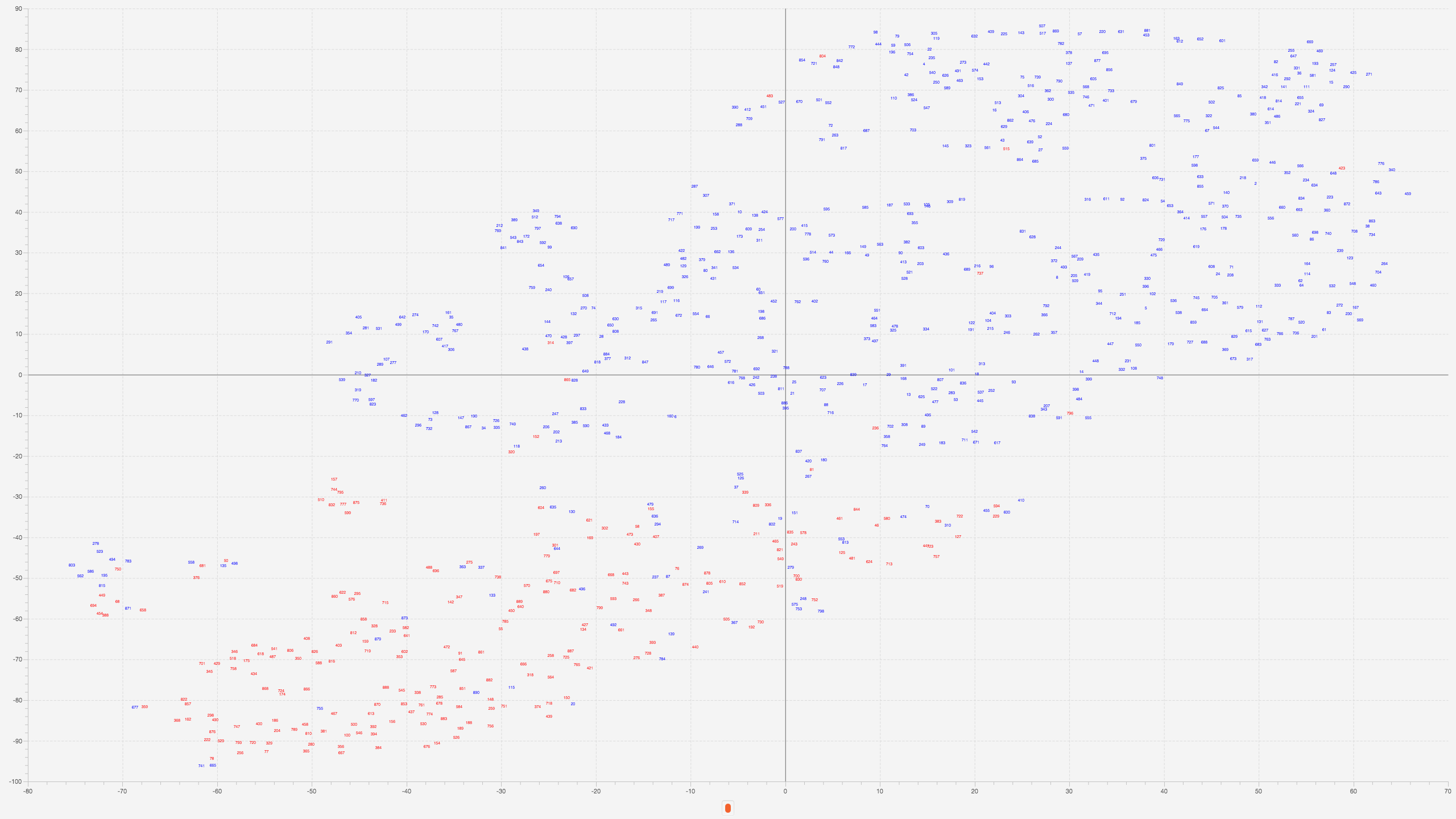

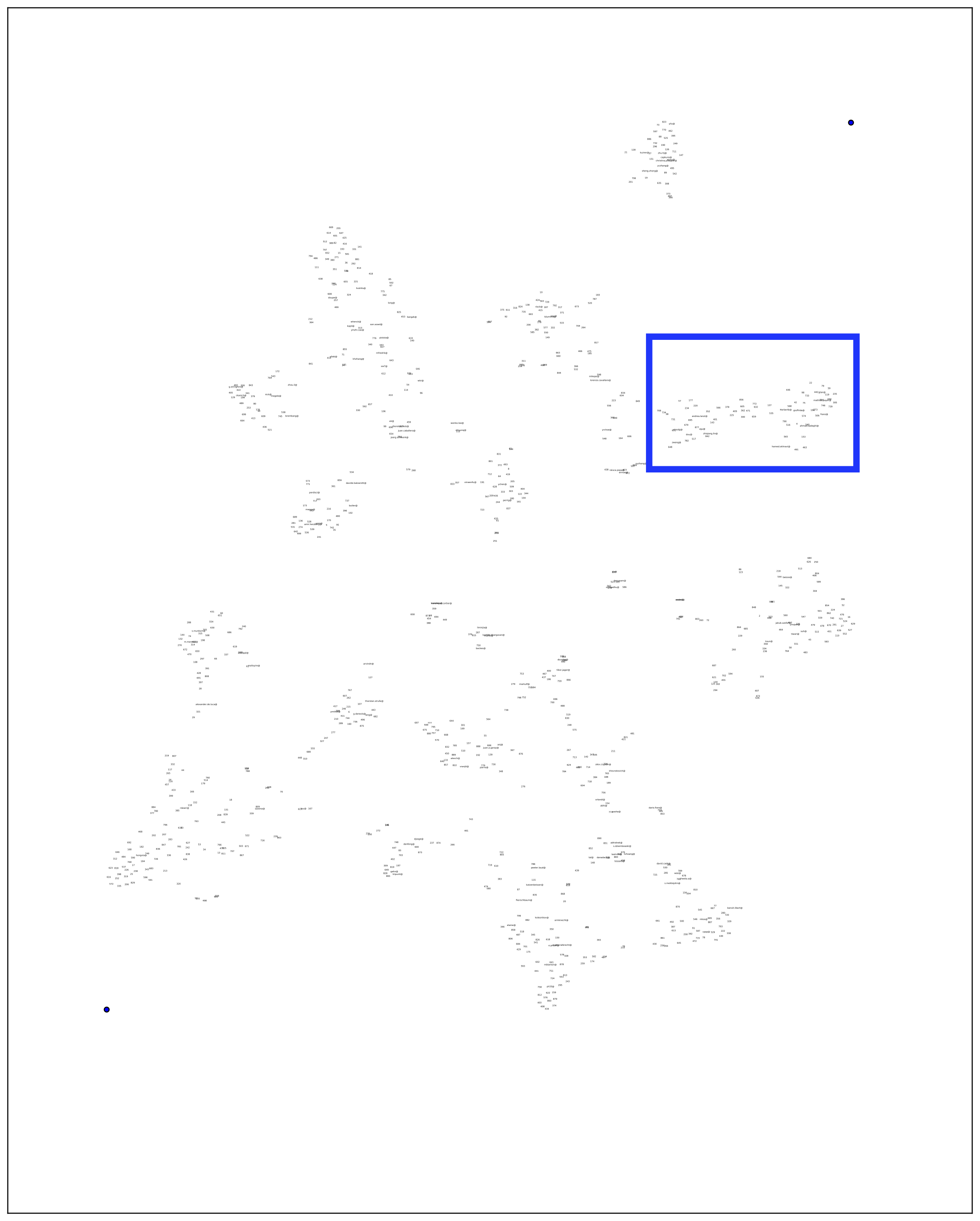

t-SNE plot of all submissions

Papers shown in red independently labeled as “crypto” by Shai Halevi

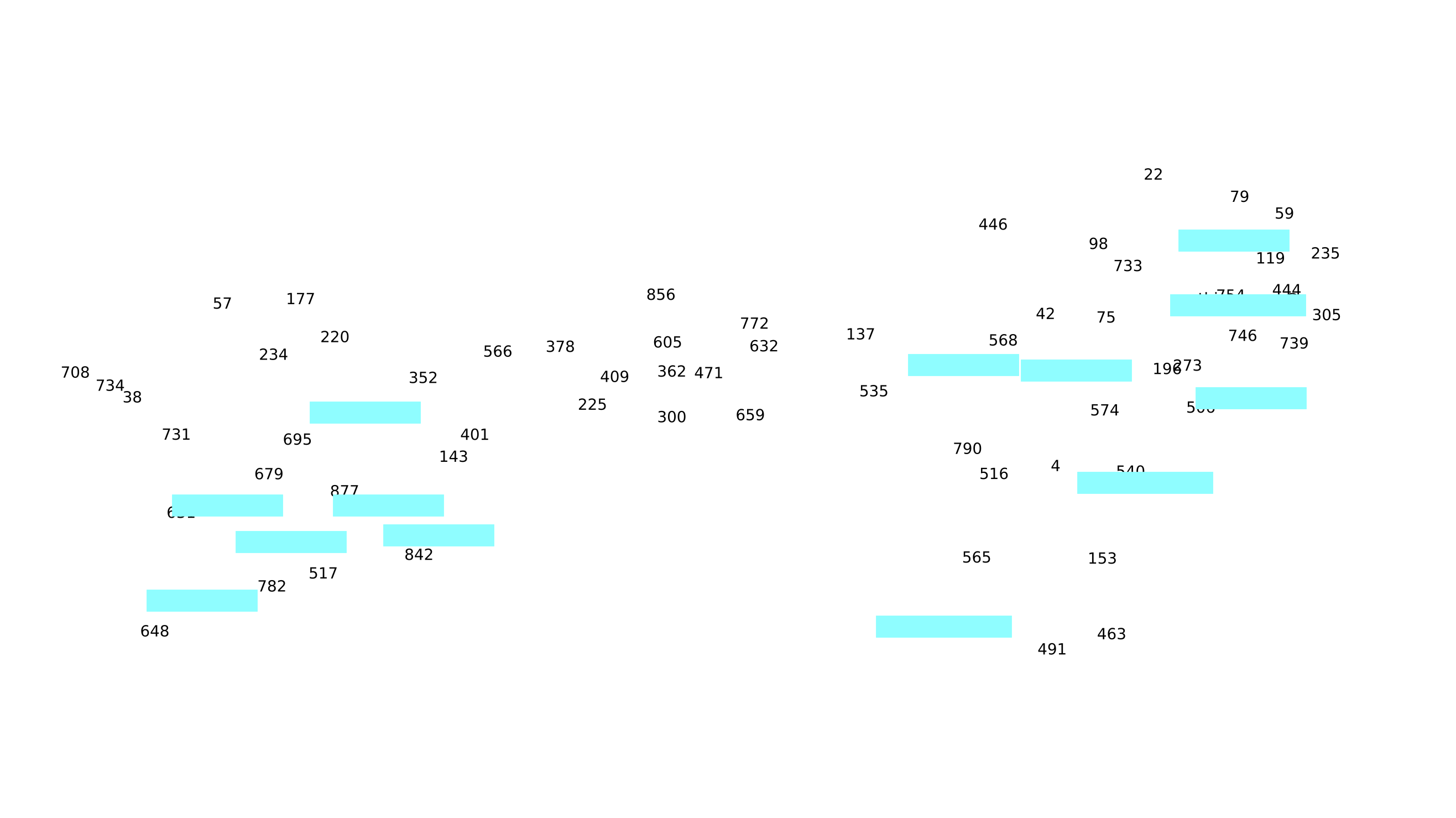

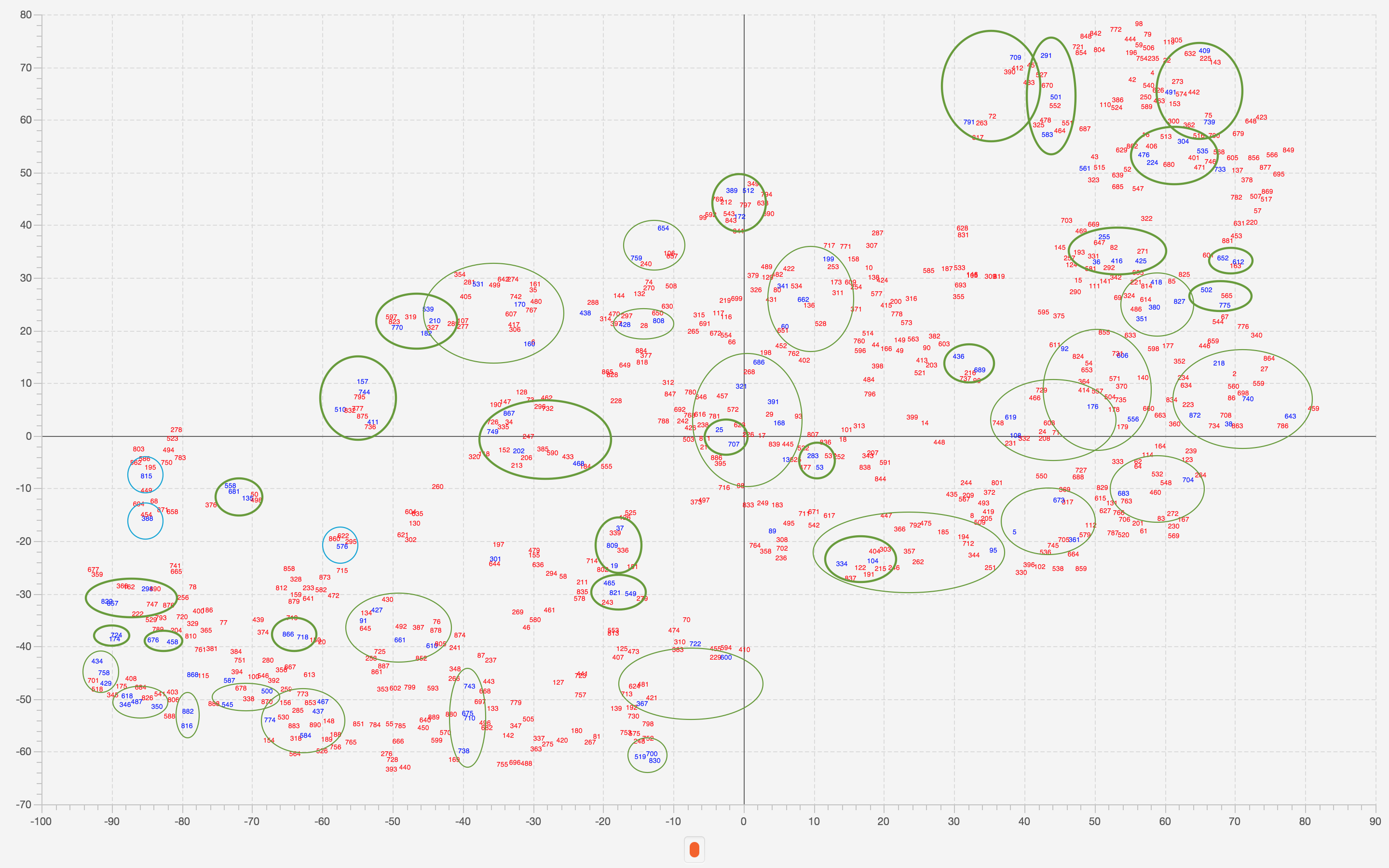

Combined embedding

(cyan rectangles are reviewers)

Sessions

The same technique was used to create sessions and to assign papers to the rebuttal committee (which oversaw paper discussions).
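The slide says that the same similarity scores were reused to form sessions. One simple way to do this is greedy clustering. Take the next unassigned paper as a seed, then fill its session with the most similar unassigned papers. This is a hypothetical sketch, not the procedure actually used. The function name `form_sessions`, the precomputed pairwise `similarity` dictionary, and the fixed `session_size` are all assumptions.

```python
from typing import Dict, List, Tuple


def form_sessions(papers: List[str],
                  similarity: Dict[Tuple[str, str], float],
                  session_size: int) -> List[List[str]]:
    """Greedily group papers into sessions of at most session_size papers.

    similarity maps a pair of paper ids (in either order) to a score;
    pairs that are missing count as 0.
    """
    def sim(a: str, b: str) -> float:
        return similarity.get((a, b), similarity.get((b, a), 0.0))

    unassigned = list(papers)
    sessions = []
    while unassigned:
        seed = unassigned.pop(0)  # next paper in the given order starts a session
        # Rank the remaining papers by similarity to the seed, most similar first.
        ranked = sorted(unassigned, key=lambda p: sim(seed, p), reverse=True)
        members = [seed] + ranked[: session_size - 1]
        for p in members[1:]:
            unassigned.remove(p)
        sessions.append(members)
    return sessions


if __name__ == "__main__":
    sims = {("p1", "p3"): 0.9, ("p2", "p4"): 0.8, ("p1", "p2"): 0.1}
    print(form_sessions(["p1", "p2", "p3", "p4"], sims, session_size=2))
    # [['p1', 'p3'], ['p2', 'p4']]
```

Because each seed takes the best remaining matches, papers grouped late may end up in looser sessions. A global clustering method would spread that cost more evenly.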

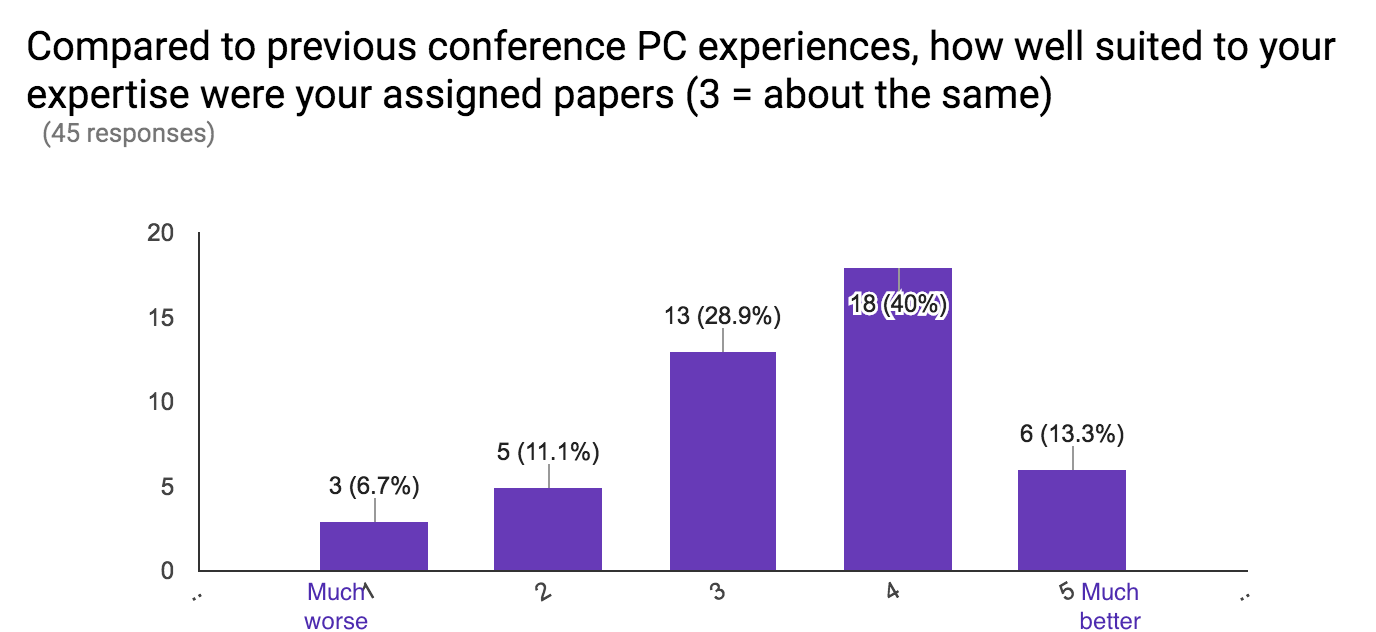

Appropriateness

Assignments were somewhat better suited to expertise

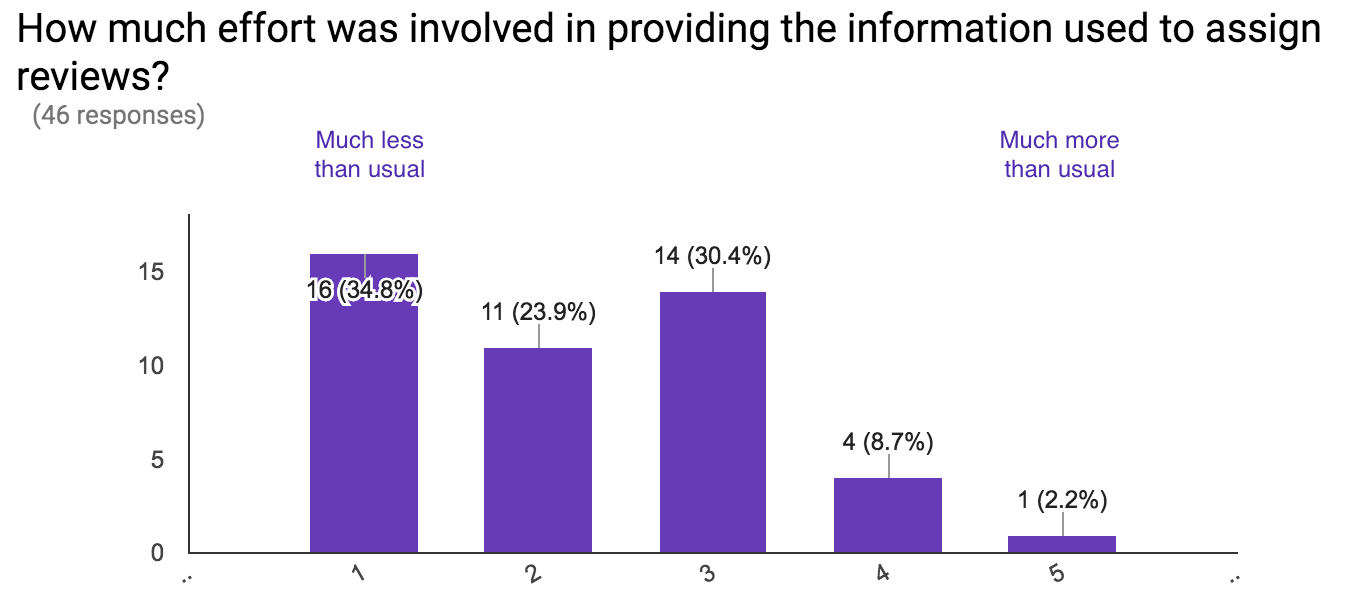

Effort from PC

Similar or less work for most PC members

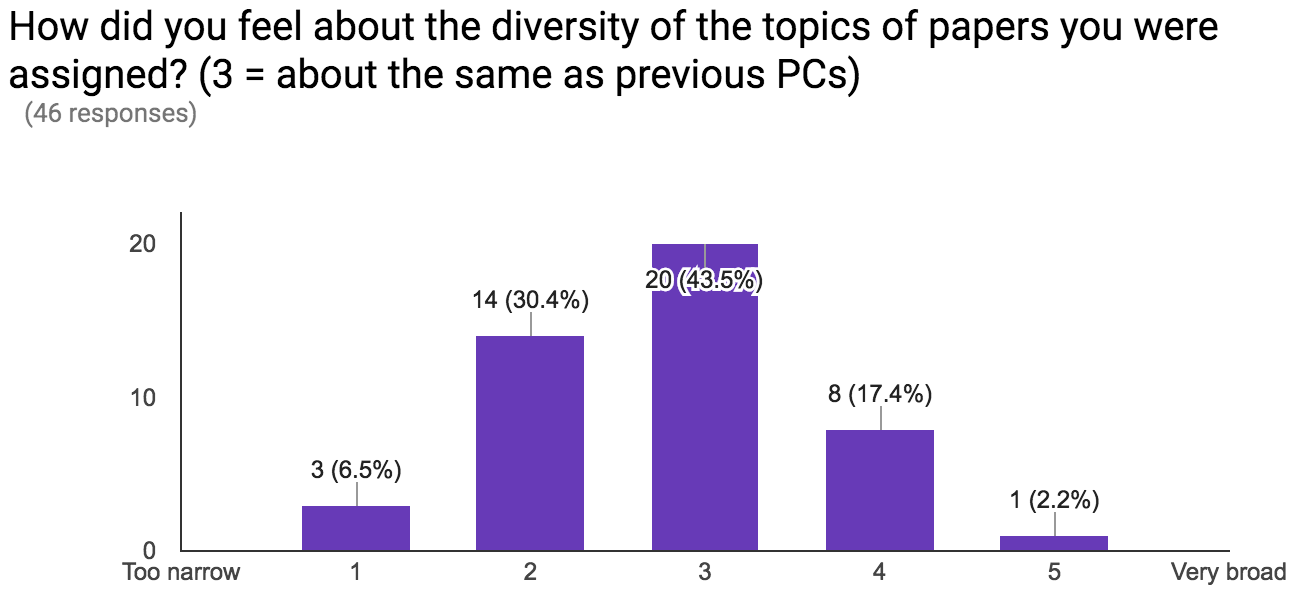

Diversity

Somewhat lower diversity of topics

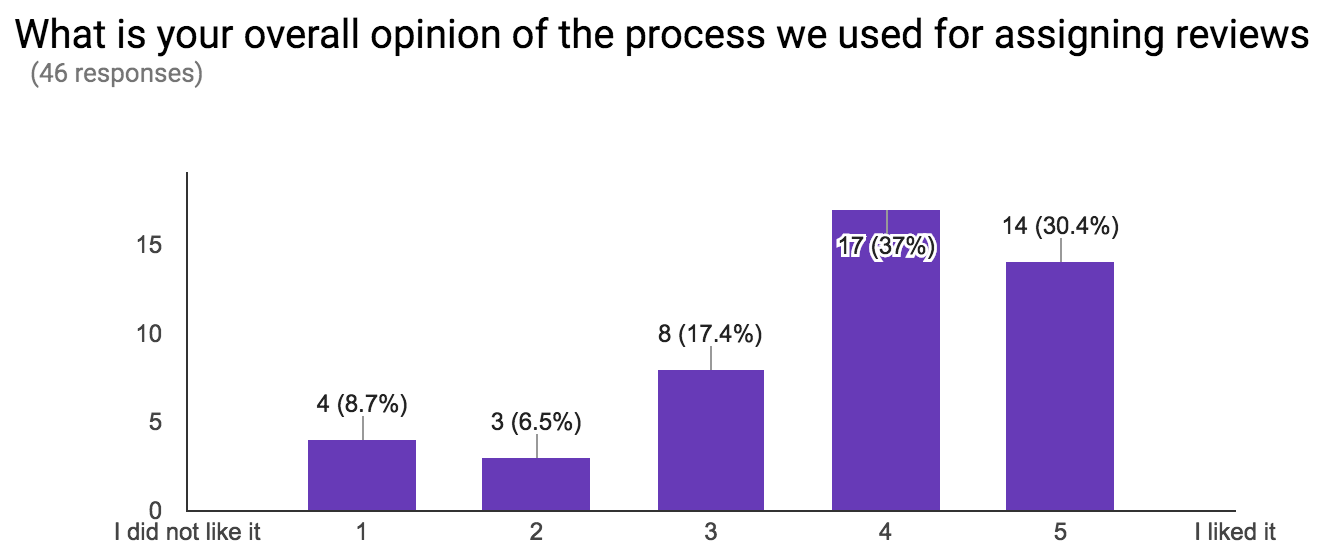

Overall

Generally positive!

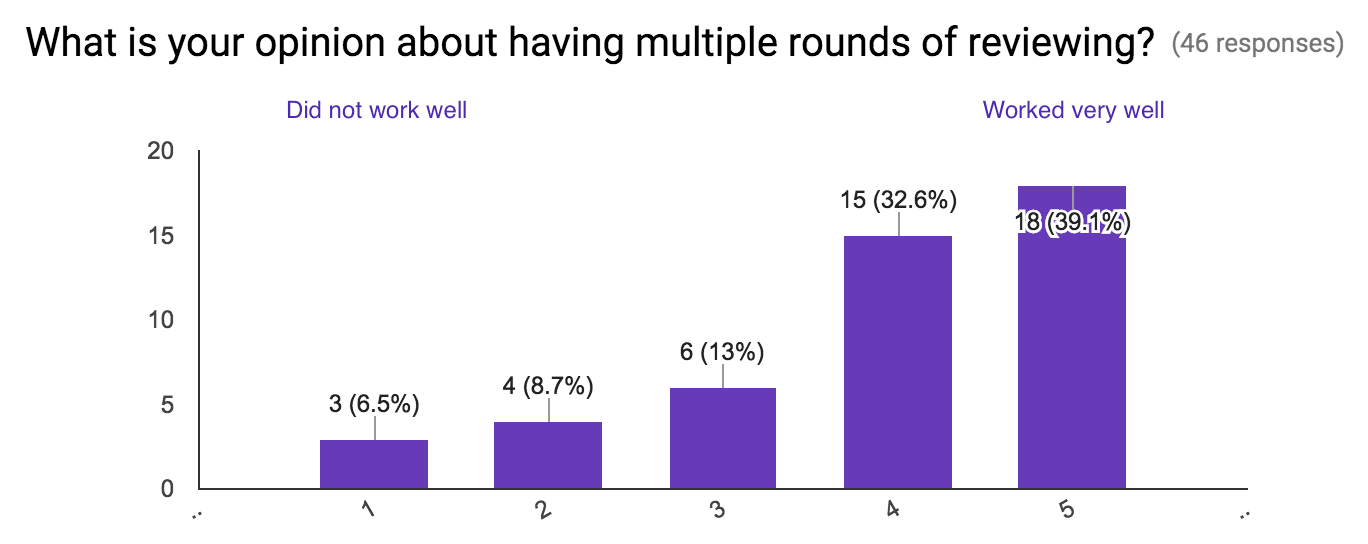

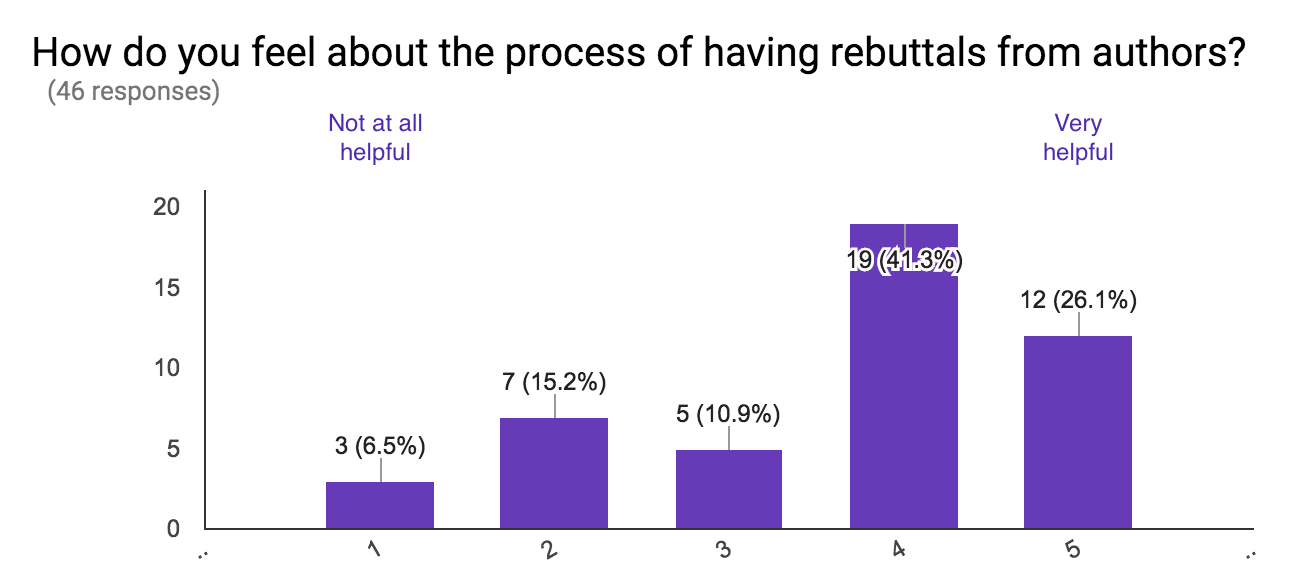

Multiple rounds

Rebuttals

Comments from PC poll

- Not enough time in 2nd round to find external reviewers

- Past papers don't capture current interests. Idea: bid (quickly) on a handful of papers but do the rest automatically.
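The suggestion to bid quickly on a handful of papers and let the system do the rest could be implemented in one simple way: an explicit bid overrides the automatic score for that pair. This sketch is hypothetical. The bid labels, the `BID_SCORES` scale, and the rule that a bid simply replaces the automatic score are all assumptions that the slides do not specify.

```python
from typing import Dict, Tuple

# Hypothetical bid scale: each bid label maps to a score on the same 0..1
# scale as the automatic similarity scores.
BID_SCORES = {"eager": 1.0, "willing": 0.7, "not_willing": 0.1}

Pair = Tuple[str, str]  # (reviewer, paper)


def combined_scores(auto: Dict[Pair, float], bids: Dict[Pair, str]) -> Dict[Pair, float]:
    """Use a reviewer's explicit bid where one exists; otherwise keep the
    automatically computed score."""
    combined = dict(auto)
    for pair, bid in bids.items():
        combined[pair] = BID_SCORES[bid]
    return combined


if __name__ == "__main__":
    auto = {("alice", "p1"): 0.4, ("alice", "p2"): 0.6}
    bids = {("alice", "p1"): "eager"}
    print(combined_scores(auto, bids))
    # {('alice', 'p1'): 1.0, ('alice', 'p2'): 0.6}
```

An alternative would be to blend bids and automatic scores with a weight rather than override them outright. That choice would need tuning against PC feedback.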

Thank you!

- To everyone who submitted papers

- To the general chairs and other organizers

- To reviewers (PC and external)

Shweta Agrawal, Gail-Joon Ahn, Martin Albrecht, Manos Antonakakis, Frederik Armknecht, Erman Ayday, Michael Backes, Davide Balzarotti, Karthikeyan Bhargavan, Alex Biryukov, Marina Blanton, Alexandra Boldyreva, Herbert Bos, Elie Bursztein, Kevin Butler, Juan Caballero, Yinzhi Cao, Srdjan Capkun, David Cash, Lorenzo Cavallaro, Yan Chen, Alessandro Chiesa, Elisha Choe, Omar Chowdhury, Véronique Cortier, Dana Dachman-Soled, George Danezis, Anupam Datta, Alexander De Luca, Rinku Dewri, Adam Doupé, Tudor Dumitras, Stefan Dziembowski, Manuel Egele, William Enck, Dario Fiore, Michael Franz, Matt Fredrikson, Xinwen Fu, Vinod Ganapathy, Juan Garay, Deepak Garg, Cristiano Giuffrida, Ian Goldberg, Zhongshu Gu, Amir Herzberg, Viet Tung Hoang, Thorsten Holz, Amir Houmansadr, Yan Huang, Tibor Jager, Abhishek Jain, Limin Jia, Hongxia Jin, Brent Byunghoon Kang, Chris Kanich, Stefan Katzenbeisser, Florian Kerschbaum, Dmitry Khovratovich, Taesoo Kim, Engin Kirda, Markulf Kohlweiss, Vladimir Kolesnikov, Ralf Kuesters, Ranjit Kumaresan, Andrea Lanzi, Peeter Laud, Wenke Lee, Anja Lehmann, Zhou Li, Zhenkai Liang, Benoît Libert, Zhiqiang Lin, Yao Liu, Ben Livshits, Long Lu, Matteo Maffei, Tal Malkin, Mohammad Mannan, Sarah Meiklejohn, Prateek Mittal, Ian Molloy, Steven Murdoch, Arvind Narayanan, Nick Nikiforakis, Hamed Okhravi, Claudio Orlandi, Xinming Ou, Charalampos Papamanthou, Bryan Parno, Mathias Payer, Roberto Perdisci, Adrian Perrig, Marco Pistoia, Michalis Polychronakis, Emmanuel Prouff, Christina Pöpper, Zhiyun Qian, Kui Ren, Konrad Rieck, William Robertson, Alejandro Russo, Andrei Sabelfeld, Ahmad-Reza Sadeghi, Nitesh Saxena, Jörg Schwenk, abhi shelat, Elaine Shi, Tom Shrimpton, Kapil Singh, Sooel Son, Douglas Stebila, Gianluca Stringhini, Thorsten Strufe, Ed Suh, Kun Sun, Jakub Szefer, Gang Tan, Stefano Tessaro, Mohit Tiwari, Nikos Triandopoulos, Mahesh Tripunitara, XiaoFeng Wang, Zhi Wang, Hoeteck Wee, Edgar Weippl, Dongyan Xu, Wenyuan Xu, Daphne Yao, Ting Yu, David Zage, Kehuan Zhang, Xiangyu Zhang, Yanchao Zhang, Yinqian Zhang, Sheng Zhong, Haojin Zhu

- Thanks for coming, see you next year!