Abstract : We discuss a novel decision tree architecture with soft decisions at the internal nodes where we choose both children with probabilities given by a sigmoid gating function. Our algorithm is incremental where new nodes are added when needed and parameters are learned using gradient-descent. We visualize the soft tree fit on a toy data set and then compare it with the canonical, hard decision tree over ten regression and classification data sets. Our proposed model has significantly higher accuracy using fewer nodes.

Code : My C++ code is here. Please cite the paper if you use it.

Data : The UCI datasets used in the paper are here, in a folder structure and file format that is compatible with the code. If you use the data, UCI Repository Citing Policy should apply.

If you want to replicate (sort of*) the results in the paper, simply extract the data and these bash scripts into the same folder as the code. Then, after compilation, running the scripts should run the experiments over each dataset in series, over all 10 folds in parallel, as background processes.

*: There are 2 reasons that these will not yield the exact results in the paper.

(1) After I refactored my code for release, there were numerical differences in the results. (2) For classification results in the paper, we used another implementation by Olcay Taner Yildiz, hence, there might be differences.

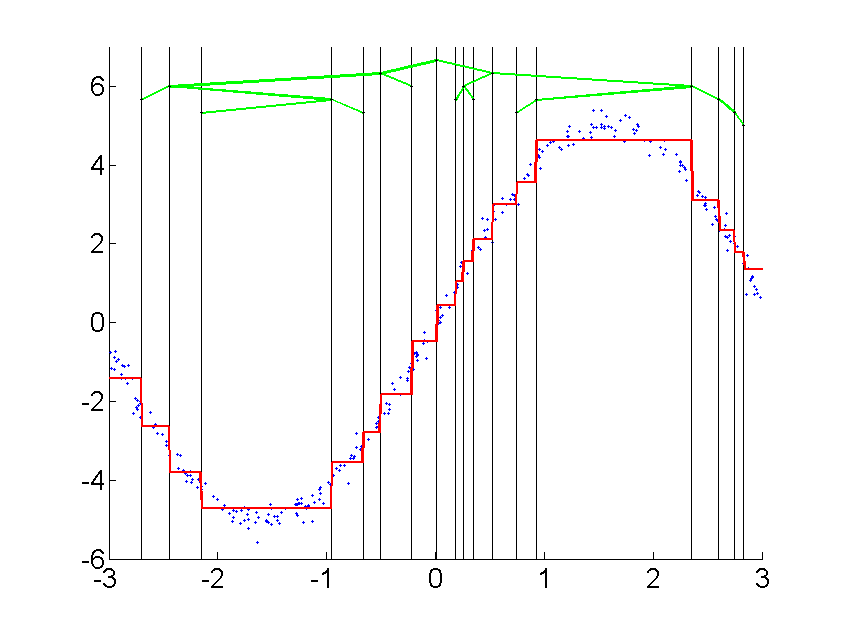

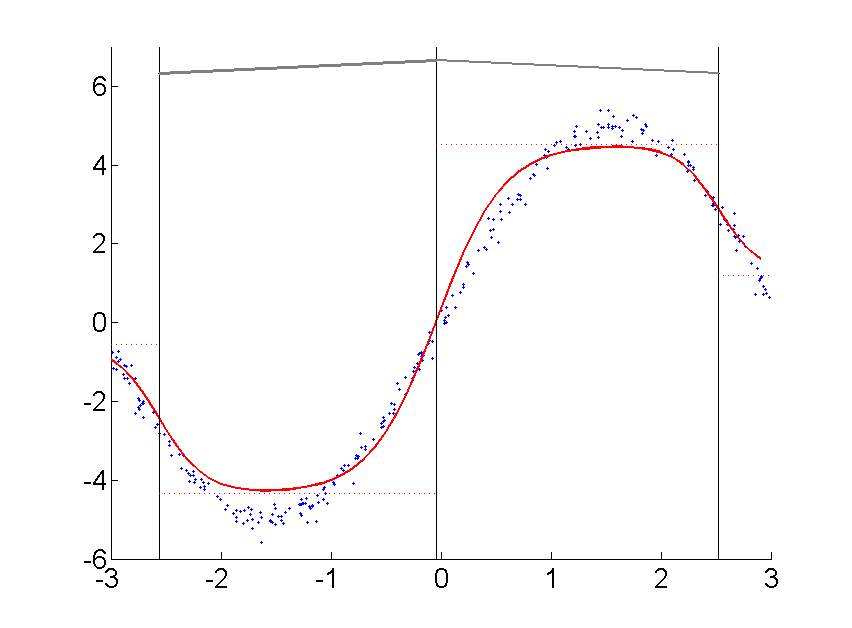

Hard (left) and soft (right) tree fits to sinusoidal data.

Trees show the split hierarchy and vertical bars show the split positions.

Red curve shows the fit.

Hard (left) and soft (right) tree fits to sinusoidal data.

Trees show the split hierarchy and vertical bars show the split positions.

Red curve shows the fit.More plots here.

Bibtex:

@inproceedings{irsoy2012soft,

title={Soft decision trees},

author={Irsoy, Ozan and Yildiz, Olcay Taner and Alpaydin, Ethem},

booktitle={International Conference on Pattern Recognition},

year={2012}

}