Stay Positive: Non-Negative Image Synthesis for Augmented Reality

Abstract

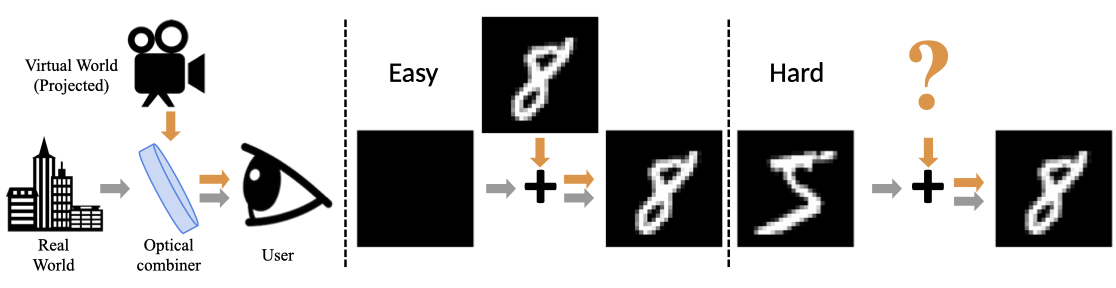

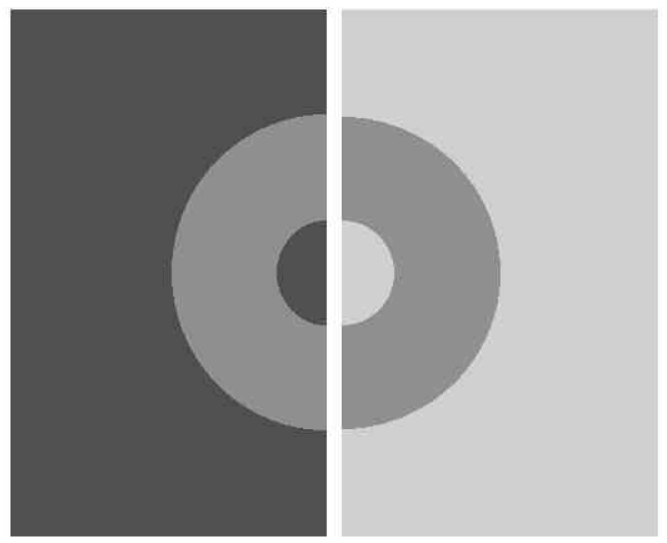

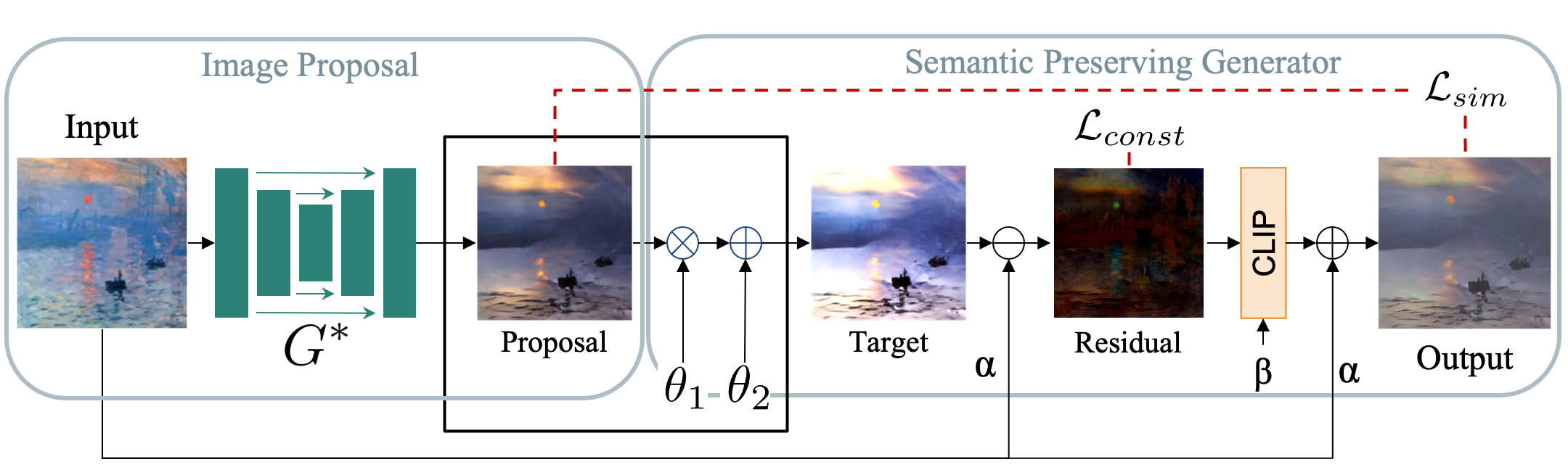

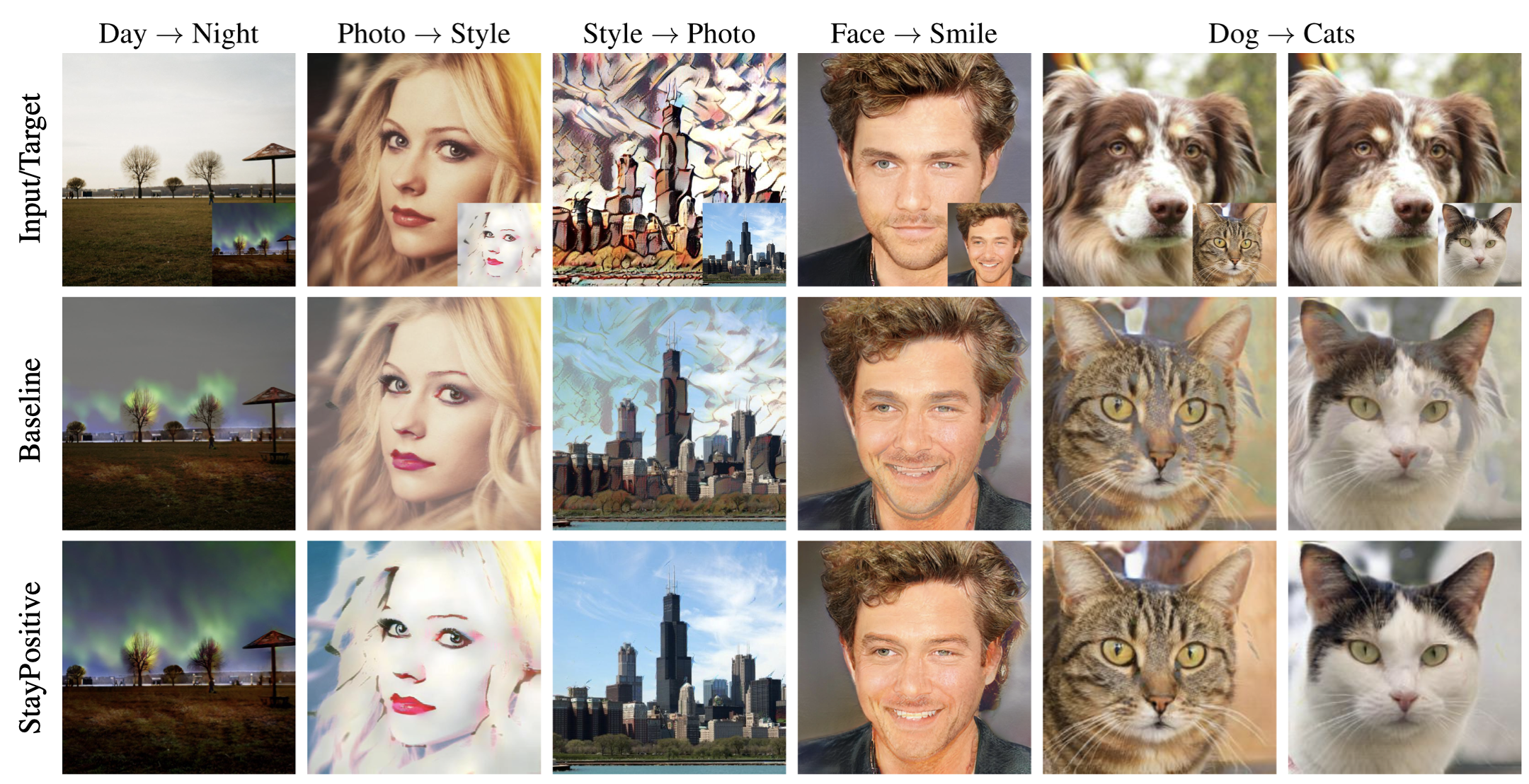

In applications such as optical see-through and projector augmented reality, producing images amounts to solving non-negative image generation, where one can only add light to an existing image. Most image generation methods, however, are ill-suited to this setting, because they assume that each pixel can be assigned an arbitrary color. In fact, naive application of existing methods fails even in simple domains such as MNIST digits, since one cannot create darker pixels by adding light. We know, however, that the human visual system can be fooled by optical illusions involving certain spatial configurations of brightness and contrast. Our key insight is that one can leverage this behavior to produce high-quality images with negligible artifacts. For example, we can create the illusion of darker patches by brightening the surrounding pixels. We propose a novel optimization procedure to produce images that satisfy both semantic and non-negativity constraints. Our approach can incorporate existing state-of-the-art methods and exhibits strong performance in a variety of tasks, including image-to-image translation and style transfer.

Our key insight: leverage optical illusions to produce high-quality images with negligible artifacts. For example, we can create the illusion of darker patches by brightening the surrounding pixels.
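To make the non-negativity constraint concrete, the following minimal sketch illustrates the naive baseline that the abstract says fails; it is not the proposed optimization procedure. It assumes a simple additive model in which the observed image is the background plus the added light, with grayscale intensities in [0, 1] stored as flat lists. The function names `naive_additive_residual` and `composite` and the pixel values are hypothetical, chosen only for illustration.

```python
def naive_additive_residual(background, target, max_light=1.0):
    """Light to add per pixel so that background + residual approaches target.

    Assumed model (not from the source): observed = background + residual,
    with intensities in [0, max_light]. The residual is clamped so that it is
    never negative and never pushes a pixel above max_light.
    """
    residual = []
    for b, t in zip(background, target):
        r = t - b                  # light we would like to add (may be negative)
        r = max(0.0, r)            # non-negativity: light cannot be subtracted
        r = min(r, max_light - b)  # the display cannot exceed full brightness
        residual.append(r)
    return residual


def composite(background, residual):
    """What the viewer sees: the existing scene plus the added light."""
    return [b + r for b, r in zip(background, residual)]


# Three hypothetical pixels: one to brighten, one to keep, one to darken.
background = [0.2, 0.6, 0.9]
target = [0.5, 0.6, 0.3]

residual = naive_additive_residual(background, target)
observed = composite(background, residual)

# Per-pixel error between what is seen and what was wanted.
errors = [abs(o - t) for o, t in zip(observed, target)]
print(residual)
print(observed)
print(errors)
```

Under these assumptions, the first two pixels reach their targets, but the third stays at the background intensity because clamping sets its residual to zero. That unreachable darker pixel is the case the abstract addresses by brightening the surrounding pixels instead.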

Acknowledgements

This work was supported in part by grants from Magic Leap and Facebook AI, and a donation from NVIDIA.