|

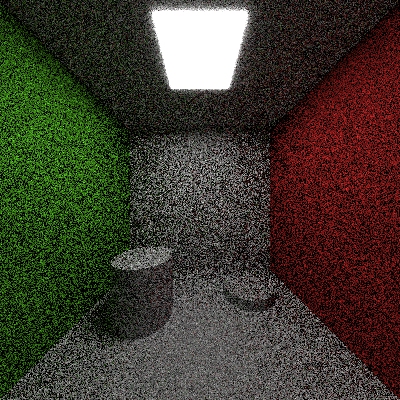

Light sampling = 2x2 jittered |

|

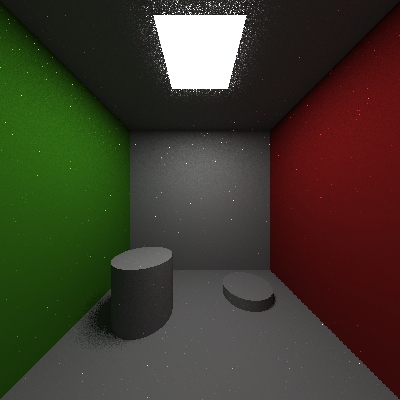

Light sampling = 4x4 jittered |

|

Light sampling = 8x8 jittered |

Monte Carlo ray tracing lets you simulate light transport in a very accurate (and very time-consuming) way.

Here are some details of my two different implementations, with images compared to those obtained by ray tracing, to show some of the effects you can obtain by rendering with Monte Carlo techniques.

My first implementation used the Pixar RenderMan Shading Language.

This language is really powerful and lets you implement a Monte Carlo ray tracer in very few lines.

I implemented a very simple Monte Carlo ray tracer using both plain Monte Carlo sampling and stratified sampling. I also added the next event estimation optimization, which reduces the noise a lot, but I should check more carefully before being sure that the integration is completely correct (the image seems to be). The implementation is straightforward in RenderMan (about 30 lines of code in total).

The features of my second implementation, based on Rayshade, are the same as above, with the addition of an option for using the path tracing technique. I also implemented an option for rendering only the direct illumination, which can be helpful if you want to compare results in the images you render.

To save time, all the options are hardwired in an initialization function in the code. This is not so bad, since I only run the code in debug mode, both to check some strange instabilities that sometimes happen (I have no clue why) and because I am only looking for possible bugs.

Using Rayshade I had a lot of problems, because strange things sometimes happen, and I really don't know why yet (I didn't spend much time trying to figure out the cause; my guess is that something strange happens when using the Rayshade libraries with the Microsoft compiler, see below).

I also implemented next event estimation (that is, rendering the direct illumination contribution separately from the indirect one), but I can't really use it because it is pretty unstable. I implemented a "biased" Monte Carlo integration instead, and that way it works perfectly, but it is biased, so it is physically wrong.

Since I am evaluating the cost of the algorithms by the number of rays cast (the execution time of the rendering function is linear in that number), I can simply use the formulas for how many rays I cast to evaluate the running time.

For the ray tracing implementation, the running time is proportional to `res * (1 + light_res)` rays, where `res` is the resolution of the rendered image and `light_res` is the number of samples used to approximate the light source.

For the path tracing approach, the number of rays cast is `res * (1 + res_theta * res_phi * depth)`, where `res_theta` is the number of subdivisions of the angle theta, `res_phi` the number of subdivisions of the angle phi, and `depth` the recursion depth. I normally take `res_theta = res_phi / 4`.

For the Monte Carlo approach, the number of rays cast is `res * (1 + (res_theta * res_phi) ^ depth)`.
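The three cost formulas can be transcribed into small functions, which makes the comparison between the approaches concrete. This is only a sketch of the formulas above: the function names are hypothetical, and `res` is taken to be the number of pixels (an assumption), so calling them with `res = 1` gives rays per pixel.

```python
def rays_raytracing(res: int, light_res: int) -> int:
    """Ray tracing: one primary ray plus light_res shadow rays per pixel."""
    return res * (1 + light_res)


def rays_pathtracing(res: int, res_theta: int, res_phi: int, depth: int) -> int:
    """Path tracing: one path per (theta, phi) cell, each up to `depth` rays long."""
    return res * (1 + res_theta * res_phi * depth)


def rays_montecarlo(res: int, res_theta: int, res_phi: int, depth: int) -> int:
    """Branching Monte Carlo: every bounce spawns res_theta * res_phi new rays.

    Note: Python uses ** for powers; ^ would be a bitwise XOR.
    """
    return res * (1 + (res_theta * res_phi) ** depth)


if __name__ == "__main__":
    # Rays per pixel (res = 1) for settings used in the text.
    print(rays_raytracing(1, 8 * 8))       # 65
    print(rays_pathtracing(1, 4, 16, 8))   # 513
    print(rays_montecarlo(1, 4, 16, 2))    # 4097
```

With these settings, the Monte Carlo approach at `depth = 2` already casts about eight times as many rays per pixel as path tracing at `depth = 8` (4097 vs 513), and every extra level multiplies its cost by `res_theta * res_phi`.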

The code runs in about 50 minutes for path tracing with `depth = 8`, `res_phi = 16` and `res_theta = 4`.

To compare the speed of the various algorithms, I decided to compare just the total number of rays cast.

This should be a good measure if you want to compare the quality vs. time ratio of the various algorithms. It is also better than comparing processor time: I always run the code in debug mode from the IDE while I am working on other programs, so processor time is not a reliable measure.

I first rendered some images using ray tracing. The scene is a modified version of the Cornell box, rendered using the Rayshade-based implementation.

As expected, you lose interreflection; that is to say, ray tracing is fine as long as the contribution of indirect light is small. We can also say that it is fine as long as the gradient of the diffuse light is really small in the region we want to render. The value of ambient light I chose is just a trial value. You should normally try to match it to the scene you want to render (especially if you are working in an interactive local illumination environment to model the scene).

Soft shadows can be obtained using jittered sampling of the area light source on the ceiling, so, as argued before, they are not a problem for ray tracing (at least you can simulate them so that the scene looks "reasonable").
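Jittered sampling of a rectangular area light can be sketched as follows: the light is split into an `n x n` grid and one random point is taken inside each cell, so the `n * n` shadow rays are spread evenly over the light and partial visibility produces a soft penumbra. This is a minimal sketch assuming a parallelogram light described by a corner and two edge vectors; `jittered_light_samples`, `visible_fraction` and the `thin_wall` occluder are hypothetical stand-ins, not Rayshade or RenderMan calls.

```python
import random
from typing import Callable, List, Tuple

Vec3 = Tuple[float, float, float]


def jittered_light_samples(n: int, corner: Vec3, edge_u: Vec3, edge_v: Vec3,
                           rng: random.Random) -> List[Vec3]:
    """Return n * n points on a parallelogram light, one jittered point per grid cell."""
    points: List[Vec3] = []
    for i in range(n):
        for j in range(n):
            s = (i + rng.random()) / n  # random offset inside cell (i, j) along edge_u
            t = (j + rng.random()) / n  # random offset inside cell (i, j) along edge_v
            points.append(tuple(c + s * u + t * v
                                for c, u, v in zip(corner, edge_u, edge_v)))
    return points


def visible_fraction(shading_point: Vec3, light_points: List[Vec3],
                     occluded: Callable[[Vec3, Vec3], bool]) -> float:
    """Fraction of shadow rays that reach the light: 0 in the umbra, 1 when fully lit."""
    visible = sum(1 for p in light_points if not occluded(shading_point, p))
    return visible / len(light_points)


def thin_wall(a: Vec3, b: Vec3) -> bool:
    """Hypothetical occluder: a thin wall in the plane x = 0, from the floor up to z = 1."""
    if (a[0] < 0.0) == (b[0] < 0.0):
        return False  # the shadow ray does not cross the plane x = 0
    t = a[0] / (a[0] - b[0])  # segment parameter where it crosses x = 0
    return a[2] + t * (b[2] - a[2]) < 1.0


if __name__ == "__main__":
    rng = random.Random(1)
    # 4x4 jittered samples on a hypothetical 1 x 1 ceiling light at height 2.
    light = jittered_light_samples(4, (-0.5, -0.5, 2.0), (1.0, 0.0, 0.0),
                                   (0.0, 1.0, 0.0), rng)
    for x in (-0.4, -0.2, 0.0):
        print(x, visible_fraction((x, 0.0, 0.0), light, thin_wall))
```

Moving the shading point towards the wall walks into its penumbra, so the printed fraction drops in steps instead of jumping from 1 to 0; a larger `n` makes those steps finer, which is the noise reduction visible from 2x2 to 8x8.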

Here are three images rendered using different samplings of the area light. As expected, the noise is reduced a lot from the first to the last image simply by taking more samples on the light source.

Light sampling = 2x2 jittered

Light sampling = 4x4 jittered

Light sampling = 8x8 jittered

As can be seen from the images, the noise is really high. Even when changing the recursion depth of the ray tracer, the noise does not seem to be much different from before, even though the rendering time grows almost linearly with the depth (see above).

I also tried rendering a high-resolution image and applying some filters with an image processing program, but, as expected, this is practically useless since the noise is too high.

Just by playing with the gamma and exposure values in glimage, it is clear that the lack of the direct illumination contribution is the main reason for such noise at a low number of samples per pixel (this is pretty obvious physically, but having proof is always good).

See below for some images.

As expected, this very simple modification reduces the noise quite a bit, while maintaining the same rendering time.

Here are two images rendered with `depth = 2`, `res_phi = 16` and `res_theta = 4`.

As can be seen in the central part of the floor and of the walls, the noise is clearly reduced even though I am using the same number of sample points.

Non-stratified sampling, depth = 2, res_theta = 4, res_phi = 16

Stratified sampling, depth = 2, res_theta = 4, res_phi = 16
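The effect of stratification can be reproduced on a toy integral. The sketch below estimates the integral of cos(theta) over the hemisphere, whose exact value is pi, with the same number of directions either drawn independently (plain sampling) or with one jittered sample per cell of a `res_theta x res_phi` grid. As an assumption, the grid is laid out in (cos theta, phi) rather than (theta, phi), so every cell covers the same solid angle; the function names are hypothetical.

```python
import math
import random
import statistics


def plain_directions(n, rng):
    """n independent directions, uniform in solid angle, as (cos_theta, phi) pairs."""
    return [(rng.random(), 2.0 * math.pi * rng.random()) for _ in range(n)]


def stratified_directions(res_theta, res_phi, rng):
    """One jittered direction per cell of a res_theta x res_phi grid in (cos_theta, phi)."""
    return [((i + rng.random()) / res_theta,
             2.0 * math.pi * (j + rng.random()) / res_phi)
            for i in range(res_theta) for j in range(res_phi)]


def estimate_cosine_integral(directions):
    """Estimate the integral of cos(theta) over the hemisphere (exact value: pi).

    Directions are uniform in solid angle, so the pdf is 1 / (2 pi) and every
    sample is weighted by 2 pi.
    """
    return statistics.fmean([2.0 * math.pi * cos_t for cos_t, _phi in directions])


if __name__ == "__main__":
    rng = random.Random(0)
    res_theta, res_phi, trials = 4, 16, 2000
    n = res_theta * res_phi  # same number of rays for both strategies
    plain = [estimate_cosine_integral(plain_directions(n, rng)) for _ in range(trials)]
    strat = [estimate_cosine_integral(stratified_directions(res_theta, res_phi, rng))
             for _ in range(trials)]
    for name, values in (("plain", plain), ("stratified", strat)):
        print(f"{name:10s} mean {statistics.fmean(values):.4f} "
              f"std {statistics.stdev(values):.4f}")
```

Both estimators average to pi, but for the 4 x 16 grid the stratified one has a standard deviation about four times smaller (in theory exactly a factor of 4, since each theta stratum spans a quarter of the cos theta range and this integrand does not depend on phi), for the same 64 rays.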

As can be expected from the geometry of the scene, direct illumination plays a very important role: many of the surfaces in the scene are lit directly, and direct lighting is the most important contribution to their illumination. So, to drastically reduce the noise in these areas, it is really important to raise the resolution of the stratified sampling grid as much as possible, to raise the probability of hitting the light.
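The link between grid resolution and hitting the light can be checked with a small hemisphere-sampling toy: a receiver at the origin facing up, and a hypothetical 1 x 1 square light, facing down, centred 2 units above it. The sketch counts how many of the stratified directions actually hit the light for the three grids used in the images; only those directions carry direct illumination. The geometry and function names are assumptions for illustration.

```python
import math
import random

LIGHT_HALF = 0.5  # hypothetical square light of side 1, parallel to the floor,
LIGHT_Z = 2.0     # centred 2 units above a receiver at the origin (normal +z)


def hits_light(cos_theta, phi):
    """True if the ray leaving the origin in direction (theta, phi) hits the light."""
    if cos_theta <= 0.0:
        return False
    sin_theta = math.sqrt(1.0 - cos_theta * cos_theta)
    t = LIGHT_Z / cos_theta  # ray parameter where it reaches the plane z = LIGHT_Z
    x = t * sin_theta * math.cos(phi)
    y = t * sin_theta * math.sin(phi)
    return abs(x) <= LIGHT_HALF and abs(y) <= LIGHT_HALF


def count_light_hits(res_theta, res_phi, rng):
    """Count stratified directions (one per (cos_theta, phi) cell) that hit the light."""
    hits = 0
    for i in range(res_theta):
        for j in range(res_phi):
            cos_theta = (i + rng.random()) / res_theta
            phi = 2.0 * math.pi * (j + rng.random()) / res_phi
            if hits_light(cos_theta, phi):
                hits += 1
    return hits


if __name__ == "__main__":
    rng = random.Random(0)
    for res_theta, res_phi in ((4, 16), (8, 32), (16, 64)):
        hits = count_light_hits(res_theta, res_phi, rng)
        print(f"{res_theta}x{res_phi}: {hits} of {res_theta * res_phi} "
              f"directions hit the light")
```

In this toy the light subtends about 0.235 sr, roughly 3.7% of the hemisphere, so the expected number of hits is about 2.4, 9.6 and 38 for the three grids: with the coarsest grid a pixel gets only a couple of directions (sometimes none) that see the light at all.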

Here are three images rendered using path tracing and stratified sampling, with different grids for theta and phi.

depth = 2, res_theta = 4, res_phi = 16

depth = 2, res_theta = 8, res_phi = 32

depth = 2, res_theta = 16, res_phi = 64

As before, the depth does not play a very important role in reducing the noise, since it matters only for indirect illumination. Moreover, since a random walk contributes only if it hits the light source in the scene, the probability of getting an indirect illumination contribution also converges slowly, and the noise of the indirect contribution is very large.

depth = 2, res_theta = 4, res_phi = 16

depth = 4, res_theta = 4, res_phi = 16

depth = 8, res_theta = 4, res_phi = 16
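Why indirect illumination converges so slowly can be seen on a deliberately crude model (an assumption, not the Cornell box): every ray hits the light with probability `P_LIGHT`, and otherwise lands on a diffuse surface of albedo `RHO` and continues. A path-tracing random walk then returns a nonzero value only if it eventually reaches the light, and for a walk truncated at `depth` bounces its mean has the closed form `P_LIGHT * LE * sum_k ((1 - P_LIGHT) * RHO)^k` for `k = 0 .. depth - 1`. All constants and names are hypothetical.

```python
import random
import statistics

P_LIGHT = 0.05  # hypothetical probability that any ray hits the light
RHO = 0.7       # hypothetical diffuse albedo of every surface
LE = 1.0        # hypothetical emitted radiance of the light


def random_walk(depth, rng):
    """One path-tracing sample: contributes only if the walk reaches the light."""
    throughput = 1.0
    for _ in range(depth):
        if rng.random() < P_LIGHT:
            return throughput * LE
        throughput *= RHO  # bounce off a diffuse surface and keep walking
    return 0.0  # truncated at `depth` without ever reaching the light


def expected_value(depth):
    """Closed-form mean of random_walk for this toy model."""
    q = (1.0 - P_LIGHT) * RHO
    return P_LIGHT * LE * sum(q ** k for k in range(depth))


if __name__ == "__main__":
    rng = random.Random(0)
    for depth in (2, 4, 8):
        samples = [random_walk(depth, rng) for _ in range(100_000)]
        zero = sum(s == 0.0 for s in samples) / len(samples)
        print(f"depth {depth}: mean {statistics.fmean(samples):.4f} "
              f"(exact {expected_value(depth):.4f}), "
              f"{zero:.0%} of walks return 0")
```

Even at depth 8, about two thirds of the walks (0.95^8, roughly 0.66) return exactly zero in this toy, which is where the large noise of the indirect term comes from.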

Since the time to render a Monte Carlo image is exponential in the recursion depth, the quality/speed ratio is not very good. To be useful, you have to use a very low depth. Since, as shown above, the really important thing is raising `res_phi` and `res_theta` as much as possible, the depth used is really, really low. In a diffuse environment where direct light plays an important role, like here, this is not so bad: in any case, the most important contribution to much of the surface area in the picture is due to direct lighting, so capturing that contribution is normally the most important thing.
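The exponential cost of the Monte Carlo (branching) approach can be illustrated on a crude model, which is an assumption and not the Cornell box: every ray hits the light with probability `P_LIGHT`, otherwise it hits a diffuse surface of albedo `RHO`. The branching estimator shoots `n = res_theta * res_phi` rays from every surface it reaches, while path tracing follows `n` independent single-ray walks. Both have the same expected value; branching averages many rays at each bounce, which lowers the variance of the indirect term, but its ray count grows like `n^depth`. The sketch counts the rays each one casts; all names and constants are hypothetical.

```python
import random
import statistics

P_LIGHT, RHO, LE = 0.05, 0.7, 1.0  # hypothetical scene parameters


def branching(depth, n, rng, counter):
    """Monte Carlo with branching: every surface hit spawns n new rays."""
    total = 0.0
    for _ in range(n):
        counter[0] += 1
        if rng.random() < P_LIGHT:
            total += LE
        elif depth > 1:
            total += RHO * branching(depth - 1, n, rng, counter)
    return total / n


def path_tracing(depth, n, rng, counter):
    """Path tracing: n independent walks of at most `depth` rays each."""
    total = 0.0
    for _ in range(n):
        throughput = 1.0
        for _ in range(depth):
            counter[0] += 1
            if rng.random() < P_LIGHT:
                total += throughput * LE
                break
            throughput *= RHO
    return total / n


if __name__ == "__main__":
    rng = random.Random(0)
    n, depth, pixels = 16, 2, 2000
    for name, estimator in (("branching", branching), ("path tracing", path_tracing)):
        counter = [0]
        values = [estimator(depth, n, rng, counter) for _ in range(pixels)]
        print(f"{name:13s} mean {statistics.fmean(values):.4f} "
              f"std {statistics.stdev(values):.4f} "
              f"rays/pixel {counter[0] / pixels:.1f}")
```

With `n = 16` and `depth = 2`, the branching estimator casts roughly eight times as many rays per pixel as path tracing (at most `n + n^2` versus `n * depth`); the last level, `n^2`, dominates, matching the `(res_theta * res_phi) ^ depth` term in the cost formula.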

But, as can clearly be seen in the comparison below, the areas with indirect illumination (the ceiling and the cylinders in shadow) look clearly better with the Monte Carlo approach than with path tracing.

Monte Carlo, depth = 2, res_theta = 4, res_phi = 16

Path tracing, depth = 2, res_theta = 4, res_phi = 16

I also implemented next event estimation, but it seems to crash the program, so I implemented it in a biased way. This way the integrals are not estimated correctly, since I am biasing the random distribution of the cast rays, but it is good for estimating the convergence of the code. As expected, convergence is very fast this way.
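What happens when the distribution of cast rays is changed without correcting the weights can be shown on a one-line integral. The sketch below draws directions from a cosine-weighted distribution, which concentrates rays where the integrand is large (much as aiming rays at the light does). Dividing each sample by the pdf that generated it keeps the estimate unbiased; keeping the uniform-hemisphere weight, as if the rays had not been redistributed, converges quickly but to the wrong value. This illustrates the general effect only; it is not the author's code, and the names are hypothetical.

```python
import math
import random
import statistics


def cosine_weighted_cos_theta(rng):
    """cos(theta) of a cosine-weighted hemisphere direction (pdf = cos(theta) / pi)."""
    return math.sqrt(1.0 - rng.random())  # 1 - random() is in (0, 1], so cos > 0


def unbiased_sample(cos_theta):
    # Integrand cos(theta) divided by the pdf that actually generated the sample.
    return cos_theta / (cos_theta / math.pi)


def biased_sample(cos_theta):
    # Wrong weight: uses the uniform-hemisphere pdf 1 / (2 pi) although the
    # directions were drawn from the cosine-weighted distribution.
    return cos_theta * 2.0 * math.pi


if __name__ == "__main__":
    rng = random.Random(0)
    cos_values = [cosine_weighted_cos_theta(rng) for _ in range(100_000)]
    print("exact    ", math.pi)
    print("unbiased ", statistics.fmean([unbiased_sample(c) for c in cos_values]))
    print("biased   ", statistics.fmean([biased_sample(c) for c in cos_values]))
```

Here the unbiased weight returns pi for every sample, because the pdf is proportional to the integrand, while the biased weight converges to 4*pi/3 instead of pi: fast convergence alone says nothing about converging to the right answer.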

Here is an image obtained this way, compared to the same one rendered without next event estimation.

Since the code using the angle-based implementation was biased (in the sense that the unbiased one crashes everything), I decided to implement next event estimation (NEE) using an area integral instead of angles.
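The difference between the two formulations of direct lighting can be sketched for a single floor point under a square area light. Sampling directions over the hemisphere (the angle formulation) only scores when a direction happens to hit the light, while the area formulation picks points on the light and converts to solid angle with the geometry term `cos_theta * cos_theta_light / r^2`, so every sample contributes. The geometry (a 1 x 1 light 2 units above the point, facing down, no occluders) and the function names are assumptions; both estimators target the same irradiance.

```python
import math
import random
import statistics

LE = 1.0             # hypothetical emitted radiance
HALF, H = 0.5, 2.0   # 1 x 1 light centred 2 units above the receiver at the origin
AREA = (2 * HALF) ** 2


def hemisphere_estimate(n, rng):
    """Angle formulation: uniform hemisphere directions, pdf = 1 / (2 pi)."""
    total = 0.0
    for _ in range(n):
        cos_t = 1.0 - rng.random()  # in (0, 1], uniform in solid angle
        phi = 2.0 * math.pi * rng.random()
        sin_t = math.sqrt(1.0 - cos_t * cos_t)
        t = H / cos_t  # distance along the ray to the light plane
        x, y = t * sin_t * math.cos(phi), t * sin_t * math.sin(phi)
        if abs(x) <= HALF and abs(y) <= HALF:  # the direction hits the light
            total += LE * cos_t * 2.0 * math.pi
    return total / n


def area_estimate(n, rng):
    """Area formulation (NEE): uniform points on the light, pdf = 1 / AREA."""
    total = 0.0
    for _ in range(n):
        x = rng.uniform(-HALF, HALF)
        y = rng.uniform(-HALF, HALF)
        r2 = x * x + y * y + H * H
        cos_t = H / math.sqrt(r2)  # angle at the receiver (normal +z)
        cos_l = cos_t              # angle at the light (normal -z); equal in this layout
        total += LE * cos_t * cos_l / r2 * AREA
    return total / n


if __name__ == "__main__":
    rng = random.Random(0)
    n, trials = 64, 2000
    for name, est in (("hemisphere", hemisphere_estimate), ("area (NEE)", area_estimate)):
        values = [est(n, rng) for _ in range(trials)]
        print(f"{name:10s} mean {statistics.fmean(values):.4f} "
              f"std {statistics.stdev(values):.4f}")
```

Both means agree, but with 64 samples the area estimator's spread is far smaller, because no sample is wasted on directions that miss the light; with occluders, each area sample would also need a shadow ray.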

This implementation works fine, but I decided to keep the other images so that a comparison can be made. It is interesting (at least for me) to see what it means to bias the result of a Monte Carlo integration.

All the images in the table below are rendered at the same resolution, but it is clear that the next event estimation one converges faster.

Next event estimation, depth = 2, res_theta = 4, res_phi = 16, light sampling = 2x2 jittered

Path tracing, depth = 2, res_theta = 4, res_phi = 16

Biased next event estimation, depth = 2, res_theta = 4, res_phi = 16, light sampling = 4x4 jittered