CS6670 -- Computer Vision, Spring 2011

Project 3: Location Recognition

Modified 4/6/11

Assigned:

Wednesday,

April 6, 2011

Due: Sunday,

April 17, 2011 (by 11:59pm)

Synopsis

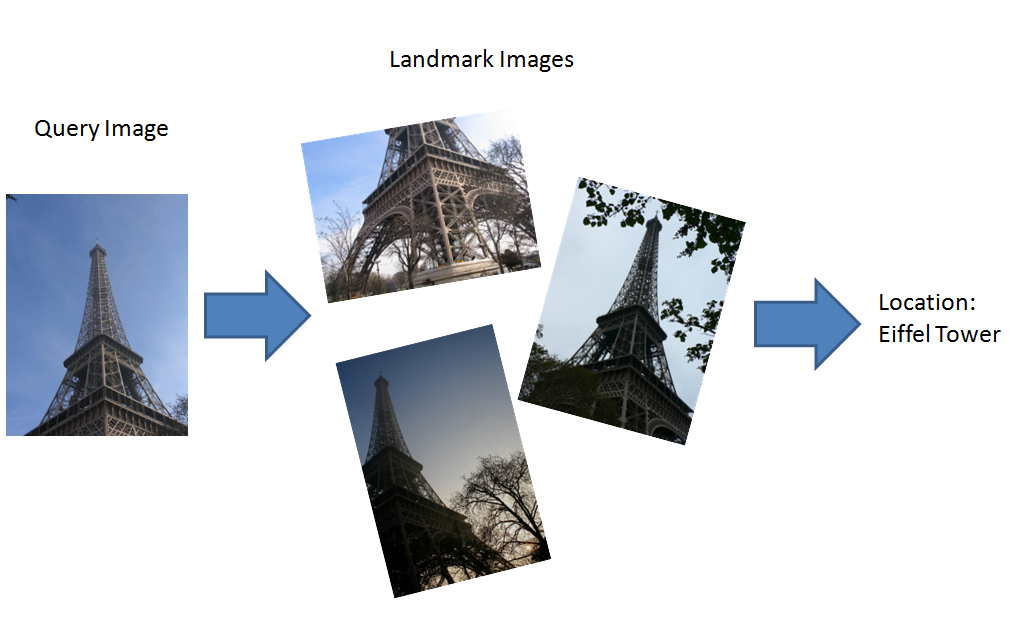

In this project you will create a location recognition system. This project is based on techniques described in this paper. In particular, visual words model will be used to retieve k most similar images in the database whose landmark information is known. Then voting is used to determine the landmark the query image belongs to. There are 3 parts in this project: learning a vocabulary tree using kmeans, building a weighted vocabulary tree using TFIDF weighting and location recognition using k-nearest neighbor and voting.

You are given a skeleton program that provides some tools and implementation to get started. Your job will be to fill in some critical functions from each of the 3 parts in this project mentioned above. This is a fairly good-sized project, so plan your time accordingly.

Datasets

The datasets you will be working with are "objects20", "objects100", "landmarks10" and "landmarks20". These are datasets with precomputed SIFT features included and the list of filenames for both query and database images. "objects" datasets are easier than landmarks datasets, which you should probably start with. Inside each directory are:

db: dababase images (thumnail versions) and corresponding SIFT feature files.

queries: query images (thumnail versions) and corresponding SIFT feature files.

list_db.txt: list of SIFT feature files of database images.

list_db_ld.txt: list of SIFT feature files of database images and corresponding landmark ID.

list_queries.txt: list of SIFT feature files of query images.

list_gt.txt: list of SIFT feature files of query images and corresponding landmark ID. (Ground truth)

script.linux.sh and script.windows.bat: scripts for testing for Linux (Mac) and Windows platforms.

In "objects20", the trees generated by solution code are also included to help you get started quickly. You could use these trees to test your database building or recognition code even if kmeans isn't totally working yet.

Skeleton Code

The skeleton code for this project could be downloaded here. Here are some descriptions about the basic structure of the skeleton code:

lib: Contains necessary libraries used in this project. Specifically, ann, ann_char (a modified version of ann), imagelib and zlib. Nothing need to be changed here.

VocabLearn (Step 1): Contains main function of learning a vocabulary tree using kmeans clustering algorithm, which corresponds to the 1st part of this project. The details of this algorithm could be found here. Please note that in this function only IO are dealt with, and the actual algorithm is not implemented here. Instead, most implementation of algorithms are in VocabLib. Thus nothing need to be changed here either.

VocabBuildDB (Step 2): Contains main function of building a TFIDF weighted vocabulary tree using second list of features.

VocabMatch (Step 3): Contains main function of matching a list of

new images to a given Vocab DB generated from Step 2. You'll need to

modify VocabMatch.cpp to implement kNN voting.

VocabLib: You will need to modify files in this directory to implement algorithms for above 3 steps. For VocabLearn, you'll need to modify kmeans.cpp to implement kmeans algorithm; for VocabBuildDB and VocabMatch, you'll need to implement relavant functions in VocabTree.cpp. The detailed information you need for such implementation could be found in TO DO section below.

If you are using linux (ubuntu), you probably need to install "zlib1g-dev" in order to compile the skeleton code successfully.

To Do

All the required work can be done in kmeans.cpp, VocabTree.cpp and VocabTreeBuild.cpp in VocabLib directory and VocabMatch.cpp in VocabMatch directory.

Each of the items below have an upper bound on the number of lines of code needed, not including comments. If you have many more lines of code for a given step than the upper bound, then you may be doing something wrong, or at least making more work for yourself. Note that points will not be taken off for making your code longer than necessary, as long as it is correct and well coded. However, you should try your best not to go too far over the upper bound, as it means unnecessary work for you and will make your code more difficult to read and harder to grade.

1. Implement compute_means in kmeans.cpp. This method recomputes the means based on the current clustering of the points.

Lines required: 17

1.5 Implement compute_error in kmeans.cpp. This method computes the error based on the current clustering of the points.

Lines required: 11

2. Implement compute_clustering in kmeans.cpp. This method recomputes the clustering based on the current means.

Lines required: 26

3. Implement kmeans in kmeans.cpp. This method runs kmeans clustering on a set of input descriptors.

Lines required: 50

4. Fill in VocabTreeInteriorNode::BuildRecurse in VocabTreeBuild.cpp. You'll need to fill in this part of the code for building a vocabulary tree using hierarchical k-means.

Lines required: 40

5. Implement VocabTreeInteriorNode::PushAndScoreFeature in VocabTree.cpp. This function will need to take the input descriptor v and recursively push it down the tree by finding the closest child descriptor at each node.

Lines required: 20

6. Implement VocabTreeLeaf::PushAndScoreFeature in VocabTree.cpp.

Lines required: 0

6 (real). Implement VocabTreeLeaf::ComputeTFIDFWeights in VocabTree.cpp. This part of the code sets the TFIDF weights of this leaf node (i.e., this visual word), according to how many documents this word is visible in.

Lines required: 5

7. Implement VocabTreeInteriorNode::ScoreQuery in VocabTree.cpp. This function, and the version for VocabTreeLeaf, will compute the similarity between the query histogram (stored in q), and the database images, stored in the inverted file.

Lines required: 5

8.

Implement VocabTreeLeaf::ScoreQuery

in VocabTree.cpp.This function will look at the inverted file at this

leaf node (visual word), stored in m_image_list, and will update the

similarities in scores based on the value of q. There are two types of

similarities: "dot" and "min": "dot" is a similarity computed by

summing the pair-wise product of two vectors on each dimension; "min"

is a similarity computed by summing the min value on each dimension

from 2 vectors.

Lines required: 15

9. Implement main in VocabMatch.cpp.You'll need to use k-nearest neighbors to compute the best landmark.

Lines required: 15

9. Run experiments with a variety of parameters --- i.e. try various

parameter values, larger vocabularies, different datasets and see what

works best. You'll need to report this part in some detail in you

webpage. (See "Experiments to Run" below for details.)

Experiments

Running Tests

There are scripts provided in each dataset to help you understand how to run the test. The steps are:

1. Learn a vocabulary tree (VocabLearn):

Usage: VocabLearn <list.in> <depth> <branching_factor> <restarts> <tree.out>

<list.in>: a list of newline-separated key filenames

<depth>: the depth of the tree (e.g. 4)

<branching_factor>: the number of children per parent node (e.g. 10)

<restarts>: the number of times to run k-means with random restarts at each node

<tree.out>: the output file to write the tree toExample:

> VocabLearn list_keys.txt 5 10 1 tree.out

2. Construct a weighted vocabulary tree using TFIDF weighting (VocabBuildDB):

Usage: VocabBuildDB <list.in> <tree.in> <db.out> [distance_type]

<list.in>: a list of newline-separated key filenames

<tree.in>: the (trained) input tree

<db.out>: the output database tree

[distance_type]: 0 is "dot"; 1 is "min". (As described in TODO #8, default "min")Example: VocabBuildDB list_keys_db.txt tree.out tree_db.out

3. Match a list of query images to weighted vocabulary tree (VocabMatch):

Usage: VocabMatch <tree.in> <db_ld.in> <query.in> <num_nbrs> <matches.out> [output.html] [distance_type]

<tree.in>: the input database

<db_ld.in>: the list of database key filenames and corresponding landmark ids

<query.in>: the list of query key filenames

<num_nbrs>: k value used in KNN

<matches.out>: the output file containing 3 columns: query id, the predicted category id, and the number of votes for predicted category out of <num_nbrs> votes in total.

[output.html]: the output html file, listing the top matches

[distance_type]: 0 is "dot"; 1 is "min". (As described in TODO #8, default "min")Example: VocabMatch tree_db.out list_keys_db.txt list_keys_query.txt 20 matches.txt 2

After generating matches.txt, you could evaluate the precision by using "datasets/eval.py" which takes in the ground truth file and matches.txt you generated and outputs the precision of your prediction.

Experiments to Run

For each of the four datasets, you will need to run your code with the best settings you have found and provide precision for each.You will also need to experiment with different settings: how does the performance change as the size of the vocabulary grows? What is the effect of the "dot" similarity vs. the "min" similarity?

For the landmark20 dataset, you will need to put a plot of performance vs. vocabulary size for each of the two similarity metrics, as well as a plot of performance vs. number of nearest neighbors used for knn with one of the similarity metrics. You should also link to the webpage output for landmark20 with your best settings for us to take a look at. Finally, you'll need to compare the performance of using a vocab tree learned on landmark20 and tested on landmark20 to one learned on objects20 and tested on landmark20. You are encouraged to include any additional experiments that you performed. Any extra credit you attempted should also be described on the webpage.

We'll run your code on our own test set, and the best-performing system will get a small bonus.

Write-up

Your source code and executable should be zipped up into an archive called 'code.zip', and your webpage describing your approach and results should be zipped up into an archive called 'webpage.zip'. Both of the arichives should be uploaded to CMS.

Your write-up should be an HTML document. You can also use KompoZer, an easy-to-use html editor that is installed on the lab machines. You should include the plots and experiment results described in "Experiments to Run". Finally, make sure to fully document any extra credit on this web page.