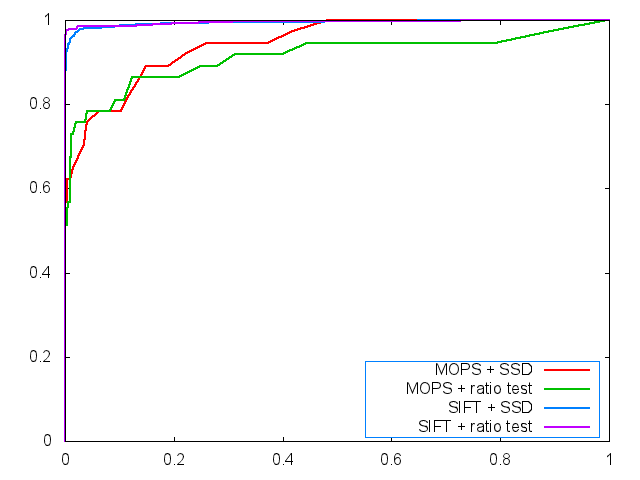

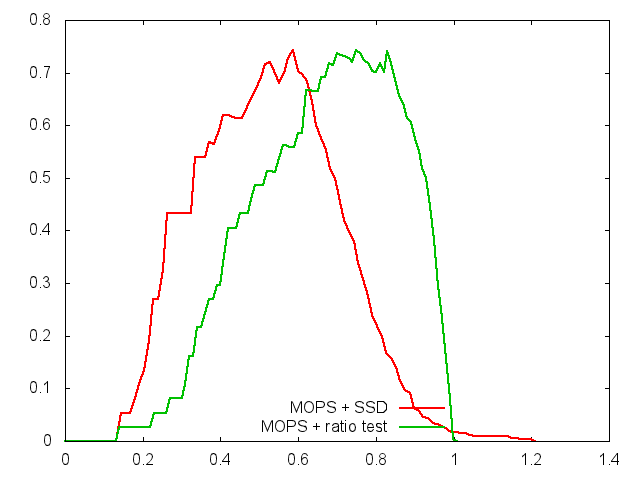

The custom feature descriptor I chose is a modified version of the MOPS descriptor from the lecture notes. Instead of converting the image to greyscale before computing the descriptor, I handle each color channel independently, and each channel is also intensity-normalized independently of the others. The descriptor should keep many of the properties of the MOPS descriptor, but it could be helpful in environments where color is more uniform. For example, I hoped it would not match two features that have different colors but look the same in greyscale. The drawback is that each descriptor takes up three times as much data as its greyscale counterpart, which adds up quickly and may become a problem. It may also be difficult to match images taken with different cameras, since cameras can handle color differently. Overall, this descriptor did not perform as well as I had hoped and may be useful only in special circumstances: it did better than the simple descriptor, but not as well as the MOPS descriptor.
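The per-channel normalization can be sketched as below. This is a minimal illustration, not the code used in the project: it assumes the three single-channel patches have already been sampled and rotated as in MOPS, and the names `normalize_channel` and `color_mops_descriptor`, as well as the choice to return zeros for a flat channel, are hypothetical.

```python
import math
from typing import List

# One color channel of an already sampled and rotated patch (assumption).
Patch = List[List[float]]


def normalize_channel(patch: Patch) -> List[float]:
    """Flatten one channel and normalize it to zero mean and unit standard deviation."""
    values = [v for row in patch for v in row]
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    std = math.sqrt(var)
    if std < 1e-10:
        # Assumed handling of a flat channel: return zeros instead of dividing by ~0.
        return [0.0] * len(values)
    return [(v - mean) / std for v in values]


def color_mops_descriptor(r: Patch, g: Patch, b: Patch) -> List[float]:
    """Concatenate the independently normalized R, G and B channels.

    The result is three times longer than a greyscale descriptor of the same patch size.
    """
    return normalize_channel(r) + normalize_channel(g) + normalize_channel(b)
```

For instance, the patches `r=[[1, 2], [3, 4]], g=[[5, 5], [5, 5]]` and `r=[[5, 5], [5, 5]], g=[[1, 2], [3, 4]]` (with a zero blue channel in both) have the same equal-weight greyscale average at every pixel, yet they produce different descriptors, which is the behaviour the color variant was meant to capture.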

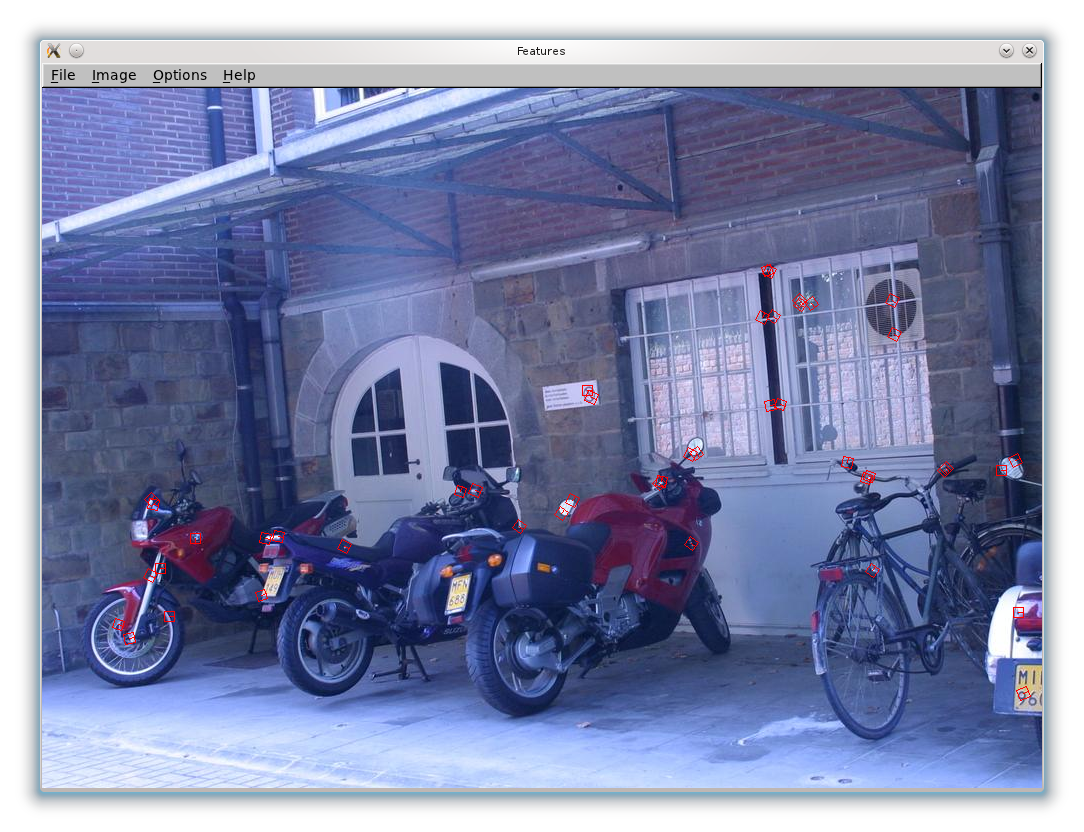

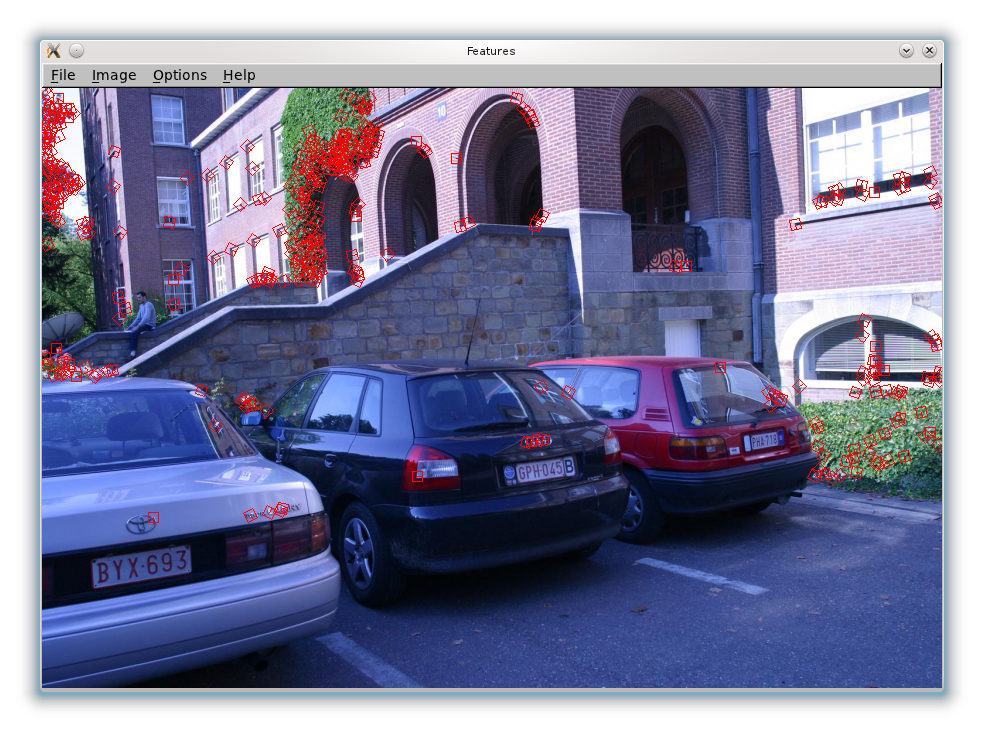

I changed very little of the existing skeleton code outside the functions I implemented. The only change I can think of is that I added two additional bands to the harrisImage object in ComputeHarrisFeatures(), so that I could calculate the angle of each corner in ComputeHarrisValues(). In my version of the code, the angle of the x_max eigenvector of the Harris matrix (the eigenvector of the larger eigenvalue) is calculated for every point in the image and stored in the second band of harrisImage. This is not an elegant solution, but it reuses the values that were already computed instead of calculating the Harris matrix again for each feature. In practice, it does not seem to cause any significant slowdown: each image is still processed on the order of milliseconds. I also found a corner-strength threshold of about 0.015 to be a suitable middle ground between the number of features and noise.
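The per-pixel angle computation can be illustrated with a small sketch that works on the three entries of the Harris matrix H = [[a, b], [b, c]] at one pixel, rather than on harrisImage bands. The function names are hypothetical, and the corner-strength formula det(H)/trace(H) is an assumption, since the skeleton's actual formula is not given here; only the 0.015 threshold comes from the text above. The angle uses the closed form for a symmetric 2x2 matrix: the major eigenvector makes an angle θ with the x-axis where tan(2θ) = 2b / (a − c).

```python
import math
from typing import Tuple

# Threshold quoted in the write-up; assumed to apply to the strength computed below.
CORNER_THRESHOLD = 0.015


def harris_strength_and_angle(a: float, b: float, c: float) -> Tuple[float, float]:
    """Return (strength, angle) for the Harris matrix H = [[a, b], [b, c]].

    strength: det(H) / trace(H)  (ASSUMED corner-strength function)
    angle: direction in radians of x_max, the eigenvector of the larger eigenvalue
    """
    det = a * c - b * b
    trace = a + c
    strength = det / trace if trace > 0 else 0.0
    # v^T H v = (a+c)/2 + (a-c)/2 * cos(2t) + b * sin(2t) is maximized when
    # (cos 2t, sin 2t) points along (a - c, 2b), so atan2 picks the larger eigenvalue.
    angle = 0.5 * math.atan2(2.0 * b, a - c)
    return strength, angle


def is_corner(a: float, b: float, c: float, threshold: float = CORNER_THRESHOLD) -> bool:
    """Keep a pixel as a feature candidate if its corner strength exceeds the threshold."""
    strength, _ = harris_strength_and_angle(a, b, c)
    return strength > threshold
```

Because the angle needs only the same three entries that the strength already uses, computing it for every pixel adds just one `atan2` call per pixel, which is consistent with the negligible slowdown described above.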

| Image Set | Simple Descriptor (SSD) | Simple Descriptor (Ratio Test) | Custom Descriptor (SSD) | Custom Descriptor (Ratio Test) |
|---|---|---|---|---|
| graf | 0.524902 | 0.445869 | 0.560375 | 0.568705 |
| leuven | 0.410283 | 0.551652 | 0.538362 | 0.598124 |
| bikes | ? | ? | ? | ? |
| wall | 0.568017 | 0.442771 | 0.560919 | 0.592459 |
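The table compares two ways of scoring a match. As a hedged sketch, assuming the usual definitions (the SSD score is the squared distance to the nearest descriptor in the other image, and the ratio test divides that distance by the distance to the second-nearest descriptor), the two scores could be computed as follows. The names `ssd` and `match_features` are hypothetical and not taken from the skeleton code.

```python
from typing import List, Sequence, Tuple


def ssd(a: Sequence[float], b: Sequence[float]) -> float:
    """Sum of squared differences between two descriptors of equal length."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def match_features(
    desc1: Sequence[Sequence[float]], desc2: Sequence[Sequence[float]]
) -> List[Tuple[int, int, float, float]]:
    """For each descriptor in desc1, return (i, j, ssd_score, ratio_score).

    j is the index of the nearest descriptor in desc2 by SSD; ssd_score is that
    best distance and ratio_score is best / second-best. Lower is better for both.
    Requires len(desc2) >= 2.
    """
    matches = []
    for i, d in enumerate(desc1):
        dists = sorted((ssd(d, e), j) for j, e in enumerate(desc2))
        (best, j), (second, _) = dists[0], dists[1]
        # If the two closest candidates are both exact matches, the match is
        # maximally ambiguous, so the ratio is set to 1.
        ratio = best / second if second > 0 else 1.0
        matches.append((i, j, best, ratio))
    return matches
```

The ratio score penalizes matches whose best and second-best candidates are almost equally close, which is why it can rank matches differently from the raw SSD distance.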