CS5625 PA7 Shadows

Out: Friday March 25, 2016

Due: Thursday April 8, 2016 at 11:59pm

Work in groups of 2.

Overview

In this assignment, you will implement two techniques for simulating shadows in interactive graphics applications:

- vanilla shadow mapping, and

- shadow mapping with percentage closer filtering (PCF).

Task 1: Porting Your Own Code from PA6

For convenience, we will use the deferred shader which was used in PA6. First, modify

so that the übershader for PA7 has all the functionality of the one used in PA6.

Notice that when the übershader shades a fragment, it splits into two cases based on whether spotLight_enabled is true. If the variable is false, you should use the point lights to shade the pixels as in PA6. Otherwise, you should use the parameters of the spot light (stored in spotLight_color, spotLight_attenuation, and spotLight_eyePosition) to shade the fragment. In the latter case, the shadowing factor is computed and stored in the factor variable. Multiply spotLight_color by this factor before shading with the spot light, so that the light is properly shadowed in the next tasks.

Task 2: Shadow Map Generation

In this programming assignment, we will implement spot lights whose illumination area is a rectangle. The spot light is represented by the ShadowingSpotLight class. As you will see from the code, the spot light contains a perspective camera within it. The rectangular cone emanating from the camera's eye position represents the space that can be lit by the spot light. The first step to implement the spot light is to generate the shadow map, which is basically a rendering of the scene from the point of view of this camera.

Edit

so that the shader writes the appropriate information into the shadow map. The shader contains the following uniforms and varying variables:

- geom_position: the eye-space position (with respect to the camera of the light) of the fragment.

- sys_projectionMatrix: the projection matrix of the light's camera.

- shadowMapWidth and shadowMapHeight: the width and height of the shadow map, respectively.

The information that needs to be written into the shadow map is determined by the needs of the shadow mapping shader that will read from this map and compute a shadow factor to be used in shading. That code needs to know the distance from the light to the nearest surface, and it also needs access to derivative information about this distance, to be used in computing slope-dependent shadow bias.

Let us call the above eye-space position $\mathbf{p}''$. The clip-space position (the normalized device coordinate before the perspective divide) is computed as $P\mathbf{p}''$, where $P$ denotes sys_projectionMatrix. Let $\mathbf{p}'$ denote $P\mathbf{p}''$. Recall that the depth of the fragment (which is used for depth testing and shadow mapping) is given by $\mathbf{p}'.z / \mathbf{p}'.w$. Because $P$ represents a perspective projection, $\mathbf{p}'.w = -\mathbf{p}''.z$.

Because we will make use of both the depth after the perspective divide (Task 3) and the $z$-coordinate before the perspective divide (in the next PA), you need to write both the $z$-component and the $w$-component of $\mathbf{p}'$ to the shadow map. More specifically, if we let $\mathbf{q}$ denote the output fragment color of the shadow map (i.e., gl_FragColor), then set: \begin{align*} \mathbf{q}.z &:= \mathbf{p}'.z \\ \mathbf{q}.w &:= \mathbf{p}'.w \end{align*}
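The relationship between $\mathbf{p}''$, $\mathbf{p}'$, and the two values written to the shadow map can be checked numerically. The sketch below is in Python rather than GLSL so that it runs on its own. It assumes sys_projectionMatrix follows the standard OpenGL (gluPerspective-style) convention, and the helper names `perspective`, `mat_vec`, and `shadow_map_zw` are hypothetical.

```python
import math


def perspective(fovy_deg, aspect, near, far):
    """OpenGL-style perspective matrix as a list of rows.

    Assumption: the light's sys_projectionMatrix uses this convention.
    """
    f = 1.0 / math.tan(math.radians(fovy_deg) / 2.0)
    return [
        [f / aspect, 0.0, 0.0, 0.0],
        [0.0, f, 0.0, 0.0],
        [0.0, 0.0, (far + near) / (near - far), 2.0 * far * near / (near - far)],
        [0.0, 0.0, -1.0, 0.0],
    ]


def mat_vec(m, v):
    """Multiply a 4x4 matrix (list of rows) by a 4-vector."""
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]


def shadow_map_zw(P, p_eye):
    """Return (q.z, q.w) = (p'.z, p'.w) for the light-eye-space point p''."""
    p_clip = mat_vec(P, [p_eye[0], p_eye[1], p_eye[2], 1.0])  # p' = P p''
    return p_clip[2], p_clip[3]


P = perspective(60.0, 1.0, 1.0, 100.0)
qz, qw = shadow_map_zw(P, (0.3, -0.2, -10.0))
print(qw)        # 10.0, i.e. -p''.z
print(qz / qw)   # the NDC depth, which lies in [-1, 1] inside the frustum
```

Because the last row of this $P$ is $(0, 0, -1, 0)$, $\mathbf{p}'.w$ equals $-\mathbf{p}''.z$ exactly, and the depth $\mathbf{q}.z/\mathbf{q}.w$ runs from $-1$ at the near plane to $1$ at the far plane.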

To implement slope-dependent bias, we need the screen-space derivatives of the depth $\mathbf{p}'.z/\mathbf{p}'.w$. Using the dFdx and dFdy functions, you can easily compute $\partial \mathbf{p}''/\partial x$ and $\partial \mathbf{p}''/\partial y$. From these values and some basic calculus, you can compute $\partial (\mathbf{p}'.z / \mathbf{p}'.w) / \partial x$ and $\partial (\mathbf{p}'.z / \mathbf{p}'.w) / \partial y$. However, these partial derivatives depend on the size of the shadow map. (That is, if the shadow map is 2 times bigger in each dimension, the partial derivatives will be 2 times smaller in magnitude.) To make the derivatives scale-independent, multiply them by the corresponding side lengths of the shadow map. Concretely, write these quantities to the $x$- and $y$-components of gl_FragColor: \begin{align*} \mathbf{q}.x &:= \frac{\partial (\mathbf{p}'.z / \mathbf{p}'.w)}{\partial x} \times \mathtt{shadowMapWidth} \\ \mathbf{q}.y &:= \frac{\partial (\mathbf{p}'.z / \mathbf{p}'.w)}{\partial y} \times \mathtt{shadowMapHeight} \end{align*}
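The calculus step is the quotient rule. Since $\mathbf{p}' = P\mathbf{p}''$ is linear, $\partial\mathbf{p}'/\partial x = P\,(\partial\mathbf{p}''/\partial x)$, where the derivative vector has homogeneous coordinate $0$ because $\mathbf{p}''.w = 1$ everywhere. Then
$$\frac{\partial(\mathbf{p}'.z/\mathbf{p}'.w)}{\partial x} = \frac{(\partial \mathbf{p}'.z/\partial x)\,\mathbf{p}'.w - \mathbf{p}'.z\,(\partial \mathbf{p}'.w/\partial x)}{(\mathbf{p}'.w)^2},$$
and likewise for $y$. Multiplying by shadowMapWidth turns a per-texel rate into a rate per unit of normalized texture coordinate (one texel spans $1/\mathtt{shadowMapWidth}$ of the map), which is why the result no longer depends on resolution. The Python sketch below checks this against a finite difference; in the shader the derivative vectors would come from dFdx(geom_position) and dFdy(geom_position). The matrix convention and all helper names are assumptions.

```python
import math


def perspective(fovy_deg, aspect, near, far):
    """OpenGL-style perspective matrix (assumed convention), list of rows."""
    f = 1.0 / math.tan(math.radians(fovy_deg) / 2.0)
    return [
        [f / aspect, 0.0, 0.0, 0.0],
        [0.0, f, 0.0, 0.0],
        [0.0, 0.0, (far + near) / (near - far), 2.0 * far * near / (near - far)],
        [0.0, 0.0, -1.0, 0.0],
    ]


def mat_vec(m, v):
    """Multiply a 4x4 matrix (list of rows) by a 4-vector."""
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]


def depth_derivative(P, p_eye, dp_eye):
    """d(p'.z / p'.w) along the screen direction whose eye-space derivative is dp_eye."""
    p = mat_vec(P, [*p_eye, 1.0])     # p'  = P p''
    dp = mat_vec(P, [*dp_eye, 0.0])   # dp' = P dp'' (a direction, so w = 0)
    z, w = p[2], p[3]
    return (dp[2] * w - z * dp[3]) / (w * w)   # quotient rule


def slope_channels(P, p_eye, dpdx, dpdy, width, height):
    """Values for q.x and q.y: screen-space depth slopes scaled by the map size."""
    return (depth_derivative(P, p_eye, dpdx) * width,
            depth_derivative(P, p_eye, dpdy) * height)


P = perspective(60.0, 1.0, 1.0, 100.0)
p = (0.5, 0.2, -8.0)
dpdx = (0.01, 0.0, -0.05)   # stand-in for dFdx(geom_position)
dpdy = (0.0, 0.01, 0.02)    # stand-in for dFdy(geom_position)
print(slope_channels(P, p, dpdx, dpdy, 1024, 1024))
```

Doubling the map size halves the per-texel eye-space derivatives, and the scaled channels come out the same, which is the scale independence the text asks for.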

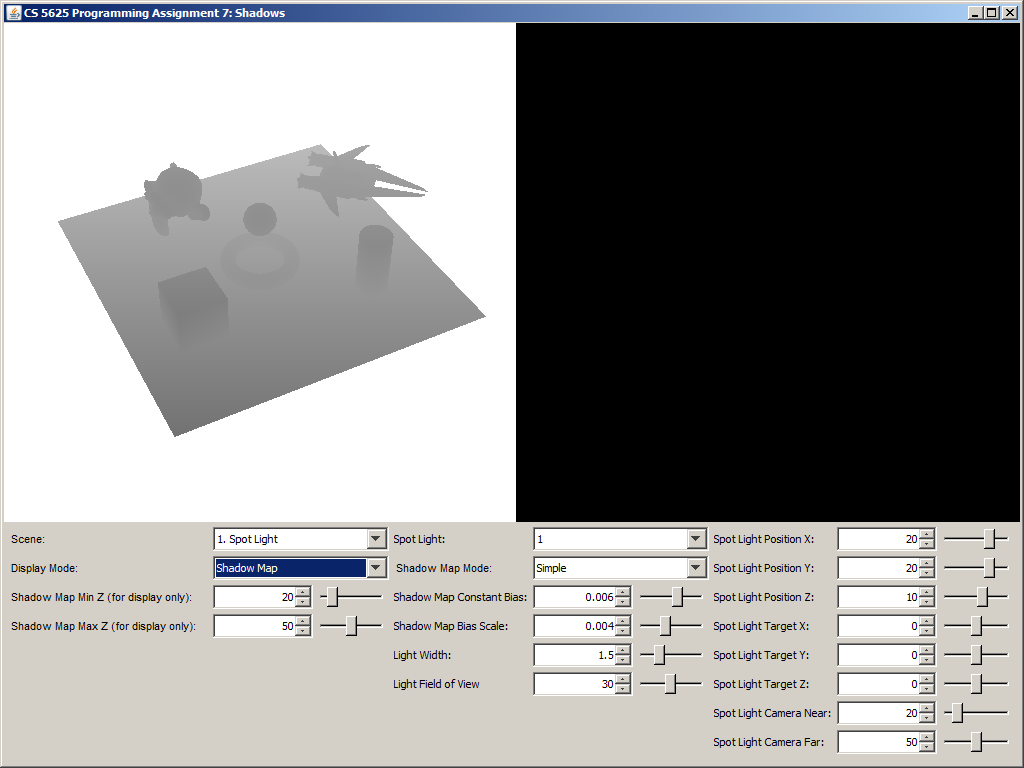

You can visualize the shadow map by selecting the "Shadow Map" display mode. The shader for this mode displays the $w$-component of the shadow map pixels in such a way that the value specified by the "Shadow Map Min Z" is displayed as black and the value specified by the "Shadow Map Max Z" is displayed as white.

A visualization of the shadow map of the "Spot Light" scene.
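The display mode's mapping from $\mathbf{q}.w$ to a gray level can be sketched as below. This assumes a linear ramp clamped to $[0, 1]$; the provided display shader may differ, and `shadow_map_gray` is a hypothetical name.

```python
def shadow_map_gray(w, min_z, max_z):
    """Gray level for a texel with q.w = w: min_z -> 0 (black), max_z -> 1 (white).

    Assumption: a linear ramp clamped to [0, 1].
    """
    t = (w - min_z) / (max_z - min_z)
    return min(max(t, 0.0), 1.0)


print(shadow_map_gray(6.0, 1.0, 11.0))  # 0.5
```

Since $\mathbf{q}.w = -\mathbf{p}''.z$ is the depth along the light's viewing axis, choosing Min Z and Max Z to bracket the scene's depth range gives the most contrast.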

Task 3: Shadow Mapping

Edit

- the appropriate branch of the getShadowFactor function in student/src/shaders/deferred/ubershader.frag

Your implementation should make use of the following uniforms in the shader:

- spotLight_shadowMap: the shadow map.

- spotLight_shadowMapWidth: the width, in pixels, of the shadow map.

- spotLight_shadowMapHeight: the height, in pixels, of the shadow map.

- spotLight_viewMatrix: the view matrix of the light's camera.

- spotLight_projectionMatrix: the projection matrix of the light's camera.

- spotLight_shadowMapConstantBias: the constant shadow map bias.

- spotLight_shadowMapBiasScale: the multiplicative factor for the slope-dependent shadow map bias.

The getShadowFactor function should return a floating-point number between $0$ and $1$ that represents the fraction of light energy that reaches the point being shaded. The value $1$ means the shaded point receives all the light energy, and the value $0$ means the shaded point is completely in shadow. The function takes one argument, position, which is the position of the point being shaded, retrieved from the G-Buffer. As such, this position is in the eye space of the camera used to render the scene, which is not the same camera as the one used to render the shadow map.

Your shader should transform position into a texture coordinate of the shadow map. This involves first undoing the effect of the main camera's view transformation, which you can do with sys_inverseViewMatrix. You should then transform the result with spotLight_viewMatrix and spotLight_projectionMatrix. Let us call the resulting 4D point $\mathbf{p}$. To get the texture coordinate, perform the perspective divide and then map the $x$ and $y$ components from the $[-1, 1]$ range of normalized device coordinates into the texture-coordinate range of the shadow map (a scale followed by an offset). Use the result to read from the shadow map, and let us call the value read $\mathbf{q}$. Recall from the last task that: \begin{align*} \mathbf{q}.x &= \frac{\partial (\mathbf{q}.z / \mathbf{q}.w)}{\partial x} \times \mathtt{shadowMapWidth} \\ \mathbf{q}.y &= \frac{\partial (\mathbf{q}.z / \mathbf{q}.w)}{\partial y} \times \mathtt{shadowMapHeight} \\ \mathbf{q}.w &= -\mathbf{p}''.z, \end{align*} where $\mathbf{p}''$ is the point closest to the light (but farther than the shadow map's near plane) on the ray from the light source position to $\mathbf{p}$. Moreover, the $z$-component of the normalized device coordinate (i.e., the depth) is given by $\mathbf{q}.z / \mathbf{q}.w$.
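The transformation chain can be sketched in Python as follows. It assumes the shadow map is sampled with normalized texture coordinates in $[0,1]^2$, so the mapping from NDC is $u = 0.5\,x_{\mathrm{ndc}} + 0.5$ (likewise for $v$). The helpers `perspective`, `mat_vec`, `translate`, and `shadow_map_lookup_coords` are hypothetical stand-ins for the corresponding GLSL matrix operations.

```python
import math


def perspective(fovy_deg, aspect, near, far):
    """OpenGL-style perspective matrix (assumed convention), list of rows."""
    f = 1.0 / math.tan(math.radians(fovy_deg) / 2.0)
    return [
        [f / aspect, 0.0, 0.0, 0.0],
        [0.0, f, 0.0, 0.0],
        [0.0, 0.0, (far + near) / (near - far), 2.0 * far * near / (near - far)],
        [0.0, 0.0, -1.0, 0.0],
    ]


def translate(tx, ty, tz):
    """4x4 translation matrix, list of rows."""
    return [[1.0, 0.0, 0.0, tx], [0.0, 1.0, 0.0, ty],
            [0.0, 0.0, 1.0, tz], [0.0, 0.0, 0.0, 1.0]]


IDENTITY = translate(0.0, 0.0, 0.0)


def mat_vec(m, v):
    """Multiply a 4x4 matrix (list of rows) by a 4-vector."""
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]


def shadow_map_lookup_coords(position, inv_view, light_view, light_proj):
    """Map an eye-space G-buffer position to (uv, p).

    uv: shadow-map texture coordinates in [0, 1]^2 (normalized sampler assumed)
    p:  light clip-space 4-vector; p.z / p.w is the receiver depth
    """
    world = mat_vec(inv_view, [*position, 1.0])          # undo main camera view
    p = mat_vec(light_proj, mat_vec(light_view, world))  # into light clip space
    ndc_x, ndc_y = p[0] / p[3], p[1] / p[3]              # perspective divide
    uv = (0.5 * ndc_x + 0.5, 0.5 * ndc_y + 0.5)          # [-1, 1] -> [0, 1]
    return uv, p
```

If the shadow map is instead addressed in pixel units (for example, through a rectangle texture), `uv` would additionally be multiplied by (spotLight_shadowMapWidth, spotLight_shadowMapHeight).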

In this task, getShadowFactor should output only $0$ or $1$ (i.e., the point is either entirely in shadow or not in shadow at all). The point is in shadow if and only if: $$ \frac{\mathbf{p}.z}{\mathbf{p}.w} > \frac{\mathbf{q}.z}{\mathbf{q}.w} + \mathtt{spotLight\_shadowMapBiasScale} \times \max ( |\mathbf{q}.x|, |\mathbf{q}.y| ) + \mathtt{spotLight\_shadowMapConstantBias}.$$
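The test above translates almost line for line into code. In this Python sketch, `p` is the light clip-space position of the shaded point and `q` is the texel read from the shadow map, both as 4-tuples; the function name and argument order are hypothetical.

```python
def hard_shadow_factor(p, q, constant_bias, bias_scale):
    """Return 0.0 if the point is in shadow and 1.0 otherwise (Task 3 test)."""
    receiver_depth = p[2] / p[3]                       # p.z / p.w
    occluder_depth = q[2] / q[3]                       # q.z / q.w
    bias = bias_scale * max(abs(q[0]), abs(q[1])) + constant_bias
    return 0.0 if receiver_depth > occluder_depth + bias else 1.0


# The receiver (depth 0.6) lies behind the stored surface (depth 0.5).
print(hard_shadow_factor((0, 0, 6, 10), (0, 0, 5, 10), 0.0, 0.0))   # 0.0
print(hard_shadow_factor((0, 0, 6, 10), (0, 0, 5, 10), 0.2, 0.0))   # 1.0
```

A surface steeply tilted relative to the light produces large $|\mathbf{q}.x|$ or $|\mathbf{q}.y|$, so the slope term grows where self-shadowing acne is worst, while surfaces facing the light receive little extra bias.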

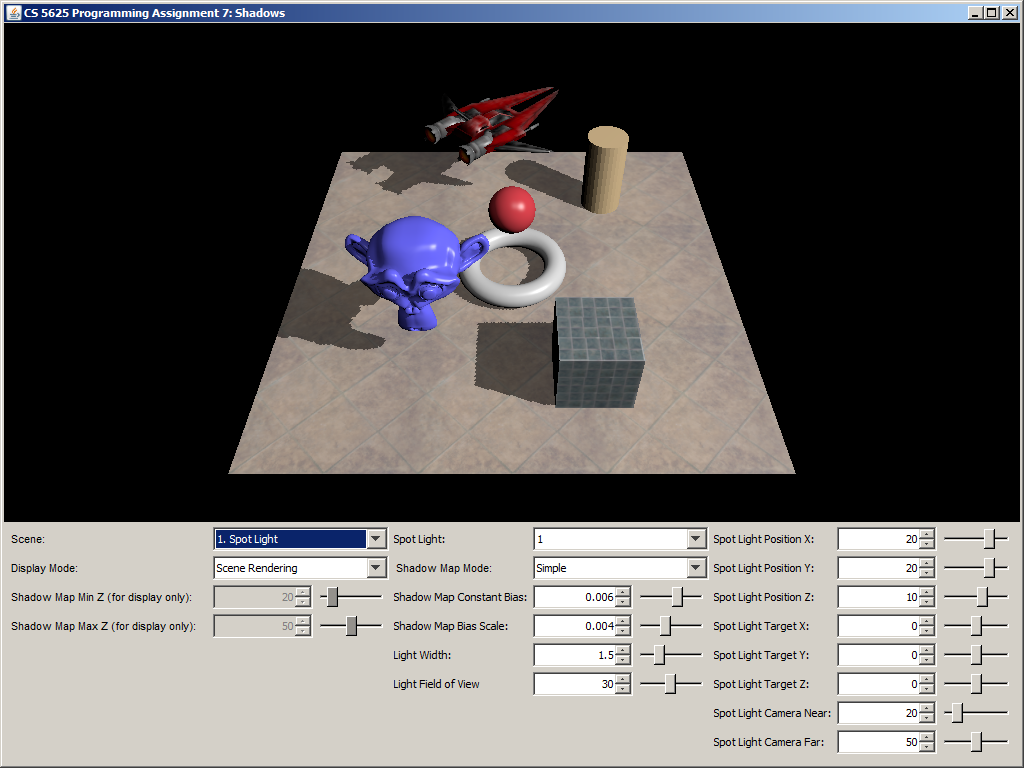

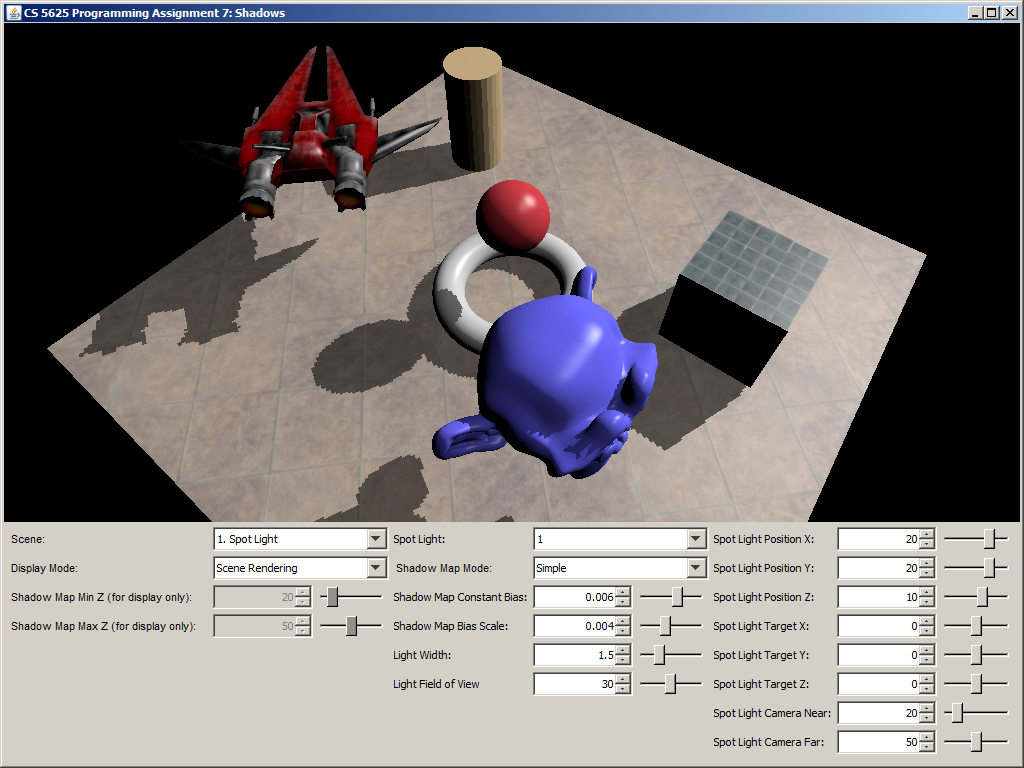

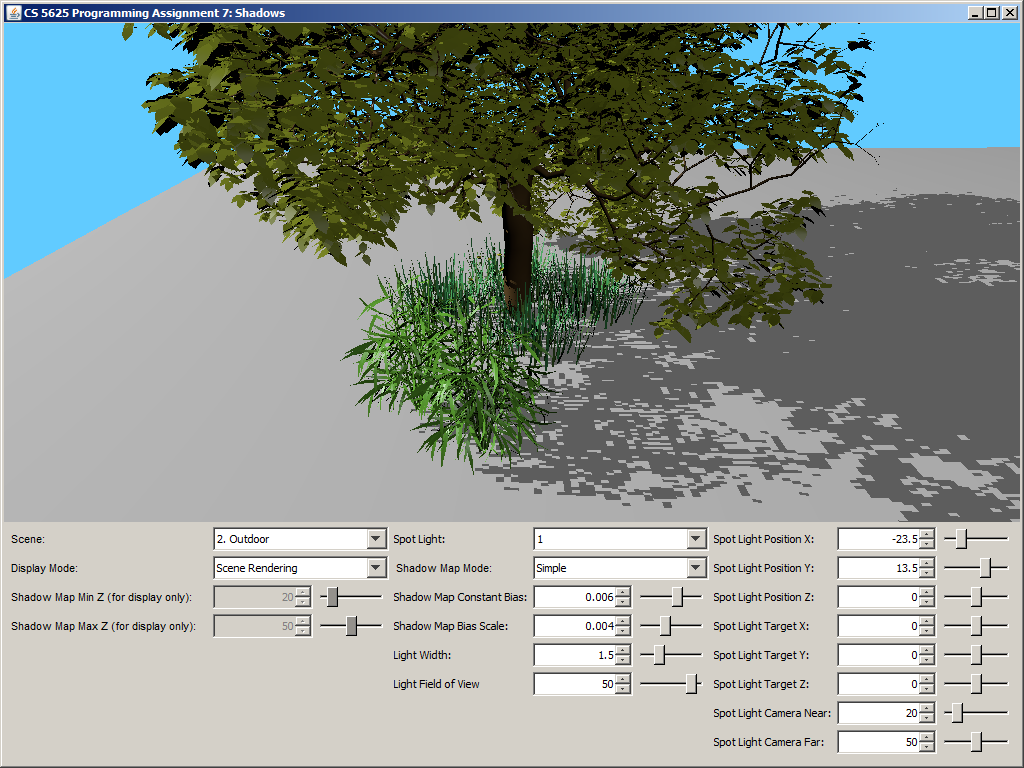

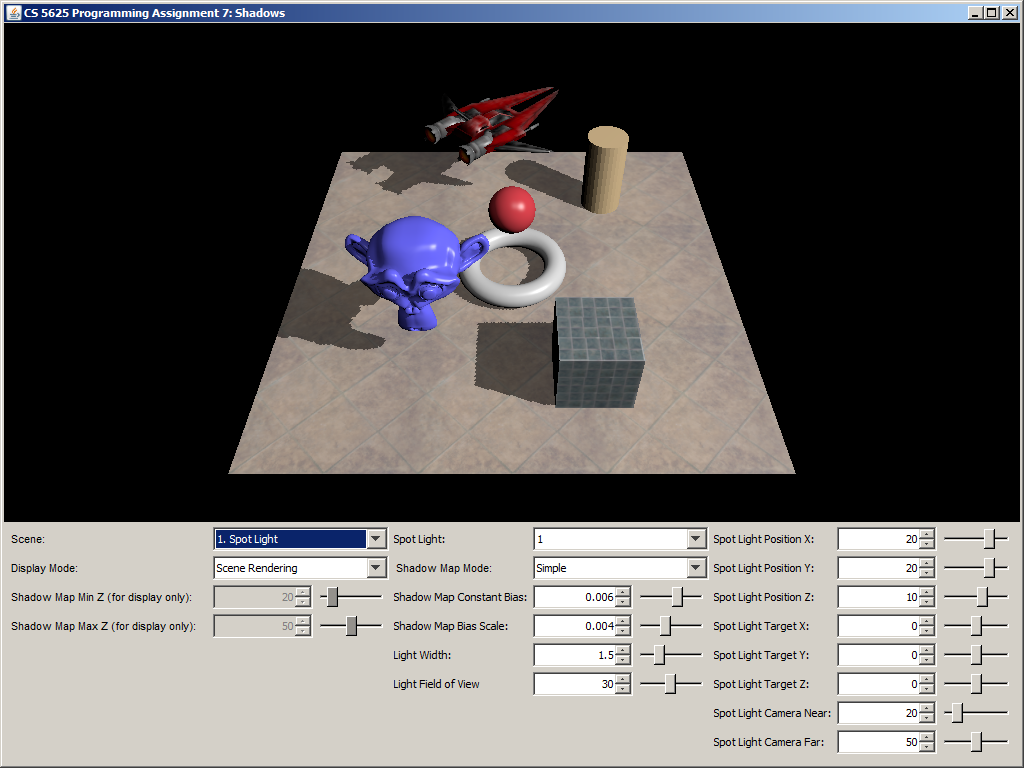

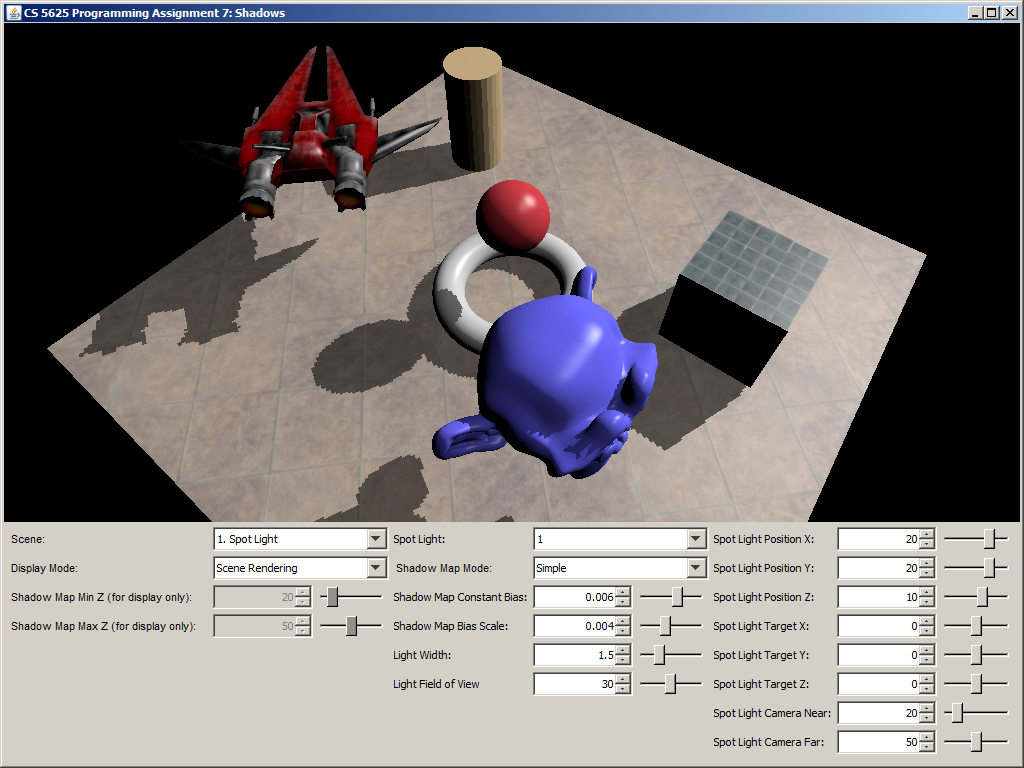

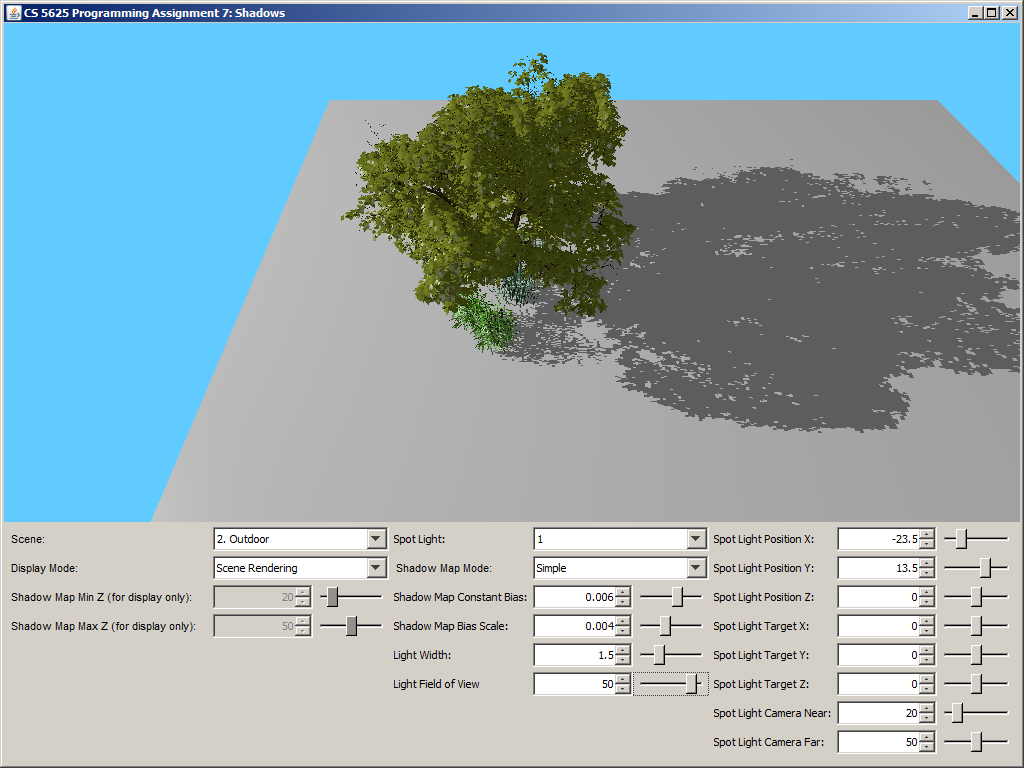

A correct implementation of the shader should yield the following pictures.

|

|

|

|

You should play with the constant bias and the slope-dependent bias factor to see their effect. In general, as you increase either bias, less of the scene should be in shadow. Also, if you set both parameters to zero, you should see lots of shadow acne. You should find that removing this artifact using only a constant bias requires a large bias value.

Task 4: Percentage Closer Filtering

Edit

- the appropriate branch of the getShadowFactor function in student/src/shaders/deferred/ubershader.frag

Your implementation should make use of the following uniforms in the shader:

- spotLight_pcfKernelSampleCount: the number of samples to use for this task.

- spotLight_pcfWindowWidth: the side length of the square window in the shadow map to sample from.

According to the original PCF paper (Reeves et al.), one should take a pixel footprint in screen space, project this footprint into the shadow map, and sample shadow map points in this area. However, the GPU Gems article advises doing away with this projection. Instead, we simply form a square window of side length spotLight_pcfWindowWidth in the shadow map, centered at the shadow map location of position (the parameter to the getShadowFactor function). From this window, sample spotLight_pcfKernelSampleCount points, apply the shadow test from the last task to each sample, and output as the shadow factor the fraction of samples that are not in shadow (i.e., for which position's depth does not exceed the sample's depth after incorporating bias).
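A minimal Python sketch of this averaging follows. Several details are assumptions rather than part of the assignment: the window width is taken to be in texels and converted to texture-coordinate units by dividing by the map size, the shadow map is modeled as a hypothetical callable `lookup(u, v)`, and a regular 4x4 grid stands in for the blue-noise offsets. Each sample uses the Task 3 test, and the function returns the fraction of samples that pass it, so $1$ still means fully lit.

```python
def pcf_shadow_factor(p, uv, lookup, offsets, window_width, map_width, map_height,
                      constant_bias, bias_scale):
    """Fraction of window samples for which the shaded point is lit.

    offsets:      sample positions in [-0.5, 0.5]^2 (e.g., from a blue-noise pattern)
    window_width: window side length in texels (assumed unit)
    lookup(u, v): returns the shadow-map texel (q.x, q.y, q.z, q.w) at (u, v)
    """
    receiver_depth = p[2] / p[3]
    lit = 0
    for ox, oy in offsets:
        q = lookup(uv[0] + ox * window_width / map_width,
                   uv[1] + oy * window_width / map_height)
        bias = bias_scale * max(abs(q[0]), abs(q[1])) + constant_bias
        if receiver_depth <= q[2] / q[3] + bias:   # passes the Task 3 test
            lit += 1
    return lit / len(offsets)


def half_occluded(u, v):
    """Hypothetical shadow map: an occluder at depth 0.3 covers u < 0.5."""
    return (0.0, 0.0, 3.0, 10.0) if u < 0.5 else (0.0, 0.0, 10.0, 10.0)


grid = [((i + 0.5) / 4 - 0.5, (j + 0.5) / 4 - 0.5) for i in range(4) for j in range(4)]
print(pcf_shadow_factor((0, 0, 6, 10), (0.5, 0.5), half_occluded, grid,
                        8, 256, 256, 0.0, 0.0))   # 0.5: on the shadow edge
```

A receiver centered on the occluder's edge gets a factor of $0.5$, while the hard test from Task 3 would jump straight from $0$ to $1$ there; this is what softens the shadow boundary.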

We advise that you generate the sample points using the blue noise sampling pattern from PA3. The noise texture is available in the blueRandTex uniform. (spotLight_pcfKernelSampleCount is set to 40, so that you sample the whole row of the texture.) To remove banding artifacts, you should also apply a random rotation to the point set.
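Rotating the sample set by a different angle at each pixel trades structured banding for high-frequency noise. In the sketch below, the rotation itself is a plain 2D rotation about the window center. How the per-pixel angle is obtained is an assumption: the hypothetical `pixel_angle` seeds Python's RNG from the pixel coordinates, whereas in the shader it would come from some per-pixel random value, for example one read from blueRandTex. Note that a rotated square pattern no longer fits inside the axis-aligned window; its samples lie within a disk of radius $\sqrt{2}/2$ window widths.

```python
import math
import random


def rotate_offsets(offsets, angle):
    """Rotate 2D sample offsets by `angle` radians about the window center."""
    c, s = math.cos(angle), math.sin(angle)
    return [(c * x - s * y, s * x + c * y) for x, y in offsets]


def pixel_angle(px, py):
    """Hypothetical per-pixel random angle in radians, reproducible per pixel."""
    rng = random.Random(px * 73856093 ^ py * 19349663)
    return rng.random() * 2.0 * math.pi


offsets = [(0.25, 0.0), (-0.1, 0.3), (0.4, -0.45)]
rotated = rotate_offsets(offsets, pixel_angle(17, 42))
```

Because the angle depends only on the pixel, neighboring pixels use differently rotated patterns while each pixel stays stable from frame to frame.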

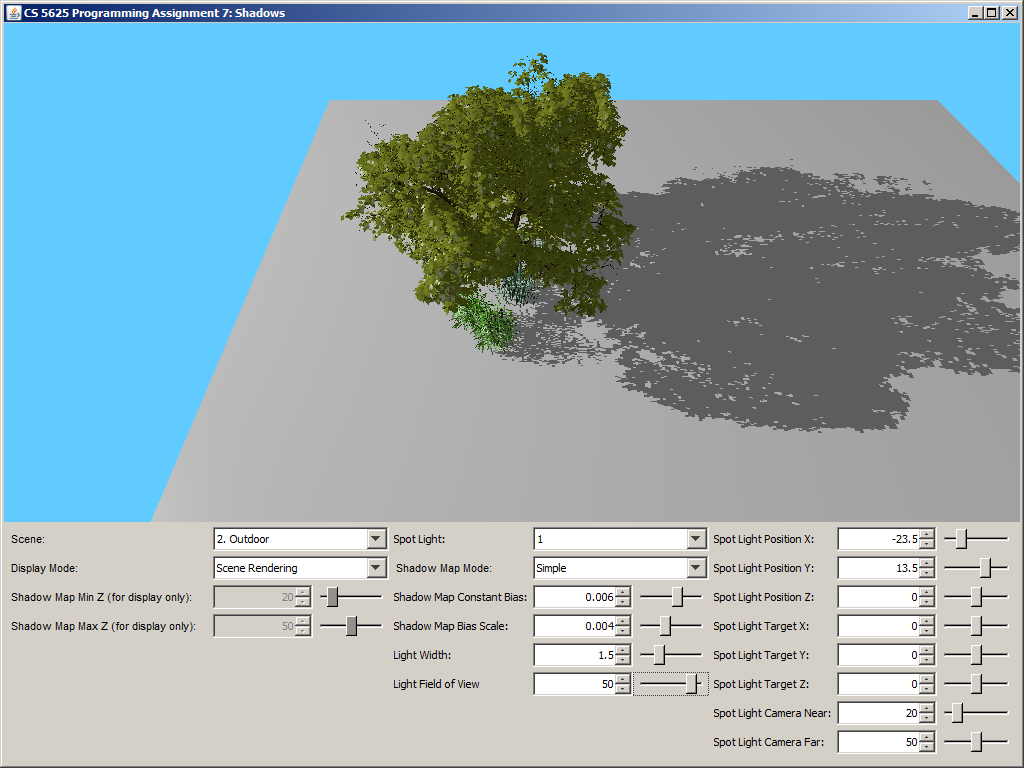

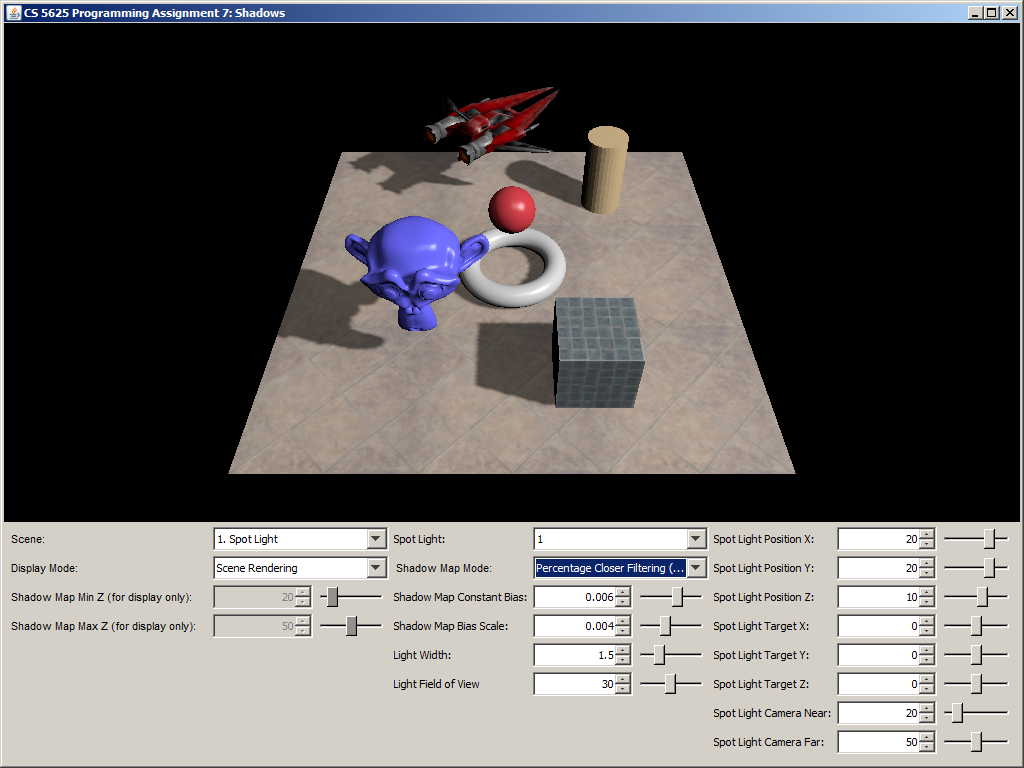

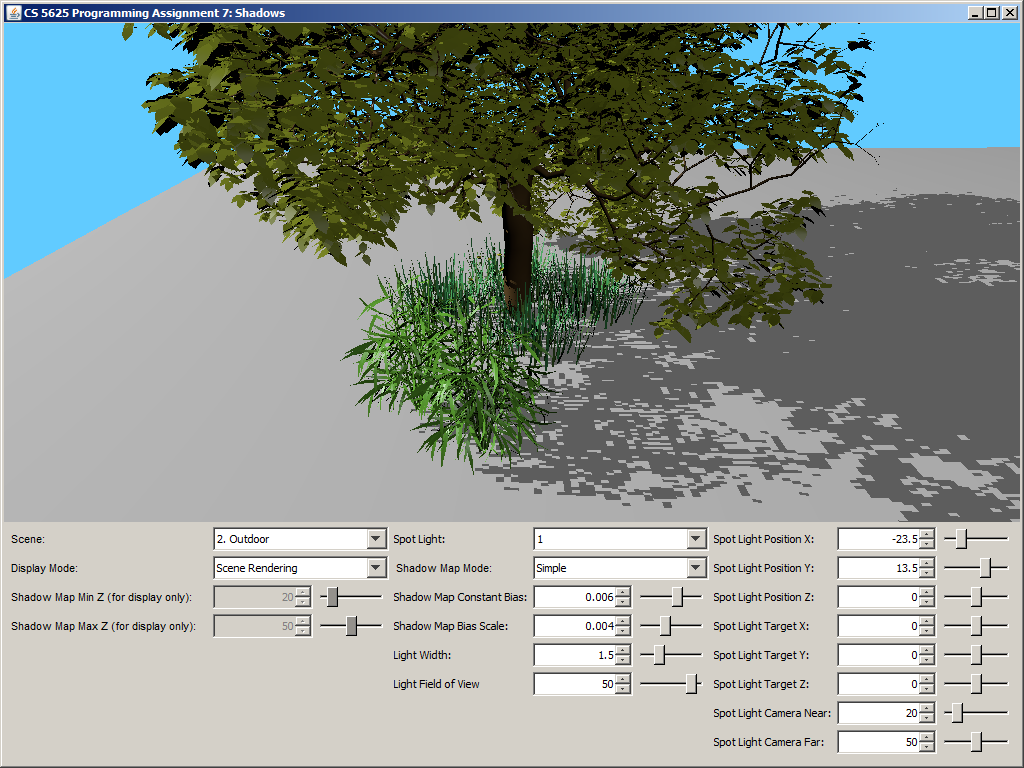

A correct implementation should yield the following images:

| No PCF | With PCF |

|

|

|

|

|

|

|

|