Finding the best weak learner

Gradient: $\frac{\partial l}{\partial H(x_i)}=-y_i \underbrace{e^{-y_i H(x_i)}}_\mathrm{defined \enspace from \enspace w_i} < 0 \enspace \forall x_i$

$\underbrace{r_i}_\mathrm{row\enspace weight}=e^{-H(x_i)y_i} \qquad \underbrace{w_i}_\mathrm{normalized\enspace weight}= \frac{e^{-H(x_i)y_i}}{\underbrace{z}_\mathrm{normalization\enspace for\enspace convenience}},\qquad \forall x_i$

$z=\sum_{i=1}^{n} e^{-H(x_i)y_i}$ so that $\sum_{i=1}^{n} w_i=1$

$argmin_{h}-\sum_{i=1}^{n}y_i e^{-H(x_i)y_i} h(x_i) = argmax_{h}\underbrace{\sum_{i=1}^{n} w_i \underbrace{y_i h(x_i)}_\mathrm{+1\enspace if\enspace h(x_i)=y_i,\enspace -1\enspace 0/w}}_\mathrm{this \enspace is \enspace the\enspace training\enspace accuracy\enspace (up\enspace to\enspace scaling) \enspace weighted\enspace by\enspace distribution\enspace w_1,w_2...w_n}$

So for AdaBoost, we only need a classifier that can take training data and a distribution over the training set and which returns a classifier $h\in H$ with less than 0.5 weighted training error.

Weighted training error: $\epsilon=\sum_{i:h(x_i)y_i=-1} w_i$

Condition: for $w_1,...,w_n$ s.t.: $ w_i\geq 0 $ and $ \sum_{i=1}^{n} w_i=1 \enspace h(D, w_1,...w_n) $ is such that $\epsilon <0.5$

Finding the stepsize $\alpha$

(by line search to minimize l)Remember: $\epsilon=\sum_{i:y_i h(x_i)=1} w_i$

Choose: $\alpha=argmin_{\alpha}l(H+\alpha h)$

= $argmin_{\alpha} \sum_{i=1}^{n} e^{y_i[H(x_i)+\alpha h(x_i)]}$

$\downarrow$ Differentiating w.r.l.$\alpha$ and equating with zero.

= $\sum_{i=1}^{n} e^{y_i H(x_i)+\alpha y_i h(x_i)} * \underbrace{y_i h(x_i)}_\mathrm{\in \{+1,-1\}}=0$

$-\sum_{i:h(x_i) y_i=1} e^{-(y_i H(x_i)+\alpha \underbrace{y_i h(x_i)}_\mathrm{1})} + \sum_{i:h(x_i) y_i \neq 1} e^{-(y_i H(x_i)+\alpha \underbrace{y_i h(x_i)}_\mathrm{-1})}=0 \mid : \sum_{\underbrace{i=1}_\mathrm{normalizer}}^{n} e^{-y_i H(x_i)}$

$-\sum_{i:h(x_i) y_i=1} w_i e^{-\alpha} + \sum_{i:h(x_i) y_i \neq 1} w_i e^{+\alpha}=0 $

$-(1-\epsilon)e^{-\alpha}+\epsilon e^{+\alpha}=0$

$e^{2 \alpha}=\frac{1-\epsilon}{\epsilon}$

$\alpha=\frac{1}{2}\ln \frac{1-\epsilon}{\epsilon}$

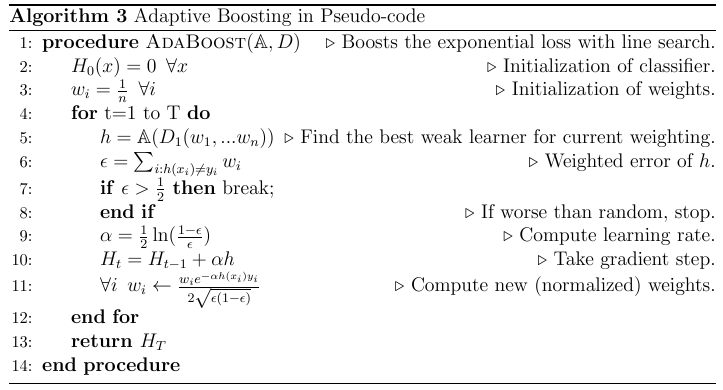

AdaBoost Pseudo-code