Project 5 $ Cache Design

CS 3410 Spring 2019

Project Due: April 26, 2019 at 11:59pm

Please answer the questions on Canvas and submit all code via CMS.

This is a partner project. You can ONLY work with your

assigned partner. Failure to adhere to this rule will result in an Academic

Integrity Violation.

Overview

In this assignment you will explore the effectiveness of

caches and the impact of different cache configurations. To do

this, you will write a C program that simulates the behavior

of a cache. The simulator will be configurable, meaning that

it can model the behavior of a variety of cache

configurations. When exploring cache designs, a performance

simulator like this is faster to work with than modeling the

caches at the circuit level (for example, in Logisim). Your

cache simulator will take as input a memory trace: a

list of load and store instructions that were executed during

a single run of a single program. You will use this single

memory trace to evaluate the impact of cache size,

associativity, block size, etc. on cache performance.

Deliverables

In addition to turning in your code on CMS, you will be asked to run 5 Experiments with your cache simulator.

You will be asked to answer questions about these experiments on Canvas. For tasks 5-8, you will be told:

- The experiment(s) you need to run for that task.

- The questions for each experiment (to be submitted via Canvas quiz).

The Trace

The memory trace you will be using was generated by running

a program called art and

recording

all of the loads and stores issued by the processor during that run. It

is one of many benchmarks used by processor designers to

measure the quality of their designs. (Normally you would not

just look at a single benchmark, but for the purposes of this

assignment, one is sufficient.) The trace file looks like

this:

0 3019b6c8

0 300e20d8

0 3026e398

0 30192830

1 30192830

0 300049d0

...

A leading 0 indicates a load and a 1 indicates a store. The second

number is the address of the memory operation (in hex). Taking

a look at

is_load and

is_store

variable in

simulator.c will help you further understand

the format of the trace file.

Assume that addresses are 32 bits for this entire project.

For our purposes a kilobyte (KB) is equivalent to a KiB, which is equal to 1024 (210) bytes.

A megabyte (MB) is equivalent to an MiB, which is 1,048,576 (220) bytes.

For example, 32KB is equal to 32*210B = 25*210B = 215B

and 64MB is equal to 64*220B = 26*220B = 226B.

Tasks

We have broken up the assignment into the following tasks:

Task 1: Cache Tag/Index/Offset Calculations

A cache has three primary configuration parameters:

- A: Associativity (number of ways per set)

- B: Block size (size of a single block, a block is also referred to as a cache line)

- C: Capacity or cache size (total amount of data in the cache)

These three parameters determine the number of sets and

ways in the cache. These parameters also specify how a memory

reference address is split into its three components: tag, index, and offset.

Paper Exercise: calculate the following values for a two-way set associative 32KB cache with

64B blocks (that is: A=2, B=64B, C=32KB):

- Number of offset bits in the address

- Number of sets in the cache

- Total number of cache lines in the cache

- Number of index bits in the address

- Number of tag bits in the address

You do not need to turn this in, but for the sake of your

understanding this assignment and your performance on Prelim 2,

please take it seriously!

Task 2: Generalizing the Calculations

Write formulas in terms of A, B,

and C for calculating the following quantities. You may find

using log(A), log(B), and log(C) in the formulas easier than

A, B, and C directly. For this assignment, you may assume A, B, and C

are all powers of 2. Write the formulas for:

- Number of offset bits in the address

- Number of sets in the cache

- Total number of cache lines in the cache

- Number of index bits in the address

- Number of tag bits in the address

Hint: you may find it helpful to first work out the numbers for a direct-mapped cache (that is,

consider just B and C), and then extend it to set-associative caches (in which multiple cache lines

reside in a single set).

Task 3: Initializing the Cache

Now use your formulas from Task 2 to complete 5 lines of the make_cache function in

cache.c.

You need to set the variables for n_offset_bit, n_set,

n_total_cache_line,

n_index_bit, and n_tag_bit.

Notice there is a log2 function in the included <math.h> library.

Because we're not actually manipulating the actual data values (as a real processor would), but

just calculating hit/miss for each memory reference, there is no need to store data values in the cache

(in fact, the trace doesn't even give you the data values).

The cache can be represented by a 2-dimensional array of tags. (The second dimension only

becomes relevant when the associativity of the cache is higher than 1.) Finish the make_cache

function by creating the array of tags that will be used to determine whether a cache hit or

miss has occurred. You must complete and use the helper function make_2d_matrix.

It will malloc an array, with each element holding a pointer to another malloced array to create

an n_row by n_col matrix whose elements are of size size.

To avoid using a valid bit, we initialize all the tag

values to zero; we assume that all blocks are valid and remain

valid throughout the simulation. (We can make this

simplification because we happen to know that the trace we're

using doesn't have any addresses in the address range that

would create an all-zero tag for the caches we

simulate.)

Completing the Access Method

The most challenging part of the assignment will be to write the access method access_cache,

which performs

the cache lookup for each load or store; it returns true if the access is a hit, false if the access

results in a miss. Read the rest of the assignment and the code you have been given to correctly

implement the access method. Do not try to implement the entire method at once. Instead, build

on the method as each new concept is introduced below.

Task 5: Implementing a Direct-Mapped Cache to explore Hit Rate vs. Capacity

Complete the access method for a direct-mapped cache by following the steps described in the function's

comment

(you can ignore lru_way and dirty_bits for now).

Your simulator can now simulate a direct-mapped cache parameterized

by B and C. Let's test it out!

The first experiment is to gauge the impact of cache size

on cache miss rate.

For now, handle loads and stores in the same fashion. In the next task you will set

dirty_bits depending on whether or not it is a store.

Additionally, if the tag is in cache_tags then it is a hit, regardless of if it was a load or

a store

Use your cache simulator to produce cache miss rates for varying cache sizes. Generate the data

for caches capacity from 256 bytes (28) to 4MB (222). Configure the block size to 64

bytes.

Below are the first two commands you should run. The first sets the cache capacity to 28 = 256

bytes

and the block size to 26 = 64 bytes. The second increases the cache capacity to 29 =

512 bytes.

./p5 -t art-full.trace -cache 8 6 1

./p5 -t art-full.trace -cache 9 6 1

Generate a line plot of this data. On the y-axis, plot the "cache miss rate (percent of all memory

references)".

The smaller the miss rate, the better. Use log of the cache size for x-axis..

Optional: Running this command 15 times can get tedious (especially if you don't use the up

arrow).

You can automate this process using python or bash scripting. Feel free

to try it out. Here is an example of a bash for-loop that you can modify and then directly copy into the

terminal, or

save in some file myscript.sh and then run any time by running bash myscript.sh.

for i in `seq 1 10`;

do

echo count: $i

done

Experiment 1

- Associativity = 1

- Block size = 64B

- Capacity = 256B, 512B, 1KB, 2KB, ..., 4MB

Questions

- What’s the smallest capacity that makes the miss rate of direct-mapped cache less than 15%?

- What’s the smallest capacity that makes the miss rate of direct-mapped cache less than 5%?

- Ratio of Hit Rate: 32KB vs. 64KB

Task 6: Implementing Set Associativity to explore Hit Rate vs. Associativity

Before moving on: create a copy of your working cache up to this point. Run the following command

cp cache.c cache_direct_mapped.c to copy your cache.c into a new file

cache_direct_mapped.c.

This will be to ensure that you can receive partial credit if your new changes break your

direct-mapped-cache implementation. You will

have the opportunity to submit both versions, but because your final cache.c will be a

super-set of cache_direct_mapped.c,

you are allowed to submit your final cache.c code for both.

Now modify your simulator to support n-way set associative caches. Each set in the cache will now

need an array of "tag" entries (one for each way), and a single "LRU" (least recently used) bit

used for cache replacement. An LRU update method has been provided for you. See the code to

understand how it works and how you should call it. When replacing a block, replace the LRU

entry (and don't forget to update the LRU bit to point to the other entry).

Generate miss rate data for the same block size and caches sizes as in the previous question, but

simulate two-way set-associative caches. Plot this result with the original data collected for a

direct-mapped cache (two lines on the graph: one for direct-mapped caches and one for the 2-way

set-associative cache).

Experiment 2

- Associativity = 2

- Block size = 64B

- Capacity = 256B, 512B, ..., 4MB

Experiment 3 (Optional)

- Associativity = 4

- Block size = 64B

- Capacity = 256B, 512B, ..., 4MB

Questions

- What’s the smallest capacity that makes the miss rate of 2-way cache less than 15%?

- What’s the smallest capacity that makes the miss rate of 2-way cache less than 5%?

- How large must the direct-mapped cache before it equals or exceeds the performance of the 1 KB 2-way

assoc?

- If two address reside in the same cache set, what do they have in common?

- Set index bits

- Tag bits

- Block index bits

- Valid bit

-

Memory address

m is 4-bit binary. Suppose we have a cache of capacity 8 bytes, blocksize = 2

bytes.

For the following memory access pattern, will you prefer a direct-mapped cache or a 2-way cache design if

you want a higher hit rate?

- Direct-mapped

- 2-way

Because the set-associative cache generally performs better than the direct-mapped cache, all

subsequent

experiments use the two-way set associative cache.

Task 7: Tracking bytes transferred to explore Write-Back vs. Write-Through Caches

Traffic into the cache is easy to calculate: it is just the number of misses multiplied by the

block size because for each miss you must bring into the cache that entire block of memory.

Traffic out of the cache is write traffic, and it depends on whether the cache is write-back

or write-through. Your simulator can calculate the traffic for both write-back and write-through

caches in a simulation as follows:

- Write-through: For the write-through cache, the traffic is the average number of bytes

written by each store multiplied by the number of stores. The trace doesn't specify how many

bytes each store writes, but for your calculations assume that each store writes 4 bytes.

- Write-back: For the write-back cache system, the traffic is the number of dirty evictions

multiplied by the block size. Because at most one eviction happens per miss, this write-back

traffic can at most double the overall traffic (in the case in which all evicted blocks were

dirty). To calculate the number of dirty evictions, modify your simulator by adding a "dirty"

bit for each block in the cache. Set the dirty bit whenever a store operation writes to the

block. This dirty bit is not per word or per byte; it is one dirty bit for the entire cache line.

(You'll need a dirty bit for each of the ways in the set; be sure to set the correct dirty bit.)

Compute and store the total traffic for both cache configurations in the cache_stats.c

variables total_bytes_transferred_wb and total_bytes_transferred_wt.

For both cases, use a write-allocate policy. This implies that the number of misses for the write-through

and write-back caches are the same, only the traffic is different.

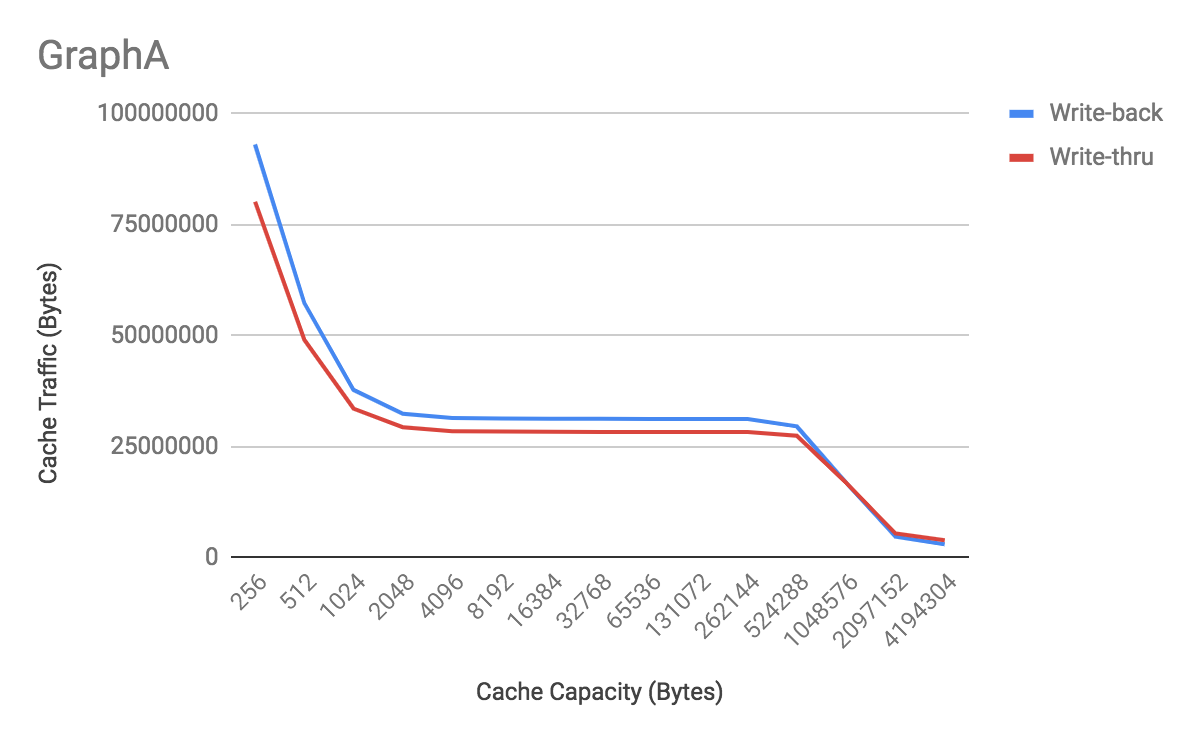

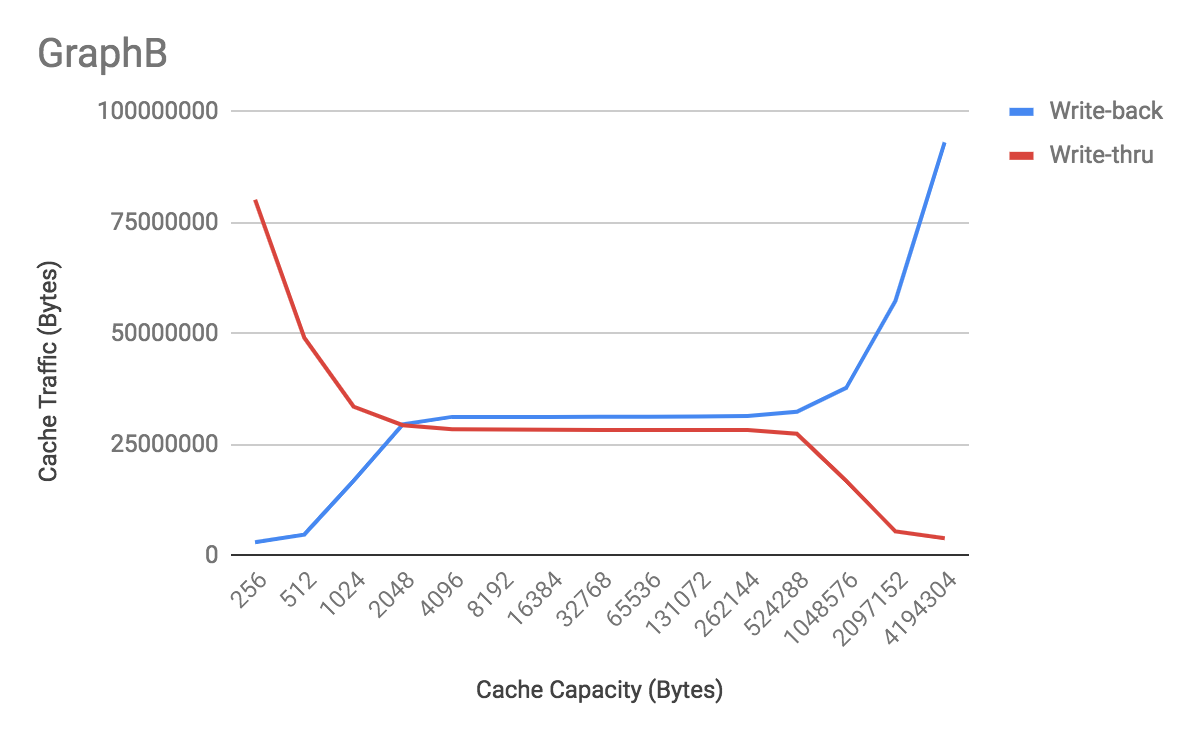

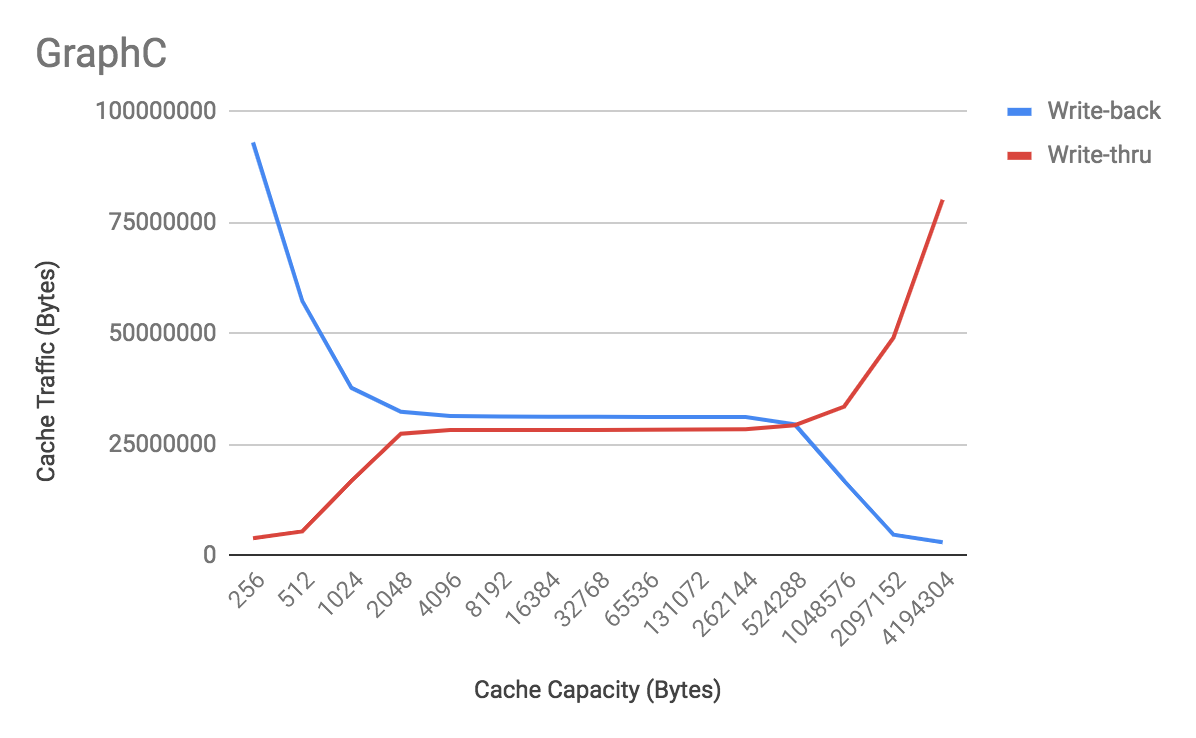

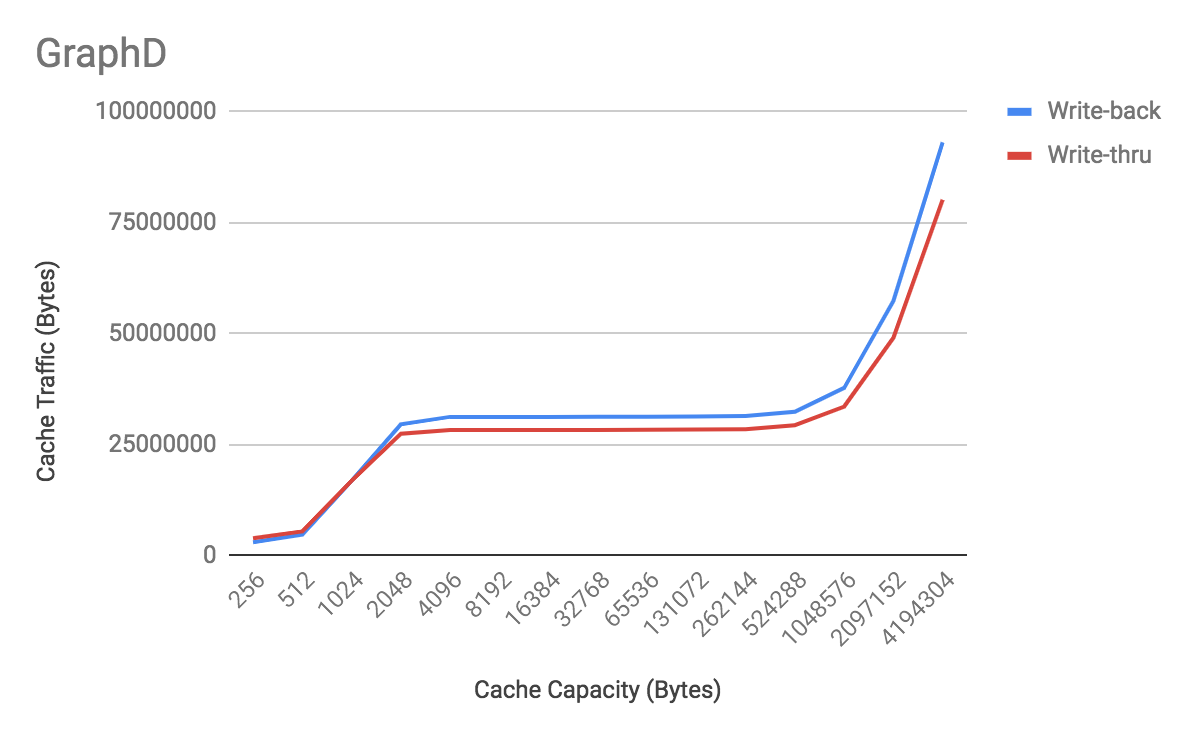

For a two-way set-associative cache with 64-byte blocks, generate a graph plotting the same

cache sizes as the previous question (on the x-axis) versus total data traffic per memory

operation (total number of bytes transferred divided by the total number of memory references).

Plot two lines: (1) the write-through cache (miss fill traffic + write-through traffic) and (2) the

write-back cache (miss fill traffic + write-back of dirty blocks).

Experiment 4

- Associativity = 2

- Block size = 64B

- Capacity = 256B, 512B, ..., 4MB

Questions

- Which of the following plot of cache traffic (traffic out + traffic in) is the closest of the one you

produced (both for write-back and write-through)?

- If we only plot the traffic out of the write-through cache, as capacity of the cache increases, how does

the traffic change?

- Increase

- Decrease

- Stay the same

- If we only plot the traffic out of the write-back cache, as capacity of the cache increases, how does

the traffic change?

- Increase

- Decrease

- Stay the same

Task 8: Hit Rate vs. Block size

Now let's explore the impact of different cache block sizes. Use the simulator to calculate the miss rate

for a 32KB, two-way set associative,

write-back cache with several different block sizes: 8B, 16B, 32B, 64B, 128B, 256B,

and 512B blocks. Create two graphs from this data:

- Miss Rate Graph. Plot the data on a graph with miss rate on the y-axis, log of the

cache block size on the x-axis, and the miss rate plotted as a line.

- Traffic Graph. Plot the data on a graph the total write-back traffic on the y-axis,

log of the cache block size on the x-axis, and the total write-back traffic

plotted as a line.

Experiment 5

- Associativity = 2

- Block size = 8B, 16B, 32B, ..., 512B

- Capacity = 32KB

Questions

- What is the block size with the highest hit rate?

- What is the block size with the lowest total write-back traffic (transferred in + write-back transferred

out)

- Based on the calculation, if a cache design uses a relatively large block size (say 2KB), which metric

is the design trying to minimize?

- Miss rate

- Traffic

- Based on the calculation, if a cache design uses a relatively small block size (say 8B), which metric is

the design trying to minimize?

- Miss rate

- Traffic

What to Turn In

Source Code. Submit your completed code via CMS under the P5 assignment.

cache.c

cache_direct_mapped.c

cache_stats.c

Answers. You will submit your answers to all of the above questions by completing the P5 Quiz on

Canvas.

Comments and Hints

Test Cases / Debugging. All of the data you plot and discuss should be generated from the

art-full.trace input trace.

Makefile. A Makefile can be your good friend to help you compile your source code more

conveniently. We provided the Makefile for you in this project. If you are interested reading

more about Makefiles, Section F.6 in

this chapter is a good read.

Sanity Test To help you debug your cache simulator, we've provided a sanity test. The way to use

it is to run the sample input command and compare your result with the sample output command.

Comparing your results to these sample outputs should help ensure your code is compiling properly

and satisfies basic functionality. These debug runs are a completely optional

portion of the assignment, but it is one way to make sure your are off to a good start.

Here are the three sample input command:

make

./p5 -t art-full.trace -cache 16 4 2

./p5 -t art-full.trace -cache 8 4 4

./p5 -t art-full.trace -cache 24 2 8

The three sample outputs are as follows:

Expected output for command 1:

-----------------------------------------------------

Trace name art-full.trace

Cache size = 65536B. Each block = 16B.

2-way set associative cache.

Tag = 17 bits, Index = 11 bits, Offset = 4 bits

There are 2048 sets total in the cache.

At this associativity, that means a total of 4096 cache lines.

Instruction Limit none

art-full.trace

Processed 1957764 trace lines.

Num Instructions: 1957764

Cache Hit Rate = 72.69% (Miss Rate: 27.31%)

Total number of bytes transferred write-back: 9516240

Total number of bytes transferred write-thru: 9459396

Expected output for command 2:

-----------------------------------------------------

Trace name art-full.trace

Cache size = 256B. Each block = 16B.

4-way set associative cache.

Tag = 26 bits, Index = 2 bits, Offset = 4 bits

There are 4 sets total in the cache.

At this associativity, that means a total of 16 cache lines.

Instruction Limit none

art-full.trace

Processed 1957764 trace lines.

Num Instructions: 1957764

Cache Hit Rate = 69.18% (Miss Rate: 30.82%)

Total number of bytes transferred write-back: 10615264

Total number of bytes transferred write-thru: 10558244

Expected output for command 3:

-----------------------------------------------------

Trace name art-full.trace

Cache size = 16777216B. Each block = 4B.

8-way set associative cache.

Tag = 11 bits, Index = 19 bits, Offset = 2 bits

There are 524288 sets total in the cache.

At this associativity, that means a total of 4194304 cache lines.

Instruction Limit none

art-full.trace

Processed 1957764 trace lines.

Num Instructions: 1957764

Cache Hit Rate = 88.76% (Miss Rate: 11.24%)

Total number of bytes transferred write-back: 880208

Total number of bytes transferred write-thru: 1783732

You can check your output against the sample output for sanity purpose. To compare your output

with ours systematically, we recommend using the command diff in the terminal.

diff file1 file2 will output contents that are different between file1 and file2.

Acknowledgements. This assignment was originally written by Milo Martin at University of

Pennsylvania and subsequently modified to include the coding infrastructure and an autograder

by Anne Bracy at Washington University in St. Louis. The assignment code base was converted from

Java to C by CS 3410 course staff at Cornell University to meet the needs of the class.

The Makefile reading material used in this project is from

Operating Systems: Three Easy Pieces

authored by Remzi H. Arpaci-Dusseau and Andrea C. Arpaci-Dusseau at University of Wisconsin-Madison.